The University of Wisconsin–Madison

23 Sep 2025

We develop a finite-sample optimal estimator for regression discontinuity designs when the outcomes are bounded, including binary outcomes as the leading case. Our finite-sample optimal estimator achieves the exact minimax mean squared error among linear shrinkage estimators with nonnegative weights when the regression function of a bounded outcome lies in a Lipschitz class. Although the original minimax problem involves an iterating (n+1)-dimensional non-convex optimization problem where n is the sample size, we show that our estimator is obtained by solving a convex optimization problem. A key advantage of our estimator is that the Lipschitz constant is the only tuning parameter. We also propose a uniformly valid inference procedure without a large-sample approximation. In a simulation exercise for small samples, our estimator exhibits smaller mean squared errors and shorter confidence intervals than conventional large-sample techniques which may be unreliable when the effective sample size is small. We apply our method to an empirical multi-cutoff design where the sample size for each cutoff is small. In the application, our method yields informative confidence intervals, in contrast to the leading large-sample approach.

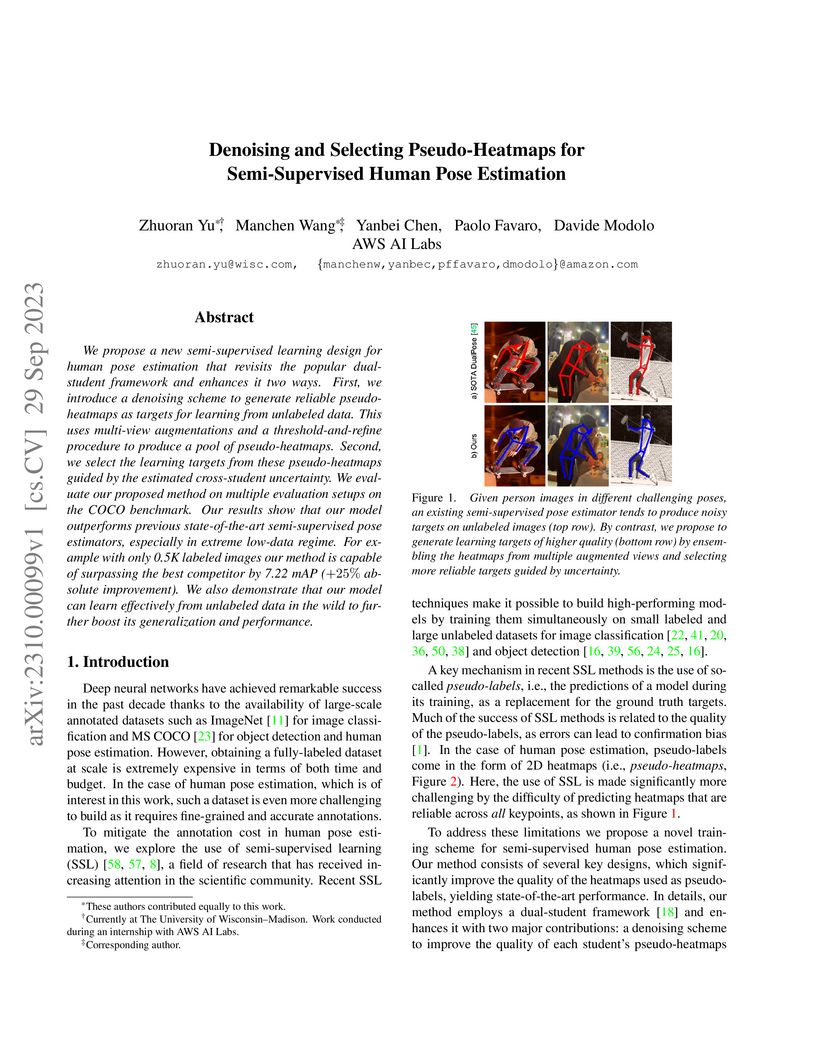

We propose a new semi-supervised learning design for human pose estimation

that revisits the popular dual-student framework and enhances it two ways.

First, we introduce a denoising scheme to generate reliable pseudo-heatmaps as

targets for learning from unlabeled data. This uses multi-view augmentations

and a threshold-and-refine procedure to produce a pool of pseudo-heatmaps.

Second, we select the learning targets from these pseudo-heatmaps guided by the

estimated cross-student uncertainty. We evaluate our proposed method on

multiple evaluation setups on the COCO benchmark. Our results show that our

model outperforms previous state-of-the-art semi-supervised pose estimators,

especially in extreme low-data regime. For example with only 0.5K labeled

images our method is capable of surpassing the best competitor by 7.22 mAP

(+25% absolute improvement). We also demonstrate that our model can learn

effectively from unlabeled data in the wild to further boost its generalization

and performance.

This paper proposes FREEtree, a tree-based method for high dimensional longitudinal data with correlated features. Popular machine learning approaches, like Random Forests, commonly used for variable selection do not perform well when there are correlated features and do not account for data observed over time. FREEtree deals with longitudinal data by using a piecewise random effects model. It also exploits the network structure of the features by first clustering them using weighted correlation network analysis, namely WGCNA. It then conducts a screening step within each cluster of features and a selection step among the surviving features, that provides a relatively unbiased way to select features. By using dominant principle components as regression variables at each leaf and the original features as splitting variables at splitting nodes, FREEtree maintains its interpretability and improves its computational efficiency. The simulation results show that FREEtree outperforms other tree-based methods in terms of prediction accuracy, feature selection accuracy, as well as the ability to recover the underlying structure.

There are no more papers matching your filters at the moment.