atmospheric-and-oceanic-physics

Turbulent flows possess broadband, power-law spectra in which multiscale interactions couple high-wavenumber fluctuations to large-scale dynamics. Although diffusion-based generative models offer a principled probabilistic forecasting framework, we show that standard DDPMs induce a fundamental spectral collapse: a Fourier-space analysis of the forward SDE reveals a closed-form, mode-wise signal-to-noise ratio (SNR) that decays monotonically in wavenumber $|k|$ for spectra $S(k)\propto|k|^{-\lambda}$, rendering high-wavenumber modes indistinguishable from noise and producing an intrinsic spectral bias. We reinterpret the noise schedule as a spectral regularizer and introduce power-law schedules $\beta(\tau)\propto\tau^{\gamma}$ that preserve fine-scale structure deeper into diffusion time, along with Lazy Diffusion, a one-step distillation method that leverages the learned score geometry to bypass long reverse-time trajectories and prevent high-$k$ degradation. Applied to high-Reynolds-number 2D Kolmogorov turbulence and a 1/12° Gulf of Mexico ocean reanalysis, these methods resolve spectral collapse, stabilize long-horizon autoregression, and restore physically realistic inertial-range scaling. Together, they show that naïve Gaussian scheduling is structurally incompatible with power-law physics and that physics-aware diffusion processes can yield accurate, efficient, and fully probabilistic surrogates for multiscale dynamical systems.
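The mode-wise SNR can be made concrete under the standard variance-preserving forward process $x_\tau = \sqrt{\bar\alpha(\tau)}\,x_0 + \sqrt{1-\bar\alpha(\tau)}\,\varepsilon$ with white Gaussian noise: in an orthonormal Fourier basis each mode carries signal power $\bar\alpha S(k)$ and noise power $1-\bar\alpha$, so $\mathrm{SNR}(k,\tau)=\bar\alpha(\tau)S(k)/(1-\bar\alpha(\tau))$. The Python sketch below illustrates this under those assumptions and is not necessarily the paper's exact expression; $\beta_{\max}=20$, $\lambda=3$, and the unit spectral amplitude are arbitrary choices.

```python
import numpy as np


def alpha_bar(tau, beta_max=20.0, gamma=1.0):
    """Signal retention of a VP diffusion with beta(tau) = beta_max * tau**gamma, tau in [0, 1].

    alpha_bar(tau) = exp(-int_0^tau beta(s) ds) = exp(-beta_max * tau**(gamma + 1) / (gamma + 1)).
    gamma = 1 is a linear schedule; larger gamma injects less noise early in diffusion time.
    """
    return np.exp(-beta_max * tau ** (gamma + 1) / (gamma + 1))


def modewise_snr(k, tau, lam=3.0, amp=1.0, **schedule):
    """SNR of Fourier mode k for a power-law spectrum S(k) = amp * |k|**(-lam) plus white noise."""
    ab = alpha_bar(tau, **schedule)
    spectrum = amp * np.abs(k) ** (-lam)
    return ab * spectrum / (1.0 - ab)


def cutoff_wavenumber(tau, lam=3.0, amp=1.0, **schedule):
    """Wavenumber where SNR(k, tau) = 1; modes with larger |k| are noise-dominated."""
    ab = alpha_bar(tau, **schedule)
    return (amp * ab / (1.0 - ab)) ** (1.0 / lam)


# Compare a linear schedule (gamma = 1) with a steeper power law (gamma = 3) at the same tau.
print(cutoff_wavenumber(0.3, gamma=1.0), cutoff_wavenumber(0.3, gamma=3.0))
```

At a fixed diffusion time, the steeper power-law schedule keeps the noise-dominated cutoff at higher $|k|$, which is the sense in which it preserves fine-scale structure deeper into diffusion time.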

Hamiltonian and Lagrangian formulations for the two-dimensional quasi-geostrophic equations linearized about a zonally symmetric basic flow are presented. The Lagrangian and Hamiltonian exhibit an infinite U(1) symmetry due to the absence of wave + wave → wave interactions in the linearized approximation. By Noether's theorem, the symmetry has a corresponding infinite set of conservation laws, which are the well-known pseudomomenta. Separately conserved pseudomomenta exist at each zonal wavenumber, a point that has sometimes been obscured in past treatments.
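The per-wavenumber statement can be made explicit for the simplest case, linearized barotropic QG on a β-plane channel $0 \le y \le L$ with basic flow $U(y)$ and basic-state PV gradient $\bar q_y = \beta - U_{yy}$. The channel geometry ($\hat\psi_m = 0$ on the walls), the absence of forcing and dissipation, the sign convention for the pseudomomentum, and the requirement that $\bar q_y$ not vanish are our assumptions; the paper's setting may be more general.

```latex
\begin{align*}
&(\partial_t + U\partial_x)\,q' + v'\,\bar q_y = 0, \qquad q' = \nabla^2\psi',\quad v' = \partial_x\psi', \\
&q' = \sum_m \hat q_m(y,t)\,e^{imx}
  \;\Longrightarrow\;
  \partial_t\hat q_m + imU\,\hat q_m + im\,\bar q_y\,\hat\psi_m = 0, \\
&\mathcal{P}_m = -\int_0^L \frac{|\hat q_m|^2}{2\,\bar q_y}\,dy, \qquad \frac{d\mathcal{P}_m}{dt} = 0 \quad \text{for every } m.
\end{align*}
```

Because the basic state is independent of $x$, distinct zonal wavenumbers decouple. Multiplying the modal equation by $\hat q_m^*/\bar q_y$ and taking the real part, the advection term drops out (it is purely imaginary), and the remainder integrates to $m\int_0^L \mathrm{Im}(\hat\psi_m^{*\prime\prime}\hat\psi_m)\,dy$, which vanishes after one integration by parts because $\hat\psi_m = 0$ on the walls.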

Reliable regional climate information is essential for assessing the impacts of climate change and for planning in sectors such as renewable energy; yet producing high-resolution projections through coordinated initiatives like CORDEX, which run multiple physical regional climate models, is both computationally demanding and difficult to organize. Machine learning emulators that learn the mapping between global and regional climate fields offer a promising way to address these limitations. Here we apply such an emulator: trained on CMIP5 and CORDEX simulations, it reproduces regional climate model data with sufficient accuracy. When applied to CMIP6 simulations not seen during training, it also produces realistic results, indicating stable performance. Using CORDEX data, CMIP5 and CMIP6 simulations, and regional data generated by two machine learning models, we analyze the co-occurrence of low wind speed and low solar radiation and find indications that the number of such energy drought days is likely to decrease in the future. Our results highlight that downscaling with machine learning emulators provides an efficient complement to efforts such as CORDEX, supplying the higher-resolution information required for impact assessments.
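Counting such compound events reduces to a joint threshold test per day. The sketch below assumes daily-mean wind speed and surface solar radiation series, with thresholds taken as a low quantile of a historical reference period; the 10th-percentile default and the function names are hypothetical, since the abstract does not state the definition used.

```python
import numpy as np


def reference_thresholds(hist_wind, hist_solar, q=0.10):
    """Hypothetical thresholds: the q-quantile of each variable over a historical reference period."""
    return float(np.quantile(hist_wind, q)), float(np.quantile(hist_solar, q))


def count_energy_drought_days(wind, solar, wind_thr, solar_thr):
    """Number of days on which wind speed AND solar radiation both fall below their thresholds."""
    wind = np.asarray(wind)
    solar = np.asarray(solar)
    return int(np.sum((wind < wind_thr) & (solar < solar_thr)))
```

Fixing the thresholds from the historical period and applying them to both historical and future series keeps the two counts directly comparable.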

The atmospheric boundary layer (ABL) plays a critical role in governing turbulent exchanges of momentum, heat, moisture, and trace gases between the Earth's surface and the free atmosphere, thereby influencing meteorological phenomena, air quality, and climate processes. Accurate and temporally continuous characterization of the ABL structure and height evolution is crucial for both scientific understanding and practical applications. High-resolution retrieval of the ABL height from vertical velocity measurements is challenging because the height is often estimated by applying empirical thresholds to profiles of vertical velocity variance or related turbulence diagnostics at each measurement altitude, an approach that can suffer from limited sampling and sensitivity to noise. To address these limitations, this work employs nonstationary Gaussian process (GP) modeling to better capture the spatio-temporal dependence structure in the data, enabling high-quality (and, if desired, high-resolution) estimates of the ABL height without reliance on ad hoc parameter tuning. By leveraging Vecchia approximations, the proposed method can be applied to large datasets, and example applications to full-day vertical velocity profiles comprising approximately 5 million measurements are presented.
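The Vecchia approximation replaces the joint Gaussian likelihood with a product of univariate conditionals, each conditioning on at most m previously ordered neighbors, so that, given the neighbor sets, evaluating the likelihood costs O(n m³) instead of O(n³). The sketch below uses a stationary exponential kernel purely for illustration (the paper's model is nonstationary) and a brute-force neighbor search; the kernel, the ordering, and the parameters `m`, `ell`, `sigma2`, and `nugget` are our assumptions.

```python
import numpy as np


def exp_kernel(x1, x2, sigma2=1.0, ell=1.0):
    """Stationary exponential covariance between location sets x1 (n, d) and x2 (m, d)."""
    d = np.linalg.norm(x1[:, None, :] - x2[None, :, :], axis=-1)
    return sigma2 * np.exp(-d / ell)


def vecchia_loglik(X, y, m=10, nugget=1e-6, **kern):
    """Vecchia log-likelihood of zero-mean data y at ordered locations X (m >= 1).

    Each y[i] is conditioned on (at most) its m nearest neighbors among y[0..i-1].
    With m >= n - 1 this reduces to the exact Gaussian log-likelihood.
    """
    n = len(y)
    ll = 0.0
    for i in range(n):
        xi = X[i:i + 1]
        kii = exp_kernel(xi, xi, **kern)[0, 0] + nugget
        if i == 0:
            mu, var = 0.0, kii
        else:
            # brute-force neighbor search; a spatial index would be used at scale
            nbrs = np.argsort(np.linalg.norm(X[:i] - X[i], axis=1))[:m]
            Xn = X[nbrs]
            Knn = exp_kernel(Xn, Xn, **kern) + nugget * np.eye(len(nbrs))
            kin = exp_kernel(xi, Xn, **kern)[0]
            w = np.linalg.solve(Knn, kin)  # kriging weights
            mu = w @ y[nbrs]               # conditional mean
            var = kii - w @ kin            # conditional variance
        ll += -0.5 * (np.log(2.0 * np.pi * var) + (y[i] - mu) ** 2 / var)
    return float(ll)
```

Conditioning on all previous points recovers the exact likelihood by the chain rule; shrinking `m` trades accuracy for cost, which is what makes millions of measurements tractable.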

Accurate nowcasting of convective storms remains a major challenge for operational forecasting, particularly for convective initiation and the evolution of high-impact rainfall and strong winds. Here we present FuXi-Nowcast, a deep-learning system that jointly predicts composite radar reflectivity, surface precipitation, near-surface temperature, wind speed and wind gusts at 1-km resolution over eastern China. FuXi-Nowcast integrates multi-source observations, such as radar, surface stations and the High-Resolution Land Data Assimilation System (HRLDAS), with three-dimensional atmospheric fields from the machine-learning weather model FuXi-2.0 within a multi-task Swin-Transformer architecture. A convective signal enhancement module and distribution-aware hybrid loss functions are designed to preserve intense convective structures and mitigate the rapid intensity decay common in deep-learning nowcasts. FuXi-Nowcast surpasses the operational CMA-MESO 3-km numerical model in Critical Success Index for reflectivity, precipitation and wind gusts across thresholds and lead times up to 12 h, with the largest gains for heavy rainfall. Case studies further show that FuXi-Nowcast more accurately captures the timing, location and structure of convective initiation and the subsequent evolution of convection. These results demonstrate that coupling three-dimensional machine-learning forecasts with high-resolution observations can provide multi-hazard, long-lead nowcasts that outperform current operational systems.
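The Critical Success Index used for verification is CSI = hits / (hits + misses + false alarms) for events exceeding a threshold. A minimal sketch, assuming forecast and observed fields on the same grid and counting an event where a value is at or above the threshold (conventions for the inequality vary):

```python
import numpy as np


def critical_success_index(forecast, observed, threshold):
    """CSI = hits / (hits + misses + false alarms); NaN if no event is forecast or observed."""
    f = np.asarray(forecast) >= threshold
    o = np.asarray(observed) >= threshold
    hits = np.sum(f & o)
    misses = np.sum(~f & o)
    false_alarms = np.sum(f & ~o)
    denom = hits + misses + false_alarms
    return float(hits / denom) if denom > 0 else float("nan")
```

Correct negatives do not enter the score, which is why CSI is favored for rare, high-impact events such as heavy rainfall.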

A fundamental challenge in numerical weather prediction is to produce high-resolution forecasts efficiently. A common solution is to apply downscaling methods, either dynamical or statistical, to the outputs of global models. This work focuses on statistical downscaling, which uses statistical models to establish relationships between low-resolution and high-resolution historical data. Deep learning has emerged as a powerful tool for this task, giving rise to high-performance super-resolution models, such as diffusion models and Generative Adversarial Networks, that can be applied directly to downscaling. This work builds on a diffusion-based downscaling framework named CorrDiff. Compared with the original CorrDiff work, the region considered here is nearly 20 times larger, and in addition to the surface variables used in the original work, we also target upper-level variables on six pressure levels. We further add a global residual connection to improve accuracy. To generate 3 km forecasts for the China region, we apply our trained models to the 25 km global grid forecasts of CMA-GFS, an operational global model of the China Meteorological Administration (CMA), and of SFF, a data-driven deep learning-based weather model built on Spherical Fourier Neural Operators (SFNO). CMA-MESO, a high-resolution regional model, serves as the baseline. The experimental results show that the forecasts downscaled by our method generally outperform the direct forecasts of CMA-MESO in terms of MAE for the target variables. Our forecasts of radar composite reflectivity show that CorrDiff, as a generative model, can generate fine-scale details that yield more realistic predictions than the corresponding deterministic regression models.
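CorrDiff splits the high-resolution target into a deterministic regression mean and a stochastic residual generated by a diffusion model. The two-stage structure can be sketched as below; `regression_net` and `residual_sampler` are hypothetical stand-ins for trained networks, nearest-neighbor upsampling by an integer factor stands in for regridding, and the sketch does not attempt to reproduce the specific global residual connection added in this work.

```python
import numpy as np


def upsample_nearest(coarse, factor):
    """Repeat each coarse cell factor x factor times (stand-in for proper regridding)."""
    return np.repeat(np.repeat(coarse, factor, axis=-2), factor, axis=-1)


def corrdiff_style_sample(coarse, regression_net, residual_sampler, rng):
    """Two-stage downscaling: deterministic mean plus a generated stochastic residual."""
    mean = regression_net(coarse)                         # stage 1: conditional mean
    residual = residual_sampler(coarse, mean.shape, rng)  # stage 2: sample of (target - mean)
    return mean + residual


# Toy usage with stand-in models: upsampled input as the "mean", small Gaussian noise as the "residual".
rng = np.random.default_rng(0)
coarse = rng.normal(size=(2, 4, 4))  # (variables, lat, lon)
regression_net = lambda x: upsample_nearest(x, 8)
residual_sampler = lambda x, shape, g: 0.1 * g.standard_normal(shape)
ensemble = np.stack([corrdiff_style_sample(coarse, regression_net, residual_sampler, rng) for _ in range(4)])
```

Drawing several samples gives an ensemble whose spread comes entirely from the generative residual, while the regression stage carries the predictable large-scale signal.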

This study distinguishes and quantifies aleatoric and epistemic uncertainties in weather and climate models using a Bayesian Neural Network within a simplified chaotic system. It provides a unified framework for uncertainty analysis, demonstrating how each uncertainty type impacts predictions across different timescales and showing improved model calibration and generalization through their joint consideration.
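A common way to separate the two uncertainty types is the law of total variance over posterior samples: if each of S posterior draws of the network outputs a predictive mean and variance, the aleatoric part is the average predicted variance and the epistemic part is the variance of the predicted means. That this study uses exactly this decomposition is an assumption; the sketch only illustrates the idea.

```python
import numpy as np


def decompose_predictive_variance(means, variances):
    """Law-of-total-variance split over S posterior samples (axis 0).

    means, variances: arrays of shape (S, ...) holding each sample's predicted mean and variance.
    Returns (aleatoric, epistemic, total) with total = aleatoric + epistemic.
    """
    means = np.asarray(means, dtype=float)
    variances = np.asarray(variances, dtype=float)
    aleatoric = variances.mean(axis=0)  # expected data noise
    epistemic = means.var(axis=0)       # disagreement between posterior samples
    return aleatoric, epistemic, aleatoric + epistemic
```

Tracking the two terms separately as lead time grows shows which one dominates at each timescale.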

Reduced-order models (ROMs) can efficiently simulate high-dimensional physical systems, but lack robust uncertainty quantification methods. Existing approaches are frequently architecture- or training-specific, which limits flexibility and generalization. We introduce a post hoc, model-agnostic framework for predictive uncertainty quantification in latent space ROMs that requires no modification to the underlying architecture or training procedure. Using conformal prediction, our approach estimates statistical prediction intervals for multiple components of the ROM pipeline: latent dynamics, reconstruction, and end-to-end predictions. We demonstrate the method on a latent space dynamical model for cloud microphysics, where it accurately predicts the evolution of droplet-size distributions and quantifies uncertainty across the ROM pipeline.
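Split conformal prediction needs only a held-out calibration set: compute absolute residuals, take the ⌈(n+1)(1−α)⌉-th smallest as the half-width, and report prediction ± half-width, which gives at least 1−α marginal coverage under exchangeability. A sketch for one scalar output, assuming absolute-residual nonconformity scores (the paper's score function, and how it handles latent, reconstruction, and end-to-end outputs, may differ):

```python
import math

import numpy as np


def split_conformal_halfwidth(cal_pred, cal_true, alpha=0.1):
    """Half-width q such that [pred - q, pred + q] covers new targets with prob. >= 1 - alpha."""
    scores = np.sort(np.abs(np.asarray(cal_true, dtype=float) - np.asarray(cal_pred, dtype=float)))
    n = scores.size
    k = math.ceil((n + 1) * (1 - alpha))  # rank of the conformal quantile (1-based)
    if k > n:
        return math.inf  # too few calibration points for this alpha
    return float(scores[k - 1])
```

Because the method only needs predictions and targets, the same routine can be applied unchanged to each stage of a ROM pipeline, which is what makes the approach post hoc and model-agnostic.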

It has been suggested that the upwelling branch of the abyssal overturning circulation is characterized by strong flows driven by turbulence along sloping topography. The Boundary Layer Turbulence field campaign has provided direct evidence for strong upslope flows along a deep submarine canyon of the Rockall Trough. Turbulent overturning events spanning 200 m in the vertical were observed every tidal cycle, suggesting that the strong tidal flows in the canyon periodically undergo some form of instability. However, it is shown that the flow never satisfied the classical instability condition for time-independent sheared flows in a stratified fluid commonly used in oceanographic studies to determine transition to turbulence. This study illustrates that the time dependence of the tidal flow changes the stability properties and explains the observed transition to a turbulent state. The findings suggest that turbulent mixing induced by oscillatory shear flow may be ubiquitous over sloping topography and play an important role in deep ocean mixing that supports the global overturning circulation.
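A common form of the classical criterion is the Miles-Howard condition, under which a steady, parallel, stratified shear flow can become unstable only if the gradient Richardson number Ri = N²/S² falls below 1/4 somewhere; that this is the criterion meant here is our assumption. Ri can be estimated from vertical profiles of buoyancy b (so N² = ∂b/∂z) and horizontal velocity (u, v), for example:

```python
import numpy as np


def gradient_richardson(z, u, v, b):
    """Gradient Richardson number Ri = N^2 / S^2 from vertical profiles.

    N^2 = db/dz (b is buoyancy) and S^2 = (du/dz)^2 + (dv/dz)^2, via centered differences.
    Undefined where the shear vanishes.
    """
    n2 = np.gradient(b, z)
    s2 = np.gradient(u, z) ** 2 + np.gradient(v, z) ** 2
    return n2 / s2


def may_be_unstable(z, u, v, b, ri_crit=0.25):
    """Miles-Howard necessary condition for steady flows: instability requires Ri < 1/4 somewhere."""
    return bool(np.any(gradient_richardson(z, u, v, b) < ri_crit))
```

Applied at each instant of a tidal cycle, this steady-flow test can report "stable" throughout, which is the situation the study argues the time dependence of the tide overrides.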

Google DeepMind's FGN (Functional Generative Networks) is a probabilistic weather forecasting model that generates ensemble predictions by perturbing neural network parameters. FGN achieves better accuracy and efficiency than previous machine learning models such as GenCast, and it captures complex joint spatial relationships despite being trained only on marginals.
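Training on marginals means the loss scores each variable at each grid point separately. To our understanding FGN uses the continuous ranked probability score (CRPS) for this; treat that as an assumption. The standard ensemble estimator CRPS = E|X - y| - ½E|X - X'| can be sketched as:

```python
import numpy as np


def ensemble_crps(members, obs):
    """Ensemble CRPS per point: mean|x_i - y| - 0.5 * mean_{i,j}|x_i - x_j|.

    members: array (M, ...) of ensemble members; obs: array broadcastable to members[0].
    Uses the standard (not the "fair") estimator, which averages over all M*M pairs.
    """
    members = np.asarray(members, dtype=float)
    skill = np.mean(np.abs(members - obs), axis=0)
    spread = np.mean(np.abs(members[:, None] - members[None, :]), axis=(0, 1))
    return skill - 0.5 * spread
```

A marginal training loss would average this over grid points and variables; nothing in it directly rewards correct spatial correlations, which is why their emergence in FGN is notable.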

Accurate, high-resolution ocean forecasting is crucial for maritime operations and environmental monitoring. While traditional numerical models are capable of producing sub-daily, eddy-resolving forecasts, they are computationally intensive and face challenges in maintaining accuracy at fine spatial and temporal scales. In contrast, recent data-driven approaches offer improved computational efficiency and growing potential, yet typically operate at daily resolution and struggle with sub-daily predictions due to error accumulation over time. We introduce FuXi-Ocean, the first data-driven global ocean forecasting model achieving six-hourly predictions at eddy-resolving 1/12° spatial resolution, reaching depths of up to 1500 meters. The model architecture integrates a context-aware feature extraction module with a predictive network employing stacked attention blocks. The core innovation is the Mixture-of-Time (MoT) module, which adaptively integrates predictions from multiple temporal contexts by learning variable-specific reliability, mitigating cumulative errors in sequential forecasting. Through comprehensive experimental evaluation, FuXi-Ocean demonstrates superior skill in predicting key variables, including temperature, salinity, and currents, across multiple depths.
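One reading of "adaptively integrates predictions from multiple temporal contexts by learning variable-specific reliability" is a per-variable softmax weighting over candidate predictions. The sketch below is a hypothetical illustration of that reading, not FuXi-Ocean's actual module; the array shapes and the softmax parameterization are assumptions.

```python
import numpy as np


def softmax(x, axis):
    """Numerically stable softmax along one axis."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def mixture_of_time(preds, logits):
    """Blend T candidate predictions with learned, variable-specific weights.

    preds:  (T, V, H, W) predictions from T temporal contexts for V variables.
    logits: (T, V) reliability scores (learned parameters in a real model).
    """
    w = softmax(logits, axis=0)  # for each variable, weights over contexts sum to 1
    return np.einsum("tv,tvhw->vhw", w, preds)
```

Because the weights are normalized per variable, a variable whose short-context predictions drift quickly can lean on other contexts without affecting how the remaining variables are blended.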

Atmospheric predictability research has long held that the limit of skillful deterministic weather forecasts is about 14 days. We challenge this limit using GraphCast, a machine-learning weather model, by optimizing forecast initial conditions with gradient-based techniques for twice-daily forecasts spanning 2020. This approach yields an average error reduction of 86% at 10 days, with skill lasting beyond 30 days. Mean optimal initial-condition perturbations reveal large-scale, spatially coherent corrections to ERA5, primarily reflecting an intensification of the Hadley circulation. Forecasts using GraphCast-optimal initial conditions in the Pangu-Weather model achieve a 21% error reduction, peaking at 4 days, indicating that the analysis corrections reflect a combination of model bias and a reduction in analysis error. These results demonstrate that, given accurate initial conditions, skillful deterministic forecasts are consistently achievable far beyond two weeks, challenging long-standing assumptions about the limits of atmospheric predictability.
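Gradient-based initial-condition optimization treats the forecast model as a differentiable map and descends on the forecast error with respect to the initial state. The sketch below replaces GraphCast with a toy linear model x_{t+1} = A x_t so that the adjoint gradient can be written by hand (with a neural network one would use automatic differentiation); the matrix, targets, step size, and horizon are hypothetical.

```python
import numpy as np


def forecast(A, x0, steps):
    """Roll out the toy linear model x_{t+1} = A x_t and return states x_1..x_steps."""
    states, x = [], x0
    for _ in range(steps):
        x = A @ x
        states.append(x)
    return np.array(states)


def loss_and_grad(A, x0, targets):
    """Sum of squared forecast errors and its gradient with respect to the initial condition."""
    states = forecast(A, x0, len(targets))
    loss, grad, At = 0.0, np.zeros_like(x0), np.eye(len(x0))
    for state, target in zip(states, targets):
        At = A @ At                 # A^t, the propagator from t = 0 to this lead time
        err = state - target
        loss += float(err @ err)
        grad += 2.0 * At.T @ err    # adjoint: pull the error back to t = 0
    return loss, grad


def optimize_initial_condition(A, x0, targets, lr=0.05, iters=200):
    """Plain gradient descent on the initial state."""
    x = x0.astype(float).copy()
    for _ in range(iters):
        _, g = loss_and_grad(A, x, targets)
        x -= lr * g
    return x


# Toy usage: recover a perturbed initial state from five verifying "analyses".
theta = 0.3
A = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
x_true = np.array([1.0, 0.5])
targets = forecast(A, x_true, 5)
x0 = x_true + np.array([0.3, -0.2])
x_opt = optimize_initial_condition(A, x0, targets)
```

The difference x_opt - x0 plays the role of the "optimal initial-condition perturbation"; averaging such perturbations over many forecasts is what reveals systematic corrections to the analysis.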

Nanjing University; Peking University; National University of Defense Technology; Technical University of Munich; Potsdam Institute for Climate Impact Research; Chongqing Research Institute of Big Data; Chinese Academy of Meteorological Sciences; Institute of Atmospheric Physics, Chinese Academy of Sciences; National Climate Center; Xiong’an Institute of Meteorological Artificial Intelligence

The Generative Assimilation and Prediction (GAP) framework unifies data assimilation, weather forecasting, and climate simulation through a coherent probabilistic approach. It demonstrates competitive performance across timescales from short-term weather to millennium-scale climate stability, operating with fewer observations than traditional systems and providing calibrated uncertainty estimates.

Conditional diffusion and flow models have proven effective for super-resolving small-scale details in natural images. However, in physical sciences such as weather, super-resolving small-scale details poses significant challenges due to: (i) misalignment between input and output distributions (i.e., solutions to distinct partial differential equations (PDEs) follow different trajectories), (ii) multiscale dynamics, with deterministic behavior at large scales and stochastic behavior at small scales, and (iii) limited data, which increases the risk of overfitting. To address these challenges, we propose encoding the inputs into a latent base distribution that is closer to the target distribution, followed by flow matching to generate small-scale physics. The encoder captures the deterministic components, while flow matching adds stochastic small-scale details. To account for uncertainty in the deterministic part, we inject noise into the encoder output using an adaptive noise scaling mechanism, which is dynamically adjusted based on maximum-likelihood estimates of the encoder predictions. We conduct extensive experiments on both the real-world CWA weather dataset and the PDE-based Kolmogorov dataset, with the CWA task involving super-resolving the weather variables for the region of Taiwan from 25 km to 2 km scales. Our results show that the proposed stochastic flow matching (SFM) framework significantly outperforms existing methods such as conditional diffusion and flows.
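The training pairs for this scheme can be sketched as follows: the base sample is the encoder prediction plus Gaussian noise whose scale is the maximum-likelihood estimate of the encoder's residual standard deviation, and flow matching regresses a velocity along the straight path from that base sample to the high-resolution target. The linear interpolation path, the single global noise scale, and the function names are our assumptions; the paper's adaptive scaling may be per-variable or per-pixel.

```python
import numpy as np


def mle_noise_scale(enc_pred, target):
    """MLE of the std of a zero-mean Gaussian residual (target - enc_pred)."""
    return float(np.sqrt(np.mean((np.asarray(target) - np.asarray(enc_pred)) ** 2)))


def flow_matching_pair(enc_pred, target, sigma, t, rng):
    """Build one flow-matching training input and regression target at time t in [0, 1]."""
    x0 = enc_pred + sigma * rng.standard_normal(np.shape(enc_pred))  # noisy latent base sample
    xt = (1.0 - t) * x0 + t * target                                 # point on the straight path
    v_target = target - x0                                           # velocity the network should predict
    return xt, v_target
```

At inference, one would draw a base sample from the encoder output plus noise of scale sigma and integrate dx/dt = v(x, t) from t = 0 to 1, so the flow only needs to add the stochastic small-scale part.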

Microsoft Research's Aurora introduces a 1.3 billion parameter foundation model for the Earth system, pre-trained on diverse global data. This model outperforms existing operational forecasting systems across multiple domains, including air quality, ocean wave dynamics, tropical cyclone tracks, and high-resolution global weather at 0.1° resolution, while achieving substantial improvements in computational efficiency and reducing development timelines from years to weeks.

WeatherBench 2 is an open-source benchmark for data-driven global weather models, providing a standardized framework for rigorous evaluation against operational meteorological standards. It includes a comprehensive suite of deterministic and probabilistic metrics, demonstrating that advanced AI models achieve deterministic skill comparable to traditional physical models for several days while highlighting challenges in forecast realism and probabilistic skill.
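Deterministic scores in WeatherBench-style evaluation weight each grid cell by the cosine of its latitude so that the converging meridians near the poles are not over-counted. A minimal sketch of a latitude-weighted RMSE on a regular latitude-longitude grid, normalizing the weights to mean one (one common convention; the benchmark's exact implementation and time-averaging may differ):

```python
import numpy as np


def lat_weighted_rmse(forecast, truth, lats_deg):
    """RMSE over a (lat, lon) field with cos(latitude) area weights normalized to mean 1."""
    w = np.cos(np.deg2rad(np.asarray(lats_deg, dtype=float)))
    w = w / w.mean()
    sq_err = (np.asarray(forecast, dtype=float) - np.asarray(truth, dtype=float)) ** 2
    return float(np.sqrt(np.mean(w[:, None] * sq_err)))
```

With mean-one weights, a spatially uniform error of size e gives an RMSE of exactly e, while errors concentrated near the poles count for less than the same errors in the tropics.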

Weather and climate simulations produce petabytes of high-resolution data that researchers later analyze to understand climate change or severe weather. We propose a new method for compressing such multidimensional weather and climate data: a coordinate-based neural network is trained to overfit the data, and the resulting parameters serve as a compact representation of the original grid-based data. At compression ratios ranging from 300x to more than 3,000x, our method outperforms the state-of-the-art compressor SZ3 in terms of weighted RMSE and MAE. It faithfully preserves important large-scale atmospheric structures and does not introduce artifacts. When the resulting neural network is used as a 790x-compressed dataloader to train the WeatherBench forecasting model, its RMSE increases by less than 2%. This three-orders-of-magnitude compression democratizes access to high-resolution climate data and enables numerous new research directions.
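The compression ratio is the size of the gridded data divided by the size of the stored network parameters. A sketch for a fully connected coordinate network, assuming data and parameters are both stored as 32-bit floats and ignoring any architecture details beyond the layer widths; the widths and grid shape are hypothetical, not the paper's configuration.

```python
import math


def mlp_param_count(layer_sizes):
    """Weights plus biases of a fully connected network with the given layer widths."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))


def compression_ratio(grid_shape, layer_sizes, grid_bytes=4, param_bytes=4):
    """Bytes of the gridded field divided by bytes of the network parameters."""
    return (math.prod(grid_shape) * grid_bytes) / (mlp_param_count(layer_sizes) * param_bytes)


# Hypothetical example: 24 time steps x 13 levels on a 0.25-degree global grid (721 x 1440),
# with a network mapping (time, level, lat, lon) coordinates to one value.
ratio = compression_ratio((24, 13, 721, 1440), [4, 512, 512, 512, 1])
```

For these hypothetical sizes the ratio is about 613x; because the parameter count is fixed while the grid grows, larger or longer datasets compressed by the same network reach higher ratios.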

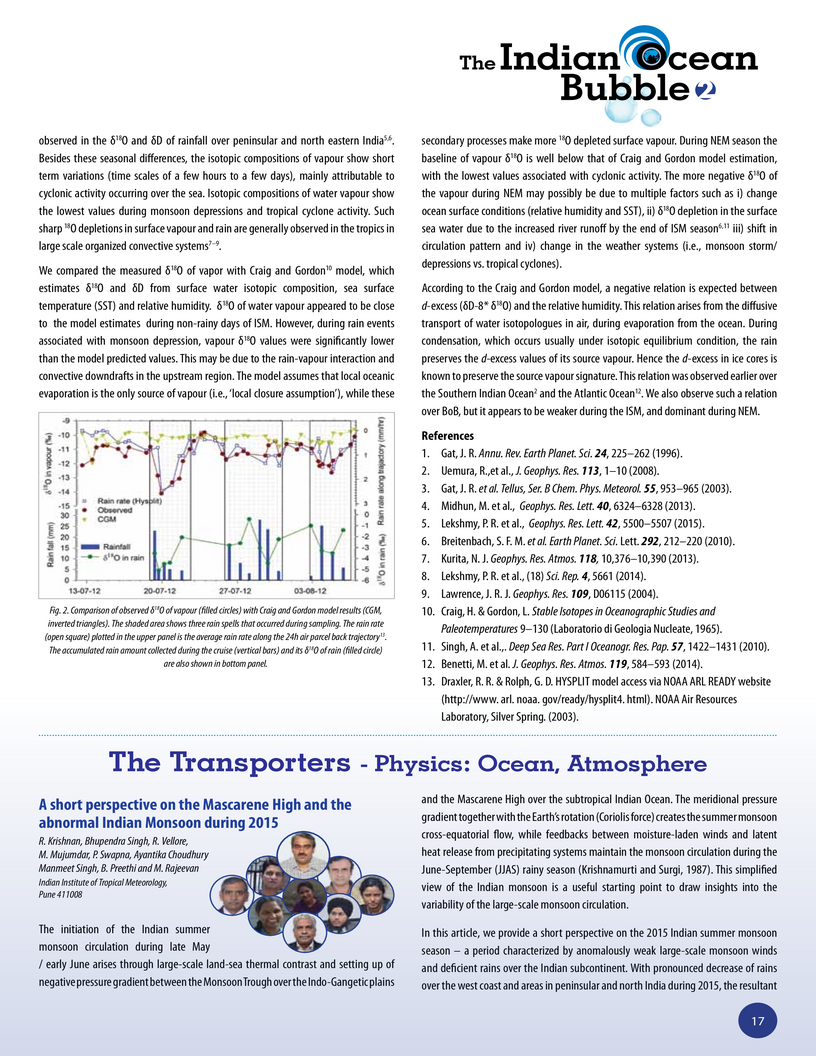

The initiation of the Indian summer monsoon circulation during late May/early June arises from the large-scale land-sea thermal contrast and the establishment of a negative meridional pressure gradient between the Monsoon Trough over the Indo-Gangetic plains and the Mascarene High over the subtropical Indian Ocean. The meridional pressure gradient, together with the Earth's rotation (Coriolis force), creates the summer monsoon cross-equatorial flow, while feedbacks between moisture-laden winds and latent heat release from precipitating systems maintain the monsoon circulation during the June-September (JJAS) rainy season (Krishnamurti and Surgi, 1987). This simplified view of the Indian monsoon is a useful starting point for drawing insights into the variability of the large-scale monsoon circulation.

Estimating historical evapotranspiration (ET) is essential for understanding the effects of climate change and human activities on the water cycle. This study used historical weather station data to reconstruct ET trends over the past 300 years with machine learning. A Random Forest model, trained on FLUXNET2015 flux stations' monthly data using precipitation, temperature, aridity index, and rooting depth as predictors, achieved an R² of 0.66 and a KGE of 0.76 through 10-fold cross-validation. Applied to 5,267 weather stations, the model produced monthly ET data showing a general increase in global ET from 1700 to the present, with a notable acceleration after 1900 due to warming. Regional differences were observed, with larger ET increases in the mid-to-high latitudes of the Northern Hemisphere and decreases in some mid-to-low latitudes and the Southern Hemisphere. In drylands, ET and temperature were weakly correlated, while in humid areas the correlation was much higher. The correlation between ET and precipitation has remained stable over the centuries. This study extends the ET data time span, providing valuable insights into long-term historical ET trends and their drivers, aiding in reassessing the impact of historical climate change and human activities on the water cycle, and supporting future climate adaptation strategies.
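The Kling-Gupta efficiency quoted above combines correlation, variability, and bias into one score. The sketch uses the original 2009 formulation; a later variant replaces the standard-deviation ratio with a ratio of coefficients of variation, and which one the study used is not stated.

```python
import numpy as np


def kling_gupta_efficiency(sim, obs):
    """KGE = 1 - sqrt((r - 1)^2 + (alpha - 1)^2 + (beta - 1)^2).

    r: Pearson correlation, alpha: ratio of standard deviations (sim/obs),
    beta: ratio of means (sim/obs). KGE = 1 is a perfect match.
    """
    sim = np.asarray(sim, dtype=float)
    obs = np.asarray(obs, dtype=float)
    r = np.corrcoef(sim, obs)[0, 1]
    alpha = sim.std() / obs.std()
    beta = sim.mean() / obs.mean()
    return float(1.0 - np.sqrt((r - 1.0) ** 2 + (alpha - 1.0) ** 2 + (beta - 1.0) ** 2))
```

Unlike R², KGE penalizes a simulation that is perfectly correlated with observations but biased or over-dispersed, so a model can score differently on the two metrics.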

Coupled climate model simulations designed to isolate the effects of Arctic sea-ice loss often apply artificial heating, either directly to the ice or through modification of the surface albedo, to constrain sea-ice in the absence of other forcings. Recent work has shown that this approach may lead to an overestimation of the climate response to sea-ice loss. In this study, we assess the spurious impacts of ice-constraining methods on the climate of an idealised aquaplanet general circulation model (GCM) with thermodynamic sea-ice. The true effect of sea-ice loss in this model is isolated by inducing ice loss through a reduction of the freezing point of water, which does not require additional energy input. We compare results from freezing-point modification experiments with experiments in which sea-ice loss is induced using traditional ice-constraining methods, and confirm the result of previous work that traditional methods induce spurious additional warming. Furthermore, this additional warming leads to an overestimation of the circulation response to sea-ice loss, which involves a weakening of the zonal wind and storm track activity in midlatitudes. Our results suggest that coupled model simulations with constrained sea-ice should be treated with caution, especially in boreal summer, when the true effect of sea-ice loss is weakest but we find the largest spurious response. Given that our results may be sensitive to the simplicity of the model we use, we suggest that devising methods to quantify the spurious effects of ice-constraining methods in more sophisticated models should be an urgent priority for future work.
