bayesian-deep-learning

Neural Network Field Theories (NN-FTs) represent a novel construction of arbitrary field theories, including those of conformal fields, through the specification of the network architecture and prior distribution for the network parameters. In this work, we present a formalism for the construction of conformally invariant defects in these NN-FTs. We demonstrate this new formalism in two toy models of NN scalar field theories. We develop an NN interpretation of an expansion akin to the defect OPE in two-point correlation functions in these models.

This paper proposes a novel diffusion-based posterior sampling method within a plug-and-play (PnP) framework. Our approach constructs a probability transport from an easy-to-sample terminal distribution to the target posterior, using a warm-start strategy to initialize the particles. To approximate the posterior score, we develop a Monte Carlo estimator in which particles are generated using Langevin dynamics, avoiding the heuristic approximations commonly used in prior work. The score governing the Langevin dynamics is learned from data, enabling the model to capture rich structural features of the underlying prior distribution. On the theoretical side, we provide non-asymptotic error bounds, showing that the method converges even for complex, multi-modal target posterior distributions. These bounds explicitly quantify the errors arising from posterior score estimation, the warm-start initialization, and the posterior sampling procedure. Our analysis further clarifies how the prior score-matching error and the condition number of the Bayesian inverse problem influence overall performance. Finally, we present numerical experiments demonstrating the effectiveness of the proposed method across a range of inverse problems.
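As a rough illustration of the particle-generation step mentioned above, the following minimal sketch runs unadjusted Langevin dynamics on a one-dimensional Gaussian target. It is not the paper's method: the analytic `gaussian_score` stands in for a learned score, and the function names, step size, and particle counts are assumptions chosen for the example.

```python
import math
import random


def gaussian_score(mean, std):
    """Score (gradient of the log-density) of a 1-D Gaussian.

    Assumption: this analytic score replaces the learned score network.
    """
    return lambda x: -(x - mean) / (std ** 2)


def langevin_particle(score, x0, step, n_steps, rng):
    """Unadjusted Langevin update: x <- x + step * score(x) + sqrt(2 * step) * noise."""
    x = x0
    for _ in range(n_steps):
        x = x + step * score(x) + math.sqrt(2.0 * step) * rng.gauss(0.0, 1.0)
    return x


rng = random.Random(0)
score = gaussian_score(mean=2.0, std=0.5)

# Run independent particles from the same starting point.
particles = [
    langevin_particle(score, x0=0.0, step=0.01, n_steps=300, rng=rng)
    for _ in range(1000)
]

sample_mean = sum(particles) / len(particles)
sample_var = sum((p - sample_mean) ** 2 for p in particles) / len(particles)
print(f"mean ~ {sample_mean:.3f}, variance ~ {sample_var:.3f}")
```

With a small step size the particle mean and variance should land close to the target's 2.0 and 0.25; the discretization introduces a small bias in the variance, which is one reason step size matters in practice.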

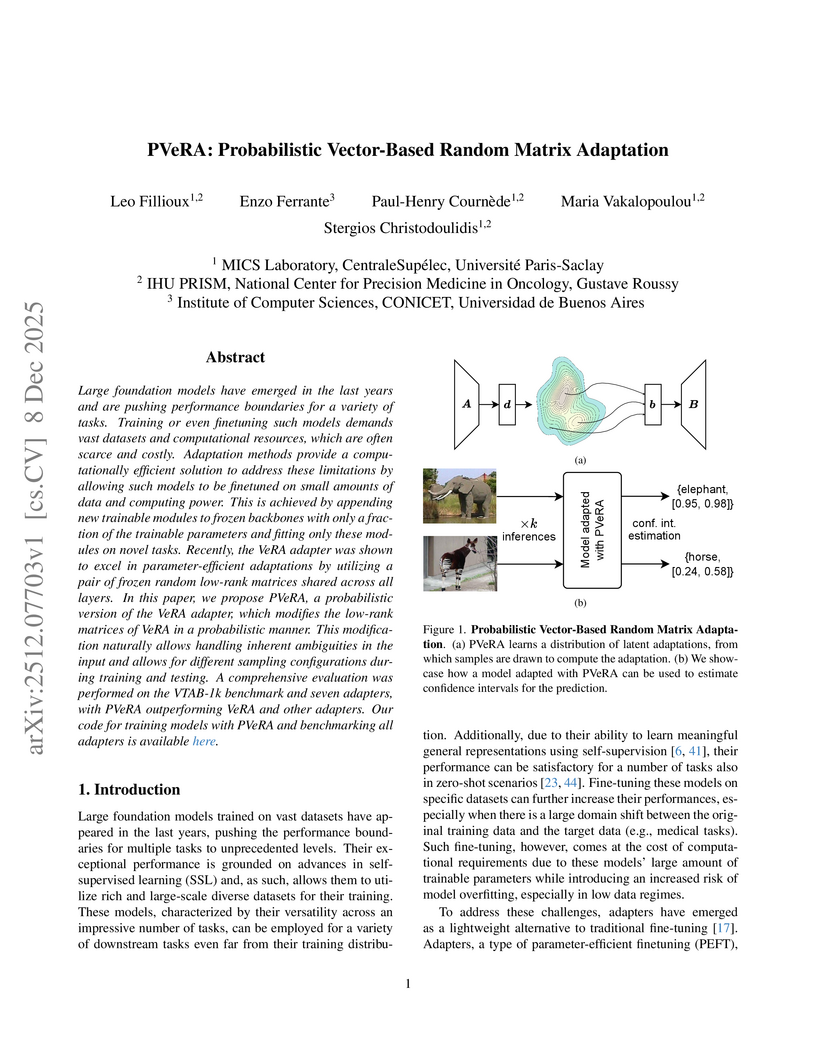

Large foundation models have emerged in recent years and are pushing performance boundaries for a variety of tasks. Training or even finetuning such models demands vast datasets and computational resources, which are often scarce and costly. Adaptation methods provide a computationally efficient solution to address these limitations by allowing such models to be finetuned on small amounts of data and computing power. This is achieved by appending new trainable modules to frozen backbones with only a fraction of the trainable parameters and fitting only these modules on novel tasks. Recently, the VeRA adapter was shown to excel in parameter-efficient adaptations by utilizing a pair of frozen random low-rank matrices shared across all layers. In this paper, we propose PVeRA, a probabilistic version of the VeRA adapter, which modifies the low-rank matrices of VeRA in a probabilistic manner. This modification naturally allows handling inherent ambiguities in the input and allows for different sampling configurations during training and testing. A comprehensive evaluation was performed on the VTAB-1k benchmark and seven adapters, with PVeRA outperforming VeRA and other adapters. Our code for training models with PVeRA and benchmarking all adapters is available at this https URL.

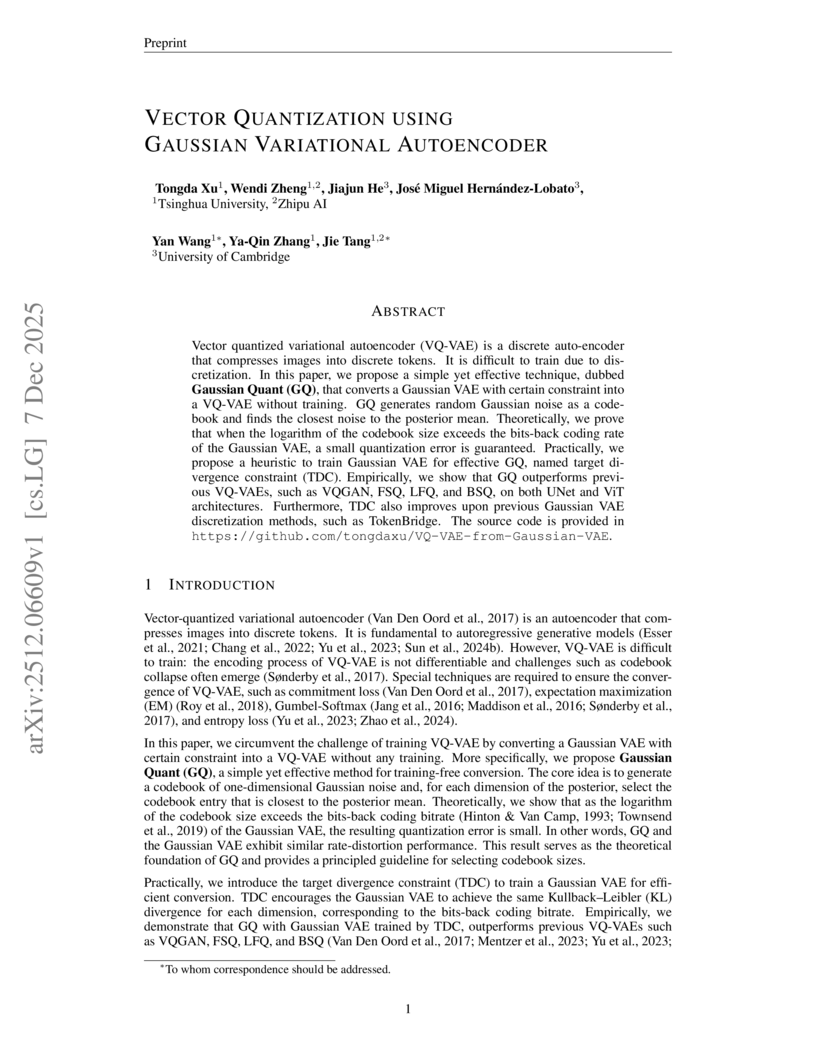

Gaussian Quant (GQ), developed by researchers at Tsinghua University, Zhipu AI, and the University of Cambridge, presents a training-free method to transform pre-trained Gaussian Variational Autoencoders into discrete tokenizers. This approach consistently outperforms existing VQ-VAE variants in image reconstruction across various architectures and bitrates while maintaining high codebook utilization for efficient autoregressive generation.

We present latent nonlinear denoising score matching (LNDSM), a novel training objective for score-based generative models that integrates nonlinear forward dynamics with the VAE-based latent SGM framework. This combination is achieved by reformulating the cross-entropy term using the approximate Gaussian transition induced by the Euler-Maruyama scheme. To ensure numerical stability, we identify and remove two zero-mean but variance-exploding terms arising from small time steps. Experiments on variants of the MNIST dataset demonstrate that the proposed method achieves faster synthesis and enhanced learning of inherently structured distributions. Compared to benchmark structure-agnostic latent SGMs, LNDSM consistently attains superior sample quality and variability.

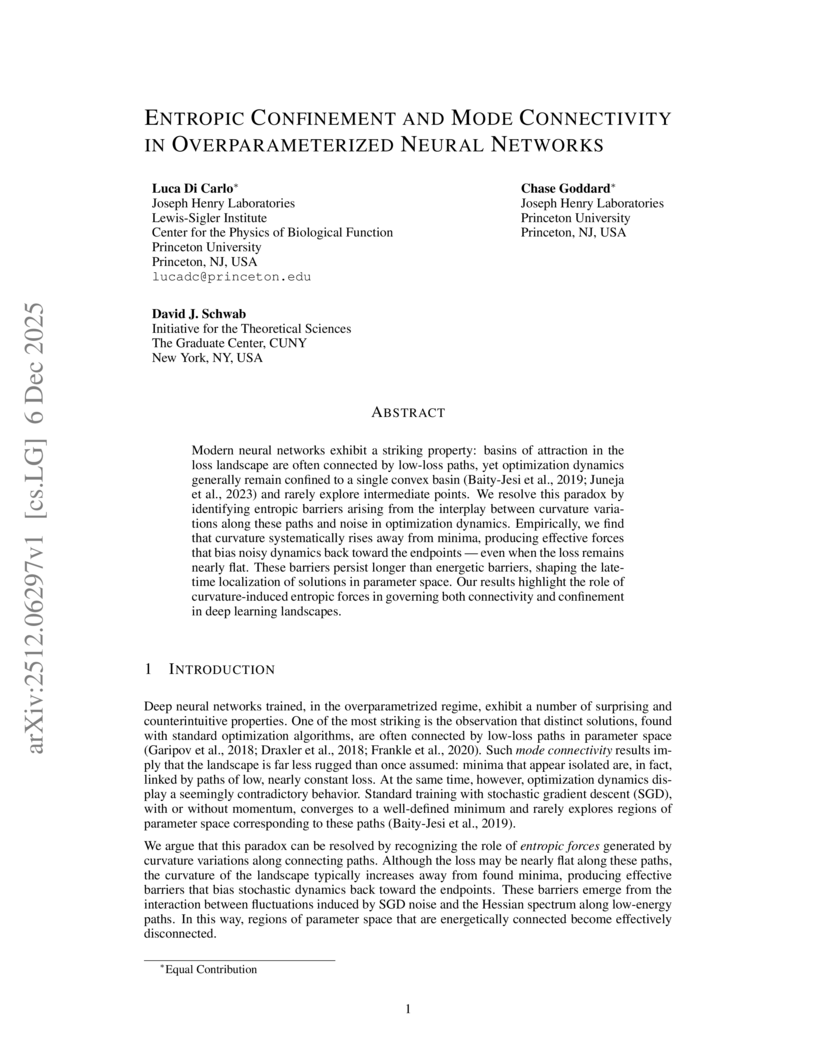

Modern neural networks exhibit a striking property: basins of attraction in the loss landscape are often connected by low-loss paths, yet optimization dynamics generally remain confined to a single convex basin and rarely explore intermediate points. We resolve this paradox by identifying entropic barriers arising from the interplay between curvature variations along these paths and noise in optimization dynamics. Empirically, we find that curvature systematically rises away from minima, producing effective forces that bias noisy dynamics back toward the endpoints - even when the loss remains nearly flat. These barriers persist longer than energetic barriers, shaping the late-time localization of solutions in parameter space. Our results highlight the role of curvature-induced entropic forces in governing both connectivity and confinement in deep learning landscapes.

Sampling from unnormalized target distributions is a fundamental yet challenging task in machine learning and statistics. Existing sampling algorithms typically require many iterative steps to produce high-quality samples, leading to high computational costs. We introduce one-step diffusion samplers which learn a step-conditioned ODE so that one large step reproduces the trajectory of many small ones via a state-space consistency loss. We further show that standard ELBO estimates in diffusion samplers degrade in the few-step regime because common discrete integrators yield mismatched forward/backward transition kernels. Motivated by this analysis, we derive a deterministic-flow (DF) importance weight for ELBO estimation without a backward kernel. To calibrate DF, we introduce a volume-consistency regularization that aligns the accumulated volume change along the flow across step resolutions. Our proposed sampler therefore achieves both sampling and stable evidence estimation in only one or a few steps. Across challenging synthetic and Bayesian benchmarks, it achieves competitive sample quality with orders-of-magnitude fewer network evaluations while maintaining robust ELBO estimates.

A theoretical framework unifies continuous and discrete diffusion models, presenting parallel discrete-time and continuous-time formulations using SDEs and CTMCs. It clarifies how forward corruption processes dictate reverse dynamics and training objectives, deriving common loss functions like Denoising Score Matching and Masked Language Modeling from a single variational inference principle.

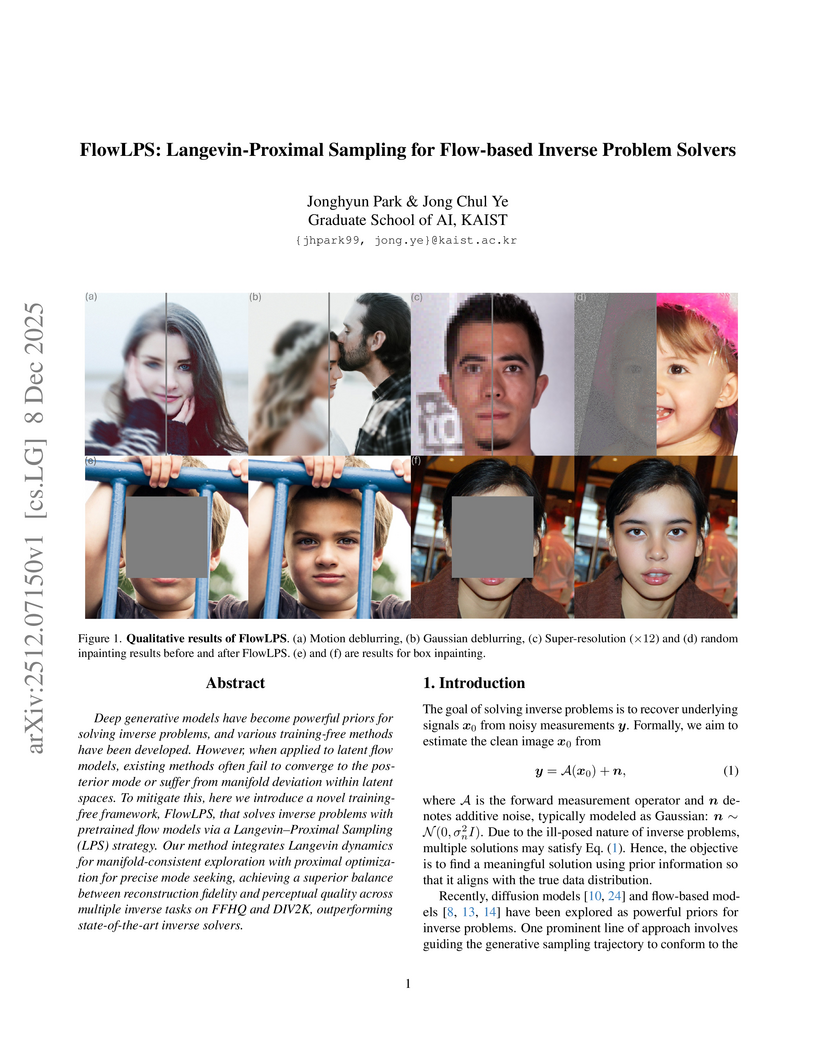

Deep generative models have become powerful priors for solving inverse problems, and various training-free methods have been developed. However, when applied to latent flow models, existing methods often fail to converge to the posterior mode or suffer from manifold deviation within latent spaces. To mitigate this, we introduce a novel training-free framework, FlowLPS, that solves inverse problems with pretrained flow models via a Langevin Proximal Sampling (LPS) strategy. Our method integrates Langevin dynamics for manifold-consistent exploration with proximal optimization for precise mode seeking, achieving a superior balance between reconstruction fidelity and perceptual quality across multiple inverse tasks on FFHQ and DIV2K and outperforming state-of-the-art inverse solvers.

04 Dec 2025

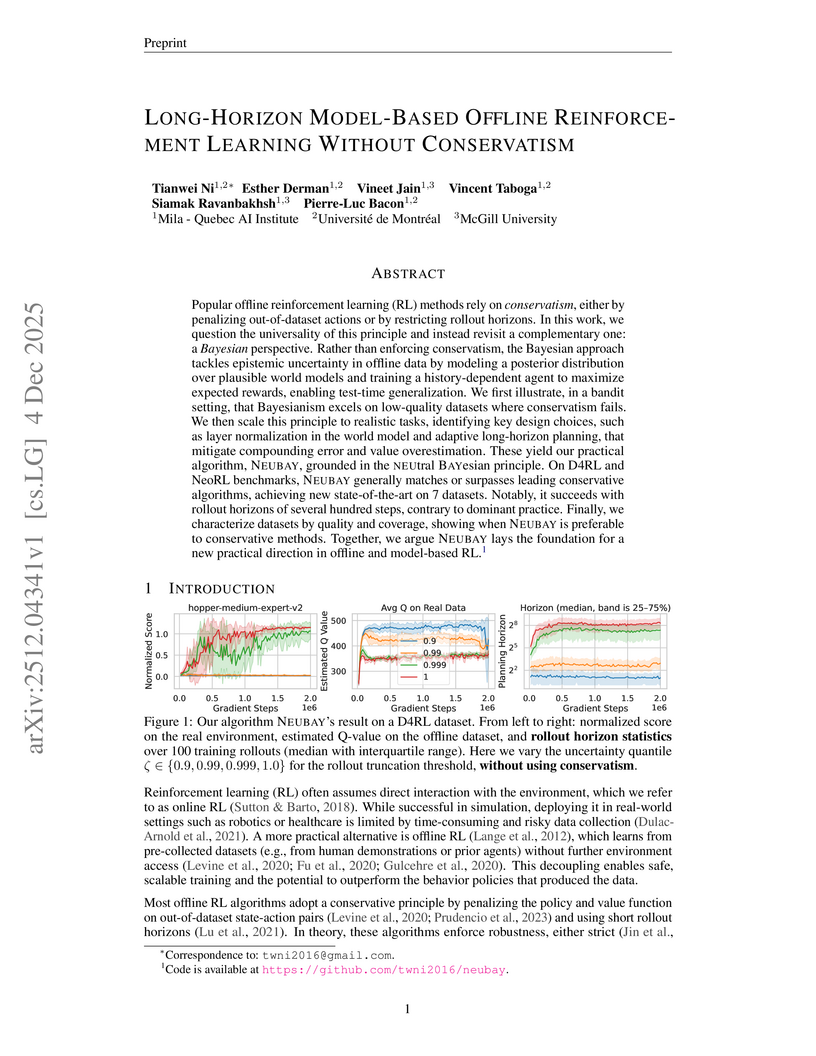

NEUBAY is an algorithm for offline reinforcement learning that challenges the reliance on conservatism, instead adopting a neutral Bayesian perspective. It achieves competitive and state-of-the-art performance across 33 D4RL and NeoRL datasets by leveraging adaptive long-horizon planning and robust world modeling.

Kolmogorov-Arnold Networks have emerged as interpretable alternatives to traditional multi-layer perceptrons. However, standard implementations lack principled uncertainty quantification capabilities essential for many scientific applications. We present a framework integrating sparse variational Gaussian process inference with the Kolmogorov-Arnold topology, enabling scalable Bayesian inference with computational complexity quasi-linear in sample size. Through analytic moment matching, we propagate uncertainty through deep additive structures while maintaining interpretability. We use three example studies to demonstrate the framework's ability to distinguish aleatoric from epistemic uncertainty: calibration of heteroscedastic measurement noise in fluid flow reconstruction, quantification of prediction confidence degradation in multi-step forecasting of advection-diffusion dynamics, and out-of-distribution detection in convolutional autoencoders. These results suggest that Sparse Variational Gaussian Process Kolmogorov-Arnold Networks (SVGP KANs) are a promising architecture for uncertainty-aware learning in scientific machine learning.

02 Dec 2025

Researchers introduced the Martingale Score, an unsupervised metric derived from Bayesian statistics, to quantify belief entrenchment in large language model reasoning. This metric revealed pervasive entrenchment across various models and tasks, with higher scores directly correlating with decreased prediction accuracy in truth-resolving domains.

Vision-language models (VLMs), such as CLIP, have shown strong generalization under zero-shot settings, yet adapting them to downstream tasks with limited supervision remains a significant challenge. Existing multi-modal prompt learning methods typically rely on fixed, shared prompts and deterministic parameters, which limits their ability to capture instance-level variation or model uncertainty across diverse tasks and domains. To tackle this issue, we propose a novel Variational Multi-Modal Prompt Learning (VaMP) framework that enables sample-specific, uncertainty-aware prompt tuning in multi-modal representation learning. VaMP generates instance-conditioned prompts by sampling from a learned posterior distribution, allowing the model to personalize its behavior based on input content. To further enhance the integration of local and global semantics, we introduce a class-aware prior derived from the instance representation and class prototype. Building upon these, we formulate prompt tuning as variational inference over latent prompt representations and train the entire framework end-to-end through reparameterized sampling. Experiments on few-shot and domain generalization benchmarks show that VaMP achieves state-of-the-art performance, highlighting the benefits of modeling both uncertainty and task structure in our method. Project page: this https URL

Regime transitions routinely break stationarity in time series, making calibrated uncertainty as important as point accuracy. We study distribution-free uncertainty for regime-switching forecasting by coupling Deep Switching State Space Models with Adaptive Conformal Inference (ACI) and its aggregated variant (AgACI). We also introduce a unified conformal wrapper that sits atop strong sequence baselines including S4, MC-Dropout GRU, sparse Gaussian processes, and a change-point local model to produce online predictive bands with finite-sample marginal guarantees under nonstationarity and model misspecification. Across synthetic and real datasets, conformalized forecasters achieve near-nominal coverage with competitive accuracy and generally improved band efficiency.
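The online update at the heart of Adaptive Conformal Inference can be sketched in a few lines. This is a minimal illustration, not the paper's wrapper: it assumes absolute residuals from some forecaster as conformity scores, a sliding calibration window, and the standard ACI rule `alpha_{t+1} = alpha_t + gamma * (target_alpha - err_t)`. The function names, window length, and synthetic regime switch are all hypothetical.

```python
import math
import random


def empirical_quantile(scores, level):
    """Quantile of `scores` at `level`; infinite outside (0, 1) so the band is all-or-nothing."""
    if level >= 1.0:
        return math.inf    # band covers everything
    if level <= 0.0:
        return -math.inf   # band covers nothing
    ordered = sorted(scores)
    index = min(len(ordered) - 1, max(0, math.ceil(level * len(ordered)) - 1))
    return ordered[index]


def aci_miscoverage(scores, target_alpha=0.1, gamma=0.02, window=100):
    """Run ACI over a stream of conformity scores and return the realized miscoverage rate."""
    alpha_t = target_alpha
    misses = 0
    steps = 0
    for t in range(window, len(scores)):
        # Band half-width from the most recent `window` scores.
        radius = empirical_quantile(scores[t - window:t], 1.0 - alpha_t)
        err = 1 if scores[t] > radius else 0
        misses += err
        steps += 1
        # Widen the band after a miss, narrow it after a hit.
        alpha_t += gamma * (target_alpha - err)
    return misses / steps


# Synthetic residuals with a regime switch: the noise scale triples halfway through.
rng = random.Random(0)
scores = [abs(rng.gauss(0.0, 1.0 if t < 1000 else 3.0)) for t in range(2000)]
miscoverage = aci_miscoverage(scores)
print(f"realized miscoverage: {miscoverage:.3f} (target 0.100)")
```

Because the update is a simple feedback loop on the miss indicator, the long-run miscoverage stays near the target even across the regime switch, without assuming the scores are exchangeable.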

Reliable real-time analysis of sensor data is essential for structural health monitoring (SHM) of high-value assets, yet a major challenge is to obtain spatially resolved full-field aleatoric and epistemic uncertainties for trustworthy decision-making. We present an integrated SHM framework that combines principal component analysis (PCA), a Bayesian neural network (BNN), and Hamiltonian Monte Carlo (HMC) inference, mapping sparse strain gauge measurements onto leading PCA modes to reconstruct full-field strain distributions with uncertainty quantification. The framework was validated through cyclic four-point bending tests on carbon fiber reinforced polymer (CFRP) specimens with varying crack lengths, achieving accurate strain field reconstruction (R² > 0.9) while simultaneously producing real-time uncertainty fields. A key contribution is that the BNN yields robust full-field strain reconstructions from noisy experimental data with crack-induced strain singularities, while also providing explicit representations of two complementary uncertainty fields. Considered jointly in full-field form, the aleatoric and epistemic uncertainty fields make it possible to diagnose, at a local level, whether low-confidence regions are driven by data-inherent issues or by model-related limitations, thereby supporting reliable decision-making. Collectively, the results demonstrate that the proposed framework advances SHM toward trustworthy digital twin deployment and risk-aware structural diagnostics.
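One common way to separate the two uncertainty types from posterior samples is the law of total variance. The sketch below shows that decomposition for a single spatial point. It is an assumption that the framework above uses this form, since the abstract does not say; the function name and the sample values are hypothetical.

```python
from statistics import fmean, pvariance


def decompose_uncertainty(predicted_means, predicted_noise_variances):
    """Split predictive variance at one location using posterior samples.

    aleatoric: average of the noise variances predicted by each posterior draw
    epistemic: spread of the predicted means across posterior draws
    """
    aleatoric = fmean(predicted_noise_variances)
    epistemic = pvariance(predicted_means)
    return aleatoric, epistemic, aleatoric + epistemic


# Hypothetical outputs of four posterior draws (e.g. HMC samples) at one point.
means = [1.0, 1.2, 0.8, 1.0]
noise_variances = [0.04, 0.05, 0.03, 0.04]

aleatoric, epistemic, total = decompose_uncertainty(means, noise_variances)
print(f"aleatoric={aleatoric:.3f}, epistemic={epistemic:.3f}, total={total:.3f}")
```

Applying the same function at every point of the reconstructed field would give two maps: one that stays high wherever the data are noisy, and one that shrinks as the posterior concentrates.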

We liberate Equilibrium Propagation (EP) from the limit of infinitesimal perturbations by establishing a finite-nudge foundation for local credit assignment. By modeling network states as Gibbs-Boltzmann distributions rather than deterministic points, we prove that the gradient of the difference in Helmholtz free energy between a nudged and free phase is exactly the difference in expected local energy derivatives. This validates the classic Contrastive Hebbian Learning update as an exact gradient estimator for arbitrary finite nudging, requiring neither infinitesimal approximations nor convexity. Furthermore, we derive a generalized EP algorithm based on the path integral of loss-energy covariances, enabling learning with strong error signals that standard infinitesimal approximations cannot support.

Robots in uncertain real-world environments must perform both goal-directed and exploratory actions. However, most deep learning-based control methods neglect exploration and struggle under uncertainty. To address this, we adopt deep active inference, a framework that accounts for human goal-directed and exploratory actions. Yet, conventional deep active inference approaches face challenges due to limited environmental representation capacity and high computational cost in action selection. We propose a novel deep active inference framework that consists of a world model, an action model, and an abstract world model. The world model encodes environmental dynamics into hidden state representations at slow and fast timescales. The action model compresses action sequences into abstract actions using vector quantization, and the abstract world model predicts future slow states conditioned on the abstract action, enabling low-cost action selection. We evaluate the framework on object-manipulation tasks with a real-world robot. Results show that it achieves high success rates across diverse manipulation tasks and switches between goal-directed and exploratory actions in uncertain settings, while making action selection computationally tractable. These findings highlight the importance of modeling multiple timescale dynamics and abstracting actions and state transitions.

Reconstructing full fields from extremely sparse and random measurements is a longstanding ill-posed inverse problem. A powerful framework for addressing such challenges is hierarchical probabilistic modeling, where uncertainty is represented by intermediate variables and resolved through marginalization during inference. Inspired by this principle, we propose Cascaded Sensing (Cas-Sensing), a hierarchical reconstruction framework that integrates an autoencoder-diffusion cascade. First, a neural operator-based functional autoencoder reconstructs the dominant structures of the original field - including large-scale components and geometric boundaries - from arbitrary sparse inputs, serving as an intermediate variable. Then, a conditional diffusion model, trained with a mask-cascade strategy, generates fine-scale details conditioned on these large-scale structures. To further enhance fidelity, measurement consistency is enforced via the manifold constrained gradient based on Bayesian posterior sampling during the generation process. This cascaded pipeline substantially alleviates ill-posedness, delivering accurate and robust reconstructions. Experiments on both simulation and real-world datasets demonstrate that Cas-Sensing generalizes well across varying sensor configurations and geometric boundaries, making it a promising tool for practical deployment in scientific and engineering applications.

Stochastic Neural Dynamic Mode Decomposition (Stochastic NODE–DMD) introduces a framework that reformulates Dynamic Mode Decomposition using a stochastic neural ordinary differential equation, coupled with neural implicit representations. It enables continuous spatiotemporal field reconstruction and principled uncertainty quantification for high-dimensional nonlinear dynamical systems from sparse, noisy observations.

Researchers from the University of California, Irvine, introduced a Bayesian framework to quantify uncertainty in binary evaluation metrics for Large Language Model (LLM) behavior. This method, enhanced with sequential sampling strategies, offers a more nuanced understanding of stochastic LLM outputs while also reducing the computational cost of evaluation.
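A minimal sketch of the general idea, assuming a Beta-Bernoulli model for a pass/fail metric: each sampled output is a Bernoulli trial, the pass rate gets a Beta prior, and sampling stops once the posterior is tight enough. The abstract does not specify the model or the stopping rule, so the uniform prior, the function names, and the standard-deviation threshold are all illustrative assumptions.

```python
import math
import random


def beta_posterior(successes, trials, prior_a=1.0, prior_b=1.0):
    """Posterior Beta(a, b) for a pass rate after `trials` binary outcomes."""
    a = prior_a + successes
    b = prior_b + (trials - successes)
    mean = a / (a + b)
    variance = a * b / ((a + b) ** 2 * (a + b + 1.0))
    return a, b, mean, variance


def evaluate_sequentially(draw_outcome, max_trials, std_tolerance):
    """Sample outcomes one at a time; stop early once the posterior std is small enough."""
    successes = 0
    trials = 0
    mean, std = 0.5, math.inf
    while trials < max_trials:
        successes += 1 if draw_outcome() else 0
        trials += 1
        _, _, mean, variance = beta_posterior(successes, trials)
        std = math.sqrt(variance)
        if std < std_tolerance:
            break
    return trials, mean, std


# Hypothetical stochastic model behaviour: passes the check about 80% of the time.
rng = random.Random(0)
trials_used, pass_rate, pass_std = evaluate_sequentially(
    lambda: rng.random() < 0.8, max_trials=500, std_tolerance=0.05
)
print(f"{trials_used} samples, pass rate ~ {pass_rate:.2f} +/- {pass_std:.2f}")
```

The early stop is where the cost saving comes from: prompts whose outcomes are nearly always the same need far fewer samples than prompts near a 50% pass rate.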
