Datadog

Researchers at Datadog AI Research introduced TOTO, a specialized Time Series Foundation Model, and BOOM, a new benchmark, to address the unique challenges of observability time series forecasting. TOTO achieved state-of-the-art zero-shot performance on the BOOM benchmark, improving MASE by 13.1% and CRPS by 12.4% over existing models, and also topped other general-purpose benchmarks.

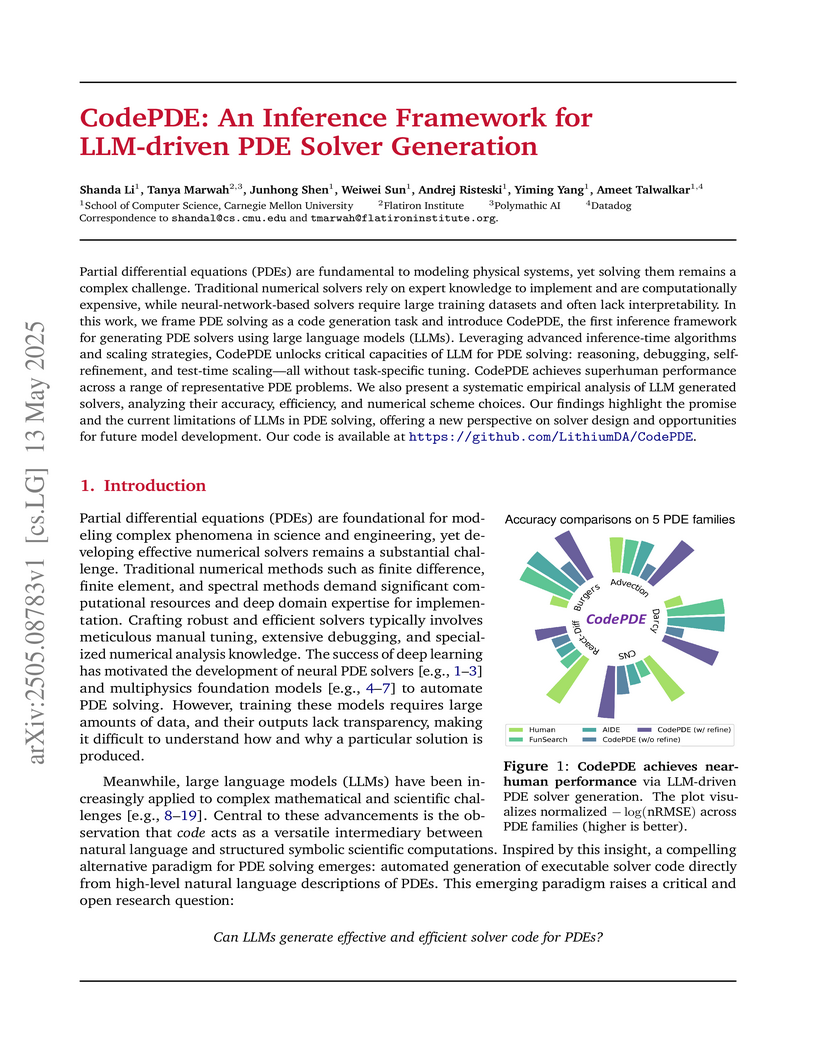

Carnegie Mellon researchers introduce CodePDE, a framework that enables large language models to generate, debug, and refine numerical solvers for partial differential equations, achieving performance comparable to or exceeding human experts on 4 out of 5 evaluated PDE families while maintaining solver transparency and interpretability.

This technical report describes the Time Series Optimized Transformer for

Observability (Toto), a new state of the art foundation model for time series

forecasting developed by Datadog. In addition to advancing the state of the art

on generalized time series benchmarks in domains such as electricity and

weather, this model is the first general-purpose time series forecasting

foundation model to be specifically tuned for observability metrics.

Toto was trained on a dataset of one trillion time series data points, the

largest among all currently published time series foundation models. Alongside

publicly available time series datasets, 75% of the data used to train Toto

consists of fully anonymous numerical metric data points from the Datadog

platform.

In our experiments, Toto outperforms existing time series foundation models

on observability data. It does this while also excelling at general-purpose

forecasting tasks, achieving state-of-the-art zero-shot performance on multiple

open benchmark datasets.

Large language model (LLM) agents show promise in automating machine learning (ML) engineering. However, existing agents typically operate in isolation on a given research problem, without engaging with the broader research community, where human researchers often gain insights and contribute by sharing knowledge. To bridge this gap, we introduce MLE-Live, a live evaluation framework designed to assess an agent's ability to communicate with and leverage collective knowledge from a simulated Kaggle research community. Building on this framework, we propose CoMind, an multi-agent system designed to actively integrate external knowledge. CoMind employs an iterative parallel exploration mechanism, developing multiple solutions simultaneously to balance exploratory breadth with implementation depth. On 75 past Kaggle competitions within our MLE-Live framework, CoMind achieves a 36% medal rate, establishing a new state of the art. Critically, when deployed in eight live, ongoing competitions, CoMind outperforms 92.6% of human competitors on average, placing in the top 5% on three official leaderboards and the top 1% on one.

Consistent range-hashing is a technique used in distributed systems, either

directly or as a subroutine for consistent hashing, commonly to realize an even

and stable data distribution over a variable number of resources. We introduce

FlipHash, a consistent range-hashing algorithm with constant time complexity

and low memory requirements. Like Jump Consistent Hash, FlipHash is intended

for applications where resources can be indexed sequentially. Under this

condition, it ensures that keys are hashed evenly across resources and that

changing the number of resources only causes keys to be remapped from a removed

resource or to an added one, but never shuffled across persisted ones. FlipHash

differentiates itself with its low computational cost, achieving constant-time

complexity. We show that FlipHash beats Jump Consistent Hash's cost, which is

logarithmic in the number of resources, both theoretically and in experiments

over practical settings.

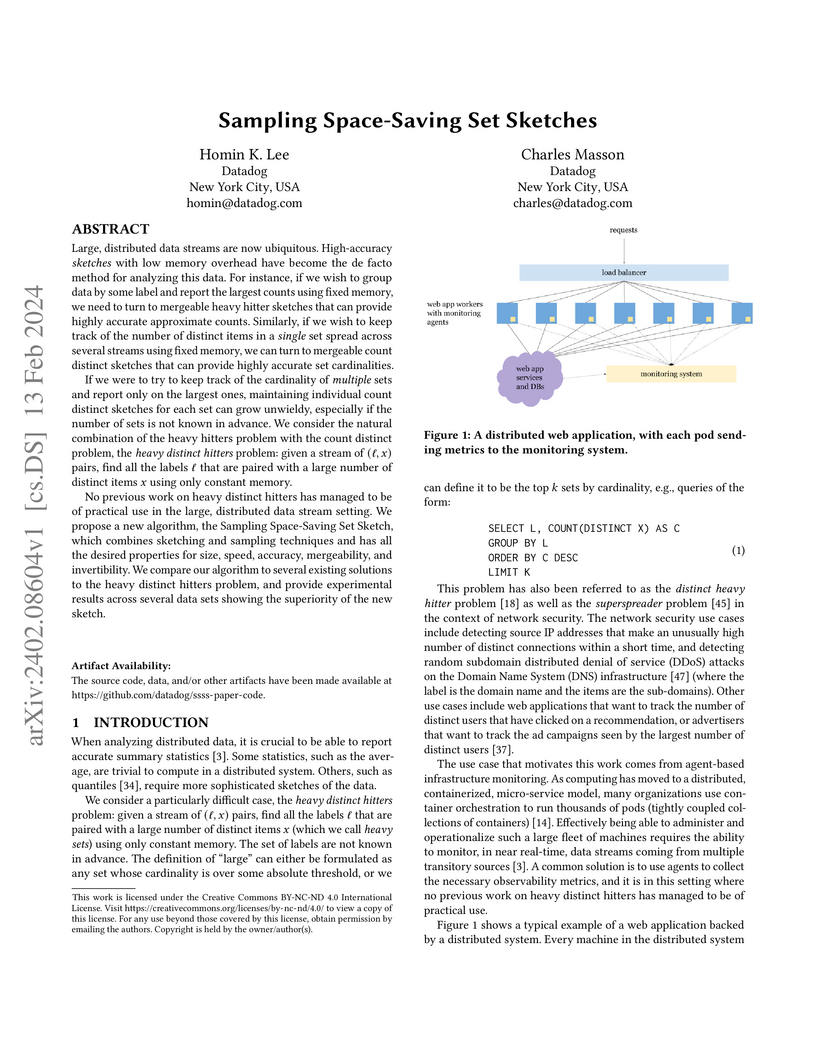

Large, distributed data streams are now ubiquitous. High-accuracy sketches

with low memory overhead have become the de facto method for analyzing this

data. For instance, if we wish to group data by some label and report the

largest counts using fixed memory, we need to turn to mergeable heavy hitter

sketches that can provide highly accurate approximate counts. Similarly, if we

wish to keep track of the number of distinct items in a single set spread

across several streams using fixed memory, we can turn to mergeable count

distinct sketches that can provide highly accurate set cardinalities.

If we were to try to keep track of the cardinality of multiple sets and

report only on the largest ones, maintaining individual count distinct sketches

for each set can grow unwieldy, especially if the number of sets is not known

in advance. We consider the natural combination of the heavy hitters problem

with the count distinct problem, the heavy distinct hitters problem: given a

stream of (ℓ,x) pairs, find all the labels ℓ that are paired with a

large number of distinct items x using only constant memory.

No previous work on heavy distinct hitters has managed to be of practical use

in the large, distributed data stream setting. We propose a new algorithm, the

Sampling Space-Saving Set Sketch, which combines sketching and sampling

techniques and has all the desired properties for size, speed, accuracy,

mergeability, and invertibility. We compare our algorithm to several existing

solutions to the heavy distinct hitters problem, and provide experimental

results across several data sets showing the superiority of the new sketch.

There are no more papers matching your filters at the moment.