ENSIAS

27 Dec 2022

We propose Hercules, a parallel tree-based technique for exact similarity search on massive disk-based data series collections. We present novel index construction and query answering algorithms that leverage different summarization techniques, carefully schedule costly operations, optimize memory and disk accesses, and exploit the multi-threading and SIMD capabilities of modern hardware to perform CPU-intensive calculations. We demonstrate the superiority and robustness of Hercules with an extensive experimental evaluation against state-of-the-art techniques, using many synthetic and real datasets, and query workloads of varying difficulty. The results show that Hercules performs up to one order of magnitude faster than the best competitor (which is not always the same technique). Moreover, Hercules is the only index that outperforms the optimized scan in all scenarios, including the hard query workloads on disk-based datasets. This paper was published in the Proceedings of the VLDB Endowment, Volume 15, Number 10, June 2022.

18 Apr 2018

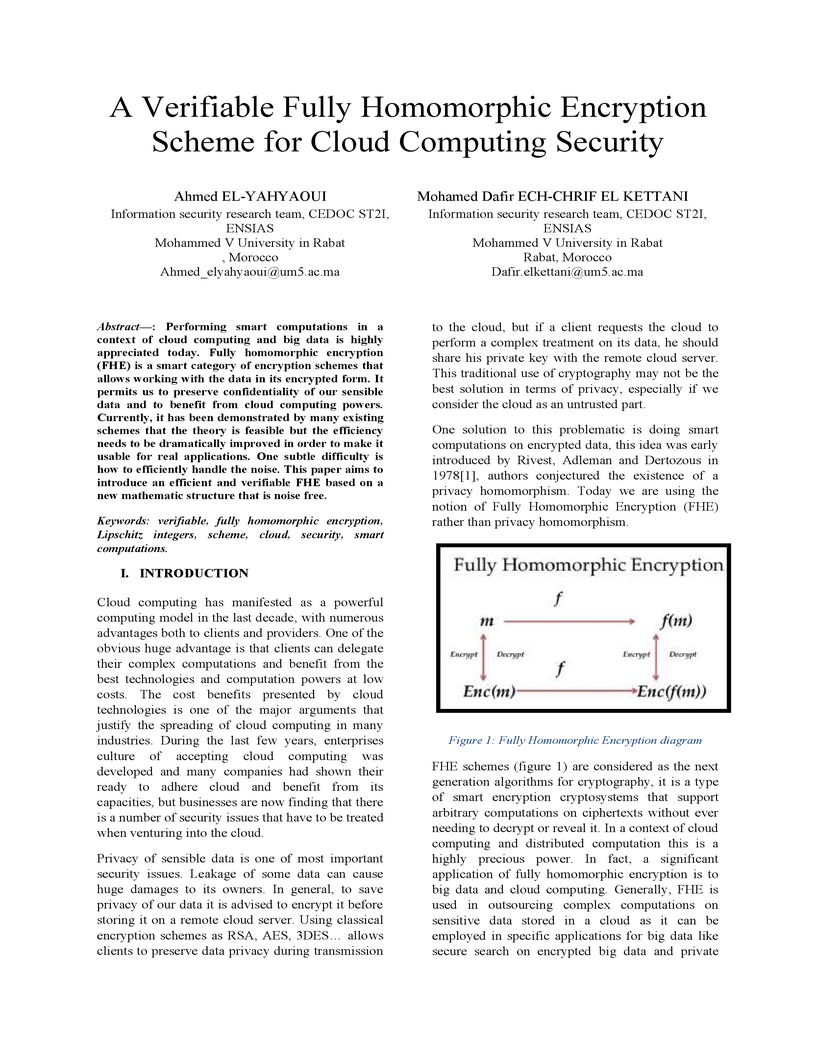

Performing smart computations in the context of cloud computing and big data is highly valued today. Fully homomorphic encryption (FHE) is a category of encryption schemes that allows working with data in its encrypted form. It lets us preserve the confidentiality of our sensitive data while benefiting from the computing power of the cloud. Many existing schemes have demonstrated that the theory is feasible, but efficiency needs to improve dramatically to make it usable in real applications. One subtle difficulty is how to handle the noise efficiently. This paper aims to introduce an efficient and verifiable FHE scheme based on a new mathematical structure that is noise-free.

27 Aug 2011

The field of information extraction from the Web emerged with the growth of the Web and the multiplication of online data sources. This paper analyzes information extraction methods. It presents a service-oriented approach to web information extraction that considers both web data management and extraction services. We then propose an SOA-based architecture to enhance flexibility and allow on-the-fly modification of web extraction services. An implementation of the proposed architecture is presented at the middleware level of Java Enterprise Edition (JEE) servers.

Dimensionality reduction is an important and unavoidable preprocessing step in hyperspectral image (HSI) classification. Some methods use feature selection or extraction algorithms based on spectral and spatial information. In this paper, we introduce a new methodology for dimensionality reduction and classification of HSI that takes into account both spectral and spatial information, based on mutual information. We characterise the spatial information by texture features extracted from the grey level cooccurrence matrix (GLCM); we use Homogeneity, Contrast, Correlation and Energy. For classification, we use a support vector machine (SVM). The experiments are performed on three well-known hyperspectral benchmark datasets. The proposed algorithm is compared with state-of-the-art methods. The results of this fusion show that our method outperforms the other approaches, increasing classification accuracy within a reasonable running time. The method could be further improved for better performance.

Keywords: hyperspectral images; classification; spectral and spatial features; grey level cooccurrence matrix; GLCM; mutual information; support vector machine; SVM.
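
As an illustration of the four GLCM texture features named in the abstract above, here is a minimal sketch in plain Python. It is not the authors' implementation. The function names `glcm` and `texture_features`, the single horizontal offset, and the symmetric normalisation are assumptions made for this example. Feature definitions vary between toolkits; this sketch assumes Energy is the sum of squared GLCM entries and Homogeneity uses the weight 1 / (1 + (i - j)^2).

```python
from math import sqrt


def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalised grey level cooccurrence matrix.

    image  : 2D list of integer grey levels in [0, levels)
    dx, dy : pixel offset defining which neighbour is paired (assumed: right neighbour)
    """
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1
                counts[j][i] += 1  # count the pair in both directions
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]


def texture_features(p):
    """Homogeneity, Contrast, Correlation and Energy of a normalised GLCM p."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    contrast = sum(v * (i - j) ** 2 for i, j, v in cells)
    homogeneity = sum(v / (1 + (i - j) ** 2) for i, j, v in cells)
    energy = sum(v * v for _, _, v in cells)
    mu_i = sum(i * v for i, _, v in cells)
    mu_j = sum(j * v for _, j, v in cells)
    var_i = sum(v * (i - mu_i) ** 2 for i, _, v in cells)
    var_j = sum(v * (j - mu_j) ** 2 for _, j, v in cells)
    denom = sqrt(var_i * var_j)
    covariance = sum(v * (i - mu_i) * (j - mu_j) for i, j, v in cells)
    # Correlation is undefined for a constant patch; this sketch returns 1.0 there.
    correlation = covariance / denom if denom > 0 else 1.0
    return {
        "homogeneity": homogeneity,
        "contrast": contrast,
        "correlation": correlation,
        "energy": energy,
    }


# Small 4-level example patch (hypothetical data, one spectral band).
patch = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
features = texture_features(glcm(patch, levels=4))
```

In a pipeline like the one the abstract describes, such features would presumably be computed per band over local windows and combined with the spectral values before band selection and SVM classification; those steps are not shown here.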
