Jesse Leo Kass and Kirsten Wickelgren. arXiv:1708.01175v1 [math.AG], 3 Aug 2017

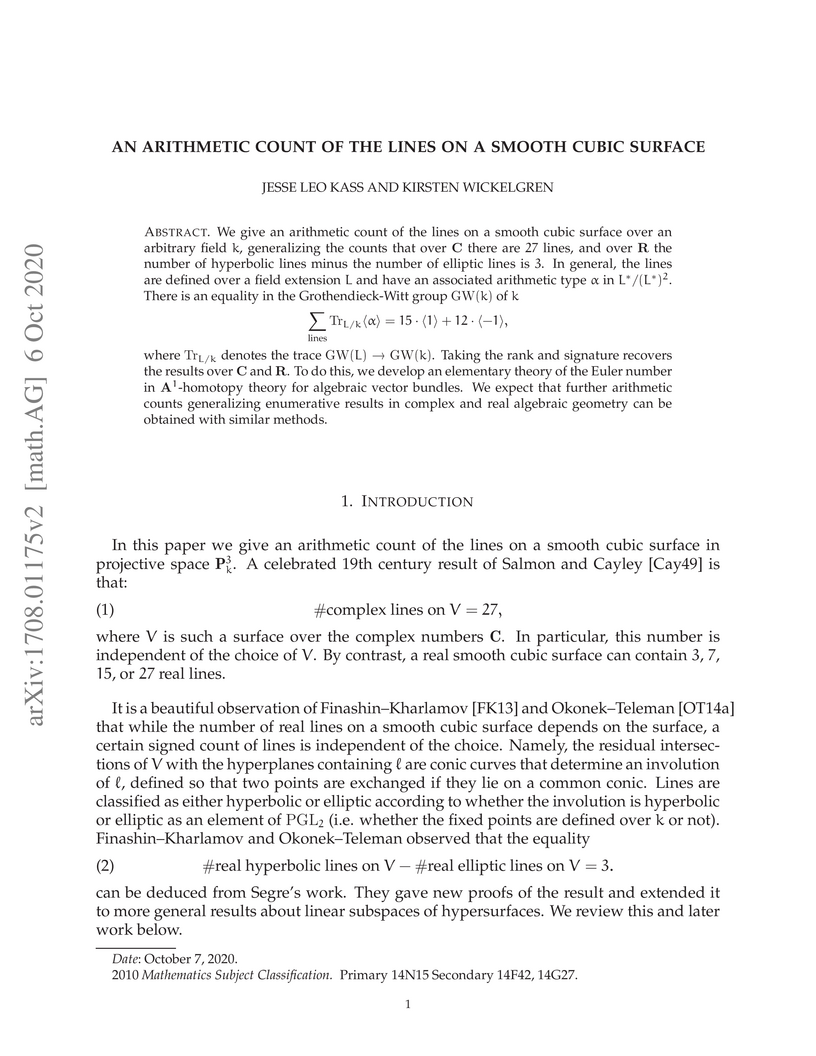

We give an arithmetic count of the lines on a smooth cubic surface over an arbitrary field $k$, generalizing the counts that over $\mathbb{C}$ there are 27 lines, and over $\mathbb{R}$ the number of hyperbolic lines minus the number of elliptic lines is 3. In general, the lines are defined over a field extension $L$ and have an associated arithmetic type $\alpha$ in $L^*/(L^*)^2$. There is an equality in the Grothendieck-Witt group $\mathrm{GW}(k)$ of $k$

$$\sum_{\text{lines}} \operatorname{Tr}_{L/k} \langle \alpha \rangle = 15 \cdot \langle 1 \rangle + 12 \cdot \langle -1 \rangle,$$

where $\operatorname{Tr}_{L/k}$ denotes the trace $\mathrm{GW}(L) \to \mathrm{GW}(k)$. Taking the rank and signature recovers the results over $\mathbb{C}$ and $\mathbb{R}$. To do this, we develop an elementary theory of the Euler number in $\mathbb{A}^1$-homotopy theory for algebraic vector bundles. We expect that further arithmetic counts generalizing enumerative results in complex and real algebraic geometry can be obtained with similar methods.
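As a quick check of the claim about rank and signature, here is a short worked computation. It assumes the standard conventions, which the abstract does not spell out, that each rank-one form $\langle a \rangle$ has rank 1 and that over $\mathbb{R}$ the forms $\langle 1 \rangle$ and $\langle -1 \rangle$ have signatures $+1$ and $-1$; the operator name $\operatorname{sgn}$ is notation chosen here for illustration.

```latex
\begin{align*}
\operatorname{rank}\bigl(15\cdot\langle 1\rangle + 12\cdot\langle -1\rangle\bigr) &= 15 + 12 = 27,\\
\operatorname{sgn}\bigl(15\cdot\langle 1\rangle + 12\cdot\langle -1\rangle\bigr) &= 15 - 12 = 3.
\end{align*}
```

Under these conventions the rank matches the 27 lines over $\mathbb{C}$, and the signature matches the count of hyperbolic minus elliptic lines over $\mathbb{R}$.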

29 Jul 2024

University of Washington; Michigan State University; University of Canterbury; DESY; Georgia Institute of Technology; Sungkyunkwan University; University of California, Irvine; University of Copenhagen; Ohio State University; Pennsylvania State University; Columbia University; Aarhus University; University of Pennsylvania; University of Maryland; University of Wisconsin-Madison; University of Alberta; University of Rochester; MIT; Chiba University; University of Geneva; Karlsruhe Institute of Technology; University of Delhi; Universität Oldenburg; Niels Bohr Institute; University of Alabama; University of South Dakota; University of California Berkeley; Ruhr-Universität Bochum; University of Adelaide; Kobe University; Technische Universität Dortmund; University of Kansas; University of California, Santa Cruz; University of California Riverside; University of Würzburg; Universität Münster; Erlangen Centre for Astroparticle Physics; University of Mainz; University of Alaska Anchorage; Southern University and A&M College; Bartol Research Institute; National Chiao Tung University; Universität Wuppertal; Delaware State University; Oskar Klein Centre; Université Libre de Bruxelles; RWTH Aachen University; Vrije Universiteit Brussel

The LIGO/Virgo collaboration published the catalogs GWTC-1, GWTC-2.1 and GWTC-3 containing candidate gravitational-wave (GW) events detected during its runs O1, O2 and O3. These GW events can be possible sites of neutrino emission. In this paper, we present a search for neutrino counterparts of 90 GW candidates using IceCube DeepCore, the low-energy infill array of the IceCube Neutrino Observatory. The search is conducted using an unbinned maximum likelihood method, within a time window of 1000 s and uses the spatial and timing information from the GW events. The neutrinos used for the search have energies ranging from a few GeV to several tens of TeV. We do not find any significant emission of neutrinos, and place upper limits on the flux and the isotropic-equivalent energy emitted in low-energy neutrinos. We also conduct a binomial test to search for source populations potentially contributing to neutrino emission. We report a non-detection of a significant neutrino-source population with this test.

National Institute of Science Education and Research; Homi Bhabha National Institute; Jet Propulsion Laboratory, California Institute of Technology; NASA/Goddard Space Flight Center; American University; Johns Hopkins Applied Physics Laboratory; University of Missouri-St. Louis

Comets have similar compositions to interstellar medium ices, suggesting that at least some of their molecules may be inherited from an earlier stage of evolution. To investigate the degree to which this might have occurred, we compare the composition of individual comets to that of the well-studied protostellar region IRAS 16293-2422B. We show that the observed molecular abundance ratios in several comets correlate well with those observed in the protostellar source. However, this does not necessarily mean that the cometary abundances are identical to the protostellar ones. We find that the abundance ratios of many molecules present in comets are enhanced compared to their protostellar counterparts. For COH-molecules, the data suggest that more complex species, e.g. HCOOH, CH3CHO, and HCOOCH3, have higher abundances relative to methanol in comets. For N-bearing molecules, the ratios of nitriles relative to CH3CN (HC3N/CH3CN and HCN/CH3CN) tend to be enhanced. The abundances of cometary SO and SO2 relative to H2S are enhanced, whereas OCS/H2S is reduced. Using a subset of comets with a common set of observed molecules, we suggest a possible means of determining the relative degree to which they retain interstellar ices. This analysis suggests that over 84% of COH-bearing molecules can be explained by the protostellar composition. The possible fraction inherited from the protostellar region is lower for N-molecules, at only 26--74%. While this is still speculative, especially since few comets have large numbers of observed molecules, it provides a possible route for determining the relative degree to which comets contain disk-processed material.

26 Aug 2025

Nikhil Sethia, Joseph Sushil Rao, Amit Manicka, Michael L. Etheridge, Erik B. Finger, John C. Bischof, Cari S. Dutcher. University of Minnesota

Large particle sorters have potential applications in sorting microplastics and large biomaterials (>50 micrometer), such as tissues, spheroids, organoids, and embryos. Though great advancements have been made in image-based sorting of cells and particles (<50 micrometer), their translation to high-throughput sorting of larger biomaterials and particles (>50 micrometer) has been more limited. An image-based detection technique is highly desirable due to the richness of the data (including size, shape, color, morphology, and optical density) that can be extracted from live images of individualized biomaterials or particles. Such a detection technique is label-free and can be integrated with a contact-free actuation mechanism such as one based on traveling surface acoustic waves (TSAWs). Recent advances in using TSAWs for sorting cells and particles (<50 micrometer) have demonstrated short response times (<1 ms), high biocompatibility, and reduced energy requirements for actuation. Additionally, TSAW-based devices are miniaturized and easier to integrate with an image-based detection technique. In this work, a high-throughput, image-detection-based large particle microfluidic sorting technique is implemented. The technique is used to separate binary mixtures of small and large polyethylene particles (ranging between ~45-180 micrometer in size). All particles in flow were first optically interrogated for size and then, if they satisfied the size cutoff criterion, actuated using momentum transfer from TSAW pulses. The effect of control parameters such as duration and power of the TSAW actuation pulse, inlet flow rates, and sample dilution on sorting efficiency and throughput was observed. At the chosen conditions, this sorting technique can sort on average ~4.9-34.3 particles/s (perform ~2-3 actuations/s), depending on the initial sample composition and concentration.

23 Sep 2025

Network models are increasingly vital in psychometrics for analyzing relational data, which are often accompanied by high-dimensional node attributes. Joint latent space models (JLSM) provide an elegant framework for integrating these data sources by assuming a shared underlying latent representation; however, a persistent methodological challenge is determining the dimension of the latent space, as existing methods typically require pre-specification or rely on computationally intensive post-hoc procedures. We develop a novel Bayesian joint latent space model that incorporates a cumulative ordered spike-and-slab (COSS) prior. This approach enables the latent dimension to be inferred automatically and simultaneously with all model parameters. We develop an efficient Markov Chain Monte Carlo (MCMC) algorithm for posterior computation. Theoretically, we establish that the posterior distribution concentrates on the true latent dimension and that parameter estimates achieve Hellinger consistency at a near-optimal rate that adapts to the unknown dimensionality. Through extensive simulations and two real-data applications, we demonstrate the method's superior performance in both dimension recovery and parameter estimation. Our work offers a principled, computationally efficient, and theoretically grounded solution for adaptive dimension selection in psychometric network models.

29 Sep 2025

We continue the study of operator algebras over the p-adic integers, initiated in our previous work [1]. In this sequel, we develop further structural results and provide new families of examples. We introduce the notion of p-adic von Neumann algebras, and analyze those with trivial center, which we call "factors". In particular, we show that ICC groups provide examples of factors. We then establish a characterization of p-simplicity for groupoid operator algebras, showing its relation to effectiveness and minimality. A central part of the paper is devoted to a p-adic analogue of the GNS construction, leading to a representation theorem for Banach $*$-algebras over $\mathbb{Z}_p$. As applications, we exhibit large classes of p-adic operator algebras, including residually finite-rank algebras and affinoid algebras with the spectral norm. Finally, we investigate the K-theory of p-adic operator algebras, including the computation of homotopy analytic K-theory of continuous $\mathbb{Z}_p$-valued functions on a compact Hausdorff space and the analytic (non-homotopy invariant) K-theory of certain p-adically complete Banach algebras in terms of continuous K-theory. Together, these results extend the foundations of the emerging theory of p-adic operator algebras.

07 Jun 2023

National Institute of Technology Meghalaya; University of North Bengal

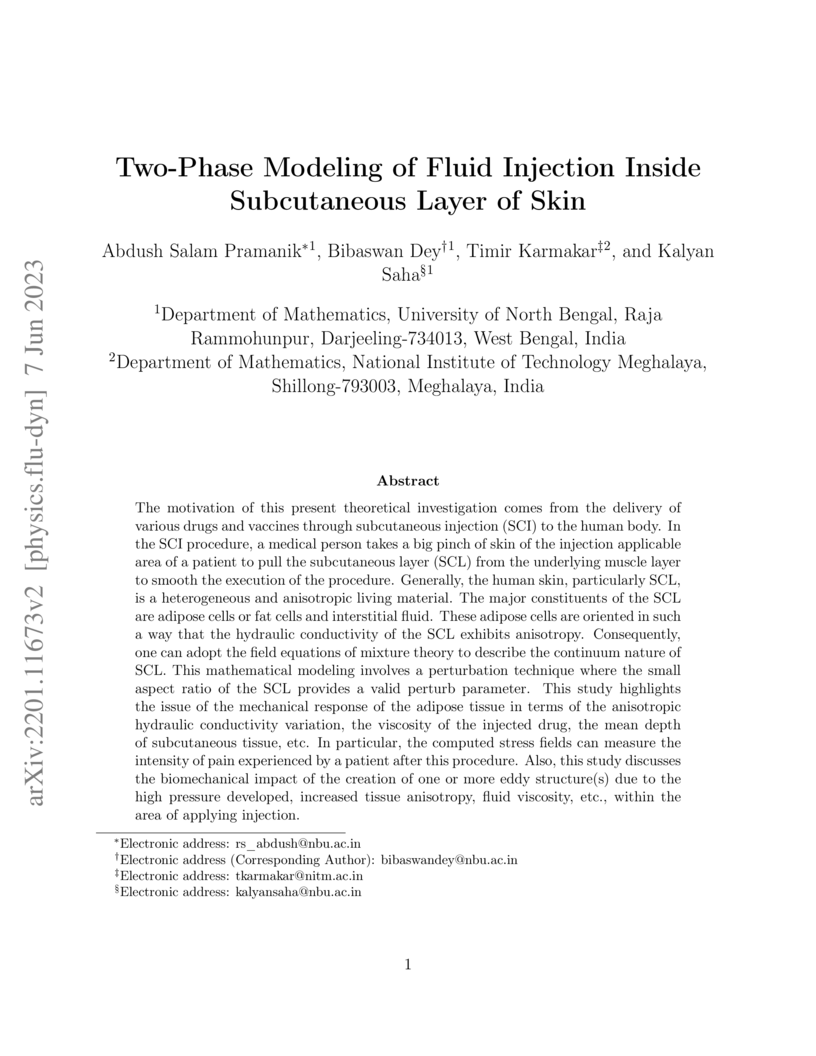

Motivated by the delivery of drugs and vaccines through subcutaneous (SC) injection in human bodies, a theoretical investigation is performed using a two-dimensional mathematical model in Cartesian coordinates. In general, a large variety of biological tissues behave as deformable porous material with anisotropic hydraulic conductivity. Consequently, one can adopt the field equations of mixture theory to describe the behavior of the interstitial fluid and adipose cells present in the subcutaneous layer of skin. During the procedure, a medical person takes a big pinch of the skin of the injection application area between the thumb and index finger and holds it. This process pulls the fatty tissue away from the muscle and makes the injection process easier. In this situation, the small aspect ratio (denoted as $\delta$) of the subcutaneous layer (SCL), i.e., $\delta^2 \sim 0.01$, simplifies the governing equation for tissue dynamics, as it becomes a perturbation parameter. This study highlights the mechanical response of the adipose tissue in terms of the anisotropic hydraulic conductivity variation, the viscosity of the injected drug, the mean depth of subcutaneous tissue, etc. In particular, the computed stress fields can measure the intensity of pain experienced by a patient after this procedure. This study also discusses the biomechanical impact of the creation of one or more eddy structures near the injection site, which is due to the high pressure developed there, increased tissue anisotropy, fluid viscosity, etc.

22 Sep 2025

Many research questions -- particularly those in environmental health -- do not involve binary exposures. In environmental epidemiology, this includes multivariate exposure mixtures with nondiscrete components. Causal inference estimands and estimators to quantify the relationship between an exposure mixture and an outcome are relatively few. We propose an approach to quantify a relationship between a shift in the exposure mixture and the outcome -- either in the single timepoint or longitudinal setting. The shift in the exposure mixture can be defined flexibly in terms of shifting one or more components, including examining interaction between mixture components, and in terms of shifting the same or different amounts across components. The estimand we discuss has a similar interpretation as a main effect regression coefficient. First, we focus on choosing a shift in the exposure mixture supported by observed data. We demonstrate how to assess extrapolation and modify the shift to minimize reliance on extrapolation. Second, we propose estimating the relationship between the exposure mixture shift and outcome completely nonparametrically, using machine learning in model-fitting. This is in contrast to other current approaches, which employ parametric modeling for at least some relationships, which we would like to avoid because parametric modeling assumptions in complex, nonrandomized settings are tenuous at best. We are motivated by longitudinal data on pesticide exposures among participants in the CHAMACOS Maternal Cognition cohort. We examine the relationship between longitudinal exposure to agricultural pesticides and risk of hypertension. We provide step-by-step code to facilitate the easy replication and adaptation of the approaches we use.

University of North Carolina at Charlotte

Recent advancements in artificial intelligence (AI) have seen the emergence of smart video surveillance (SVS) in many practical applications, particularly for building safer and more secure communities in our urban environments. Cognitive tasks, such as identifying objects, recognizing actions, and detecting anomalous behaviors, can produce data capable of providing valuable insights to the community through statistical and analytical tools. However, the design of artificially intelligent surveillance systems requires special consideration of ethical challenges and concerns. The use and storage of personally identifiable information (PII) commonly pose an increased risk to personal privacy. To address these issues, this paper identifies the privacy concerns and requirements that need to be addressed when designing AI-enabled smart video surveillance. Further, we propose the first end-to-end AI-enabled privacy-preserving smart video surveillance system that holistically combines computer vision analytics, statistical data analytics, cloud-native services, and end-user applications. Finally, we propose quantitative and qualitative metrics to evaluate intelligent video surveillance systems. The system achieves 17.8 frames-per-second (FPS) processing in extreme video scenes. However, considering privacy in the design of such a system leads to preferring the pose-based algorithm over the pixel-based one. This choice reduces accuracy in both the action and anomaly detection tasks: the results drop from 97.48 to 73.72 in anomaly detection and from 96 to 83.07 in action detection. On average, the latency of the end-to-end system is 36.1 seconds.

12 May 2009

A hereditary property of graphs is a collection of graphs which is closed under taking induced subgraphs. The speed of $\mathcal{P}$ is the function $n \mapsto |\mathcal{P}_n|$, where $\mathcal{P}_n$ denotes the graphs of order $n$ in $\mathcal{P}$. It was shown by Alekseev, and by Bollobás and Thomason, that if $\mathcal{P}$ is a hereditary property of graphs then $|\mathcal{P}_n| = 2^{(1 - 1/r + o(1))n^2/2}$, where $r = r(\mathcal{P}) \in \mathbb{N}$ is the so-called "colouring number" of $\mathcal{P}$. However, their results tell us very little about the structure of a typical graph $G \in \mathcal{P}$.

In this paper we describe the structure of almost every graph in a hereditary property of graphs, $\mathcal{P}$. As a consequence, we derive essentially optimal bounds on the speed of $\mathcal{P}$, improving the Alekseev-Bollobás-Thomason Theorem, and also generalizing results of Balogh, Bollobás and Simonovits.

Mo Javed and Amit Maji. arXiv:2510.09614v1 [math.FA], 13 Sep 2025

In this paper, we establish the invertibility of the Berezin transform of the symbol as a necessary and sufficient condition for the invertibility of the Toeplitz operator on the Bergman space $L_a^2(\mathbb{D})$. More precisely, if $\phi = cg + d\bar{g}$, where $c, d \in \mathbb{C}$ and $g \in H^\infty(\mathbb{D})$, the space of all bounded analytic functions, then $T_\phi$ is invertible on $L_a^2(\mathbb{D})$ if and only if $\inf_{z \in \mathbb{D}} |\widetilde{\phi}(z)| = \inf_{z \in \mathbb{D}} |\phi(z)| > 0$, where $\widetilde{\phi}$ is the Berezin transform of $\phi$.

02 May 2017

Reiji Tomatsu. The author was partially supported by JSPS KAKENHI Grant Number 15K04889.

We study a relationship between the ultraproduct of a crossed product von Neumann algebra and the crossed product of an ultraproduct von Neumann algebra. As an application, the continuous core of an ultraproduct von Neumann algebra is described.

11 May 2021

Nikita N. Senik. The research was supported by Russian Science Foundation grant 17-11-01069.

Let $\Omega$ be a Lipschitz domain in $\mathbb{R}^d$, and let $\mathcal{A}^\varepsilon = -\operatorname{div} A(x, x/\varepsilon)\nabla$ be a strongly elliptic operator on $\Omega$. We suppose that $\varepsilon$ is small and the function $A$ is Lipschitz in the first variable and periodic in the second, so the coefficients of $\mathcal{A}^\varepsilon$ are locally periodic and rapidly oscillate. Given $\mu$ in the resolvent set, we are interested in finding the rates of approximations, as $\varepsilon \to 0$, for $(\mathcal{A}^\varepsilon - \mu)^{-1}$ and $\nabla(\mathcal{A}^\varepsilon - \mu)^{-1}$ in the operator topology on $L_p$ for suitable $p$. It is well-known that the rates depend on regularity of the effective operator $\mathcal{A}^0$. We prove that if $(\mathcal{A}^0 - \mu)^{-1}$ and its adjoint are bounded from $L_p(\Omega)^n$ to the Lipschitz--Besov space $\Lambda_p^{1+s}(\Omega)^n$ with $s \in (0, 1]$, then the rates are, respectively, $\varepsilon^s$ and $\varepsilon^{s/p}$. The results are applied to the Dirichlet, Neumann and mixed Dirichlet--Neumann problems for strongly elliptic operators with uniformly bounded and VMO coefficients.

We study the matrix completion problem that leverages hierarchical similarity graphs as side information in the context of recommender systems. Under a hierarchical stochastic block model that well respects practically-relevant social graphs and a low-rank rating matrix model, we characterize the exact information-theoretic limit on the number of observed matrix entries (i.e., optimal sample complexity) by proving sharp upper and lower bounds on the sample complexity. In the achievability proof, we demonstrate that the probability of error of the maximum likelihood estimator vanishes for a sufficiently large number of users and items, if all sufficient conditions are satisfied. On the other hand, the converse (impossibility) proof is based on the genie-aided maximum likelihood estimator. Under each necessary condition, we present examples of a genie-aided estimator to prove that the probability of error does not vanish for a sufficiently large number of users and items. One important consequence of this result is that exploiting the hierarchical structure of social graphs yields a substantial gain in sample complexity relative to an approach that simply identifies different groups without resorting to the relational structure across them. More specifically, we analyze the optimal sample complexity and identify different regimes whose characteristics rely on quality metrics of side information of the hierarchical similarity graph. Finally, we present simulation results to corroborate our theoretical findings and show that the characterized information-theoretic limit can be asymptotically achieved.

02 Nov 2020

We study the algebraic and geometric properties of stated skein algebras of surfaces with punctured boundary. We prove that the skein algebra of the bigon is isomorphic to the quantum group $\mathcal{O}_{q^2}(\mathrm{SL}(2))$, providing a topological interpretation for its structure morphisms. We also show that its stated skein algebra lifts in a suitable sense the Reshetikhin-Turaev functor, and in particular we recover the dual R-matrix for $\mathcal{O}_{q^2}(\mathrm{SL}(2))$ in a topological way. We deduce that the skein algebra of a surface with $n$ boundary components is an algebra-comodule over $\mathcal{O}_{q^2}(\mathrm{SL}(2))^{\otimes n}$ and prove that cutting along an ideal arc corresponds to Hochschild cohomology of bicomodules. We give a topological interpretation of the braided tensor product of stated skein algebras of surfaces as "glueing on a triangle"; then we recover topologically some braided bialgebras in the category of $\mathcal{O}_{q^2}(\mathrm{SL}(2))$-comodules, among which is the "transmutation" of $\mathcal{O}_{q^2}(\mathrm{SL}(2))$. We also provide an operadic interpretation of stated skein algebras as an example of a "geometric non symmetric modular operad". In the last part of the paper we define a reduced version of stated skein algebras and prove that it allows one to recover Bonahon-Wong's quantum trace map and to interpret skein algebras in the classical limit $q \to 1$ as regular functions over a suitable version of moduli spaces of twisted bundles.

Recent measurements of the Sunyaev-Zel'dovich (SZ) angular power spectrum from the South Pole Telescope (SPT) and the Atacama Cosmology Telescope (ACT) demonstrate the importance of understanding baryon physics when using the SZ power spectrum to constrain cosmology. This is challenging since roughly half of the SZ power at $\ell = 3000$ is from low-mass systems with $10^{13}\, h^{-1} M_\odot < M_{500} < 1.5 \times 10^{14}\, h^{-1} M_\odot$, which are more difficult to study than systems of higher mass. We present a study of the thermal pressure content for a sample of local galaxy groups from Sun et al. (2009). The group $Y_{\mathrm{sph},500} - M_{500}$ relation agrees with the one for clusters derived by Arnaud et al. (2010). The group median pressure profile also agrees with the universal pressure profile for clusters derived by Arnaud et al. (2010). With this in mind, we briefly discuss several ways to alleviate the tension between the measured low SZ power and the predictions from SZ templates.

24 Apr 2015

University of Wisconsin-Madison

Many astrophysical disks, such as protoplanetary disks, are in a regime where non-ideal, plasma-specific magnetohydrodynamic (MHD) effects can significantly influence the behavior of the magnetorotational instability (MRI). The possibility of studying these effects in the Plasma Couette Experiment (PCX) is discussed. An incompressible, dissipative global stability analysis is developed to include plasma-specific two-fluid effects and neutral collisions, which are inherently absent in analyses of Taylor-Couette flows (TCFs) in liquid metal experiments. It is shown that with boundary-driven flows, an ion-neutral collision drag body force significantly affects the azimuthal velocity profile, thus limiting the flows to a regime where the MRI is not present. Electrically driven flow (EDF) is proposed as an alternative body force flow drive in which the MRI can destabilize at more easily achievable plasma parameters. Scenarios for reaching MRI-relevant parameter space and necessary hardware upgrades are described.

27 Jan 2021

In this article, we provide explicit bounds for the prime counting function θ(x) in all ranges of x. The bounds for the error term for θ(x) − x are of the shape ϵx and c_k x/(log x)^k, for k = 1, …, 5. Tables of values for ϵ and c_k are provided.

19 Jun 2010

University of Minnesota

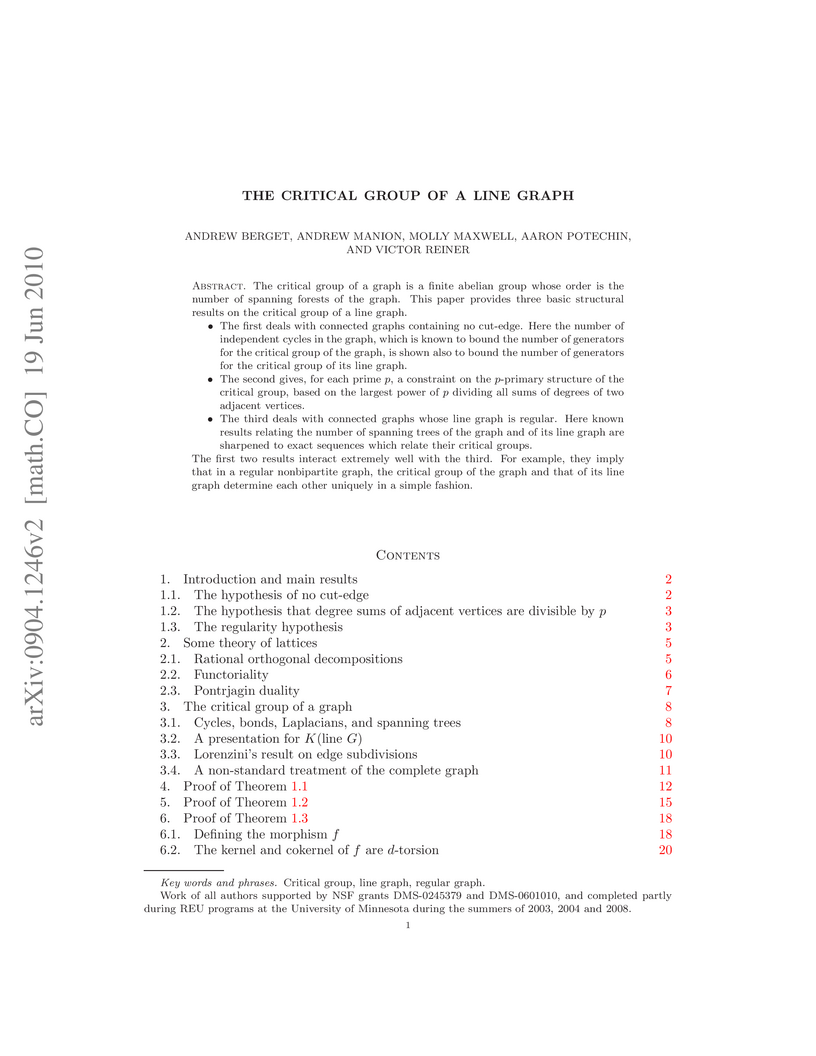

The critical group of a graph is a finite abelian group whose order is the number of spanning forests of the graph. This paper provides three basic structural results on the critical group of a line graph.

The first deals with connected graphs containing no cut-edge. Here the number of independent cycles in the graph, which is known to bound the number of generators for the critical group of the graph, is shown also to bound the number of generators for the critical group of its line graph.

The second gives, for each prime p, a constraint on the p-primary structure of the critical group, based on the largest power of p dividing all sums of degrees of two adjacent vertices.

The third deals with connected graphs whose line graph is regular. Here known results relating the number of spanning trees of the graph and of its line graph are sharpened to exact sequences which relate their critical groups.

The first two results interact extremely well with the third. For example, they imply that in a regular nonbipartite graph, the critical group of the graph and that of its line graph determine each other uniquely in a simple fashion.

Linköping University, University of Warsaw

The input in the Minimum-Cost Constraint Satisfaction Problem (MinCSP) over the Point Algebra contains a set of variables, a collection of constraints of the form x < y, x = y, x ≤ y and x ≠ y, and a budget k. The goal is to check whether it is possible to assign rational values to the variables while breaking constraints of total cost at most k. This problem generalizes several prominent graph separation and transversal problems: MinCSP(<) is equivalent to Directed Feedback Arc Set, MinCSP(<, ≤) is equivalent to Directed Subset Feedback Arc Set, MinCSP(=, ≠) is equivalent to Edge Multicut, and MinCSP(≤, ≠) is equivalent to Directed Symmetric Multicut. Apart from trivial cases, MinCSP(Γ) for Γ ⊆ {<, =, ≤, ≠} is NP-hard even to approximate within any constant factor under the Unique Games Conjecture. Hence, we study the parameterized complexity of this problem under a natural parameterization by the solution cost k. We obtain a complete classification: if Γ ⊆ {<, =, ≤, ≠} contains both ≤ and ≠, then MinCSP(Γ) is W[1]-hard; otherwise it is fixed-parameter tractable. For the positive cases, we solve MinCSP(<, =, ≠), generalizing the FPT results for Directed Feedback Arc Set and Edge Multicut as well as their weighted versions. Our algorithm works by reducing the problem to a Boolean MinCSP, which is in turn solved by flow augmentation. For the lower bounds, we prove that Directed Symmetric Multicut is W[1]-hard, solving an open problem.
