applications

07 Dec 2025

The proliferation of Large Language Models (LLMs) necessitates valid evaluation methods to provide guidance for both downstream applications and actionable future improvements. The Item Response Theory (IRT) model with Computerized Adaptive Testing has recently emerged as a promising framework for evaluating LLMs via their response accuracy. Beyond simple response accuracy, LLMs' chain of thought (CoT) lengths serve as a vital indicator of their reasoning ability. To leverage the CoT length information to assist the evaluation of LLMs, we propose the Latency-Response Theory (LaRT) model, which jointly models both the response accuracy and CoT length by introducing a key correlation parameter between the latent ability and the latent speed. We derive an efficient stochastic approximation Expectation-Maximization algorithm for parameter estimation. We establish rigorous identifiability results for the latent ability and latent speed parameters to ensure the statistical validity of their estimation. Through both theoretical asymptotic analyses and simulation studies, we demonstrate LaRT's advantages over IRT in terms of superior estimation accuracy and shorter confidence intervals for latent trait estimation. To evaluate LaRT in real data, we collect responses from diverse LLMs on popular benchmark datasets. We find that LaRT yields different LLM rankings than IRT and outperforms IRT across multiple key evaluation metrics including predictive power, item efficiency, ranking validity, and LLM evaluation efficiency. Code and data are available at this https URL.

This paper attempts a first analysis of citation distributions based on the genderedness of authors' first names. Following the extraction of first name and sex data from all human entity triplets contained in Wikidata, a first name genderedness table is first created based on compiled sex frequencies, then merged with bibliometric data from eponymous, US-affiliated authors. Comparisons of various cumulative distributions show that citation concentration fluctuations are highest at the opposite ends of the genderedness spectrum, as authors with very feminine and very masculine first names respectively get a lower and higher share of citations for every article published, irrespective of their contribution role.

09 Dec 2025

The Weibull distribution is a commonly adopted choice for modeling the survival of systems subject to maintenance over time. When only proxy indicators and censored observations are available, it becomes necessary to express the distribution's parameters as functions of time-dependent covariates. Deep neural networks provide the flexibility needed to learn complex relationships between these covariates and operational lifetime, thereby extending the capabilities of traditional regression-based models. Motivated by the analysis of a fleet of military vehicles operating in highly variable and demanding environments, as well as by the limitations observed in existing methodologies, this paper introduces WTNN, a new neural network-based modeling framework specifically designed for Weibull survival studies. The proposed architecture is specifically designed to incorporate qualitative prior knowledge regarding the most influential covariates, in a manner consistent with the shape and structure of the Weibull distribution. Through numerical experiments, we show that this approach can be reliably trained on proxy and right-censored data, and is capable of producing robust and interpretable survival predictions that can improve existing approaches.
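As a rough illustration of what training on right-censored data involves, the sketch below computes the standard Weibull negative log-likelihood with right-censoring. It is not the WTNN architecture: the function name `weibull_censored_nll` and the fixed `shape` and `scale` arguments are assumptions made for illustration, whereas in a neural model these parameters would be outputs of the network for each observation.

```python
import math


def weibull_censored_nll(times, events, shape, scale):
    """Negative log-likelihood of Weibull(shape, scale) with right-censoring.

    times  : observed durations (failure time or censoring time), all > 0
    events : 1 if the failure was observed, 0 if the record is right-censored
    """
    if shape <= 0 or scale <= 0:
        raise ValueError("shape and scale must be positive")
    nll = 0.0
    for t, observed in zip(times, events):
        z = (t / scale) ** shape  # cumulative hazard H(t) = (t / scale)^shape
        if observed:
            # Observed failure: contributes the log density log f(t).
            log_density = (
                math.log(shape)
                - math.log(scale)
                + (shape - 1.0) * (math.log(t) - math.log(scale))
                - z
            )
            nll -= log_density
        else:
            # Censored record: contributes the log survival log S(t) = -H(t).
            nll += z
    return nll
```

Censored records enter only through the survival function, which is why such a loss can use units that have not yet failed.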

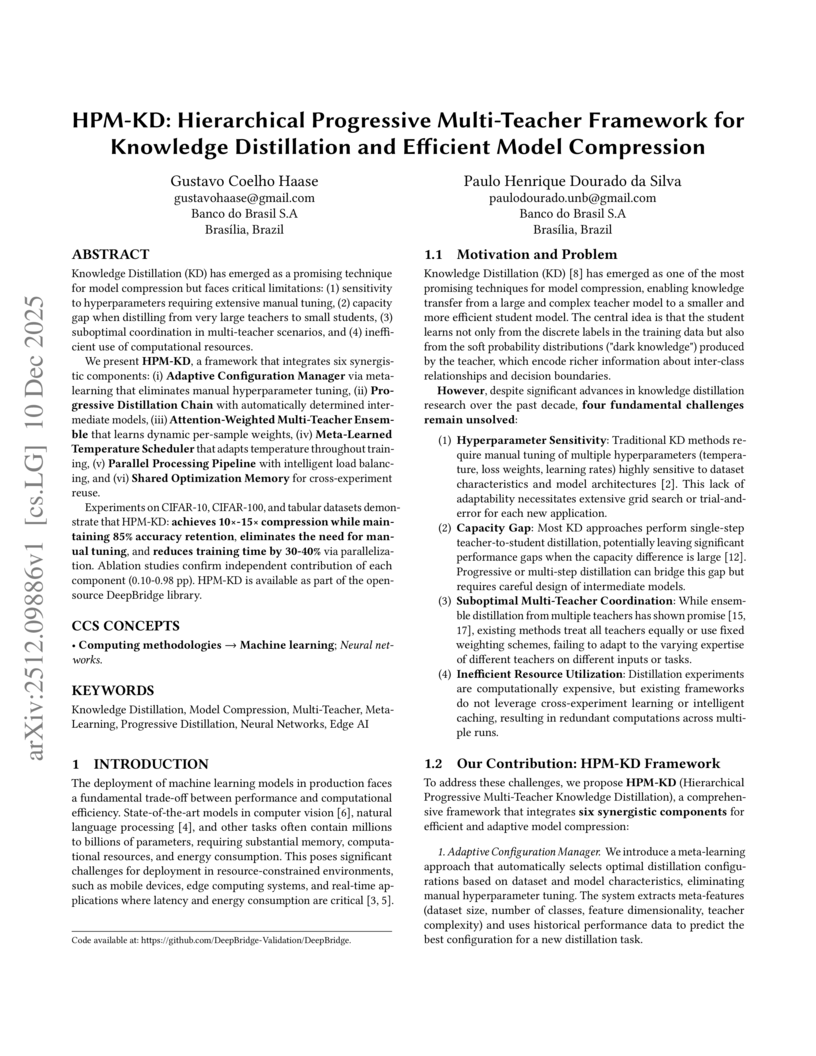

Knowledge Distillation (KD) has emerged as a promising technique for model compression but faces critical limitations: (1) sensitivity to hyperparameters requiring extensive manual tuning, (2) capacity gap when distilling from very large teachers to small students, (3) suboptimal coordination in multi-teacher scenarios, and (4) inefficient use of computational resources. We present HPM-KD, a framework that integrates six synergistic components: (i) Adaptive Configuration Manager via meta-learning that eliminates manual hyperparameter tuning, (ii) Progressive Distillation Chain with automatically determined intermediate models, (iii) Attention-Weighted Multi-Teacher Ensemble that learns dynamic per-sample weights, (iv) Meta-Learned Temperature Scheduler that adapts temperature throughout training, (v) Parallel Processing Pipeline with intelligent load balancing, and (vi) Shared Optimization Memory for cross-experiment reuse. Experiments on CIFAR-10, CIFAR-100, and tabular datasets demonstrate that HPM-KD achieves 10x-15x compression while maintaining 85% accuracy retention, eliminates the need for manual tuning, and reduces training time by 30-40% via parallelization. Ablation studies confirm the independent contribution of each component (0.10-0.98 pp). HPM-KD is available as part of the open-source DeepBridge library.

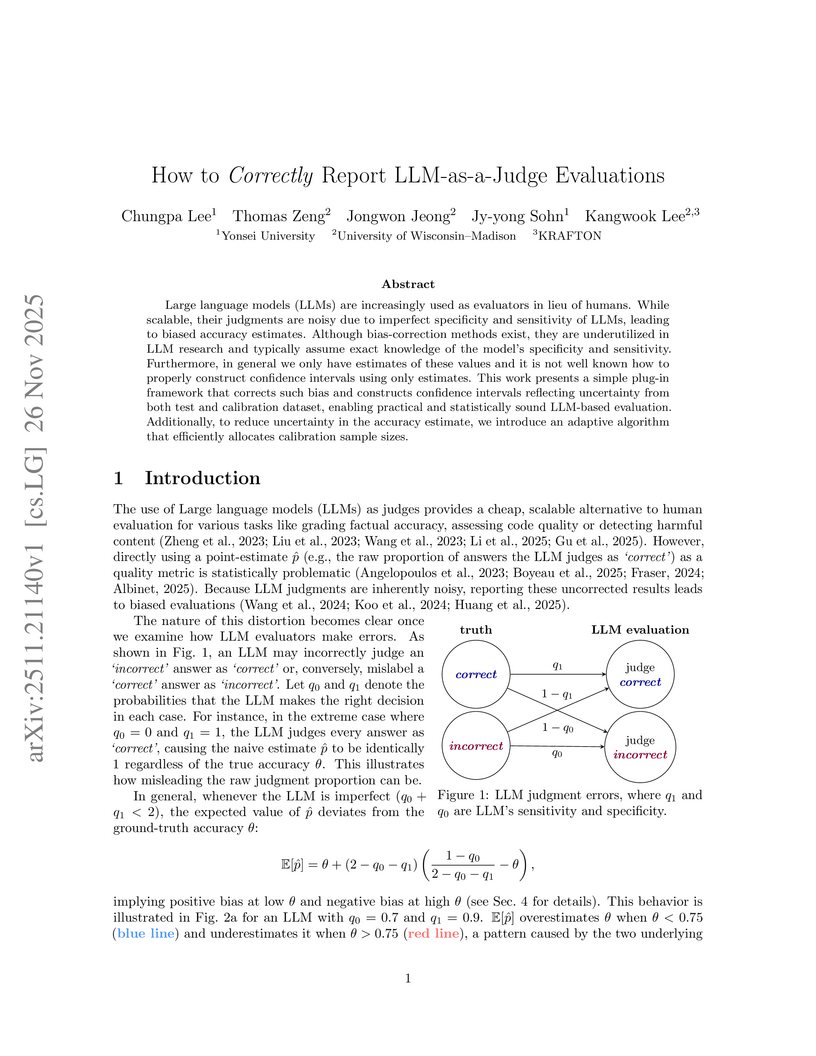

Researchers from Yonsei University and the University of Wisconsin–Madison developed a statistical framework to correct for bias and quantify uncertainty in Large Language Model (LLM)-as-a-Judge evaluations. The framework provides a bias-adjusted estimator for true model accuracy and constructs confidence intervals that account for uncertainty from both test and calibration datasets, demonstrating reliable coverage probabilities and reduced bias in simulations.
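To make the idea of bias adjustment concrete, the sketch below applies the classical misclassification correction (often called the Rogan-Gladen estimator) to a judge's raw "correct" rate. This is a standard technique shown under stated assumptions and may differ from the estimator in the paper; the function name and the `sensitivity` and `specificity` inputs (the judge's agreement rates with ground truth, which would be estimated on a calibration set) are hypothetical.

```python
def bias_adjusted_accuracy(p_judge, sensitivity, specificity):
    """Correct a judge-reported accuracy for the judge's own error rates.

    p_judge     : fraction of test answers the LLM judge marked correct
    sensitivity : P(judge says correct | answer is truly correct)
    specificity : P(judge says incorrect | answer is truly incorrect)
    """
    denom = sensitivity + specificity - 1.0
    if denom <= 0:
        raise ValueError("judge must perform better than chance")
    theta = (p_judge + specificity - 1.0) / denom
    # Clip to [0, 1], since sampling noise can push the raw estimate outside.
    return min(1.0, max(0.0, theta))
```

For example, if the true accuracy is 0.7 and the judge has sensitivity 0.9 and specificity 0.8, the judge reports 0.7 × 0.9 + 0.3 × 0.2 = 0.69, and the correction recovers 0.7. A full treatment would also propagate the uncertainty in the estimated sensitivity and specificity into the confidence interval, which this sketch does not attempt.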

10 Dec 2025

Decisions based upon pairwise comparisons of multiple treatments are naturally performed in terms of the mean survival of the selected study arms or functions thereof. However, synthesis of treatment comparisons is usually performed on surrogates of the mean survival, such as hazard ratios or restricted mean survival times. Thus, network meta-analysis techniques may suffer from the limitations of these approaches, such as incorrect proportional hazards assumption or short-term follow-up periods. We propose a Bayesian framework for the network meta-analysis of the main outcome informing the decision, the mean survival of a treatment. Its derivation involves extrapolation of the observed survival curves. We use methods for stable extrapolation that integrate long-term evidence based upon mortality projections. Extrapolations are performed using flexible poly-hazard parametric models and M-spline-based methods. We assess the computational and statistical efficiency of different techniques using a simulation study and apply the developed methods to two real data sets. The proposed method is formulated within a decision theoretic framework for cost-effectiveness analyses, where the 'best' treatment is to be selected and incorporating the associated cost information is straightforward.

10 Dec 2025

Understanding how vaccines perform against different pathogen genotypes is crucial for developing effective prevention strategies, particularly for highly genetically diverse pathogens like HIV. Sieve analysis is a statistical framework used to determine whether a vaccine selectively prevents acquisition of certain genotypes while allowing breakthrough of other genotypes that evade immune responses. Traditionally, these analyses are conducted with a single sequence available per individual acquiring the pathogen. However, modern sequencing technology can provide detailed characterization of intra-individual viral diversity by capturing up to hundreds of pathogen sequences per person. In this work, we introduce methodology that extends sieve analysis to account for intra-individual viral diversity. Our approach estimates vaccine efficacy against viral populations with varying true (unobservable) frequencies of vaccine-mismatched mutations. To account for differential resolution of information from differing sequence counts per person, we use competing risks Cox regression with modeled causes of failure and propose an empirical Bayes approach for the classification model. Simulation studies demonstrate that our approach reduces bias, provides nominal confidence interval coverage, and improves statistical power compared to conventional methods. We apply our method to the HVTN 705 Imbokodo trial, which assessed the efficacy of a heterologous vaccine regimen in preventing HIV-1 acquisition.

09 Dec 2025

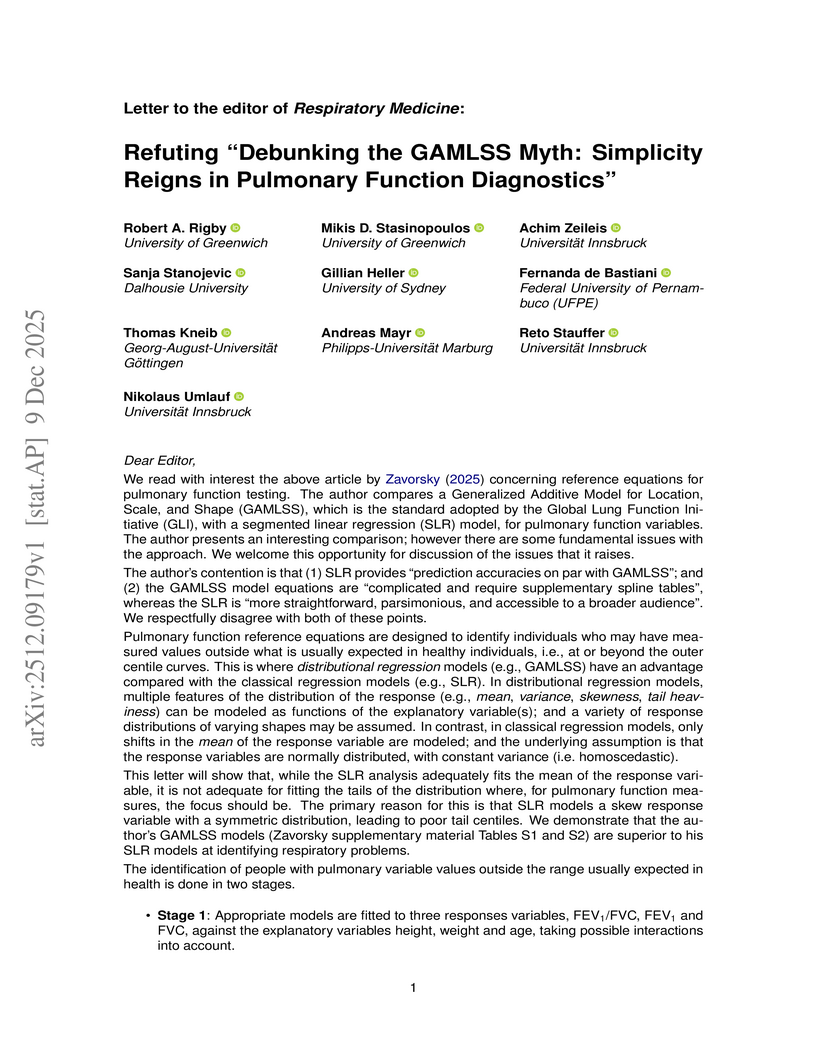

We read with interest the above article by Zavorsky (2025, Respiratory Medicine, doi:https://doi.org/10.1016/j.rmed.2024.107836) concerning reference equations for pulmonary function testing. The author compares a Generalized Additive Model for Location, Scale, and Shape (GAMLSS), which is the standard adopted by the Global Lung Function Initiative (GLI), with a segmented linear regression (SLR) model, for pulmonary function variables. The author presents an interesting comparison; however there are some fundamental issues with the approach. We welcome this opportunity for discussion of the issues that it raises. The author's contention is that (1) SLR provides "prediction accuracies on par with GAMLSS"; and (2) the GAMLSS model equations are "complicated and require supplementary spline tables", whereas the SLR is "more straightforward, parsimonious, and accessible to a broader audience". We respectfully disagree with both of these points.

10 Dec 2025

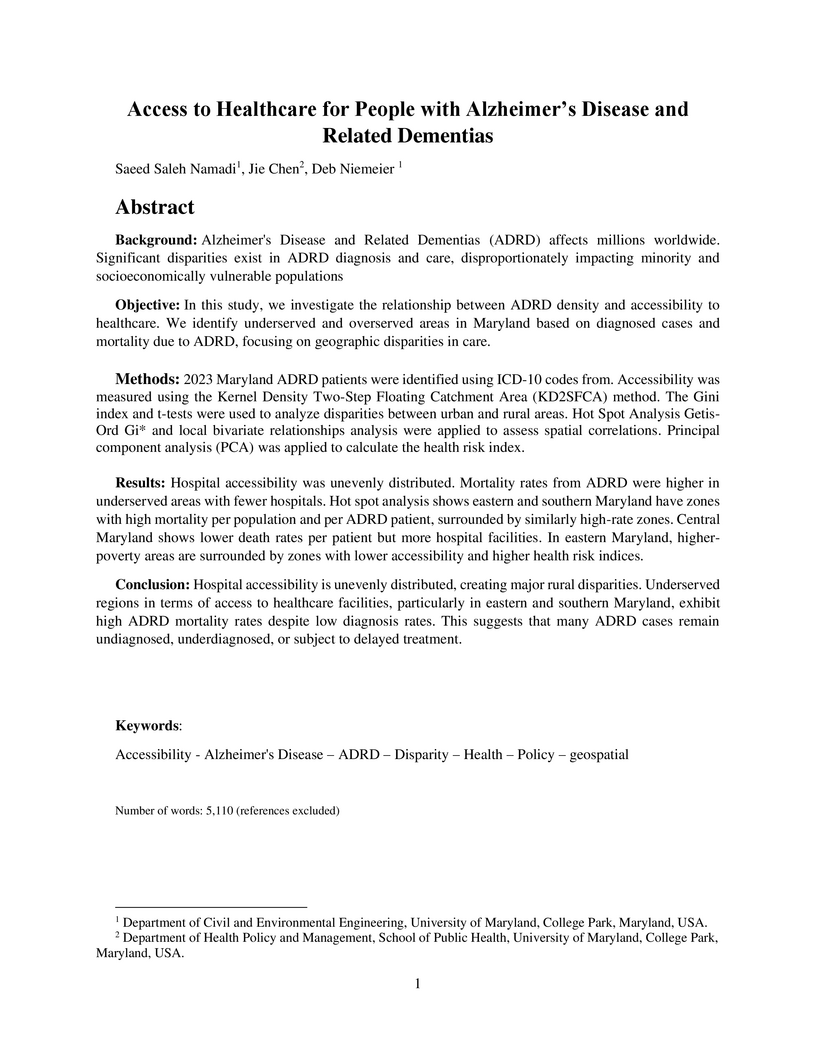

Background: Alzheimer's Disease and Related Dementias (ADRD) affect millions worldwide. Significant disparities exist in ADRD diagnosis and care, disproportionately impacting minority and socioeconomically vulnerable populations. Objective: In this study, we investigate the relationship between ADRD density and accessibility to healthcare. We identify underserved and overserved areas in Maryland based on diagnosed cases and mortality due to ADRD, focusing on geographic disparities in care. Methods: 2023 Maryland ADRD patients were identified using ICD-10 codes from. Accessibility was measured using the Kernel Density Two-Step Floating Catchment Area (KD2SFCA) method. The Gini index and t-tests were used to analyze disparities between urban and rural areas. Hot Spot Analysis (Getis-Ord Gi*) and local bivariate relationships analysis were applied to assess spatial correlations. Principal component analysis (PCA) was applied to calculate the health risk index. Results: Hospital accessibility was unevenly distributed. Mortality rates from ADRD were higher in underserved areas with fewer hospitals. Hot spot analysis shows eastern and southern Maryland have zones with high mortality per population and per ADRD patient, surrounded by similarly high-rate zones. Central Maryland shows lower death rates per patient but more hospital facilities. In eastern Maryland, higher poverty areas are surrounded by zones with lower accessibility and higher health risk indices. Conclusion: Hospital accessibility is unevenly distributed, creating major rural disparities. Underserved regions in terms of access to healthcare facilities, particularly in eastern and southern Maryland, exhibit high ADRD mortality rates despite low diagnosis rates. This suggests that many ADRD cases remain undiagnosed, underdiagnosed, or subject to delayed treatment.

Quantitatively characterizing the spatial organization of cells and their interaction is essential for understanding cancer progression and immune response. Recent advances in machine intelligence have enabled large-scale segmentation and classification of cell nuclei from digitized histopathology slides, generating massive point pattern and marked point pattern datasets. However, accessible tools for quantitative analysis of such complex cellular spatial organization remain limited. In this paper, we first review 27 traditional spatial summary statistics, areal indices, and topological features applicable to point pattern data. Then, we introduce SASHIMI (Spatial Analysis for Segmented Histopathology Images using Machine Intelligence), a browser-based tool for real-time spatial analysis of artificial intelligence (AI)-segmented histopathology images. SASHIMI computes a comprehensive suite of mathematically grounded descriptors, including spatial statistics, proximity-based measures, grid-level similarity indices, spatial autocorrelation measures, and topological descriptors, to quantify cellular abundance and cell-cell interaction. Applied to two cancer datasets, oral potentially malignant disorders (OPMD) and non-small-cell lung cancer (NSCLC), SASHIMI identified multiple spatial features significantly associated with patient survival outcomes. SASHIMI provides an accessible and reproducible platform for single-cell-level spatial profiling of tumor morphological architecture, offering a robust framework for quantitative exploration of tissue organization across cancer types.

08 Dec 2025

In the era of rapid development of artificial intelligence, its applications span diverse fields and rely heavily on effective data processing and model optimization. Combined Regularized Support Vector Machines (CR-SVMs) can effectively handle the structural information among data features, but efficient algorithms for big data stored in a distributed manner are lacking. To address this issue, we propose a unified optimization framework based on consensus structure. This framework is not only applicable to various loss functions and combined regularization terms but can also be effectively extended to non-convex regularization terms, showing strong scalability. Based on this framework, we develop a distributed parallel alternating direction method of multipliers (ADMM) algorithm to efficiently compute CR-SVMs when data is stored in a distributed manner. To ensure the convergence of the algorithm, we also introduce the Gaussian back-substitution method. Meanwhile, for completeness, we introduce a new model, the sparse group lasso support vector machine (SGL-SVM), and apply it to music information retrieval. Theoretical analysis confirms that the computational complexity of the proposed algorithm is not affected by different regularization terms and loss functions, highlighting the universality of the parallel algorithm. Experiments on synthetic and Free Music Archive datasets demonstrate the reliability, stability, and efficiency of the algorithm.

We investigate cosmological parameter inference and model selection from a Bayesian perspective. Type Ia supernova data from the Dark Energy Survey (DES-SN5YR) are used to test the ΛCDM, wCDM, and CPL cosmological models. Posterior inference is performed via Hamiltonian Monte Carlo using the No-U-Turn Sampler (NUTS) implemented in NumPyro and analyzed with ArviZ in Python. Bayesian model comparison is conducted through Bayes factors computed using the `bridgesampling` library in R. The results indicate that all three models demonstrate similar predictive performance, but wCDM shows stronger evidence relative to ΛCDM and CPL. We conclude that, under the assumptions and data used in this study, wCDM provides a better description of cosmological expansion.

Reliable regional climate information is essential for assessing the impacts of climate change and for planning in sectors such as renewable energy; yet, producing high-resolution projections through coordinated initiatives like CORDEX that run multiple physical regional climate models is both computationally demanding and difficult to organize. Machine learning emulators that learn the mapping between global and regional climate fields offer a promising way to address these limitations. Here we introduce the application of such an emulator: trained on CMIP5 and CORDEX simulations, it reproduces regional climate model data with sufficient accuracy. When applied to CMIP6 simulations not seen during training, it also produces realistic results, indicating stable performance. Using CORDEX data, CMIP5 and CMIP6 simulations, as well as regional data generated by two machine learning models, we analyze the co-occurrence of low wind speed and low solar radiation and find indications that the number of such energy drought days is likely to decrease in the future. Our results highlight that downscaling with machine learning emulators provides an efficient complement to efforts such as CORDEX, supplying the higher-resolution information required for impact assessments.

10 Dec 2025

To better fit real-world datasets, the study of asymmetric distributions is of great interest. In this work, we derive several mathematical properties of a general class of asymmetric distributions with positive support, which arises as a unified framework for Extreme Value Theory asymptotic results. The new model generalizes some well-known distribution models such as Generalized Gamma, Inverse Gamma, Weibull, Fréchet, Half-normal, Modified half-normal, Rayleigh, and Erlang. To highlight the applicability of our results, the performance of the analytical models is evaluated through real-life dataset modeling.

06 Dec 2025

We propose a statistical framework built on latent variable modeling for scaling laws of large language models (LLMs). Our work is motivated by the rapid emergence of numerous new LLM families with distinct architectures and training strategies, evaluated on an increasing number of benchmarks. This heterogeneity makes a single global scaling curve inadequate for capturing how performance varies across families and benchmarks. To address this, we propose a latent variable modeling framework in which each LLM family is associated with a latent variable that captures the common underlying features in that family. An LLM's performance on different benchmarks is then driven by its latent skills, which are jointly determined by the latent variable and the model's own observable features. We develop an estimation procedure for this latent variable model and establish its statistical properties. We also design efficient numerical algorithms that support estimation and various downstream tasks. Empirically, we evaluate the approach on 12 widely used benchmarks from the Open LLM Leaderboard (v1/v2).

06 Dec 2025

This paper studies whether, how, and for whom generative artificial intelligence (GenAI) facilitates firm creation. Our identification strategy exploits the November 2022 release of ChatGPT as a global shock that lowered start-up costs and leverages variations across geo-coded grids with differential pre-existing AI-specific human capital. Using high-resolution and universal data on Chinese firm registrations by the end of 2024, we find that grids with stronger AI-specific human capital experienced a sharp surge in new firm formation, driven entirely by small firms, contributing to 6.0% of overall national firm entry. Large-firm entry declines, consistent with a shift toward leaner ventures. New firms are smaller in capital, shareholder number, and founding team size, especially among small firms. The effects are strongest among firms with potential AI applications, weaker financing needs, and among first-time entrepreneurs. Overall, our results highlight that GenAI serves as a pro-competitive force by disproportionately boosting small-firm entry.

Correlations in complex systems are often obscured by nonstationarity, long-range memory, and heavy-tailed fluctuations, which limit the usefulness of traditional covariance-based analyses. To address these challenges, we construct scale- and fluctuation-dependent correlation matrices using the multifractal detrended cross-correlation coefficient ρ_r, which selectively emphasizes fluctuations of different amplitudes. We examine the spectral properties of these detrended correlation matrices and compare them to the spectral properties of matrices calculated in the same way from synthetic Gaussian and q-Gaussian signals. Our results show that detrending, heavy tails, and the fluctuation-order parameter r jointly produce spectra that substantially depart from the random case even in the absence of cross-correlations in the time series. Applying this framework to one-minute returns of 140 major cryptocurrencies from 2021 to 2024 reveals robust collective modes, including a dominant market factor and several sectoral components whose strength depends on the analyzed scale and fluctuation order. After filtering out the market mode, the empirical eigenvalue bulk aligns closely with the limit of random detrended cross-correlations, enabling clear identification of structurally significant outliers. Overall, the study provides a refined spectral baseline for detrended cross-correlations and offers a promising tool for distinguishing genuine interdependencies from noise in complex, nonstationary, heavy-tailed systems.

28 Nov 2025

Researchers from Technische Universität München and UC Berkeley introduce a causal inference framework to transition from descriptive "what-is" analyses to interventional "what-if" insights in human-factor analysis for post-occupancy evaluations. Applying this approach to a CBE Occupant Survey dataset, they reduced the analytical scope from 124 to 28 causally associated factors, revealing a causal hierarchy and identifying "satisfaction with glare and reflections on screens" as a primary causal driver for overall satisfaction, enabling more targeted interventions.

02 Dec 2025

Classification of functional data where observations are curves or trajectories poses unique challenges, particularly under severe class imbalance. Traditional Random Forest algorithms, while robust for tabular data, often fail to capture the intrinsic structure of functional observations and struggle with minority class detection. This paper introduces Functional Random Forest with Adaptive Cost-Sensitive Splitting (FRF-ACS), a novel ensemble framework designed for imbalanced functional data classification. The proposed method leverages basis expansions and Functional Principal Component Analysis (FPCA) to represent curves efficiently, enabling trees to operate on low dimensional functional features. To address imbalance, we incorporate a dynamic cost sensitive splitting criterion that adjusts class weights locally at each node, combined with a hybrid sampling strategy integrating functional SMOTE and weighted bootstrapping. Additionally, curve specific similarity metrics replace traditional Euclidean measures to preserve functional characteristics during leaf assignment. Extensive experiments on synthetic and real world datasets including biomedical signals and sensor trajectories demonstrate that FRF-ACS significantly improves minority class recall and overall predictive performance compared to existing functional classifiers and imbalance handling techniques. This work provides a scalable, interpretable solution for high dimensional functional data analysis in domains where minority class detection is critical.

01 Dec 2025

The beginning of the rainy season and the occurrence of dry spells in West Africa are notoriously difficult to predict; however, these are the key indicators farmers use to decide when to plant crops, and they have a major influence on overall yield. While many studies have shown correlations between global sea surface temperatures and characteristics of the West African monsoon season, few effectively implement this information in machine learning (ML) prediction models. In this study we investigated the best ways to define our target variables, onset and dry spell, and produced methods to predict them for upcoming seasons using sea surface temperature teleconnections. Defining our target variables required the use of a combination of two well-known definitions of onset. We then applied custom statistical techniques, such as total variation regularization and predictor selection, to the two models we constructed, the first being a linear model and the other an adaptive-threshold logistic regression model. We found mixed results for onset prediction, with spatial verification showing signs of significant skill, while temporal verification showed little to none. For dry spells, though, we found significant accuracy through the analysis of multiple binary classification metrics. These models overcome some limitations of current approaches, such as being computationally intensive and needing bias correction. We also introduce this study as a framework for using ML methods for targeted prediction of certain weather phenomena using climatologically relevant variables. As we apply ML techniques to more problems, we see clear benefits for fields like meteorology and lay out a few new directions for further research.
