L3Cube Labs

This research provides an empirical evaluation of various Large Language Models (LLMs) as annotators for Marathi, a low-resource language, across multiple classification tasks. The findings indicate that current LLMs consistently underperform fine-tuned BERT-based models, highlighting limitations in their ability to reliably generate high-quality annotations for complex tasks in low-resource linguistic contexts.

Semantic evaluation in low-resource languages remains a major challenge in NLP. While sentence transformers have shown strong performance in high-resource settings, their effectiveness in Indic languages is underexplored due to a lack of high-quality benchmarks. To bridge this gap, we introduce L3Cube-IndicHeadline-ID, a curated headline identification dataset spanning ten low-resource Indic languages: Marathi, Hindi, Tamil, Gujarati, Odia, Kannada, Malayalam, Punjabi, Telugu, Bengali and English. Each language includes 20,000 news articles paired with four headline variants: the original, a semantically similar version, a lexically similar version, and an unrelated one, designed to test fine-grained semantic understanding. The task requires selecting the correct headline from the options using article-headline similarity. We benchmark several sentence transformers, including multilingual and language-specific models, using cosine similarity. Results show that multilingual models consistently perform well, while language-specific models vary in effectiveness. Given the rising use of similarity models in Retrieval-Augmented Generation (RAG) pipelines, this dataset also serves as a valuable resource for evaluating and improving semantic understanding in such applications. Additionally, the dataset can be repurposed for multiple-choice question answering, headline classification, or other task-specific evaluations of LLMs, making it a versatile benchmark for Indic NLP. The dataset is shared publicly at this https URL
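
The selection step described above, picking the headline whose embedding is closest to the article embedding, can be sketched as follows. This is a minimal illustration only: the function names `cosine` and `pick_headline` and the toy 3-dimensional vectors are assumptions made for the example, and a real run would use embeddings produced by a sentence transformer.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # treat a zero vector as dissimilar to everything
    return dot / (norm_u * norm_v)

def pick_headline(article_vec, headline_vecs):
    """Return the index of the candidate headline most similar to the article."""
    scores = [cosine(article_vec, h) for h in headline_vecs]
    return max(range(len(scores)), key=scores.__getitem__)

# Toy embeddings, made up for illustration (not model outputs).
article = [0.9, 0.1, 0.3]
candidates = [
    [0.8, 0.2, 0.4],    # original headline
    [0.6, 0.5, 0.1],    # semantically similar variant
    [0.1, 0.9, 0.2],    # lexically similar variant
    [-0.5, 0.1, -0.7],  # unrelated headline
]
best = pick_headline(article, candidates)
print("selected candidate index:", best)
```

A prediction counts as correct when the selected index points at the original headline, so accuracy over the dataset is the fraction of articles for which that happens.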

This study examines the effectiveness of layer pruning in creating efficient Sentence BERT (SBERT) models. Our goal is to create smaller sentence embedding models that reduce complexity while maintaining strong embedding similarity. We assess BERT models like MuRIL and MahaBERT-v2 before and after pruning, comparing them with smaller, scratch-trained models like MahaBERT-Small and MahaBERT-Smaller. Through a two-phase SBERT fine-tuning process involving Natural Language Inference (NLI) and Semantic Textual Similarity (STS), we evaluate the impact of layer reduction on embedding quality. Our findings show that pruned models, despite fewer layers, perform competitively with fully layered versions. Moreover, pruned models consistently outperform similarly sized, scratch-trained models, establishing layer pruning as an effective strategy for creating smaller, efficient embedding models. These results highlight layer pruning as a practical approach for reducing computational demand while preserving high-quality embeddings, making SBERT models more accessible for languages with limited technological resources.

Text and topic classification datasets for the low-resource Marathi language are scarce. Existing datasets typically have fewer than 4 target labels, and on some of them models already achieve nearly perfect accuracy. In this work, we introduce L3Cube-MahaNews, a Marathi text classification corpus that focuses on news headlines and articles. This corpus stands out as the largest supervised Marathi corpus, containing over 1.05 lakh (105,000) records classified into a diverse range of 12 categories. To accommodate different document lengths, MahaNews comprises three supervised datasets specifically designed for short text, long documents, and medium paragraphs. The consistent labeling across these datasets facilitates document length-based analysis. We provide detailed data statistics and baseline results on these datasets using state-of-the-art pre-trained BERT models. We conduct a comparative analysis between monolingual and multilingual BERT models, including MahaBERT, IndicBERT, and MuRIL. The monolingual MahaBERT model outperforms all others on every dataset. These resources also serve as Marathi topic classification datasets or models and are publicly available at this https URL .

Long document classification poses challenges due to the computational limitations of transformer-based models, particularly BERT, which are constrained by fixed input lengths and quadratic attention complexity. Moreover, using the full document for classification is often redundant, as only a subset of sentences typically carries the necessary information. To address this, we propose a TF-IDF-based sentence ranking method that improves efficiency by selecting the most informative content. Our approach explores fixed-count and percentage-based sentence selection, along with an enhanced scoring strategy combining normalized TF-IDF scores and sentence length. Evaluated on the MahaNews LDC dataset of long Marathi news articles, the method consistently outperforms baselines such as first, last, and random sentence selection. With MahaBERT-v2, we achieve near-identical classification accuracy with just a 0.33 percent drop compared to the full-context baseline, while reducing input size by over 50 percent and inference latency by 43 percent. This demonstrates that significant context reduction is possible without sacrificing performance, making the method practical for real-world long document classification tasks.
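
The sentence-ranking idea above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the method from the paper: tokens come from whitespace splitting, each sentence is treated as its own "document" when computing IDF, a sentence's score is the mean TF-IDF weight of its distinct tokens, and the length-aware scoring mentioned above is not reproduced. The names `rank_sentences` and `select_top` are hypothetical.

```python
import math
from collections import Counter

def rank_sentences(sentences):
    """Score each sentence by the mean TF-IDF weight of its distinct tokens."""
    tokenized = [s.lower().split() for s in sentences]
    n = len(tokenized)
    # Document frequency: number of sentences that contain each token.
    df = Counter(tok for toks in tokenized for tok in set(toks))
    scores = []
    for toks in tokenized:
        if not toks:
            scores.append(0.0)
            continue
        tf = Counter(toks)
        total = sum((count / len(toks)) * math.log(n / df[tok])
                    for tok, count in tf.items())
        scores.append(total / len(tf))
    return scores

def select_top(sentences, k=None, fraction=None):
    """Keep the k (or top `fraction` of) best sentences, in document order."""
    if k is None:
        if fraction is None:
            raise ValueError("pass either k or fraction")
        k = max(1, math.ceil(len(sentences) * fraction))
    scores = rank_sentences(sentences)
    top = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)[:k]
    return [sentences[i] for i in sorted(top)]

# Toy English sentences standing in for a long article.
doc = [
    "the match was played today",
    "the the the the",
    "rain stopped the match in pune",
]
reduced = select_top(doc, k=2)         # fixed-count selection
half = select_top(doc, fraction=0.5)   # percentage-based selection
print(reduced)
```

Restoring document order after ranking keeps the reduced input readable for the downstream classifier; whether that matters in practice would need to be checked against the task.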

We present MahaSTS, a human-annotated Sentence Textual Similarity (STS) dataset for Marathi, along with MahaSBERT-STS-v2, a fine-tuned Sentence-BERT model optimized for regression-based similarity scoring. The MahaSTS dataset consists of 16,860 Marathi sentence pairs labeled with continuous similarity scores in the range of 0-5. To ensure balanced supervision, the dataset is uniformly distributed across six score-based buckets spanning the full 0-5 range, thus reducing label bias and enhancing model stability. We fine-tune the MahaSBERT model on this dataset and benchmark its performance against other alternatives like MahaBERT, MuRIL, IndicBERT, and IndicSBERT. Our experiments demonstrate that MahaSTS enables effective training for sentence similarity tasks in Marathi, highlighting the impact of human-curated annotations, targeted fine-tuning, and structured supervision in low-resource settings. The dataset and model are publicly shared at this https URL

We present the MahaSUM dataset, a large-scale collection of diverse news articles in Marathi, designed to facilitate the training and evaluation of models for abstractive summarization tasks in Indic languages. The dataset, containing 25k samples, was created by scraping articles from a wide range of online news sources and manually verifying the abstract summaries. Additionally, we train an IndicBART model, a variant of the BART model tailored for Indic languages, using the MahaSUM dataset. We evaluate the performance of our trained models on the task of abstractive summarization and demonstrate their effectiveness in producing high-quality summaries in Marathi. Our work contributes to the advancement of natural language processing research in Indic languages and provides a valuable resource for future research in this area using state-of-the-art models. The dataset and models are shared publicly at this https URL

Code-mixing is the practice of using two or more languages in a single sentence, which often occurs in multilingual communities such as India where people commonly speak multiple languages. Classic NLP tools, trained on monolingual data, face challenges when dealing with code-mixed data. Extracting meaningful information from sentences containing multiple languages becomes difficult, particularly in tasks like hate speech detection, due to linguistic variation, cultural nuances, and data sparsity. To address this, we aim to analyze the significance of code-mixed embeddings and evaluate the performance of BERT and HingBERT models (trained on a Hindi-English corpus) in hate speech detection. Our study demonstrates that HingBERT models, benefiting from training on the extensive Hindi-English dataset L3Cube-HingCorpus, outperform BERT models when tested on hate speech text datasets. We also found that code-mixed Hing-FastText performs better than standard English FastText and vanilla BERT models.

Natural Language Processing (NLP) for low-resource languages, which lack large annotated datasets, faces significant challenges due to limited high-quality data and linguistic resources. The selection of embeddings plays a critical role in achieving strong performance in NLP tasks. While contextual BERT embeddings require a full forward pass, non-contextual BERT embeddings rely only on table lookup. Existing research has primarily focused on contextual BERT embeddings, leaving non-contextual embeddings largely unexplored. In this study, we analyze the effectiveness of non-contextual embeddings from BERT models (MuRIL and MahaBERT) and FastText models (IndicFT and MahaFT) for tasks such as news classification, sentiment analysis, and hate speech detection in one such low-resource language, Marathi. We compare these embeddings with their contextual and compressed variants. Our findings indicate that non-contextual BERT embeddings extracted from the model's first embedding layer outperform FastText embeddings, presenting a promising alternative for low-resource NLP.

The rapid progress in question-answering (QA) systems has predominantly benefited high-resource languages, leaving Indic languages largely underrepresented despite their vast native speaker base. In this paper, we present IndicSQuAD, a comprehensive multi-lingual extractive QA dataset covering nine major Indic languages, systematically derived from the SQuAD dataset. Building on previous work with MahaSQuAD for Marathi, our approach adapts and extends translation techniques to maintain high linguistic fidelity and accurate answer-span alignment across diverse languages. IndicSQuAD comprises extensive training, validation, and test sets for each language, providing a robust foundation for model development. We evaluate baseline performances using language-specific monolingual BERT models and the multilingual MuRIL-BERT. The results indicate some challenges inherent in low-resource settings. Moreover, our experiments suggest potential directions for future work, including expanding to additional languages, developing domain-specific datasets, and incorporating multimodal data. The dataset and models are publicly shared at this https URL

L3Cube-IndicNews: News-based Short Text and Long Document Classification Datasets in Indic Languages

In this work, we introduce L3Cube-IndicNews, a multilingual text classification corpus aimed at curating a high-quality dataset for Indian regional languages, with a specific focus on news headlines and articles. We have centered our work on 10 prominent Indic languages, including Hindi, Bengali, Marathi, Telugu, Tamil, Gujarati, Kannada, Odia, Malayalam, and Punjabi. Each of these news datasets comprises 10 or more classes of news articles. L3Cube-IndicNews offers 3 distinct datasets tailored to handle different document lengths that are classified as: Short Headlines Classification (SHC) dataset containing the news headline and news category, Long Document Classification (LDC) dataset containing the whole news article and the news category, and Long Paragraph Classification (LPC) containing sub-articles of the news and the news category. We maintain consistent labeling across all 3 datasets for in-depth length-based analysis. We evaluate each of these Indic language datasets using 4 different models including monolingual BERT, multilingual Indic Sentence BERT (IndicSBERT), and IndicBERT. This research contributes significantly to expanding the pool of available text classification datasets and also makes it possible to develop topic classification models for Indian regional languages. This also serves as an excellent resource for cross-lingual analysis owing to the high overlap of labels among languages. The datasets and models are shared publicly at this https URL

Named Entity Recognition (NER) in code-mixed text, particularly Hindi-English (Hinglish), presents unique challenges due to informal structure, transliteration, and frequent language switching. This study conducts a comparative evaluation of code-mixed fine-tuned models and non-code-mixed multilingual models, along with zero-shot generative large language models (LLMs). Specifically, we evaluate HingBERT, HingMBERT, and HingRoBERTa (trained on code-mixed data), and BERT Base Cased, IndicBERT, RoBERTa and MuRIL (trained on non-code-mixed multilingual data). We also assess the performance of Google Gemini in a zero-shot setting using a modified version of the dataset with NER tags removed. All models are tested on a benchmark Hinglish NER dataset using Precision, Recall, and F1-score. Results show that code-mixed models, particularly HingRoBERTa and HingBERT-based fine-tuned models, outperform others - including closed-source LLMs like Google Gemini - due to domain-specific pretraining. Non-code-mixed models perform reasonably but show limited adaptability. Notably, Google Gemini exhibits competitive zero-shot performance, underlining the generalization strength of modern LLMs. This study provides key insights into the effectiveness of specialized versus generalized models for code-mixed NER tasks.

Emotion recognition in low-resource languages like Marathi remains challenging due to limited annotated data. We present L3Cube-MahaEmotions, a high-quality Marathi emotion recognition dataset with 11 fine-grained emotion labels. The training data is synthetically annotated using large language models (LLMs), while the validation and test sets are manually labeled to serve as a reliable gold-standard benchmark. Building on the MahaSent dataset, we apply the Chain-of-Translation (CoTR) prompting technique, where Marathi sentences are translated into English and emotion labeled via a single prompt. GPT-4 and Llama3-405B were evaluated, with GPT-4 selected for training data annotation due to superior label quality. We evaluate model performance using standard metrics and explore label aggregation strategies (e.g., Union, Intersection). While GPT-4 predictions outperform fine-tuned BERT models, BERT-based models trained on synthetic labels fail to surpass GPT-4. This highlights both the importance of high-quality human-labeled data and the inherent complexity of emotion recognition. An important finding of this work is that generic LLMs like GPT-4 and Llama3-405B generalize better than fine-tuned BERT for complex low-resource emotion recognition tasks. The dataset and model are shared publicly at this https URL
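
The Union and Intersection aggregation strategies mentioned above can be illustrated with plain set operations. This sketch assumes the strategies combine the label sets that two annotators (for example, two LLMs) assign to the same sentence; the source does not spell out the details, and the function name `aggregate_labels` and the example labels are hypothetical.

```python
def aggregate_labels(labels_a, labels_b, strategy="union"):
    """Combine two annotators' label sets for one sentence."""
    a, b = set(labels_a), set(labels_b)
    if strategy == "union":
        merged = a | b   # keep a label if either annotator chose it
    elif strategy == "intersection":
        merged = a & b   # keep a label only if both annotators chose it
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return sorted(merged)

# Hypothetical labels from two annotators for the same sentence.
annotator_1 = ["joy", "surprise"]
annotator_2 = ["joy", "pride"]
union_labels = aggregate_labels(annotator_1, annotator_2, "union")
common_labels = aggregate_labels(annotator_1, annotator_2, "intersection")
print(union_labels, common_labels)
```

Union favors recall (more labels survive), while intersection favors precision (only agreed-upon labels survive).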

For green AI, it is crucial to measure and reduce the carbon footprint emitted during the training of large language models. In NLP, performing pre-training on Transformer models requires significant computational resources. This pre-training involves using a large amount of text data to gain prior knowledge for performing downstream tasks. Thus, it is important that we select the correct data in the form of domain-specific data from this vast corpus to achieve optimum results aligned with our domain-specific tasks. While training on large unsupervised data is expensive, it can be optimized by performing a data selection step before pretraining. Selecting important data reduces the space overhead and the substantial amount of time required to pre-train the model while maintaining constant accuracy. We investigate the existing selection strategies and propose our own domain-adaptive data selection method - TextGram - that effectively selects essential data from large corpora. We compare and evaluate the results of finetuned models for text classification task with and without data selection. We show that the proposed strategy works better compared to other selection methods.

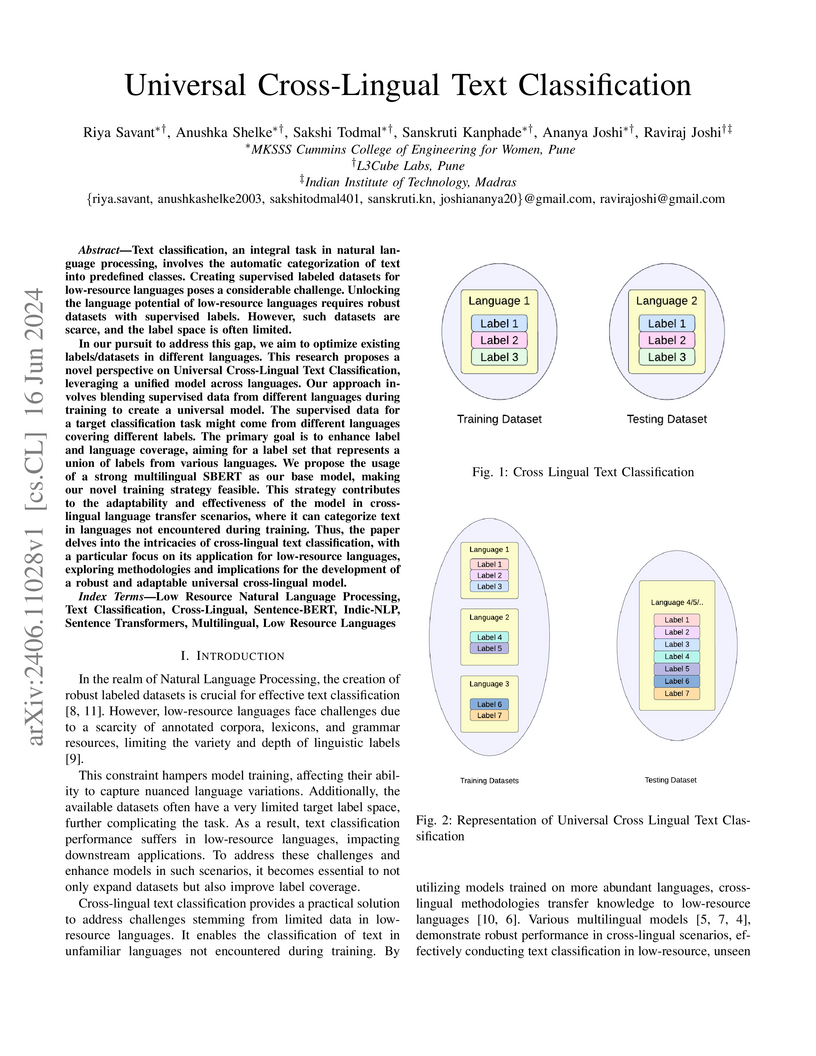

Text classification, an integral task in natural language processing, involves the automatic categorization of text into predefined classes. Creating supervised labeled datasets for low-resource languages poses a considerable challenge. Unlocking the language potential of low-resource languages requires robust datasets with supervised labels. However, such datasets are scarce, and the label space is often limited. In our pursuit to address this gap, we aim to optimize existing labels/datasets in different languages. This research proposes a novel perspective on Universal Cross-Lingual Text Classification, leveraging a unified model across languages. Our approach involves blending supervised data from different languages during training to create a universal model. The supervised data for a target classification task might come from different languages covering different labels. The primary goal is to enhance label and language coverage, aiming for a label set that represents a union of labels from various languages. We propose the usage of a strong multilingual SBERT as our base model, making our novel training strategy feasible. This strategy contributes to the adaptability and effectiveness of the model in cross-lingual language transfer scenarios, where it can categorize text in languages not encountered during training. Thus, the paper delves into the intricacies of cross-lingual text classification, with a particular focus on its application for low-resource languages, exploring methodologies and implications for the development of a robust and adaptable universal cross-lingual model.

Contrastive learning has proven to be an effective method for pre-training models using weakly labeled data in the vision domain. Sentence transformers are the NLP counterparts to this architecture, and have been growing in popularity due to their rich and effective sentence representations. Having effective sentence representations is paramount in multiple tasks, such as information retrieval, retrieval augmented generation (RAG), and sentence comparison. Given how widely transformers are deployed, evaluating the robustness of sentence transformers is of utmost importance. This work focuses on evaluating the robustness of sentence encoders. We employ several adversarial attacks to evaluate their robustness: character-level attacks in the form of random character substitution, word-level attacks in the form of synonym replacement, and sentence-level attacks in the form of intra-sentence word order shuffling. The results of the experiments call the robustness of sentence encoders into serious question. The models produce significantly different predictions as well as embeddings on perturbed datasets. The accuracy of the models can fall by up to 15 percent on perturbed datasets as compared to unperturbed datasets. Furthermore, the experiments demonstrate that these embeddings do capture the semantic and syntactic structure (sentence order) of sentences. However, existing supervised classification strategies fail to leverage this information, and merely function as n-gram detectors.
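
The three perturbation types named above can be sketched as below. This is an illustrative approximation rather than the attack code used in the study: the function names, the substitution rate, and the tiny synonym table are all assumptions, and a real word-level attack would draw synonyms from a proper lexical resource.

```python
import random
import string

def substitute_chars(text, rate, rng):
    """Character-level: replace each letter with a random lowercase ASCII letter with probability `rate`."""
    out = []
    for ch in text:
        if ch.isalpha() and rng.random() < rate:
            out.append(rng.choice(string.ascii_lowercase))
        else:
            out.append(ch)
    return "".join(out)

def replace_synonyms(sentence, synonyms):
    """Word-level: swap each word that has an entry in the synonym table."""
    return " ".join(synonyms.get(word, word) for word in sentence.split())

def shuffle_words(sentence, rng):
    """Sentence-level: shuffle word order within the sentence."""
    words = sentence.split()
    rng.shuffle(words)
    return " ".join(words)

rng = random.Random(0)  # fixed seed so runs are repeatable
original = "the quick fox jumps over the lazy dog"
toy_synonyms = {"quick": "fast", "lazy": "idle"}  # hypothetical lexicon

char_attacked = substitute_chars(original, 0.2, rng)
word_attacked = replace_synonyms(original, toy_synonyms)
order_attacked = shuffle_words(original, rng)
print(char_attacked, word_attacked, order_attacked, sep="\n")
```

Robustness is then measured by comparing a model's predictions and embeddings on the original sentences against those on the perturbed versions.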

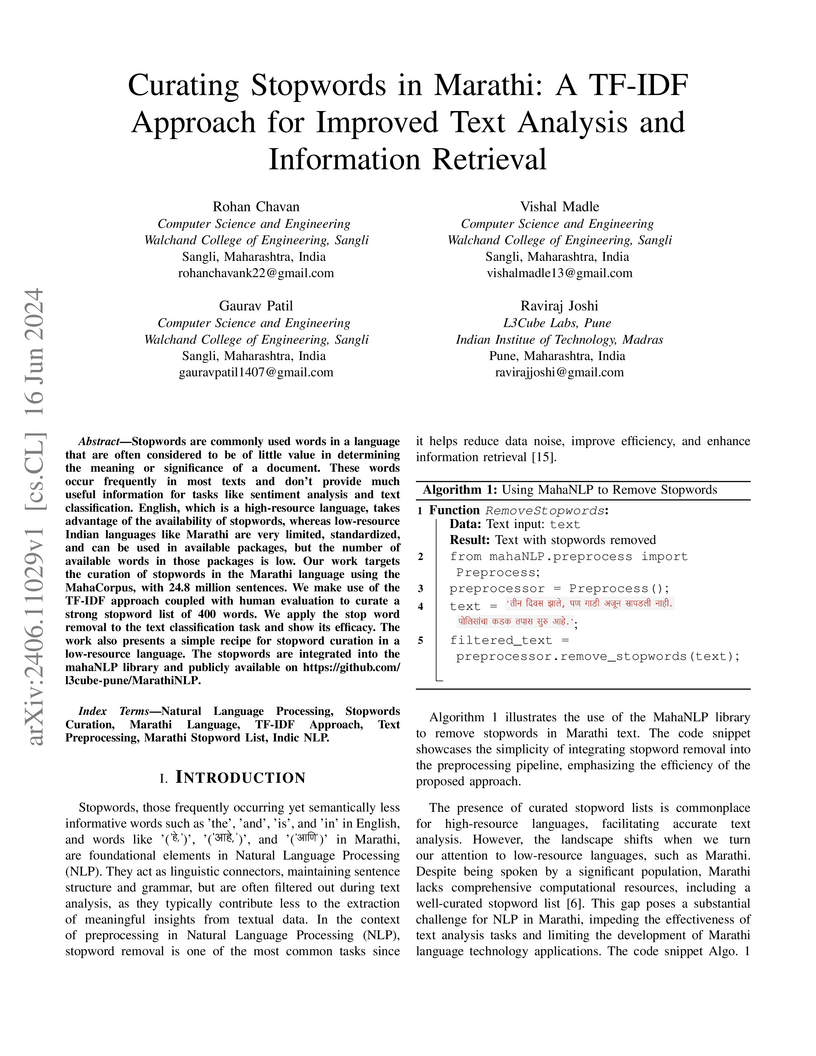

Stopwords are commonly used words in a language that are often considered to be of little value in determining the meaning or significance of a document. These words occur frequently in most texts and don't provide much useful information for tasks like sentiment analysis and text classification. English, which is a high-resource language, benefits from readily available stopword lists. For low-resource Indian languages like Marathi, standardized stopword lists are very limited: some can be used through available packages, but the number of words in those packages is low. Our work targets the curation of stopwords in the Marathi language using the MahaCorpus, with 24.8 million sentences. We make use of the TF-IDF approach coupled with human evaluation to curate a strong stopword list of 400 words. We apply stopword removal to the text classification task and show its efficacy. The work also presents a simple recipe for stopword curation in a low-resource language. The stopwords are integrated into the mahaNLP library and publicly available on this https URL .
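
One way to picture the automatic half of such a recipe is to rank words by how evenly they spread across documents and hand the top of the list to human reviewers. The sketch below is a simplification that ranks by IDF alone (lowest first); the exact scoring used in the work is not given here, and the function name `stopword_candidates`, the whitespace tokenization, and the three toy Marathi sentences are assumptions.

```python
import math
from collections import Counter

def stopword_candidates(documents, top_n):
    """Return the `top_n` tokens with the lowest IDF, as candidates for human review."""
    n = len(documents)
    # Document frequency: number of documents that contain each token.
    df = Counter(tok for doc in documents for tok in set(doc.split()))
    idf = {tok: math.log(n / count) for tok, count in df.items()}
    # Lowest IDF first; ties are broken alphabetically for determinism.
    return sorted(idf, key=lambda tok: (idf[tok], tok))[:top_n]

# Toy corpus; "आणि" means "and" and "हे" means "this".
corpus = [
    "हे पुस्तक आणि वही",
    "राम आणि श्याम",
    "हे घर आणि बाग",
]
candidates = stopword_candidates(corpus, top_n=2)
print(candidates)
```

Words that appear in nearly every document get an IDF close to zero, which is why they surface first; the human evaluation step then filters out frequent words that still carry meaning.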

This paper introduces Chain of Translation Prompting (CoTR), a novel strategy designed to enhance the performance of language models in low-resource languages. CoTR restructures prompts to first translate the input context from a low-resource language into a higher-resource language, such as English. The specified task like generation, classification, or any other NLP function is then performed on the translated text, with the option to translate the output back to the original language if needed. All these steps are specified in a single prompt. We demonstrate the effectiveness of this method through a case study on the low-resource Indic language Marathi. The CoTR strategy is applied to various tasks, including sentiment analysis, hate speech classification, subject classification and text generation, and its efficacy is showcased by comparing it with regular prompting methods. Our results underscore the potential of translation-based prompting strategies to significantly improve multilingual LLM performance in low-resource languages, offering valuable insights for future research and applications. We specifically see the highest accuracy improvements with the hate speech detection task. The technique also has the potential to enhance the quality of synthetic data generation for underrepresented languages using LLMs.
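
The single-prompt structure described above (translate, perform the task, optionally translate back) could be assembled along these lines. The prompt wording, the function name `build_cotr_prompt`, and its parameters are hypothetical and are not taken from the paper; the sketch only shows how the steps fit into one prompt string.

```python
def build_cotr_prompt(text, task_instruction, source_lang="Marathi",
                      pivot_lang="English", translate_back=False):
    """Assemble one prompt that chains translation and the task."""
    steps = [
        f"1. Translate the following {source_lang} text into {pivot_lang}.",
        f"2. Using the {pivot_lang} translation, {task_instruction}",
    ]
    if translate_back:
        # Optional final step for tasks whose output should be in the source language.
        steps.append(f"3. Translate your answer back into {source_lang}.")
    return "\n".join(steps) + f"\n\n{source_lang} text: {text}"

# Classification example: the answer is a label, so no back-translation is requested.
prompt = build_cotr_prompt(
    "हा चित्रपट छान आहे",
    "classify its sentiment as positive, negative, or neutral.",
)
# Generation example: ask for the output in the source language.
gen_prompt = build_cotr_prompt(
    "हा चित्रपट छान आहे",
    "write a one-sentence summary.",
    translate_back=True,
)
print(prompt)
```

The resulting string would be sent to an LLM as a single request, in contrast to a pipeline that calls a separate translation system first.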

The rise of large transformer models has revolutionized Natural Language Processing, leading to significant advances in tasks like text classification. However, this progress demands substantial computational resources, and training duration and expenses escalate with larger model sizes. Efforts have been made to downsize and accelerate English models (e.g., DistilBERT, MobileBERT). Yet, research in this area is scarce for low-resource languages.

In this study, we explore the case of the low-resource Indic language Marathi. Leveraging the marathi-topic-all-doc-v2 model as our baseline, we implement optimization techniques to reduce computation time and memory usage. Our focus is on enhancing the efficiency of Marathi transformer models while maintaining top-tier accuracy and reducing computational demands. Using the MahaNews document classification dataset and the marathi-topic-all-doc-v2 model from L3Cube, we apply Block Movement Pruning, Knowledge Distillation, and Mixed Precision methods individually and in combination to boost efficiency. We demonstrate the importance of strategic pruning levels in achieving desired efficiency gains. Furthermore, we analyze the balance between efficiency improvements and environmental impact, highlighting how optimized model architectures can contribute to a more sustainable computational ecosystem. Implementing these techniques on a single GPU system, we determine that the optimal configuration is 25% pruning + knowledge distillation. This approach yielded a 2.56x speedup in computation time while maintaining baseline accuracy levels.

In the domain of education, the integration of technology has led to a transformative era, reshaping traditional learning paradigms. Central to this evolution is the automation of grading processes, particularly within the STEM domain encompassing Science, Technology, Engineering, and Mathematics. While efforts to automate grading have been made in subjects like Literature, the multifaceted nature of STEM assessments presents unique challenges, ranging from quantitative analysis to the interpretation of handwritten diagrams. To address these challenges, this research endeavors to develop efficient and reliable grading methods through the implementation of automated assessment techniques using Artificial Intelligence (AI). Our contributions lie in two key areas: firstly, the development of a robust system for evaluating textual answers in STEM, leveraging sample answers for precise comparison and grading, enabled by advanced algorithms and natural language processing techniques. Secondly, a focus on enhancing diagram evaluation, particularly flowcharts, within the STEM context, by transforming diagrams into textual representations for nuanced assessment using a Large Language Model (LLM). By bridging the gap between visual representation and semantic meaning, our approach ensures accurate evaluation while minimizing manual intervention. Through the integration of models such as CRAFT for text extraction and YoloV5 for object detection, coupled with LLMs like Mistral-7B for textual evaluation, our methodology facilitates comprehensive assessment of multimodal answer sheets. This paper provides a detailed account of our methodology, challenges encountered, results, and implications, emphasizing the potential of AI-driven approaches in revolutionizing grading practices in STEM education.
