University of Los Andes

01 Nov 2024

In high-energy physics (HEP), both the exclusion and discovery of new theories depend not only on the acquisition of high-quality experimental data but also on the rigorous application of statistical methods. These methods provide probabilistic criteria (such as p-values) to compare experimental data with theoretical models, aiming to describe the data as accurately as possible. Hypothesis testing plays a central role in this process, as it enables comparisons between established theories and potential new explanations for the observed data. This report reviews key statistical methods currently employed in particle physics, using synthetic data and numerical comparisons to illustrate the concepts in a clear and accessible way. Our results highlight the practical significance of these statistical tools in enhancing the experimental sensitivity and model exclusion capabilities in HEP. All numerical results are estimated using Python and RooFit, a high-level statistical modeling package used by the ATLAS and CMS collaborations at CERN to model and report results from experimental data.

Researchers evaluated the effectiveness of airborne, unmanned, and simulated spaceborne laser scanning platforms alongside machine learning for Aboveground Biomass (AGB) estimation in tropical dry forests. The study found that Support Vector Machine regression models achieved high overall accuracy, with errors below 19%, providing a robust method for carbon accounting in these critical ecosystems.

14 May 2024

This tutorial discusses methodology for causal inference using longitudinal modified treatment policies. This method facilitates the mathematical formalization, identification, and estimation of many novel parameters, and mathematically generalizes many commonly used parameters, such as the average treatment effect. Longitudinal modified treatment policies apply to a wide variety of exposures, including binary, multivariate, and continuous, and can accommodate time-varying treatments and confounders, competing risks, loss-to-follow-up, as well as survival, binary, or continuous outcomes. Longitudinal modified treatment policies can be seen as an extension of static and dynamic interventions to involve the natural value of treatment, and, like dynamic interventions, can be used to define alternative estimands with a positivity assumption that is more likely to be satisfied than estimands corresponding to static interventions. This tutorial aims to illustrate several practical uses of the longitudinal modified treatment policy methodology, including describing different estimation strategies and their corresponding advantages and disadvantages. We provide numerous examples of types of research questions which can be answered using longitudinal modified treatment policies. We go into more depth with one of these examples--specifically, estimating the effect of delaying intubation on critically ill COVID-19 patients' mortality. We demonstrate the use of the open-source R package lmtp to estimate the effects, and we provide code on this https URL.

In this article we show for the first time the role played by the

hypergeneralized Heun equation (HHE) in the context of Quantum Field Theory in

curved space-times. More precisely, we find suitable transformations relating

the separated radial and angular parts of a massive Dirac equation in the

Kerr-Newman-deSitter metric to a HHE.

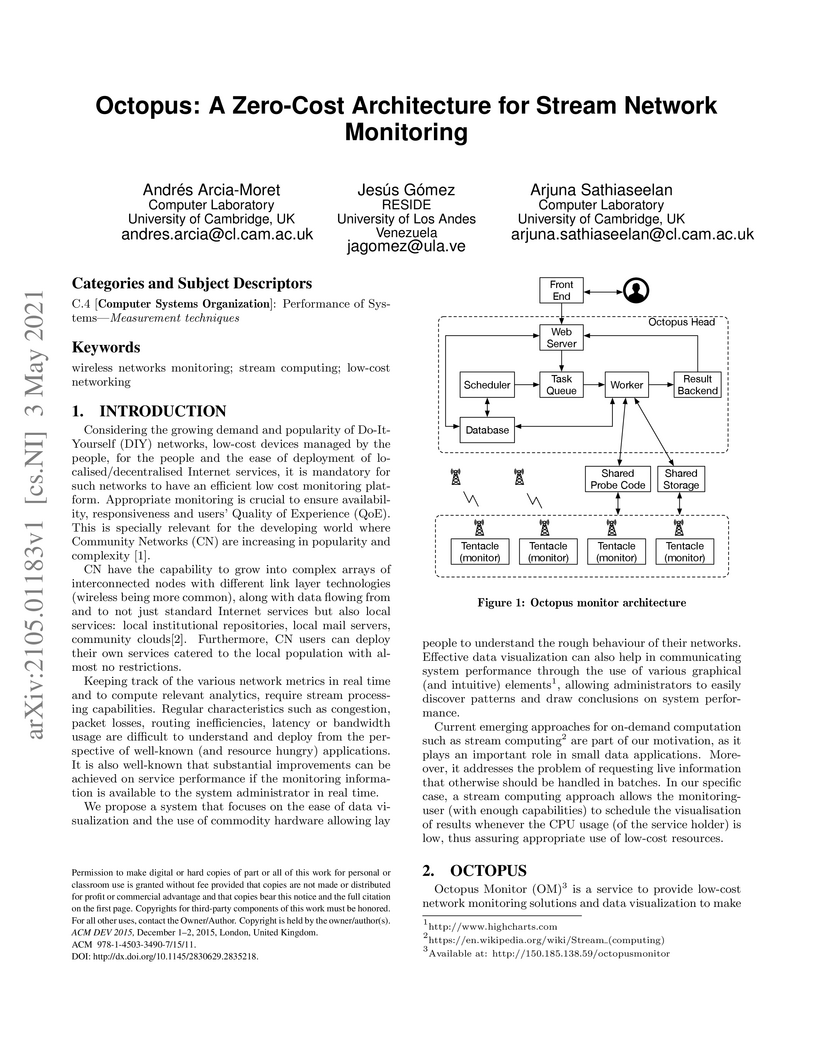

Considering the growing demand and popularity of Do-It-Yourself (DIY) networks, low-cost devices managed by the people, for the people and the ease of deployment of localised/decentralised Internet services, it is convenient for such networks to have an efficient low-cost monitoring platform to ensure availability, responsiveness and users' Quality of Experience (QoE). This is especially relevant for the developing world where Community Networks (CN) are increasing in popularity and complexity. In this letter, we discuss Octopus: an architecture for stream network monitoring.

26 Jun 2022

In this paper we study frame definability in finitely-valued modal logics and establish two main results via suitable translations: (1) in finitely-valued modal logics one cannot define more classes of frames than are already definable in classical modal logic (cf.~\citep[Thm.~8]{tho}), and (2) a large family of finitely-valued modal logics define exactly the same classes of frames as classical modal logic (including modal logics based on finite Heyting and \MV-algebras, or even \BL-algebras). In this way one may observe, for example, that the celebrated Goldblatt--Thomason theorem applies immediately to these logics. In particular, we obtain the central result from~\citep{te} with a much simpler proof and answer one of the open questions left in that paper. Moreover, the proposed translations allow us to determine the computational complexity of a big class of finitely-valued modal logics.

This paper presents the advantages of alternative data from Super-Apps to

enhance user' s income estimation models. It compares the performance of these

alternative data sources with the performance of industry-accepted bureau

income estimators that takes into account only financial system information;

successfully showing that the alternative data manage to capture information

that bureau income estimators do not. By implementing the TreeSHAP method for

Stochastic Gradient Boosting Interpretation, this paper highlights which of the

customer' s behavioral and transactional patterns within a Super-App have a

stronger predictive power when estimating user' s income. Ultimately, this

paper shows the incentive for financial institutions to seek to incorporate

alternative data into constructing their risk profiles.

There are no more papers matching your filters at the moment.