signal-processing

Audio deepfakes have reached a level of realism that makes it increasingly difficult to distinguish between human and artificial voices, which poses risks such as identity theft or spread of disinformation. Despite these concerns, research on humans' ability to identify deepfakes is limited, with most studies focusing on English and very few exploring the reasons behind listeners' perceptual decisions. This study addresses this gap through a perceptual experiment in which 54 listeners (28 native Spanish speakers and 26 native Japanese speakers) classified voices as natural or synthetic, and justified their choices. The experiment included 80 stimuli (50% artificial), organized according to three variables: language (Spanish/Japanese), speech style (audiobooks/interviews), and familiarity with the voice (familiar/unfamiliar). The goal was to examine how these variables influence detection and to analyze qualitatively the reasoning behind listeners' perceptual decisions. Results indicate an average accuracy of 59.11%, with higher performance on authentic samples. Judgments of vocal naturalness rely on a combination of linguistic and non-linguistic cues. Comparing Japanese and Spanish listeners, our qualitative analysis further reveals both shared cues and notable cross-linguistic differences in how listeners conceptualize the "humanness" of speech. Overall, participants relied primarily on suprasegmental and higher-level or extralinguistic characteristics - such as intonation, rhythm, fluency, pauses, speed, breathing, and laughter - over segmental features. These findings underscore the complexity of human perceptual strategies in distinguishing natural from artificial speech and align partly with prior research emphasizing the importance of prosody and phenomena typical of spontaneous speech, such as disfluencies.

We present a novel approach to EEG decoding for non-invasive brain-machine interfaces (BMIs), with a focus on motor-behavior classification. While conventional convolutional architectures such as EEGNet and DeepConvNet are effective in capturing local spatial patterns, they are markedly less suited for modeling long-range temporal dependencies and nonlinear dynamics. To address this limitation, we integrate an Echo State Network (ESN), a prominent paradigm in reservoir computing, into the decoding pipeline. ESNs construct a high-dimensional, sparsely connected recurrent reservoir that excels at tracking temporal dynamics, thereby complementing the spatial representational power of CNNs. Evaluated on a skateboard-trick EEG dataset preprocessed via the PREP pipeline and implemented in MNE-Python, our ESNNet achieves 83.2% within-subject and 51.3% leave-one-subject-out (LOSO) accuracies, surpassing widely used CNN-based baselines. Code is available at this https URL
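The reservoir idea in the abstract above can be illustrated with a minimal sketch. This is not the authors' ESNNet: the function names, sizes, weight ranges, and the row-sum rescaling (a conservative stand-in for the usual spectral-radius scaling) are all assumptions chosen so the example runs with the Python standard library alone.

```python
import math
import random


def make_reservoir(n_units, n_inputs, density=0.2, max_row_sum=0.9, seed=0):
    """Build fixed random input and recurrent weights (hypothetical settings)."""
    rng = random.Random(seed)
    w_in = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
            for _ in range(n_units)]
    # Sparse recurrent matrix: each entry is nonzero with probability `density`.
    w = [[rng.uniform(-1.0, 1.0) if rng.random() < density else 0.0
          for _ in range(n_units)] for _ in range(n_units)]
    # Rescale so the largest absolute row sum equals max_row_sum. This bounds
    # the spectral radius from above; it is an assumption standing in for
    # direct spectral-radius scaling.
    norm = max(sum(abs(v) for v in row) for row in w)
    if norm > 0:
        w = [[v * max_row_sum / norm for v in row] for row in w]
    return w_in, w


def run_reservoir(w_in, w, inputs):
    """Drive the untrained reservoir and collect its state at every step."""
    state = [0.0] * len(w)
    states = []
    for u in inputs:
        state = [math.tanh(sum(a * b for a, b in zip(w_in[i], u)) +
                           sum(a * b for a, b in zip(w[i], state)))
                 for i in range(len(w))]
        states.append(state)
    return states


w_in, w = make_reservoir(n_units=20, n_inputs=3)
signal = [[math.sin(0.1 * t), math.cos(0.1 * t), 0.5] for t in range(50)]
states = run_reservoir(w_in, w, signal)
```

In a full pipeline only a readout on top of `states` would be trained, while the reservoir weights stay fixed. How the abstract's model combines the reservoir with CNN features is not specified here.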

Expectation Propagation (EP) is a widely used message-passing algorithm that decomposes a global inference problem into multiple local ones. It approximates marginal distributions (beliefs) using intermediate functions (messages). While beliefs must be proper probability distributions that integrate to one, messages may have infinite integral values. In Gaussian-projected EP, such messages take a Gaussian form and appear as if they have "negative" variances. Although allowed within the EP framework, these negative-variance messages can impede algorithmic progress.

In this paper, we investigate EP in linear models and analyze the relationship between the corresponding beliefs. Based on the analysis, we propose both non-persistent and persistent approaches that prevent the algorithm from being blocked by messages with infinite integral values.

Furthermore, by examining the relationship between the EP messages in linear models, we develop an additional approach that avoids the occurrence of messages with infinite integral values.

Always-on sensors are increasingly expected to run a variety of tiny neural networks and to continuously perform inference on time series of the data they sense. To meet lifetime and energy consumption requirements when operating on battery, such hardware uses microcontrollers (MCUs) with a tiny memory budget, e.g., 128 kB of RAM. In this context, optimizing data flows across neural network layers becomes crucial. In this paper, we introduce TinyDéjàVu, a new framework and novel algorithms we designed to drastically reduce the RAM footprint required by inference using various tiny ML models for sensor data time-series on typical microcontroller hardware. We publish the implementation of TinyDéjàVu as open source, and we perform reproducible benchmarks on hardware. We show that TinyDéjàVu can save more than 60% of RAM usage and eliminate up to 90% of redundant compute on overlapping sliding window inputs.
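The redundancy that overlapping sliding windows create can be sketched as follows. This is a generic caching illustration, not TinyDéjàVu's algorithm: `frame_feature`, the window size, and the toy data are hypothetical stand-ins for an expensive per-frame layer and real sensor frames.

```python
from collections import deque


def frame_feature(frame):
    # Hypothetical stand-in for an expensive per-frame computation.
    return sum(x * x for x in frame)


def naive_windows(frames, window):
    """Recompute every frame feature for every window (stride 1)."""
    calls = 0
    outputs = []
    for start in range(len(frames) - window + 1):
        feats = []
        for f in frames[start:start + window]:
            feats.append(frame_feature(f))
            calls += 1
        outputs.append(sum(feats))
    return outputs, calls


def cached_windows(frames, window):
    """Compute each frame feature once and keep only the last `window` values."""
    calls = 0
    outputs = []
    cache = deque(maxlen=window)  # bounded buffer: the oldest feature drops out
    for f in frames:
        cache.append(frame_feature(f))
        calls += 1
        if len(cache) == window:
            outputs.append(sum(cache))
    return outputs, calls


frames = [[i, i + 1] for i in range(10)]
naive_out, naive_calls = naive_windows(frames, window=4)
cached_out, cached_calls = cached_windows(frames, window=4)
```

Both functions return the same window outputs. The cached version evaluates `frame_feature` once per incoming frame instead of once per frame per window, and its buffer never holds more than `window` values.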

Estimation of differences in conditional independence graphs (CIGs) of two time series Gaussian graphical models (TSGGMs) is investigated where the two TSGGMs are known to have similar structure. The TSGGM structure is encoded in the inverse power spectral density (IPSD) of the time series. In several existing works, one is interested in estimating the difference in two precision matrices to characterize underlying changes in conditional dependencies of two sets of data consisting of independent and identically distributed (i.i.d.) observations. In this paper we consider estimation of the difference in two IPSDs to characterize the underlying changes in conditional dependencies of two sets of time-dependent data. Our approach accounts for data time dependencies unlike past work. We analyze a penalized D-trace loss function approach in the frequency domain for differential graph learning, using Wirtinger calculus. We consider both convex (group lasso) and non-convex (log-sum and SCAD group penalties) penalty/regularization functions. An alternating direction method of multipliers (ADMM) algorithm is presented to optimize the objective function. We establish sufficient conditions in a high-dimensional setting for consistency (convergence of the inverse power spectral density to true value in the Frobenius norm) and graph recovery. Both synthetic and real data examples are presented in support of the proposed approaches. In synthetic data examples, our log-sum-penalized differential time-series graph estimator significantly outperformed our lasso based differential time-series graph estimator which, in turn, significantly outperformed an existing lasso-penalized i.i.d. modeling approach, with F1 score as the performance metric.

Semantic Soft Bootstrapping (SSB), an RL-free self-distillation framework developed at the University of Maryland, enhances large language model reasoning by having the model act as both teacher and student. It boosted pass@1 accuracy on the MATH500 benchmark by 10.6% and on AIME2024 by 10% over a GRPO baseline, while utilizing a smaller dataset and maintaining concise response lengths.

Estimation of the conditional independence graph (CIG) of high-dimensional multivariate Gaussian time series from multi-attribute data is considered. Existing methods for graph estimation for such data are based on single-attribute models where one associates a scalar time series with each node. In multi-attribute graphical models, each node represents a random vector or vector time series. In this paper we provide a unified theoretical analysis of multi-attribute graph learning for dependent time series using a penalized log-likelihood objective function formulated in the frequency domain using the discrete Fourier transform of the time-domain data. We consider both convex (sparse-group lasso) and non-convex (log-sum and SCAD group penalties) penalty/regularization functions. We establish sufficient conditions in a high-dimensional setting for consistency (convergence of the inverse power spectral density to true value in the Frobenius norm), local convexity when using non-convex penalties, and graph recovery. We do not impose any incoherence or irrepresentability condition for our convergence results. We also empirically investigate selection of the tuning parameters based on the Bayesian information criterion, and illustrate our approach using numerical examples utilizing both synthetic and real data.

This paper provides a fundamental characterization of the discrete ambiguity functions (AFs) of random communication waveforms under arbitrary orthonormal modulation with random constellation symbols, which serve as a key metric for evaluating the delay-Doppler sensing performance in future ISAC applications. A unified analytical framework is developed for two types of AFs, namely the discrete periodic AF (DP-AF) and the fast-slow time AF (FST-AF), where the latter may be seen as a small-Doppler approximation of the DP-AF. By analyzing the expectation of squared AFs, we derive exact closed-form expressions for both the expected sidelobe level (ESL) and the expected integrated sidelobe level (EISL) under the DP-AF and FST-AF formulations. For the DP-AF, we prove that the normalized EISL is identical for all orthogonal waveforms. To gain structural insights, we introduce a matrix representation based on the finite Weyl-Heisenberg (WH) group, where each delay-Doppler shift corresponds to a WH operator acting on the ISAC signal. This WH-group viewpoint yields sharp geometric constraints on the lowest sidelobes: The minimum ESL can only occur along a one-dimensional cut or over a set of widely dispersed delay-Doppler bins. Consequently, no waveform can attain the minimum ESL over any compact two-dimensional region, leading to a no-optimality (no-go) result under the DP-AF framework. For the FST-AF, the closed-form ESL and EISL expressions reveal a constellation-dependent regime governed by its kurtosis: The OFDM modulation achieves the minimum ESL for sub-Gaussian constellations, whereas the OTFS waveform becomes optimal for super-Gaussian constellations. Finally, four representative waveforms, namely, SC, OFDM, OTFS, and AFDM, are examined under both frameworks, and all theoretical results are verified through numerical examples.
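As a concrete reference point for the delay-Doppler quantities discussed above, the sketch below evaluates one common convention for a discrete periodic ambiguity function and sums its squared sidelobes. The sign and normalization conventions, the function names, and the four-sample test tone are assumptions. The paper's DP-AF definition may differ, and no expectation over random symbols is taken here.

```python
import cmath


def periodic_ambiguity(x, delay, doppler):
    """A(k, q) = sum_n x[n] * conj(x[(n - k) mod N]) * exp(-j*2*pi*q*n/N)."""
    n_samples = len(x)
    total = 0j
    for n in range(n_samples):
        shifted = x[(n - delay) % n_samples]  # cyclic delay
        phase = cmath.exp(-2j * cmath.pi * doppler * n / n_samples)
        total += x[n] * shifted.conjugate() * phase
    return total


def integrated_sidelobe_level(x):
    """Sum of |A(k, q)|^2 over every delay-Doppler bin except the origin."""
    n_samples = len(x)
    return sum(abs(periodic_ambiguity(x, k, q)) ** 2
               for k in range(n_samples) for q in range(n_samples)
               if (k, q) != (0, 0))


# Deterministic toy input: a single complex tone of length 4.
tone = [cmath.exp(2j * cmath.pi * n / 4) for n in range(4)]
mainlobe = abs(periodic_ambiguity(tone, 0, 0))
isl = integrated_sidelobe_level(tone)
```

For this tone all sidelobe energy sits on the zero-Doppler cut, since a pure tone is unchanged in magnitude by cyclic delay. Averaging `abs(A) ** 2` over random constellation symbols would give a Monte Carlo estimate of the expected sidelobe level that the abstract treats in closed form.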

This paper explores signal and image analysis by using the Singular Value Decomposition (SVD) and its extension, the Generalized Singular Value Decomposition (GSVD). A key strength of SVD lies in its ability to separate information into orthogonal subspaces. While SVD is a well-established tool in ECG analysis, particularly for source separation, this work proposes a refined method for selecting a threshold to distinguish between maternal and fetal components more effectively. In the first part of the paper, the focus is on medical signal analysis, where the concepts of Energy Gap Variation (EGV) and Singular Energy are introduced to isolate fetal and maternal ECG signals, improving on existing approaches. Furthermore, the approach is significantly enhanced by the application of GSVD, which provides additional discriminative power for more accurate signal separation. The second part introduces a novel technique called Singular Smoothness, developed for image analysis. This method incorporates Singular Entropy and the Frobenius norm to evaluate information density, and is applied to the detection of natural anomalies such as mountain fractures and burned forest regions. Numerical experiments are presented to demonstrate the effectiveness of the proposed approaches.

Counterfeit products pose significant risks to public health and safety by infiltrating untrusted supply chains. Among numerous anti-counterfeiting techniques, leveraging inherent, unclonable microscopic irregularities of paper surfaces is an accurate and cost-effective solution. Prior work on this approach has focused on enabling ubiquitous acquisition of these physically unclonable features (PUFs). However, we show that existing authentication methods relying on paper surface PUFs may be vulnerable to adversaries, resulting in a gap between technological feasibility and secure real-world deployment. This gap is investigated by formalizing an operational framework for paper-PUF-based authentication. Informed by this framework, we reveal system-level vulnerabilities across both physical and digital domains, designing physical denial-of-service and digital forgery attacks to disrupt proper authentication. The effectiveness of the designed attacks underscores the strong need for security countermeasures for reliable and resilient authentication based on paper PUFs. The proposed framework further facilitates a comprehensive, stage-by-stage security analysis, guiding the design of future counterfeit prevention systems. This analysis delves into potential attack strategies, offering a foundational understanding of how various system components, such as physical features and verification processes, might be exploited by adversaries.

Radio maps that describe spatial variations in wireless signal strength are widely used to optimize networks and support aerial platforms. Their construction requires location-labeled signal measurements from distributed users, raising fundamental concerns about location privacy. Even when raw data are kept local, the shared model updates can reveal user locations through their spatial structure, while naive noise injection either fails to hide this leakage or degrades model accuracy. This work analyzes how location leakage arises from gradients in a virtual-environment radio map model and proposes a geometry-aligned differential privacy mechanism with heterogeneous noise tailored to both confuse localization and cover gradient spatial patterns. The approach is theoretically supported with a convergence guarantee linking privacy strength to learning accuracy. Numerical experiments show the approach increases attacker localization error from 30 m to over 180 m, with only 0.2 dB increase in radio map construction error compared to a uniform-noise baseline.

Neural decoding, a critical component of Brain-Computer Interface (BCI), has recently attracted increasing research interest. Previous research has focused on leveraging signal processing and deep learning methods to enhance neural decoding performance. However, in-depth exploration of model architectures remains limited, despite its proven effectiveness in other tasks such as energy forecasting and image classification. In this study, we propose NeuroSketch, an effective framework for neural decoding via systematic architecture optimization. Starting with the basic architecture study, we find that CNN-2D outperforms other architectures in neural decoding tasks and explore its effectiveness from temporal and spatial perspectives. Building on this, we optimize the architecture from macro- to micro-level, achieving improvements in performance at each step. The exploration process and model validations comprise over 5,000 experiments spanning three distinct modalities (visual, auditory, and speech), three types of brain signals (EEG, SEEG, and ECoG), and eight diverse decoding tasks. Experimental results indicate that NeuroSketch achieves state-of-the-art (SOTA) performance across all evaluated datasets, positioning it as a powerful tool for neural decoding. Our code and scripts are available at this https URL.

Shading faults remain one of the most critical challenges affecting photovoltaic (PV) system efficiency, as they not only reduce power generation but also disturb maximum power point tracking (MPPT). To address this issue, this study introduces a hybrid optimization framework that combines Fuzzy Logic Control (FLC) with a Shading-Aware Particle Swarm Optimization (SA-PSO) method. The proposed scheme is designed to adapt dynamically to both partial shading (20%-80%) and complete shading events, ensuring reliable global maximum power point (GMPP) detection. In this approach, the fuzzy controller provides rapid decision support based on shading patterns, while SA-PSO accelerates the search process and prevents the system from becoming trapped in local minima. A comparative performance assessment with the conventional Perturb and Observe (P&O) algorithm highlights the advantages of the hybrid model, showing up to an 11.8% improvement in power output and a 62% reduction in tracking time. These results indicate that integrating intelligent control with shading-aware optimization can significantly enhance the resilience and energy yield of PV systems operating under complex real-world conditions.
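The motivation for a global search under partial shading can be seen in a toy sketch: a basic Perturb and Observe loop climbs whichever peak is nearest and stays there. The two-peak power curve, the step size, and the exhaustive scan used for comparison are all hypothetical. The scan is a simple stand-in for a global method and is not the FLC/SA-PSO scheme described above.

```python
def shaded_power(v):
    # Hypothetical P-V curve with a local peak at v=10 (50 W) and the
    # global peak at v=30 (100 W), as partial shading can produce.
    return max(50.0 - (v - 10.0) ** 2, 100.0 - (v - 30.0) ** 2, 0.0)


def perturb_and_observe(power, v_start, step=1.0, iterations=50):
    """Basic hill climbing: keep the direction while power does not drop."""
    v = v_start
    p = power(v)
    direction = 1.0
    for _ in range(iterations):
        v_new = v + direction * step
        p_new = power(v_new)
        if p_new < p:
            direction = -direction  # power fell, so reverse the perturbation
        v, p = v_new, p_new
    return v, p


def global_scan(power, v_min, v_max):
    """Exhaustive integer-voltage scan, used only as a reference answer."""
    best_v = max(range(v_min, v_max + 1), key=power)
    return best_v, power(best_v)


po_v, po_p = perturb_and_observe(shaded_power, v_start=5.0)
scan_v, scan_p = global_scan(shaded_power, 0, 40)
```

Starting from the low-voltage side, the loop ends up oscillating around the local peak and never crosses the zero-power valley, while the scan locates the higher peak. A swarm-based search aims for the same global answer without evaluating every operating point.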

We discuss the prospect of using cascaded phase modulators and dispersive elements to achieve arbitrary optical waveform generation. This transform is not limited by the bandwidth of its constituent modulators and is theoretically lossless.

Accurate channel state information (CSI) underpins reliable and efficient wireless communication. However, acquiring CSI via pilot estimation incurs substantial overhead, especially in massive multiple-input multiple-output (MIMO) systems operating in high-Doppler environments. By leveraging the growing availability of environmental sensing data, this treatise investigates pilot-free channel inference that estimates complete CSI directly from multimodal observations, including camera images, LiDAR point clouds, and GPS coordinates. In contrast to prior studies that rely on predefined channel models, we develop a data-driven framework that formulates the sensing-to-channel mapping as a cross-modal flow matching problem. The framework fuses multimodal features into a latent distribution within the channel domain, and learns a velocity field that continuously transforms the latent distribution toward the channel distribution. To make this formulation tractable and efficient, we reformulate the problem as an equivalent conditional flow matching objective and incorporate a modality alignment loss, while adopting low-latency inference mechanisms to enable real-time CSI estimation. In experiments, we build a procedural data generator based on Sionna and Blender to support realistic modeling of sensing scenes and wireless propagation. System-level evaluations demonstrate significant improvements over pilot- and sensing-based benchmarks in both channel estimation accuracy and spectral efficiency for the downstream beamforming task.

The sixth-generation and beyond (B6G) networks are envisioned to support advanced applications that demand high-speed communication, high-precision sensing, and high-performance computing. To underpin this multi-functional evolution, energy- and cost-efficient programmable metasurfaces (PMs) have emerged as a promising technology for dynamically manipulating electromagnetic waves. This paper provides a comprehensive survey of representative multi-functional PM paradigms, with a specific focus on achieving full-space communication coverage, ubiquitous sensing, as well as intelligent signal processing and computing. i) For simultaneously transmitting and reflecting surfaces (STARS)-enabled full-space communications, we elaborate on their operational protocols and pivotal applications in supporting efficient communications, physical layer security, unmanned aerial vehicle networks, and wireless power transfer. ii) For PM-underpinned ubiquitous sensing, we formulate the signal models for the PM-assisted architecture and systematically characterize its advantages in near-field and cooperative sensing, while transitioning to the PM-enabled transceiver architecture and demonstrating its superior performance in multi-band operations. iii) For advanced signal processing and computing, we explore the novel paradigm of stacked intelligent metasurfaces (SIMs), investigating their implementation in wave-domain analog processing and over-the-air mathematical computing. Finally, we identify key research challenges and envision future directions for multi-functional PMs towards B6G.

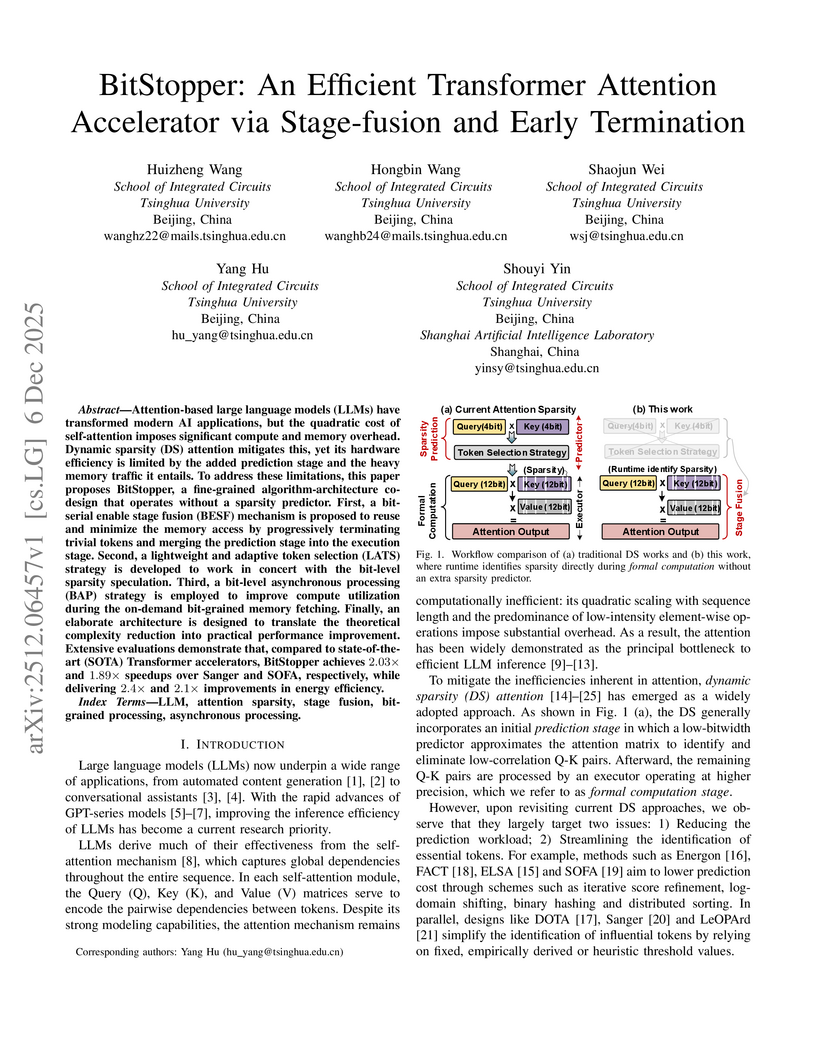

BitStopper is an efficient Transformer attention accelerator for Large Language Models that eliminates the separate sparsity prediction stage by fusing prediction and formal computation. It achieves this through bit-level processing and adaptive pruning, resulting in up to 3.2x speedup and 3.7x energy efficiency over a dense baseline.

Wearable physiological signals exhibit strong nonlinear and subject-dependent behavior, challenging traditional linear models. This study provides a unified evaluation of cognitive load, stress, and physical exercise recognition using three public Empatica E4 datasets. Across all conditions, nonlinear machine learning models consistently outperformed linear baselines, achieving 0.89–0.98 accuracy and 0.96–0.99 ROC-AUC, while linear models remained below 0.70–0.73 AUC. Although Leave-One-Subject-Out validation revealed substantial inter-individual variability, nonlinear models maintained moderate cross-person generalization. Ablation and statistical analyses confirmed the necessity of multimodal fusion, particularly EDA, temperature, and ACC, while SHAP interpretability validated these findings by uncovering physiologically meaningful feature contributions across tasks. Overall, the results demonstrate that physiological state recognition is fundamentally nonlinear and establish a unified benchmark to guide the development of more robust wearable health-monitoring systems.

Resistive random-access memory (RRAM) provides an excellent platform for analog matrix computing (AMC), enabling both matrix-vector multiplication (MVM) and the solution of matrix equations through open-loop and closed-loop circuit architectures. While RRAM-based AMC has been widely explored for accelerating neural networks, its application to signal processing in massive multiple-input multiple-output (MIMO) wireless communication is rapidly emerging as a promising direction. In this Review, we summarize recent advances in applying AMC to massive MIMO, including DFT/IDFT computation for OFDM modulation and demodulation using MVM circuits; MIMO detection and precoding using MVM-based iterative algorithms; and rapid one-step solutions enabled by matrix inversion (INV) and generalized inverse (GINV) circuits. We also highlight additional opportunities, such as AMC-based compressed-sensing recovery for channel estimation and eigenvalue circuits for leakage-based precoding. Finally, we outline key challenges, including RRAM device reliability, analog circuit precision, array scalability, and data conversion bottlenecks, and discuss the opportunities for overcoming these barriers. With continued progress in device-circuit-algorithm co-design, RRAM-based AMC holds strong promise for delivering high-efficiency, high-reliability solutions to (ultra)massive MIMO signal processing in the 6G era.

The main objective of this study is to propose an optimal transport based semi-supervised approach to learn from scarce labelled image data using deep convolutional networks. The principle lies in implicit graph-based transductive semi-supervised learning where the similarity metric between image samples is the Wasserstein distance. This metric is used in the label propagation mechanism during learning. We apply and demonstrate the effectiveness of the method on a GNSS real life application. More specifically, we address the problem of multi-path interference detection. Experiments are conducted under various signal conditions. The results show that for specific choices of hyperparameters controlling the amount of semi-supervision and the level of sensitivity to the metric, the classification accuracy can be significantly improved over the fully supervised training method.