networking-and-internet-architecture

With the rise of mega-satellite constellations, the integration of hierarchical non-terrestrial and terrestrial networks has become a cornerstone of 6G coverage enhancements. In these hierarchical satellite networks, controllers manage satellite switches within their assigned domains. However, the high mobility of LEO satellites and field-of-view (FOV) constraints pose fundamental challenges to efficient domain partitioning. Centralized control approaches face scalability bottlenecks, while distributed architectures with onboard controllers often disregard FOV limitations, leading to excessive signaling overhead. LEO satellites outside a controller's FOV require an average of five additional hops, resulting in a 10.6-fold increase in response time. To address these challenges, we propose Eunomia, a three-step domain-partitioning framework that leverages movement-aware FOV segmentation within a hybrid control plane combining ground stations and MEO satellites. Eunomia reduces control plane latency by constraining domains to FOV-aware regions and ensures single-hop signaling. It further balances traffic load through spectral clustering on a Control Overhead Relationship Graph and optimizes controller assignment via the Kuhn-Munkres algorithm. We implement Eunomia on the Plotinus emulation platform with realistic constellation parameters. Experimental results demonstrate that Eunomia reduces request loss by up to 58.3%, control overhead by up to 50.3%, and algorithm execution time by 77.7%, significantly outperforming current state-of-the-art solutions.
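The controller-assignment step mentioned above relies on the Kuhn-Munkres (Hungarian) algorithm. The following is a minimal sketch of that kind of assignment, not Eunomia's implementation: the cost matrix, where `cost[d][c]` stands for the overhead of letting controller `c` manage domain `d`, is a hypothetical illustration, since the abstract does not define the actual cost.

```python
def kuhn_munkres(cost):
    """Minimum-cost assignment of rows to columns (requires rows <= columns).

    Returns (assignment, total), where assignment[row] is the chosen column.
    Standard O(n^2 * m) potential-based formulation with 1-based internals.
    """
    n, m = len(cost), len(cost[0])
    INF = float("inf")
    u = [0] * (n + 1)      # row potentials
    v = [0] * (m + 1)      # column potentials
    p = [0] * (m + 1)      # p[j] = row currently matched to column j (0 = free)
    way = [0] * (m + 1)    # back-pointers along the augmenting path
    for i in range(1, n + 1):
        p[0] = i
        j0 = 0
        minv = [INF] * (m + 1)
        used = [False] * (m + 1)
        while True:
            used[j0] = True
            i0, delta, j1 = p[j0], INF, 0
            for j in range(1, m + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]
                    if cur < minv[j]:
                        minv[j], way[j] = cur, j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            # Shift potentials so that at least one new edge becomes tight.
            for j in range(m + 1):
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:   # reached a free column: augmenting path found
                break
        # Flip the matching along the augmenting path.
        while j0 != 0:
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1
    assignment = [-1] * n
    for j in range(1, m + 1):
        if p[j] != 0:
            assignment[p[j] - 1] = j - 1
    total = sum(cost[i][assignment[i]] for i in range(n))
    return assignment, total


# Hypothetical overheads: 3 domains (rows) x 3 candidate controllers (columns).
example_cost = [[4, 1, 3],
                [2, 0, 5],
                [3, 2, 2]]
```

Calling `kuhn_munkres(example_cost)` pairs each domain with a distinct controller so that the summed overhead is minimal.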

Non-terrestrial networks are critical for achieving global 6G coverage, yet efficient resource management in aerial and space environments remains challenging due to limited onboard power and dynamic operational conditions. Network slicing offers a promising solution for spectrum optimization in UAV-based systems serving heterogeneous service demands. To this end, this paper proposes a hierarchical network slicing framework for UAV-satellite integrated networks supporting eMBB, URLLC, and mMTC services. Specifically, we formulate a joint optimization of UAV trajectory, transmission power, and spectrum allocation as a decentralized partially observable Markov decision process that ensures quality of service while minimizing energy consumption and maximizing resource fairness. To address the computational intractability and partial observability, we develop a multi-agent deep reinforcement learning solution under the centralized training and decentralized execution paradigm. In the proposed system, UAV agents act as distributed actors coordinated by a shared critic operating with a multi-head attention mechanism at a low Earth orbit satellite. Experimental results demonstrate that our approach outperforms existing methods by up to 33% in cumulative reward while achieving superior energy efficiency and fairness.

10 Dec 2025

Backpressure (BP) routing and scheduling is an established resource allocation method for wireless multi-hop networks, noted for its fully distributed operation and maximum queue stability. Recent advances in shortest path-biased BP routing (SP-BP) mitigate shortcomings such as slow startup and random walks, yet exclusive link-level commodity selection still causes the last-packet problem and bandwidth underutilization. By revisiting the Lyapunov drift theory underlying BP, we show that the legacy exclusive commodity selection is unnecessary, and propose a Maximum Utility (MaxU) link-sharing method to expand its performance envelope without increasing control message overhead. Numerical results show that MaxU SP-BP substantially mitigates the last-packet problem and slightly expands the network capacity region.
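For readers unfamiliar with the exclusive commodity selection that the abstract revisits, here is a minimal sketch of the classic textbook rule: each link carries only the commodity with the largest positive backlog differential. This is the legacy rule, not the proposed MaxU method, and the queue layout (`queues[node][commodity]`) is an assumption made for illustration.

```python
def select_commodity(queues, i, j):
    """Classic backpressure choice for the directed link i -> j.

    queues maps node -> {commodity: backlog}. Returns (commodity, weight),
    where weight is the largest positive backlog differential, or (None, 0)
    when no commodity has a positive differential (the link stays idle).
    """
    best_commodity, best_weight = None, 0
    for commodity, backlog_i in queues[i].items():
        differential = backlog_i - queues[j].get(commodity, 0)
        if differential > best_weight:
            best_commodity, best_weight = commodity, differential
    return best_commodity, best_weight


# Hypothetical backlogs for two nodes and two commodities.
example_queues = {
    "a": {"x": 5, "y": 9},
    "b": {"x": 1, "y": 8},
}
```

Because only one commodity wins the link, a commodity with a small remaining backlog can wait a long time behind larger ones, which is one way to picture the last-packet problem described above.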

High-Throughput Satellites (HTS) use beam hopping to handle non-uniform and time-varying ground traffic demand. A significant technical challenge in beam hopping is the computation of effective illumination patterns. Traditional algorithms, like the genetic algorithm, require over 300 seconds to compute a single illumination pattern for just 37 cells, whereas modern HTS typically covers over 300 cells, rendering current methods impractical for real-world applications. Advanced approaches, such as multi-agent deep reinforcement learning, face convergence issues when the number of cells exceeds 40. In this paper, we introduce Tyche, a hybrid computation framework designed to address this challenge. Tyche incorporates a Monte Carlo Tree Search Beam Hopping (MCTS-BH) algorithm for computing illumination patterns and employs sliding window and pruning techniques to significantly reduce computation time. Specifically, MCTS-BH can compute one illumination pattern for 37 cells in just 12 seconds. To ensure real-time computation, we use a Greedy Beam Hopping (G-BH) algorithm, which provides a provisional solution while MCTS-BH completes its computation in the background. Our evaluation results show that MCTS-BH can increase throughput by up to 98.76%, demonstrating substantial improvements over existing solutions.
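To make the idea of a provisional greedy illumination pattern concrete, the sketch below lights the cells with the largest queued demand in each time slot. It is a generic greedy heuristic under assumed parameters (`num_beams`, `beam_capacity`, per-slot `arrivals`), not the G-BH algorithm of Tyche, whose details the abstract does not give.

```python
def greedy_pattern(backlog, num_beams):
    """Pick up to num_beams cells with the largest positive backlog."""
    ranked = sorted(range(len(backlog)), key=lambda cell: (-backlog[cell], cell))
    return sorted(cell for cell in ranked[:num_beams] if backlog[cell] > 0)


def run_slots(backlog, arrivals, num_beams, beam_capacity, num_slots):
    """Simulate greedy beam hopping for num_slots time slots.

    Returns (patterns, served_total, final_backlog). Each lit cell is served
    up to beam_capacity units per slot; new demand arrives after service.
    """
    backlog = list(backlog)
    patterns, served_total = [], 0
    for _ in range(num_slots):
        lit = greedy_pattern(backlog, num_beams)
        for cell in lit:
            served = min(backlog[cell], beam_capacity)
            backlog[cell] -= served
            served_total += served
        for cell, arrival in enumerate(arrivals):
            backlog[cell] += arrival
        patterns.append(lit)
    return patterns, served_total, backlog
```

A search-based method such as MCTS-BH could then replace these greedy patterns once its background computation finishes, which matches the hybrid arrangement the abstract describes.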

As modern networks grow in scale and complexity, manual configuration becomes increasingly inefficient and prone to human error. While intent-driven self-configuration using large language models has shown significant promise, such models remain computationally expensive, resource-intensive, and often raise privacy concerns because they typically rely on external cloud infrastructure. This work introduces SLM_netconfig, a fine-tuned small language model framework that uses an agent-based architecture and parameter-efficient adaptation techniques to translate configuration intents expressed as natural language requirements or questions into syntactically and semantically valid network configurations. The system is trained on a domain-specific dataset generated through a pipeline derived from vendor documentation, ensuring strong alignment with real-world configuration practices. Extensive evaluation shows that SLM_netconfig, when using its question-to-configuration model, achieves higher syntactic accuracy and goal accuracy than LLM-NetCFG while substantially reducing translation latency and producing concise, interpretable configurations. These results demonstrate that fine-tuned small language models, as implemented in SLM_netconfig, can deliver efficient, accurate, and privacy-preserving automated configuration generation entirely on-premise, making them a practical and scalable solution for modern autonomous network configuration.

Efficient information exchange and reliable contextual reasoning are essential for vehicle-to-everything (V2X) networks. Conventional communication schemes often incur significant transmission overhead and latency, while existing trajectory prediction models generally lack environmental perception and logical inference capabilities. This paper presents a trajectory prediction framework that integrates semantic communication with Agentic AI to enhance predictive performance in vehicular environments. In vehicle-to-infrastructure (V2I) communication, a feature-extraction agent at the Roadside Unit (RSU) derives compact representations from historical vehicle trajectories, followed by semantic reasoning performed by a semantic-analysis agent. The RSU then transmits both feature representations and semantic insights to the target vehicle via semantic communication, enabling the vehicle to predict future trajectories by combining received semantics with its own historical data. In vehicle-to-vehicle (V2V) communication, each vehicle performs local feature extraction and semantic analysis while receiving predicted trajectories from neighboring vehicles, and jointly utilizes this information for its own trajectory prediction. Extensive experiments across diverse communication conditions demonstrate that the proposed method significantly outperforms baseline schemes, achieving up to a 47.5% improvement in prediction accuracy under low signal-to-noise ratio (SNR) conditions.

The convergence of the Internet of Things (IoT) and 5G technologies is transforming modern communication systems by enabling massive connectivity, low latency, and high-speed data transmission. In this evolving landscape, Content-Centric Networking (CCN) is emerging as a promising alternative to traditional Internet Protocol (IP)-based architectures. CCN offers advantages such as in-network caching, scalability, and efficient content dissemination, all of which are particularly well-suited to the constraints of the IoT. However, deploying content-centric approaches in 5G-based IoT environments introduces significant security challenges. Key concerns include content authentication, data integrity, privacy protection, and resilience against attacks such as spoofing and cache poisoning. Such issues are exacerbated by the distributed, mobile, and heterogeneous nature of IoT and 5G systems. In this survey, we review and classify existing security solutions for content-centric architectures in IoT-5G scenarios. We highlight current trends, identify limitations in existing approaches, and outline future research directions with a focus on lightweight and adaptive security mechanisms.

This paper investigates a reconfigurable intelligent surface (RIS)-assisted multi-waveguide pinching-antenna (PA) system (PASS) for multi-user downlink information transmission, motivated by the unknown impact of the integration of emerging PASS and RIS on wireless communications. First, we formulate sum rate (SR) and energy efficiency (EE) maximization problems in a unified framework, subject to constraints on the movable region of PAs, total power budget, and tunable phase of RIS elements. Then, by leveraging a graph-structured topology of the RIS-assisted PASS, a novel three-stage graph neural network (GNN) is proposed, which learns PA positions based on user locations, and RIS phase shifts according to composite channel conditions at the first two stages, respectively, and finally determines beamforming vectors. Specifically, the proposed GNN is achieved through unsupervised training, together with three implementation strategies for its integration with convex optimization, thus offering trade-offs between inference time and solution optimality. Extensive numerical results are provided to validate the effectiveness of the proposed GNN, and to support its unique attributes of viable generalization capability, good performance reliability, and real-time applicability. Moreover, the impact of key parameters on RIS-assisted PASS is illustrated and analyzed.

The accelerating expansion of AI workloads is colliding with an energy landscape increasingly dominated by intermittent renewable generation. While vast quantities of zero-carbon energy are routinely curtailed, today's centralized datacenter architectures remain poorly matched to this reality in both energy proportionality and geographic flexibility. This work envisions a shift toward a distributed fabric of renewable-powered micro-datacenters that dynamically follow the availability of surplus green energy through live workload migration.

At the core of this vision lies a formal feasibility-domain model that delineates when migratory AI computation is practically achievable. By explicitly linking checkpoint size, wide-area bandwidth, and renewable-window duration, the model reveals that migration is almost always energetically justified, and that time, not energy, is the dominant constraint shaping feasibility. This insight enables the design of a feasibility-aware orchestration framework that transforms migration from a best-effort heuristic into a principled control mechanism. Trace-driven evaluation shows that such orchestration can simultaneously reduce non-renewable energy use and improve performance stability, overcoming the tradeoffs of purely energy-driven strategies.

Beyond the immediate feasibility analysis, the extended version explores the architectural horizon of renewable-aware AI infrastructures. It examines the role of emerging ultra-efficient GPU-enabled edge platforms, anticipates integration with grid-level control and demand-response ecosystems, and outlines paths toward supporting partially migratable and distributed workloads. The work positions feasibility-aware migration as a foundational building block for a future computing paradigm in which AI execution becomes fluid, geographically adaptive, and aligned with renewable energy availability.
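The feasibility-domain model links checkpoint size, wide-area bandwidth, and renewable-window duration. The abstract does not state the model's equations, so the following is only a back-of-the-envelope sketch under a simple assumption: a migration is worthwhile when the checkpoint transfer leaves at least a chosen fraction of the renewable window for useful computation. The threshold `min_useful_fraction` is hypothetical.

```python
def transfer_seconds(checkpoint_bytes, bandwidth_bps):
    """Time to move a checkpoint over a link, ignoring protocol overhead."""
    return checkpoint_bytes * 8 / bandwidth_bps


def migration_feasible(checkpoint_bytes, bandwidth_bps, window_seconds,
                       min_useful_fraction=0.5):
    """Assumed rule: enough of the renewable window must remain after transfer."""
    useful_seconds = window_seconds - transfer_seconds(checkpoint_bytes, bandwidth_bps)
    return useful_seconds >= min_useful_fraction * window_seconds
```

For example, a 40 GB checkpoint over a 10 Gbit/s link takes 32 seconds, which is negligible within a one-hour window, whereas the same checkpoint over 100 Mbit/s takes 3200 seconds and consumes most of the window. This illustrates why time can be the binding constraint.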

The trust-based nature of Border Gateway Protocol (BGP) makes it vulnerable to disruptions like prefix hijacking and misconfigurations, threatening routing stability. Traditional detection relies on manual inspection with limited scalability. Machine/Deep Learning (M/DL) approaches automate detection but suffer from suboptimal precision, limited generalizability, and high retraining costs. This is because existing methods focus on topological structures rather than comprehensive semantic characteristics of Autonomous Systems (ASes), often misinterpreting functionally similar but topologically distant ASes.

To address this, we propose BGPShield, an anomaly detection framework built on LLM embeddings that captures the Behavior Portrait and Routing Policy Rationale of each AS beyond topology, such as operational scale and global role. We propose a segment-wise aggregation scheme to transform AS descriptions into LLM representations without information loss, and a lightweight contrastive reduction network to compress them into a semantic-consistent version. Using these representations, our AR-DTW algorithm aligns and accumulates semantic distances to reveal behavioral inconsistencies. Evaluated on 16 real-world datasets, BGPShield detects 100% of verified anomalies with a false discovery rate below 5%. Notably, the employed LLMs were released prior to evaluation events, verifying generalizability. Furthermore, BGPShield constructs representations for unseen ASes within one second, significantly outperforming BEAM which demands costly retraining (averaging 65 hours).
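The alignment-and-accumulation idea can be illustrated with classic dynamic time warping (DTW) over two sequences of embedding vectors. This sketch shows plain DTW with Euclidean distance only; the AR-DTW variant and the embedding pipeline used by BGPShield are not specified in the abstract, so nothing here should be read as the authors' algorithm.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def dtw_distance(seq_a, seq_b, dist=euclidean):
    """Accumulated distance along the cheapest monotone alignment."""
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    # cost[i][j] = best accumulated distance aligning seq_a[:i] with seq_b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = dist(seq_a[i - 1], seq_b[j - 1])
            cost[i][j] = step + min(cost[i - 1][j],      # seq_a advances
                                    cost[i][j - 1],      # seq_b advances
                                    cost[i - 1][j - 1])  # both advance
    return cost[n][m]
```

In this toy setting, two AS paths whose per-hop vectors differ only by a repeated element align at zero cost, while a path that passes through a semantically distant AS accumulates a large distance.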

Wireless communication is evolving with the adoption of dynamic and self-organizing networks. They are expected to play a crucial role in shaping sixth-generation (6G) systems and the ongoing standardization process. The concept of non-public networks (NPNs) introduced in fifth-generation (5G) will be enhanced by nomadic non-public networks (NNPNs), extending mobility and adaptability beyond fixed locations. These networks help overcome the limitations of traditional static infrastructures, making them applicable to areas such as emergency response, transportation, agriculture, and others. This paper examines the transition from NPNs to NNPNs, highlighting key technical aspects such as network architecture, dynamic resource allocation, and wireless backhauling. Several use cases illustrate how NNPNs improve connectivity in environments where traditional networks are limited. Additionally, the study defines Key Performance Indicators (KPIs) to evaluate NNPN applications and establishes a framework for categorizing them based on mobility and operational requirements. Despite their advantages, NNPNs introduce architectural, regulatory, and security challenges such as new approaches for handovers, spectrum policies or cross-border functionality, and trust mechanisms to maintain reliable operations. By identifying use cases, defining evaluation criteria, and addressing technical and regulatory challenges, this paper provides insights into integrating NNPNs into future 6G networks. These findings contribute to ongoing standardization efforts and emphasize the need for adaptable policies and network architectures to maximize the benefits of NNPNs in next-generation communication systems.

Modern networks support network slicing, which partitions physical infrastructure into virtual slices tailored to different service requirements (for example, high bandwidth or low latency). Optimally allocating users to slices is a constrained optimization problem that traditionally requires complex algorithms. In this paper, we explore the use of Large Language Models (LLMs) to tackle radio resource allocation for network slicing. We focus on two approaches: (1) using an LLM in a zero-shot setting to directly assign user service requests to slices, and (2) formulating an integer programming model where the LLM provides semantic insight by estimating similarity between requests. Our experiments show that an LLM, even with zero-shot prompting, can produce a reasonable first draft of slice assignments, although it may violate some capacity or latency constraints. We then incorporate the LLM's understanding of service requirements into an optimization solver to generate an improved allocation. The results demonstrate that LLM-guided grouping of requests, based on minimal textual input, achieves performance comparable to traditional methods that use detailed numerical data, in terms of resource utilization and slice isolation. While the LLM alone does not perfectly satisfy all constraints, it significantly reduces the search space and, when combined with exact solvers, provides a promising approach for efficient 5G network slicing resource allocation.
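Since the abstract notes that a zero-shot LLM draft may violate capacity or latency constraints, a small checker is a natural companion to such a draft. The sketch below assumes hypothetical field names (`bandwidth_mbps`, `max_latency_ms`, `capacity_mbps`, `latency_ms`); the paper's actual data model is not given.

```python
def find_violations(requests, slices, assignment):
    """List constraint violations in a request -> slice assignment.

    Returns tuples (request_id, slice_name, kind), where kind is "latency"
    for a single request or "capacity" for an overloaded slice (in which
    case request_id is None).
    """
    load = {name: 0.0 for name in slices}
    violations = []
    for request_id, slice_name in assignment.items():
        request, target = requests[request_id], slices[slice_name]
        load[slice_name] += request["bandwidth_mbps"]
        if target["latency_ms"] > request["max_latency_ms"]:
            violations.append((request_id, slice_name, "latency"))
    for name, used in load.items():
        if used > slices[name]["capacity_mbps"]:
            violations.append((None, name, "capacity"))
    return violations


# Hypothetical example data.
example_slices = {
    "embb": {"capacity_mbps": 100, "latency_ms": 50},
    "urllc": {"capacity_mbps": 20, "latency_ms": 5},
}
example_requests = {
    "video": {"bandwidth_mbps": 80, "max_latency_ms": 100},
    "robot": {"bandwidth_mbps": 10, "max_latency_ms": 10},
    "backup": {"bandwidth_mbps": 30, "max_latency_ms": 500},
}
```

Violations reported by such a check could be handed to an exact solver as the constraints that the draft assignment must be repaired against.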

04 Nov 2025

Geospatial decentralization is essential for blockchains, ensuring regulatory resilience, robustness, and fairness. We empirically analyze five major Proof of Stake (PoS) blockchains: Aptos, Avalanche, Ethereum, Solana, and Sui, revealing that a few geographic regions dominate consensus voting power, resulting in limited geospatial decentralization. To address this, we propose Geospatially aware Proof of Stake (GPoS), which integrates geospatial diversity with stake-based voting power. Experimental evaluation demonstrates an average 45% improvement in geospatial decentralization, as measured by the Gini coefficient of Eigenvector centrality, while incurring minimal performance overhead in BFT protocols, including HotStuff and CometBFT. These results demonstrate that GPoS can improve geospatial decentralization while, in our experiments, incurring minimal overhead to consensus performance.
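The decentralization metric above is a Gini coefficient. As a minimal sketch, the function below computes the standard Gini coefficient of any list of non-negative values; in the paper it is applied to eigenvector centrality scores, whereas the inputs used here are purely illustrative.

```python
def gini(values):
    """Gini coefficient via the mean absolute difference.

    0.0 means all values are equal; values approaching 1.0 mean that a
    single entry holds nearly everything.
    """
    n = len(values)
    total = sum(values)
    if n == 0 or total == 0:
        return 0.0
    diff_sum = sum(abs(a - b) for a in values for b in values)
    return diff_sum / (2 * n * total)
```

For four regions with equal weight the coefficient is 0, while a distribution in which one of four regions holds everything yields 0.75, so a lower value indicates better decentralization.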

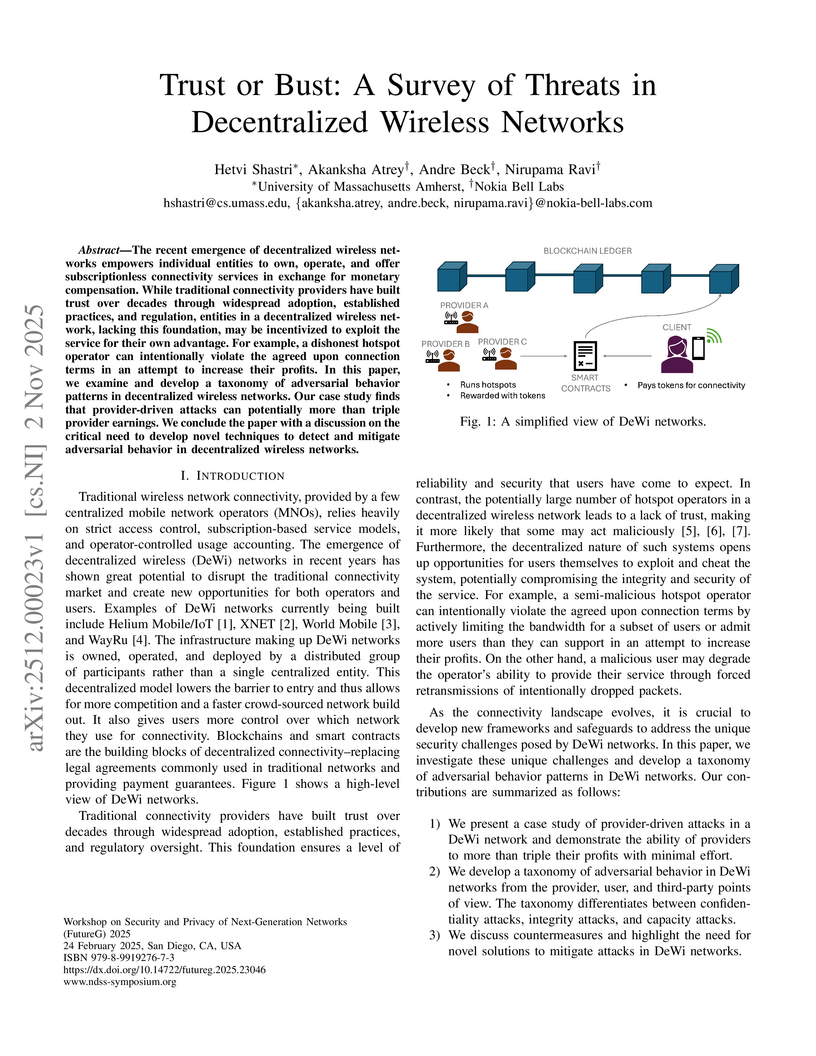

The recent emergence of decentralized wireless networks empowers individual entities to own, operate, and offer subscriptionless connectivity services in exchange for monetary compensation. While traditional connectivity providers have built trust over decades through widespread adoption, established practices, and regulation, entities in a decentralized wireless network, lacking this foundation, may be incentivized to exploit the service for their own advantage. For example, a dishonest hotspot operator can intentionally violate the agreed upon connection terms in an attempt to increase their profits. In this paper, we examine and develop a taxonomy of adversarial behavior patterns in decentralized wireless networks. Our case study finds that provider-driven attacks can potentially more than triple provider earnings. We conclude the paper with a discussion on the critical need to develop novel techniques to detect and mitigate adversarial behavior in decentralized wireless networks.

Meta developed NCCLX, a high-performance collective communication framework, to enable training and inference of large language models like Llama4 on clusters exceeding 100,000 GPUs. NCCLX reduces Llama4 training step latency by up to 12% and inference decoding latency by 15-80% for MoE models, while accelerating startup by 11x at 96K GPUs and nearly halving GPU HBM usage compared to existing solutions.

Next-generation (NextG) cellular networks are expected to manage dynamic traffic while sustaining high performance. Large language models (LLMs) provide strategic reasoning for 6G planning, but their computational cost and latency limit real-time use. Multi-agent reinforcement learning (MARL) supports localized adaptation, yet coordination at scale remains challenging. We present AURA, a framework that integrates cloud-based LLMs for high-level planning with base stations modeled as MARL agents for local decision-making. The LLM generates objectives and subgoals from its understanding of the environment and reasoning capabilities, while agents at base stations execute these objectives autonomously, guided by a trust mechanism that balances local learning with external input. To reduce latency, AURA employs batched communication so that agents update the LLM's view of the environment and receive improved feedback. In a simulated 6G scenario, AURA improves resilience, reducing dropped handoff requests by more than half under normal and high traffic and lowering system failures. Agents use LLM input in fewer than 60% of cases, showing that guidance augments rather than replaces local adaptability, thereby mitigating latency and hallucination risks. These results highlight the promise of combining LLM reasoning with MARL adaptability for scalable, real-time NextG network management.

In recent years, significant research efforts have focused on improving blockchain throughput and confirmation speeds without compromising security. While decreasing the time it takes for a transaction to be included in the blockchain ledger enhances user experience, a fundamental delay still remains between when a transaction is issued by a user and when its inclusion is confirmed in the blockchain ledger. This delay limits user experience gains through the confirmation uncertainty it brings for users. This inherent delay in conventional blockchain protocols has led to the emergence of preconfirmation protocols -- protocols that provide users with early guarantees of eventual transaction confirmation.

This article presents a Systematization of Knowledge (SoK) on preconfirmations. We present the core terms and definitions needed to understand preconfirmations, outline a general framework for preconfirmation protocols, and explore the economics and risks of preconfirmations. Finally, we survey and apply our framework to several implementations of real-world preconfirmation protocols, bridging the gap between theory and practice.

While simulators exist for vehicular IoT nodes communicating with the Cloud through Edge nodes in a fully-simulated osmotic architecture, they often lack support for dynamic agent planning and optimisation to minimise vehicular battery consumption while ensuring fair communication times. Addressing these challenges requires extending current simulator architectures with AI algorithms for both traffic prediction and dynamic agent planning. This paper presents an extension of SimulatorOrchestrator (SO) to meet these requirements. Preliminary results over a realistic urban dataset show that utilising vehicular planning algorithms can lead to improved battery and QoS performance compared with traditional shortest path algorithms. The additional inclusion of desirability areas enabled more ambulances to be routed to their target destinations while utilising less energy to do so, compared to traditional and weighted algorithms without desirability considerations.

We introduce the concept of 1Q, the first wireless generation of integrated classical and quantum communication. 1Q features quantum base stations (QBSs) that support entanglement distribution via free-space optical links alongside traditional radio communications. Key new components include quantum cells, quantum user equipment (QUEs), and hybrid resource allocation spanning classical time-frequency and quantum entanglement domains. Several application scenarios are discussed and illustrated through system design requirements for quantum key distribution, blind quantum computing, and distributed quantum sensing. A range of unique quantum constraints are identified, including decoherence timing, fidelity requirements, and the interplay between quantum and classical error probabilities. Protocol adaptations extend cellular connection management to incorporate entanglement generation, distribution, and handover procedures, expanding the Quantum Internet to the cellular wireless.

Quantum networks rely on high-fidelity entangled pairs distributed to nodes, but maintaining their fidelity is challenged by environmental decoherence during storage. Entanglement purification is used to restore fidelity, but the idle periods imposed by the associated classical communication delays counteract this goal by exposing the states to further decoherence. In this work, we analyze the practical viability of entanglement purification protocols (BBPSSW, DEJMPS) under non-instantaneous classical coordination over Internet Protocol (IP) communications networks. We present a comprehensive performance evaluation of these protocols in various network conditions for a range of quantum memory technologies. We employ a microscopic Lindblad treatment of the underlying quantum dynamics, and use current-generation metropolitan IP network latency statistics and parameters drawn from quantum memory testbeds. In doing so, we identify the regions in which entanglement purification succeeds and fails, delineated by break-even iso-fidelity contours in the phase space. We then determine the total number of entangled pairs required to complete a multi-round purification protocol, and the steady-state throughput of entangled pairs with purified fidelities that exceed application-specific thresholds. This provides latency budgets, memory quality targets, and resource-overhead estimates for deploying purification on current and near-future networks.
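The break-even idea can be sketched with the well-known BBPSSW recurrence for Werner states combined with a deliberately simplified memory model. The exponential decay toward fidelity 1/4 with time constant `memory_time` is an assumption made here for illustration; it stands in for, and is much cruder than, the Lindblad treatment used in the paper.

```python
import math


def bbpssw_step(fidelity):
    """One BBPSSW round on two Werner pairs of equal fidelity.

    Returns (output_fidelity, success_probability).
    """
    f = fidelity
    g = (1.0 - f) / 3.0                      # weight of each other Bell state
    success = f * f + 2.0 * f * g + 5.0 * g * g
    return (f * f + g * g) / success, success


def decay(fidelity, idle_time, memory_time):
    """Assumed depolarizing memory: fidelity relaxes toward 1/4."""
    return 0.25 + (fidelity - 0.25) * math.exp(-idle_time / memory_time)


def round_with_delay(fidelity, classical_delay, memory_time):
    """Purify, then hold the kept pair while measurement results travel."""
    purified, _ = bbpssw_step(fidelity)
    return decay(purified, classical_delay, memory_time)
```

With zero delay a pair at fidelity 0.8 improves to roughly 0.838, but once the classical delay is long compared with the memory time the output falls below the input, which is the failure region that a break-even contour would separate from the region where purification pays off.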
