Zhejiang Key Laboratory of Visual Information Intelligent Processing

Region representation learning plays a pivotal role in urban computing by extracting meaningful features from unlabeled urban data. Analogous to how perceived facial age reflects an individual's health, the visual appearance of a city serves as its "portrait", encapsulating latent socio-economic and environmental characteristics. Recent studies have explored leveraging Large Language Models (LLMs) to incorporate textual knowledge into imagery-based urban region representation learning. However, two major challenges remain: i) difficulty in aligning fine-grained visual features with long captions, and ii) suboptimal knowledge incorporation due to noise in LLM-generated captions. To address these issues, we propose a novel pre-training framework called UrbanLN that improves Urban region representation learning through Long-text awareness and Noise suppression. Specifically, we introduce an information-preserved stretching interpolation strategy that aligns long captions with fine-grained visual semantics in complex urban scenes. To effectively mine knowledge from LLM-generated captions and filter out noise, we propose a dual-level optimization strategy. At the data level, a multi-model collaboration pipeline automatically generates diverse and reliable captions without human intervention. At the model level, we employ a momentum-based self-distillation mechanism to generate stable pseudo-targets, facilitating robust cross-modal learning under noisy conditions. Extensive experiments across four real-world cities and various downstream tasks demonstrate the superior performance of our UrbanLN.
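The momentum-based self-distillation idea mentioned above can be illustrated with a minimal sketch. The abstract does not give UrbanLN's actual formulation, so this assumes a common recipe: a teacher whose weights are an exponential moving average (EMA) of the student's, with the teacher's softened prediction blended into the hard label to form a pseudo-target. The names `ema_update`, `soft_targets`, `momentum`, and `alpha` are hypothetical and chosen only for illustration.

```python
import math

def softmax(logits):
    # Numerically stable softmax over a list of floats.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def ema_update(teacher, student, momentum=0.995):
    # Move each teacher weight a small step toward the student weight.
    return [momentum * t + (1.0 - momentum) * s for t, s in zip(teacher, student)]

def soft_targets(teacher_logits, hard_label, alpha=0.4):
    # Assumed blending rule: alpha * teacher distribution + (1 - alpha) * one-hot.
    probs = softmax(teacher_logits)
    return [alpha * p + (1.0 - alpha) * (1.0 if i == hard_label else 0.0)
            for i, p in enumerate(probs)]

def cross_entropy(student_logits, targets):
    # Cross-entropy between the student's distribution and the soft targets.
    probs = softmax(student_logits)
    return -sum(t * math.log(p) for t, p in zip(targets, probs))

# Toy usage: one EMA step, then a loss against the blended pseudo-target.
teacher = ema_update([0.0, 0.0], [1.0, -1.0], momentum=0.9)
targets = soft_targets([2.0, 1.0, 0.0], hard_label=0)
loss = cross_entropy([1.5, 0.5, 0.0], targets)
```

Because the teacher changes slowly, its predictions are steadier than the student's, which is the usual motivation for using them as pseudo-targets when the captions (and therefore the hard labels) may be noisy.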

Next activity prediction is a fundamental challenge in optimizing business processes in service-oriented architectures such as microservices environments, distributed enterprise systems, and cloud-native platforms, where it enables proactive resource allocation and dynamic service composition. Although sequence-based methods are prevalent, they fail to capture non-sequential relationships that arise from parallel executions and conditional dependencies. Graph-based approaches preserve structure, but they suffer from homogeneous representations and static structures that apply uniform modeling strategies regardless of the complexity of each individual process. To address these limitations, we introduce RLHGNN, a novel framework that transforms event logs into heterogeneous process graphs with three distinct edge types grounded in established process mining theory. Our approach creates four flexible graph structures by selectively combining these edges to accommodate different process complexities, and employs reinforcement learning formulated as a Markov Decision Process to automatically determine the optimal graph structure for each specific process instance. RLHGNN then applies heterogeneous graph convolution with relation-specific aggregation strategies to effectively predict the next activity. This adaptive methodology enables precise modeling of both sequential and non-sequential relationships in service interactions. Comprehensive evaluation on six real-world datasets demonstrates that RLHGNN consistently outperforms state-of-the-art approaches. Furthermore, it maintains an inference latency of approximately 1 ms per prediction, making it a practical solution for real-time business process monitoring applications. The source code is available at this https URL.
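To make the idea of typed edges derived from an event log concrete, here is a small sketch. The abstract does not name RLHGNN's three edge types, so this is not the paper's construction; it assumes the classic footprint relations from the alpha miner in process mining, where `a` directly followed by `b` is "causal" if the reverse order never occurs and "parallel" if both orders occur. The function name `footprint_edges` is hypothetical.

```python
def footprint_edges(traces):
    # Collect every directly-follows pair (a, b) observed in any trace.
    follows = set()
    for trace in traces:
        follows.update(zip(trace, trace[1:]))

    # Split pairs into two edge types using the alpha-miner footprint rules.
    edges = {"causal": set(), "parallel": set()}
    for a, b in follows:
        if (b, a) in follows:
            # Both orders observed: treat as an undirected parallel edge.
            edges["parallel"].add(tuple(sorted((a, b))))
        else:
            # Only one order observed: treat as a directed causal edge.
            edges["causal"].add((a, b))
    return edges

# Toy event log: B and C are executed in either order between A and D.
log = [["A", "B", "C", "D"], ["A", "C", "B", "D"]]
edges = footprint_edges(log)
```

On this toy log, B and C end up connected by a parallel edge while the remaining pairs are causal, which is the kind of non-sequential relationship a purely sequence-based model would not represent explicitly.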

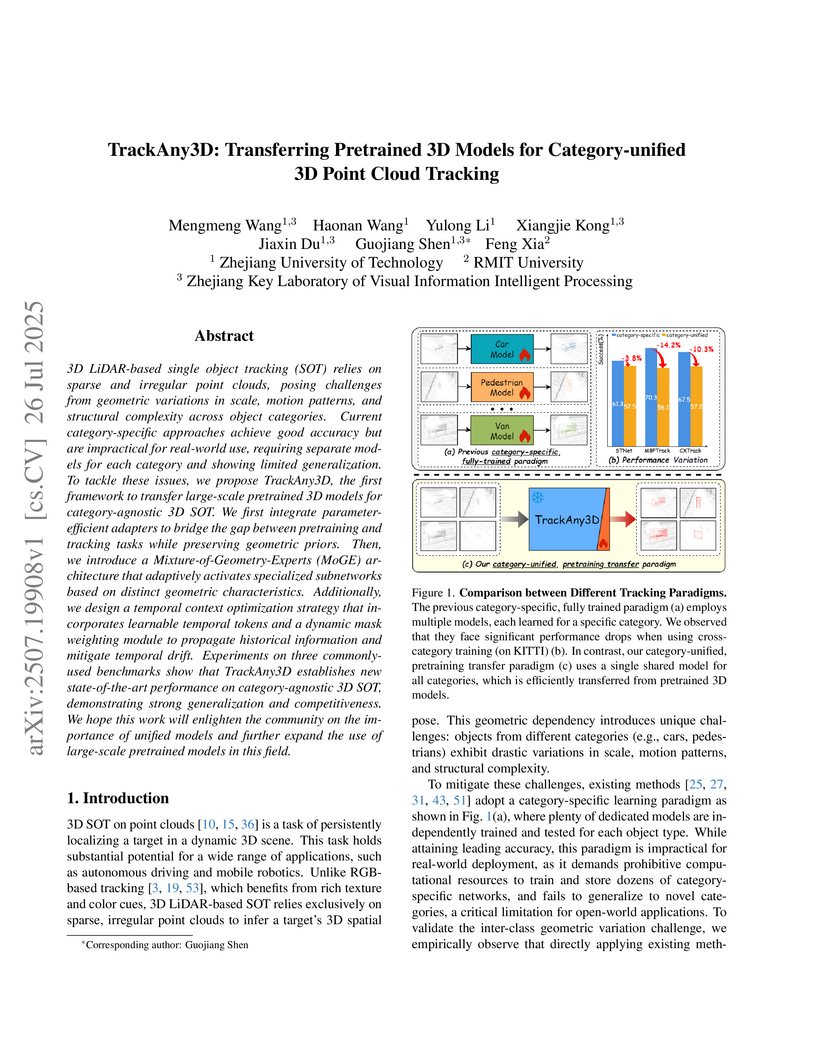

3D LiDAR-based single object tracking (SOT) relies on sparse and irregular point clouds, posing challenges from geometric variations in scale, motion patterns, and structural complexity across object categories. Current category-specific approaches achieve good accuracy but are impractical for real-world use, requiring separate models for each category and showing limited generalization. To tackle these issues, we propose TrackAny3D, the first framework to transfer large-scale pretrained 3D models for category-agnostic 3D SOT. We first integrate parameter-efficient adapters to bridge the gap between pretraining and tracking tasks while preserving geometric priors. Then, we introduce a Mixture-of-Geometry-Experts (MoGE) architecture that adaptively activates specialized subnetworks based on distinct geometric characteristics. Additionally, we design a temporal context optimization strategy that incorporates learnable temporal tokens and a dynamic mask weighting module to propagate historical information and mitigate temporal drift. Experiments on three commonly used benchmarks show that TrackAny3D establishes new state-of-the-art performance on category-agnostic 3D SOT, demonstrating strong generalization and competitiveness. We hope this work will draw the community's attention to the importance of unified models and further expand the use of large-scale pretrained models in this field.
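The adaptive activation of specialized subnetworks described above resembles a generic mixture-of-experts layer, sketched here under stated assumptions. The abstract does not specify how MoGE routes inputs, so this assumes ordinary top-k softmax gating; in a real model the gate logits would come from a learned router applied to the input features, whereas here they are passed in directly. The names `top_k_gate` and `mixture_forward` are hypothetical.

```python
import math

def softmax(logits):
    # Numerically stable softmax over a list of floats.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_gate(gate_logits, k=2):
    # Keep the k highest-scoring experts and renormalize their weights.
    top = sorted(range(len(gate_logits)),
                 key=lambda i: gate_logits[i], reverse=True)[:k]
    weights = softmax([gate_logits[i] for i in top])
    return dict(zip(top, weights))

def mixture_forward(x, experts, gate_logits, k=2):
    # Weighted sum of the selected experts' outputs; unselected experts
    # are never evaluated. Assumes each expert preserves the input length.
    gate = top_k_gate(gate_logits, k)
    out = [0.0] * len(x)
    for idx, w in gate.items():
        y = experts[idx](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out

# Toy experts standing in for geometry-specific subnetworks.
experts = [
    lambda x: [2.0 * v for v in x],
    lambda x: [v + 1.0 for v in x],
    lambda x: [-v for v in x],
]
out = mixture_forward([1.0, 2.0], experts, gate_logits=[0.0, 0.0, -5.0], k=2)
```

With these gate logits the first two experts are selected with equal weight and the third is skipped, so the output is the average of their two results.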

Tropical Cyclone (TC) estimation aims to accurately estimate various TC attributes in real time. However, distribution shifts arising from the complex and dynamic nature of TC environmental fields, such as varying geographical conditions and seasonal changes, present significant challenges to reliable estimation. Most existing methods rely on multi-modal fusion for feature extraction but overlook the intrinsic distribution of feature representations, leading to poor generalization under out-of-distribution (OOD) scenarios. To address this, we propose an effective Identity Distribution-Oriented Physical Invariant Learning framework (IDOL), which imposes identity-oriented constraints to regulate the feature space under the guidance of prior physical knowledge, thereby addressing distribution variability through physical invariance. Specifically, the proposed IDOL employs the wind field model and dark correlation knowledge of TC to model task-shared and task-specific identity tokens. These tokens capture task dependencies and intrinsic physical invariances of TC, enabling robust estimation of TC wind speed, pressure, inner-core size, and outer-core size under distribution shifts. Extensive experiments conducted on multiple datasets and tasks demonstrate the superior performance of the proposed IDOL, verifying that imposing identity-oriented constraints based on prior physical knowledge can effectively mitigate diverse distribution shifts in TC estimation. Code is available at this https URL.
