formal-languages-and-automata-theory

We study the unambiguisability problem for min-plus (tropical) weighted automata (WFAs), and the counter-minimisation problem for tropical Cost Register Automata (CRAs), which are expressively-equivalent to WFAs. Both problems ask whether the "amount of nondeterminism" in the model can be reduced. We show that WFA unambiguisability is decidable, thus resolving this long-standing open problem. Our proof is via reduction to WFA determinisability, which was recently shown to be decidable. On the negative side, we show that CRA counter minimisation is undecidable, even for a fixed number of registers (specifically, already for 7 registers).

We investigate the connection between properties of formal languages and properties of their generating series, with a focus on the class of holonomic power series. We first prove a strong version of a conjecture by Castiglione and Massazza: weakly-unambiguous Parikh automata are equivalent to unambiguous two-way reversal bounded counter machines, and their multivariate generating series are holonomic. We then show that the converse is not true: we construct a language whose generating series is algebraic (thus holonomic), but which is inherently weakly-ambiguous as a Parikh automata language. Finally, we prove an effective decidability result for the inclusion problem for weakly-unambiguous Parikh automata, and provide an upper-bound on to its complexity.

The idea of using Formal Verification tools with large language models (LLMs) has enabled scaling software verification beyond manual workflows. However, current methods remain unreliable. Without a solid theoretical footing, the refinement process can wander; sometimes it settles, sometimes it loops back, and sometimes it breaks away from any stable trajectory. This work bridges this critical gap by developing an LLM-Verifier Convergence Theorem, providing the first formal framework with provable guarantees for termination and convergence. We model the interaction between the LLM and the verifier as a discrete-time Markov Chain, with state transitions determined by a key parameter: the error-reduction probability (δ). The procedure reaching the Verified state almost surely demonstrates that the program terminates for any δ>0, with an expected iteration count bounded by E[n]≤4/δ. We then stress-tested this prediction in an extensive empirical campaign comprising more than 90,000 trials. The empirical results match the theory with striking consistency. Every single run reached verification, and the convergence factor clustered tightly around Cf≈ 1.0. Consequently, the bound mirrors the system's actual behavior. The evidence is sufficiently robust to support dividing the workflow into three distinct operating zones: marginal, practical, and high-performance. Consequently, we establish the design thresholds with absolute confidence. Together, the theoretical guarantee and the experimental evidence provide a clearer architectural foundation for LLM-assisted verification. Heuristic tuning no longer has to be carried out by the system. Engineers gain a framework that supports predictable resource planning and performance budgeting, precisely what is needed before deploying these pipelines into safety-critical software environments.

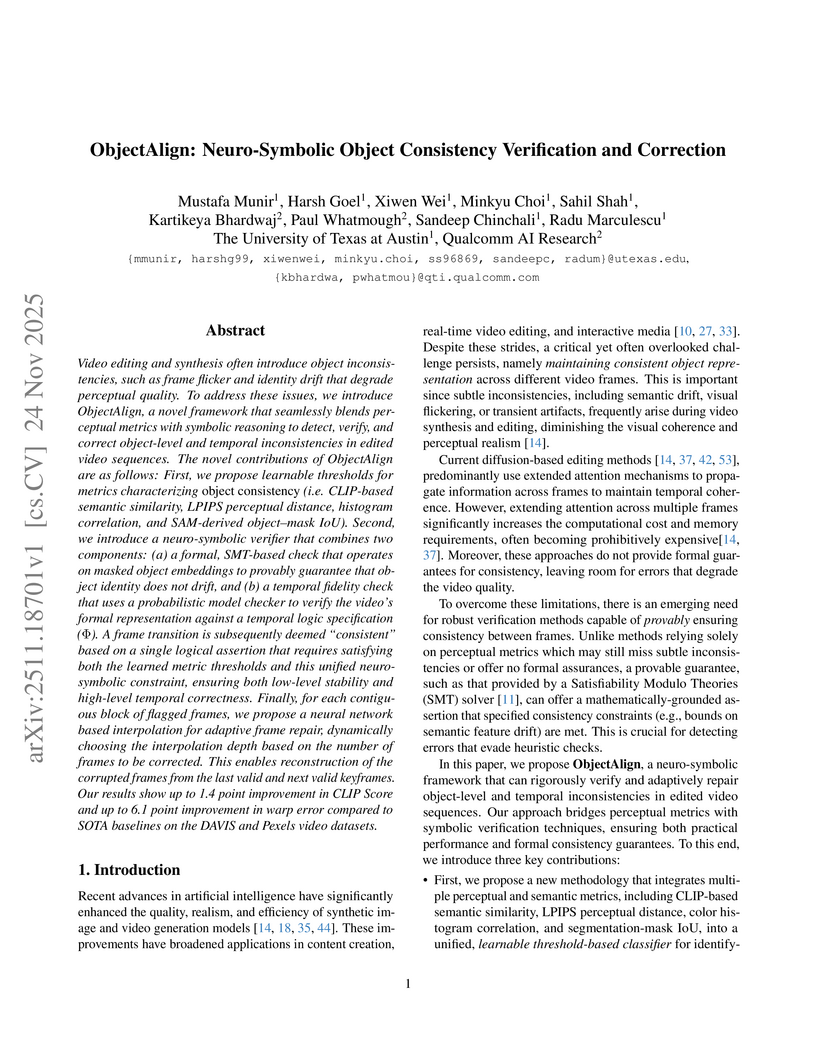

Video editing and synthesis often introduce object inconsistencies, such as frame flicker and identity drift that degrade perceptual quality. To address these issues, we introduce ObjectAlign, a novel framework that seamlessly blends perceptual metrics with symbolic reasoning to detect, verify, and correct object-level and temporal inconsistencies in edited video sequences. The novel contributions of ObjectAlign are as follows: First, we propose learnable thresholds for metrics characterizing object consistency (i.e. CLIP-based semantic similarity, LPIPS perceptual distance, histogram correlation, and SAM-derived object-mask IoU). Second, we introduce a neuro-symbolic verifier that combines two components: (a) a formal, SMT-based check that operates on masked object embeddings to provably guarantee that object identity does not drift, and (b) a temporal fidelity check that uses a probabilistic model checker to verify the video's formal representation against a temporal logic specification. A frame transition is subsequently deemed "consistent" based on a single logical assertion that requires satisfying both the learned metric thresholds and this unified neuro-symbolic constraint, ensuring both low-level stability and high-level temporal correctness. Finally, for each contiguous block of flagged frames, we propose a neural network based interpolation for adaptive frame repair, dynamically choosing the interpolation depth based on the number of frames to be corrected. This enables reconstruction of the corrupted frames from the last valid and next valid keyframes. Our results show up to 1.4 point improvement in CLIP Score and up to 6.1 point improvement in warp error compared to SOTA baselines on the DAVIS and Pexels video datasets.

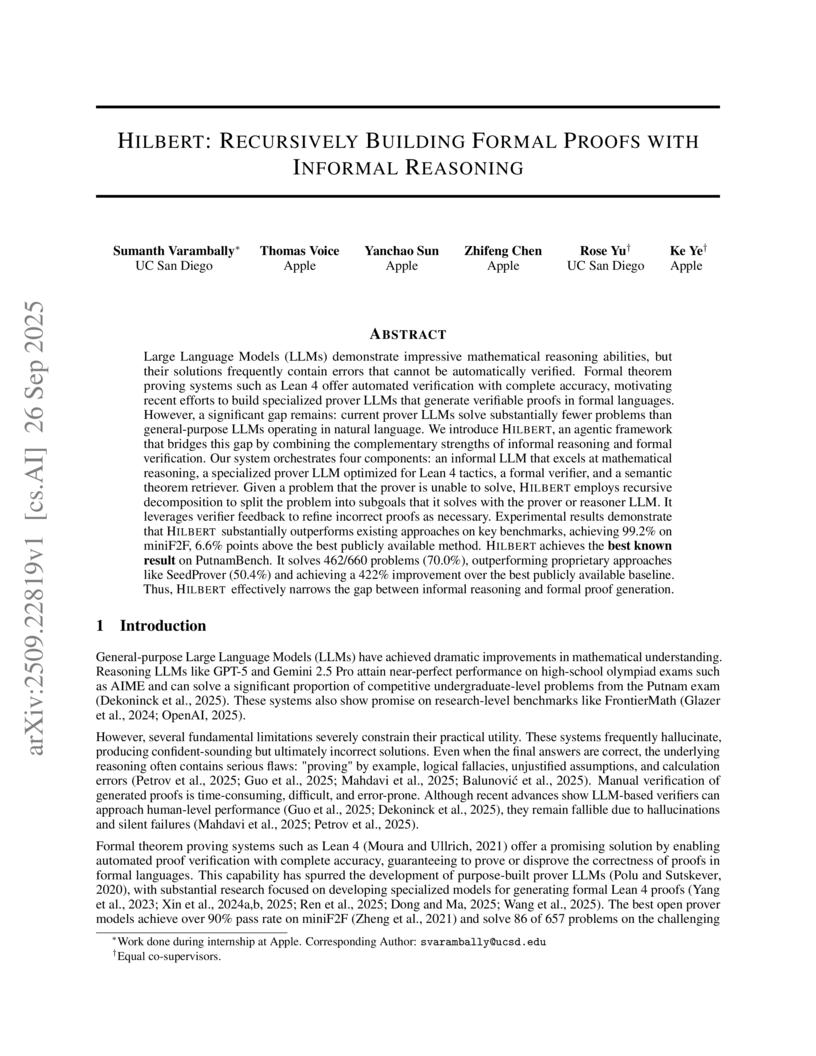

HILBERT presents a multi-turn agentic framework that recursively decomposes complex mathematical problems to generate formal Lean 4 proofs, effectively combining the strategic reasoning of general-purpose LLMs with the precision of formal provers. The system achieves a 99.2% pass rate on MiniF2F and a 70.0% pass rate on PutnamBench, significantly surpassing existing state-of-the-art methods by up to 20 percentage points on PutnamBench while constructing proofs of unprecedented length.

Counting properties (e.g. determining whether certain tokens occur more than other tokens in a given input text) have played a significant role in the study of expressiveness of transformers. In this paper, we provide a formal framework for investigating the counting power of transformers. We argue that all existing results demonstrate transformers' expressivity only for (semi-)linear counting properties, i.e., which are expressible as a boolean combination of linear inequalities. Our main result is that transformers can express counting properties that are highly nonlinear. More precisely, we prove that transformers can capture all semialgebraic counting properties, i.e., expressible as a boolean combination of arbitrary multivariate polynomials (of any degree). Among others, these generalize the counting properties that can be captured by C-RASP softmax transformers, which capture only linear counting properties. To complement this result, we exhibit a natural subclass of (softmax) transformers that completely characterizes semialgebraic counting properties. Through connections with the Hilbert's tenth problem, this expressivity of transformers also yields a new undecidability result for analyzing an extremely simple transformer model -- surprisingly with neither positional encodings (i.e. NoPE-transformers) nor masking. We also experimentally validate trainability of such counting properties.

We present an efficient algorithm for checking language equivalence of states

in top-down deterministic finite tree automata (DFTAs). Unlike string automata,

tree automata operate over hierarchical structures, posing unique challenges

for algorithmic analysis. Our approach reduces the equivalence checking problem

to that of checking the solvability of a system of language-theoretic

equations, which specify the behavior of a DFTA. By constructing such a system

of equations and systematically manipulating with it through substitution and

conflict detection rules, we develop a decision procedure that determines

whether two states accept the same tree language. We formally prove the

correctness and termination of the algorithm and establish its worst-case time

complexity as O(n2) under the RAM (Random Access Machine) model of

computation augmented with pointers.

Linear Recurrent Neural Networks (linear RNNs) have emerged as competitive alternatives to Transformers for sequence modeling, offering efficient training and linear-time inference. However, existing architectures face a fundamental trade-off between expressivity and efficiency, dictated by the structure of their state-transition matrices. Diagonal matrices, used in models such as Mamba, GLA, or mLSTM, yield fast runtime but have limited expressivity. To address this, recent architectures such as DeltaNet and RWKV-7 adopted a diagonal plus rank--1 structure, which allows simultaneous token and channel mixing, improving associative recall and, as recently shown, state-tracking when allowing state-transition matrices to have negative eigenvalues. Building on the interpretation of DeltaNet's recurrence as performing one step of online gradient descent per token on an associative recall loss, we introduce DeltaProduct, which instead takes multiple (nh) steps per token. This naturally leads to diagonal plus rank--nh state-transition matrices, formed as products of nh generalized Householder transformations, providing a tunable mechanism to balance expressivity and efficiency. We provide a detailed theoretical characterization of the state-tracking capability of DeltaProduct in finite precision, showing how it improves by increasing nh. Our extensive experiments demonstrate that DeltaProduct outperforms DeltaNet in both state-tracking and language modeling, while also showing significantly improved length extrapolation capabilities.

We show that for any i > 0, it is decidable, given a regular language, whether it is expressible in the \Sigma_i[<] fragment of first-order logic FO[<]. This settles a question open since 1971.

Our main technical result relies on the notion of polynomial closure of a class of languages V, that is, finite unions of languages of the form L0a1L1⋯anLn where each ai is a letter and each Li a language of V. We show that if a class V of regular languages with some closure properties (namely, a positive variety) has a decidable separation problem, then so does its polynomial closure Pol(V). The resulting algorithm for Pol(V) has time complexity that is exponential in the time complexity for V and we propose a natural conjecture that would lead to a polynomial time blowup instead.

Corollaries include the decidability of half levels of the dot-depth hierarchy and the group-based concatenation hierarchy.

In this paper, different variants of reversible finite automata are compared, and their hierarchy by the expressive power is established. It is shown that one-way reversible automata with multiple initial states (MRFA) recognize strictly more languages than sweeping reversible automata (sRFA), which are in turn stronger than one-way reversible automata with a single initial state (1RFA). The latter recognize strictly more languages than one-way permutation automata (1PerFA). It is also shown that the hierarchy of sRFA by the number of passes over the input string collapses: it turns out that three passes are always enough. On the other hand, MRFA form a hierarchy by the number of initial states: their subclass with at most k initial states (MRFAk) recognize strictly fewer languages than MRFAk+1, and also MRFAk are incomparable with sRFA. In the unary case, sRFA, MRFAk and MRFA become equal in their expressive power, and the inclusion of 1RFA into sRFA remains proper.

Language models (LMs) are often expected to generate strings in some formal

language; for example, structured data, API calls, or code snippets. Although

LMs can be tuned to improve their adherence to formal syntax, this does not

guarantee conformance, especially with smaller LMs suitable for large-scale

deployment. In addition, tuning requires significant resources, making it

impractical for uncommon or task-specific formats. To prevent downstream

parsing errors we would ideally constrain the LM to only produce valid output,

but this is severely complicated by tokenization, which is typically both

ambiguous and misaligned with the formal grammar. We solve these issues through

the application of automata theory, deriving an efficient closed-form solution

for the regular languages, a broad class of formal languages with many

practical applications, including API calls or schema-guided JSON and YAML. We

also discuss pragmatic extensions for coping with the issue of high branching

factor, and extend our techniques to deterministic context-free languages,

which similarly admit an efficient closed-form solution. Previous work on this

topic (Willard and Louf, 2023) layers bespoke solutions onto automata, leading

to problems with speed, correctness, and extensibility. Instead, we reformulate

the entire task in terms of automata so we can leverage well-studied and

well-optimized algorithms. Our system compiles constraints ~7,000x faster, is

provably correct, and can be extended in a modular fashion.

Algorithmic reasoning requires capabilities which are most naturally understood through recurrent models of computation, like the Turing machine. However, Transformer models, while lacking recurrence, are able to perform such reasoning using far fewer layers than the number of reasoning steps. This raises the question: what solutions are learned by these shallow and non-recurrent models? We find that a low-depth Transformer can represent the computations of any finite-state automaton (thus, any bounded-memory algorithm), by hierarchically reparameterizing its recurrent dynamics. Our theoretical results characterize shortcut solutions, whereby a Transformer with o(T) layers can exactly replicate the computation of an automaton on an input sequence of length T. We find that polynomial-sized O(logT)-depth solutions always exist; furthermore, O(1)-depth simulators are surprisingly common, and can be understood using tools from Krohn-Rhodes theory and circuit complexity. Empirically, we perform synthetic experiments by training Transformers to simulate a wide variety of automata, and show that shortcut solutions can be learned via standard training. We further investigate the brittleness of these solutions and propose potential mitigations.

The Connection between Process Complexity of Event Sequences and Models discovered by Process Mining

The Connection between Process Complexity of Event Sequences and Models discovered by Process Mining

Process mining is a research area focusing on the design of algorithms that

can automatically provide insights into business processes. Among the most

popular algorithms are those for automated process discovery, which have the

ultimate goal to generate a process model that summarizes the behavior recorded

in an event log. Past research had the aim to improve process discovery

algorithms irrespective of the characteristics of the input log. In this paper,

we take a step back and investigate the connection between measures capturing

characteristics of the input event log and the quality of the discovered

process models. To this end, we review the state-of-the-art process complexity

measures, propose a new process complexity measure based on graph entropy, and

analyze this set of complexity measures on an extensive collection of event

logs and corresponding automatically discovered process models. Our analysis

shows that many process complexity measures correlate with the quality of the

discovered process models, demonstrating the potential of using complexity

measures as predictors of process model quality. This finding is important for

process mining research, as it highlights that not only algorithms, but also

connections between input data and output quality should be studied.

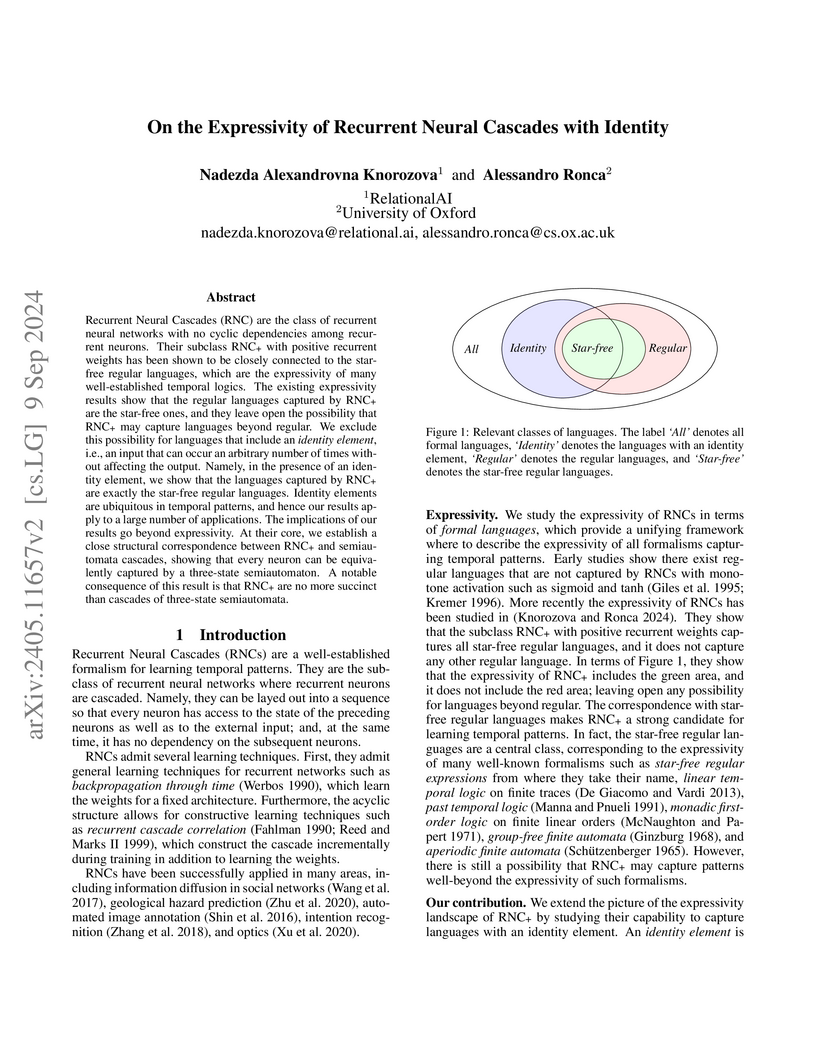

Recurrent Neural Cascades (RNC) are the class of recurrent neural networks with no cyclic dependencies among recurrent neurons. Their subclass RNC+ with positive recurrent weights has been shown to be closely connected to the star-free regular languages, which are the expressivity of many well-established temporal logics. The existing expressivity results show that the regular languages captured by RNC+ are the star-free ones, and they leave open the possibility that RNC+ may capture languages beyond regular. We exclude this possibility for languages that include an identity element, i.e., an input that can occur an arbitrary number of times without affecting the output. Namely, in the presence of an identity element, we show that the languages captured by RNC+ are exactly the star-free regular languages. Identity elements are ubiquitous in temporal patterns, and hence our results apply to a large number of applications. The implications of our results go beyond expressivity. At their core, we establish a close structural correspondence between RNC+ and semiautomata cascades, showing that every neuron can be equivalently captured by a three-state semiautomaton. A notable consequence of this result is that RNC+ are no more succinct than cascades of three-state semiautomata.

Formal-LLM introduces a framework that integrates formal language with natural language to guide Large Language Model (LLM) agents in generating multi-step plans. The system consistently achieved 100% executable plans on the OpenAGI benchmark and demonstrated over a 50% average performance increase for closed-source LLMs, addressing a key challenge of plan validity and executability.

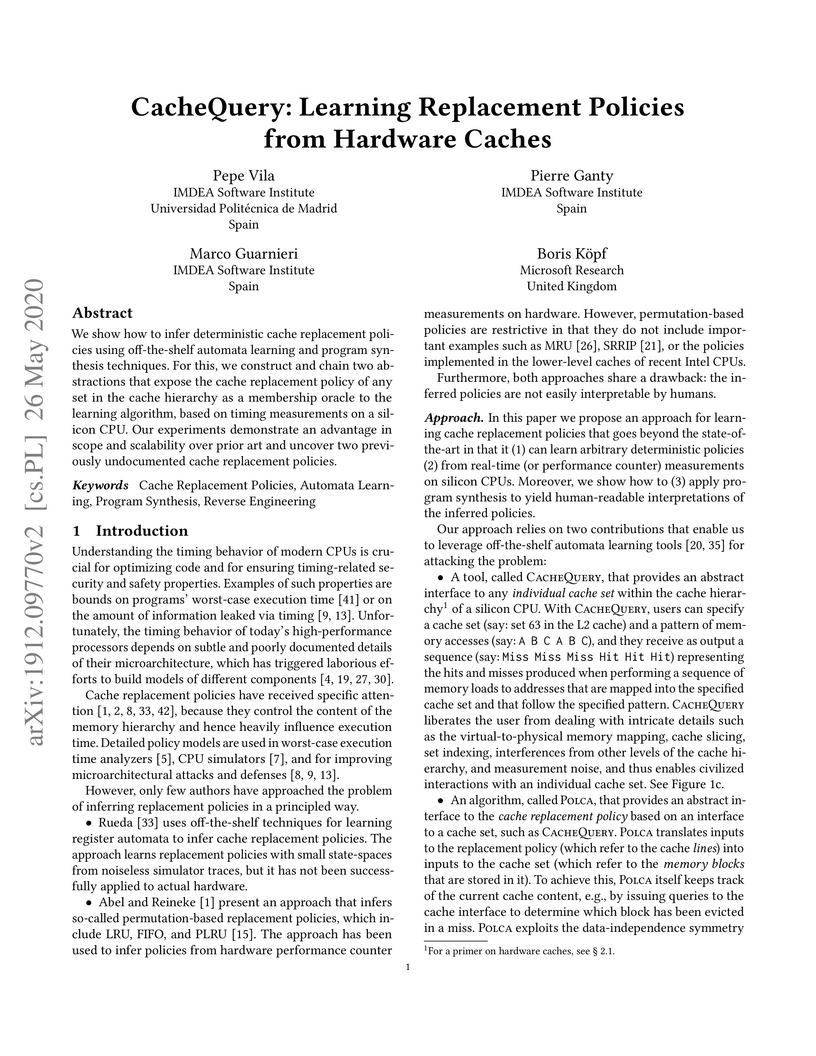

We show how to infer deterministic cache replacement policies using

off-the-shelf automata learning and program synthesis techniques. For this, we

construct and chain two abstractions that expose the cache replacement policy

of any set in the cache hierarchy as a membership oracle to the learning

algorithm, based on timing measurements on a silicon CPU. Our experiments

demonstrate an advantage in scope and scalability over prior art and uncover 2

previously undocumented cache replacement policies.

With the emerging applications that involve complex distributed systems branching-time specifications are specifically important as they reflect dynamic and non-deterministic nature of such applications. We describe the expressive power of a simple yet powerful branching-time specification framework -- branching-time normal form (BNF), which has been developed as part of clausal resolution for branching-time temporal logics. We show the encoding of Buchi Tree Automata in the language of the normal form, thus representing, syntactically, tree automata in a high-level way. Thus we can treat BNF as a normal form for the latter. These results enable us (1) to translate given problem specifications into the normal form and apply as a verification method a deductive reasoning technique -- the clausal temporal resolution; (2) to apply one of the core components of the resolution method -- the loop searching to extract, syntactically, hidden invariants in a wide range of complex temporal specifications.

Since the definition of the Busy Beaver function by Rado in 1962, an

interesting open question has been the smallest value of n for which BB(n) is

independent of ZFC set theory. Is this n approximately 10, or closer to

1,000,000, or is it even larger? In this paper, we show that it is at most

7,910 by presenting an explicit description of a 7,910-state Turing machine Z

with 1 tape and a 2-symbol alphabet that cannot be proved to run forever in ZFC

(even though it presumably does), assuming ZFC is consistent. The machine is

based on the work of Harvey Friedman on independent statements involving

order-invariant graphs. In doing so, we give the first known upper bound on the

highest provable Busy Beaver number in ZFC. To create Z, we develop and use a

higher-level language, Laconic, which is much more convenient than direct state

manipulation. We also use Laconic to design two Turing machines, G and R, that

halt if and only if there are counterexamples to Goldbach's Conjecture and the

Riemann Hypothesis, respectively.

This paper studies temporal planning in probabilistic environments, modeled as labeled Markov decision processes (MDPs), with user preferences over multiple temporal goals. Existing works reflect such preferences as a prioritized list of goals. This paper introduces a new specification language, termed prioritized qualitative choice linear temporal logic on finite traces, which augments linear temporal logic on finite traces with prioritized conjunction and ordered disjunction from prioritized qualitative choice logic. This language allows for succinctly specifying temporal objectives with corresponding preferences accomplishing each temporal task. The finite traces that describe the system's behaviors are ranked based on their dissatisfaction scores with respect to the formula. We propose a systematic translation from the new language to a weighted deterministic finite automaton. Utilizing this computational model, we formulate and solve a problem of computing an optimal policy that minimizes the expected score of dissatisfaction given user preferences. We demonstrate the efficacy and applicability of the logic and the algorithm on several case studies with detailed analyses for each.

Runtime verification (RV) has the potential to enable the safe operation of

safety-critical systems that are too complex to formally verify, such as Robot

Operating System 2 (ROS2) applications. Writing correct monitors can itself be

complex, and errors in the monitoring subsystem threaten the mission as a

whole. This paper provides an overview of a formal approach to generating

runtime monitors for autonomous robots from requirements written in a

structured natural language. Our approach integrates the Formal Requirement

Elicitation Tool (FRET) with Copilot, a runtime verification framework, through

the Ogma integration tool. FRET is used to specify requirements with

unambiguous semantics, which are then automatically translated into temporal

logic formulae. Ogma generates monitor specifications from the FRET output,

which are compiled into hard-real time C99. To facilitate integration of the

monitors in ROS2, we have extended Ogma to generate ROS2 packages defining

monitoring nodes, which run the monitors when new data becomes available, and

publish the results of any violations. The goal of our approach is to treat the

generated ROS2 packages as black boxes and integrate them into larger ROS2

systems with minimal effort.

There are no more papers matching your filters at the moment.