other-computer-science

Neural operators have demonstrated considerable effectiveness in accelerating the solution of time-dependent partial differential equations (PDEs) by directly learning governing physical laws from data. However, for PDEs governed by conservation laws(e.g., conservation of mass, energy, or matter), existing neural operators fail to satisfy conservation properties, which leads to degraded model performance and limited generalizability. Moreover, we observe that distinct PDE problems generally require different optimal neural network architectures. This finding underscores the inherent limitations of specialized models in generalizing across diverse problem domains.

To address these limitations, we propose Exterior-Embedded Conservation Framework (ECF), a universal conserving framework that can be integrated with various data-driven neural operators to enforce conservation laws strictly in predictions. The framework consists of two key components: a conservation quantity encoder that extracts conserved quantities from input data, and a conservation quantity decoder that adjusts the neural operator's predictions using these quantities to ensure strict conservation compliance in the final output. Since our architecture enforces conservation laws, we theoretically prove that it enhances model performance. To validate the performance of our method, we conduct experiments on multiple conservation-law-constrained PDE scenarios, including adiabatic systems, shallow water equations, and the Allen-Cahn problem. These baselines demonstrate that our method effectively improves model accuracy while strictly enforcing conservation laws in the predictions.

This work introduces ParamRF: a Python library for efficient, parametric modelling of radio frequency (RF) circuits. Built on top of the next-generation computational library JAX, as well as the object-oriented wrapper Equinox, the framework provides an easy-to-use, declarative modelling interface, without sacrificing performance. By representing circuits as JAX PyTrees and leveraging just-in-time compilation, models are compiled as pure functions into an optimized, algebraic graph. Since the resultant functions are JAX-native, this allows computation on CPUs, GPUs, or TPUs, providing integration with a wide range of solvers. Further, thanks to JAX's automatic differentiation, gradients with respect to both frequency and circuit parameters can be calculated for any circuit model outputs. This allows for more efficient optimization, as well as exciting new analysis opportunities. We showcase ParamRF's typical use-case of fitting a model to measured data via its built-in fitting engines, which include classical optimizers like L-BFGS and SLSQP, as well as modern Bayesian samplers such as PolyChord and BlackJAX. The result is a flexible framework for frequency-domain circuit modelling, fitting and analysis.

Computers have profoundly changed the way scientific research is done.

Whereas the importance of computers as research tools is evident to everyone,

the impact of the digital revolution on the representation of scientific

knowledge is not yet widely recognized. An ever increasing part of today's

scientific knowledge is expressed, published, and archived exclusively in the

form of software and electronic datasets. In this essay, I compare these

digital scientific notations to the the traditional scientific notations that

have been used for centuries, showing how the digital notations optimized for

computerized processing are often an obstacle to scientific communication and

to creative work by human scientists. I analyze the causes and propose

guidelines for the design of more human-friendly digital scientific notations.

We study the biological evolution of low-latency natural neural networks for

short-term survival, and its parallels in the development of low latency

high-performance Central Processing Unit in computer design and architecture.

The necessity of accurate high-quality display of motion picture led to the

special processing units known as the GPU, just as how special visual cortex

regions of animals produced such low-latency computational capacity. The human

brain, especially considered as nothing but a scaled-up version of a primate

brain evolved in response to genomic bottleneck, producing a brain that is

trainable and prunable by society, and as a further extension, invents

language, writing and storage of narratives displaced in time and space. We

conclude that this modern digital invention of social media and the archived

collective common corpus has further evolved from just simple CPU-based

low-latency fast retrieval to high-throughput parallel processing of data using

GPUs to train Attention based Deep Learning Neural Networks producing

Generative AI with aspects like toxicity, bias, memorization, hallucination,

with intriguing close parallels in humans and their society. We show how this

paves the way for constructive approaches to eliminating such drawbacks from

human society and its proxy and collective large-scale mirror, the Generative

AI of the LLMs.

FBCNet, a multi-view convolutional neural network, integrates neurophysiological priors and a novel variance layer to classify Motor Imagery from EEG signals. This approach achieved state-of-the-art accuracy on healthy subject datasets and improved BCI usability by enabling 28% more chronic stroke patients to reach over 70% classification accuracy compared to baseline methods.

As a fire erupts, the first few minutes can be critical, and first

respondents must race to the scene to analyze the situation and act fast before

it gets out of hand. Factors such as road traffic condition and distance may

not allow quick rescue operation using traditional means and methods, leading

to unmanageable spreading of fire, injuries or even deaths that can be avoided.

FireFly drone-based rescue consists of a squad of highly equipped drones that

will be the first responders to the fire site. Their intervention will make the

task of the fire rescue team much more effective and will contribute to reduce

the overall damage. As soon as the fire is detected by in-building implanted

sensors, the fire department would deploy a set of FireFly drones that would

fly to the site, scan the building, and send live fire status information to

the Fire fighter team. The drones would have the ability to identify trapped

humans using AI based pattern recognition tools (using sensors and thermal

cameras) and then drop them rescue kits as appropriate. The drones will also be

equipped with fire detection and recognition capabilities and be able to drop

fire extinguishing balls as first attempts to put off seeds of fires before

they evolve. The integration of drones with firefighting will allow for ease of

access and control of fire outbreaks. Drones will also result in increased

response time, prevention of further damage, and allow relaying of vital

information to out of reach places regarding the characteristics of the fire

scene.

Dynamic digital timing analysis is a promising alternative to analog simulations for verifying particularly timing-critical parts of a circuit. A necessary prerequisite is a digital delay model, which allows to accurately predict the input-to-output delay of a given transition in the input signal(s) of a gate. Since all existing digital delay models for dynamic digital timing analysis are deterministic, however, they cannot cover delay fluctuations caused by PVT variations, aging and analog signal noise. The only exception known to us is the η-IDM introduced by Függer et al. at DATE'18, which allows to add (very) small adversarially chosen delay variations to the deterministic involution delay model, without endangering its faithfulness. In this paper, we show that it is possible to extend the range of allowed delay variations so significantly that realistic PVT variations and aging are covered by the resulting extended η-IDM.

Real-time monitoring of nervous system function with immediate communication of relevant information to the surgeon enables prevention and/or mitigation of iatrogenic injury in many surgical procedures. The hardware and software infrastructure and demonstrated usefulness of telemedicine in support of IONM originated in a busy university health center environment and then spread widely as comparable functional capabilities were added by commercial equipment manufacturers. The earliest implementations included primitive data archival and case documentation capabilities and relied primarily on deidentification for security. They emphasized full-featured control of the real-time data display by remote observers. Today, remote IONM is routinely utilized in more than 200,000 high-risk surgical procedures/year in the United States. For many cases, remote observers rely on screen capture to view the data as it is displayed in the remote operating room while providing sophisticated security capabilities and data archival and standardized metadata and case documentation.

By using the onboard sensing and external connectivity technology, connected

and automated vehicles (CAV) could lead to improved energy efficiency, better

routing, and lower traffic congestion. With the rapid development of the

technology and adaptation of CAV, it is more critical to develop the universal

evaluation method and the testing standard which could evaluate the impacts on

energy consumption and environmental pollution of CAV fairly, especially under

the various traffic conditions. In this paper, we proposed a new method and

framework to evaluate the energy efficiency and emission of the vehicle based

on the unsupervised learning methods. Both the real-world driving data of the

evaluated vehicle and the large naturalistic driving dataset are used to

perform the driving primitive analysis and coupling. Then the linear weighted

estimation method could be used to calculate the testing result of the

evaluated vehicle. The results show that this method can successfully identify

the typical driving primitives. The couples of the driving primitives from the

evaluated vehicle and the typical driving primitives from the large real-world

driving dataset coincide with each other very well. This new method could

enhance the standard development of the energy efficiency and emission testing

of CAV and other off-cycle credits.

There has been an increasing recognition of the value of data and of data-based decision making. As a consequence, the development of data science as a field of study has intensified in recent years. However, there is no systematic and comprehensive treatment and understanding of data science. This article describes a systematic and end-to-end framing of the field based on an inclusive definition. It identifies the core components making up the data science ecosystem, presents its lifecycle modeling the development process, and argues its interdisciplinarity.

How to bid on a Google shopping account (set of shopping campaigns) with

query-level matching like in Google Adwords.

Bowling is a target sport that is popular among all age groups with professionals and amateur players. Delivering an accurate and consistent bowling throw into the lane requires the incorporation of motion techniques. Consequently, this research presents a novel IoT-Cloud based system for providing real-time monitoring and coaching services to bowling athletes. The system includes two inertial measurement units (IMUs) sensors for capturing motion data, a mobile application and a cloud server for processing the data. First, the quality of each phase of a throw is assessed using a Dynamic Time Wrapping (DTW) based algorithm. Second, an on device-level technique is proposed to identify common bowling errors. Finally, an SVM classification model is employed for assessing the skill level of bowler athletes. We recruited nine right-handed bowlers to perform 50 throws wearing the two sensors and using the proposed system. The results of our experiments suggest that the proposed system can effectively and efficiently assess the quality of the throw, detect common bowling errors and classify the skill level of the bowler.

Agriculture is a huge domain where an enormous landscape of systems interact to support agricultural processes, which are becoming increasingly digital. From the perspective of agricultural service providers, a prominent challenge is interoperability. In the Fraunhofer lighthouse project Cognitive Agriculture (COGNAC), we investigated how the usage of Industry 4.0 digital twins (I4.0 DTs) can help overcome this challenge. This paper contributes architecture drivers and a solution concept using I4.0 DTs in the agricultural domain. Furthermore, we discuss the opportunities and limitations offered by I4.0 DTs for the agricultural domain.

This paper examines the relevance of the Godot Engine in the indie game

industry. The Godot Engine is a relatively new game engine from 2014 and

competes with leading market players. To get to the bottom of its relevance,

two major online sales platforms and the game engines that are commonly used,

Steam and itch[dot]io, are examined. Mainly, these findings are compared with

reference data from 2018. It turns out that the Godot engine has gained massive

relevance in 2020 and now seems to be one of the leading players in the indie

game industry. The exact causes are difficult to determine. However, this paper

provides many clues for further research in this area.

We propose a flat, analytic, mixed-size placement algorithm ePlace-3D for three-dimension integrated circuits (3D-ICs) using nonlinear optimization. Our contributions are (1) electrostatics based 3D density function with globally uniform smoothness (2) 3D numerical solution with improved spectral formulation (3) 3D nonlinear pre-conditioner for convergence acceleration (4) interleaved 2D-3D placement for efficiency enhancement. Our placer outperforms the leading work mPL6-3D and NTUplace3-3D with 6.44% and 37.15% shorter wirelength, 9.11% and 10.27% fewer 3D vertical interconnects (VI) on average of IBM-PLACE circuits. Validation on the large-scale modern mixed-size (MMS) 3D circuits shows high performance and scalability.

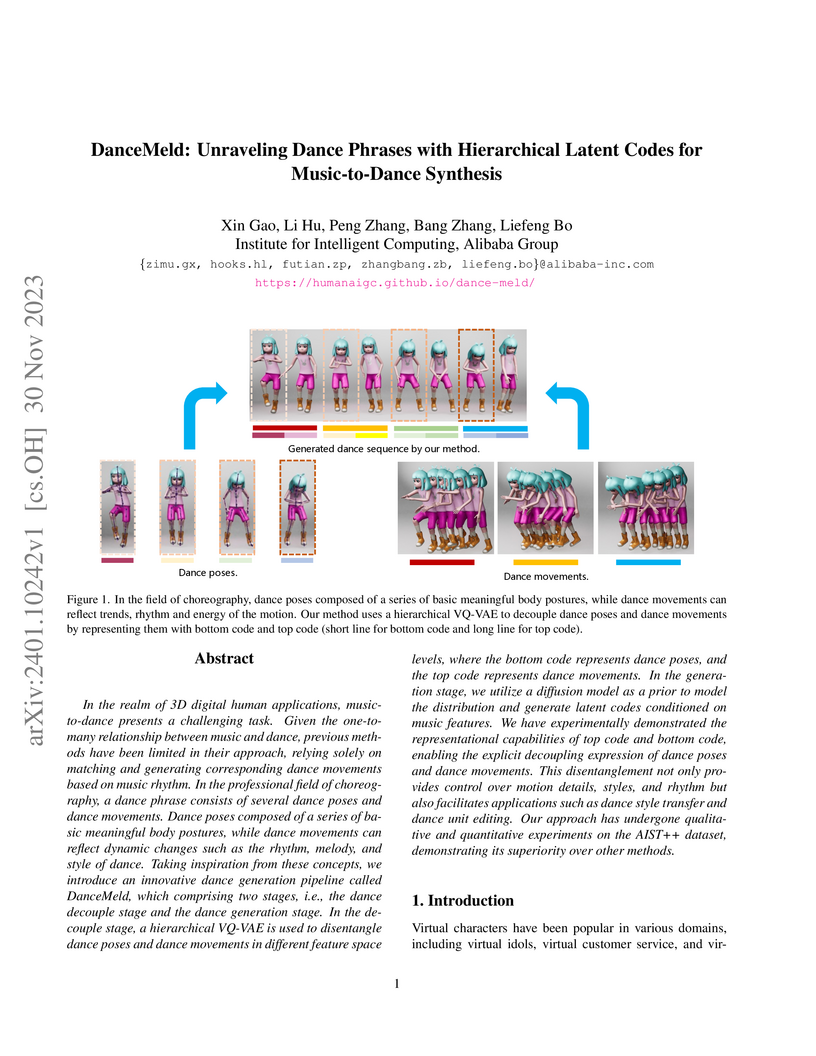

In the realm of 3D digital human applications, music-to-dance presents a

challenging task. Given the one-to-many relationship between music and dance,

previous methods have been limited in their approach, relying solely on

matching and generating corresponding dance movements based on music rhythm. In

the professional field of choreography, a dance phrase consists of several

dance poses and dance movements. Dance poses composed of a series of basic

meaningful body postures, while dance movements can reflect dynamic changes

such as the rhythm, melody, and style of dance. Taking inspiration from these

concepts, we introduce an innovative dance generation pipeline called

DanceMeld, which comprising two stages, i.e., the dance decouple stage and the

dance generation stage. In the decouple stage, a hierarchical VQ-VAE is used to

disentangle dance poses and dance movements in different feature space levels,

where the bottom code represents dance poses, and the top code represents dance

movements. In the generation stage, we utilize a diffusion model as a prior to

model the distribution and generate latent codes conditioned on music features.

We have experimentally demonstrated the representational capabilities of top

code and bottom code, enabling the explicit decoupling expression of dance

poses and dance movements. This disentanglement not only provides control over

motion details, styles, and rhythm but also facilitates applications such as

dance style transfer and dance unit editing. Our approach has undergone

qualitative and quantitative experiments on the AIST++ dataset, demonstrating

its superiority over other methods.

In terrain mapping, there are so many ways to measure and estimate the

terrain measurements like contouring, vertical profiling, hill shading,

hypsometric tinting, perspective view, etc. Here in this paper we are using the

contouring techniques to generate the contours for the different digital

elevation data like DEM, HGT, IMG etc. The elevation data is captured in dem,

hgt and img formats of the same projected area and the contour is generated

using the existing techniques and applications. The exact differences, errors

of elevation (contour) intervals, slopes and heights are analyzed and

recovered.

Multilayered ceramic substrates with embedded micro patterns are becoming

increasingly important, for example, in harsh environment electronics and

microfluidic devices. Fabrication of these embedded micro patterns, such as

micro channels, cavities and vias, is a challenge. This study focuses on the

process of patterning micro features on ceramic green substrates using micro

embossing. A ceramic green tape that possessed near-zero shrinkage in the x-y

plane was used, six layers of which were laminated as the embossing substrate.

The process parameters that impact on the pattern fidelity were investigated

and optimized in this study. Micro features with line-width as small as several

micrometers were formed on the ceramic green substrates. The dynamic

thermo-mechanical analysis indicated that extending the holding time at certain

temperature range would harden the green substrates with little effect on

improving the embossing fidelity. Ceramic substrates with embossed micro

patterns were obtain d after co-firing. The embedded micro channels were also

obtained by laminating the green tapes on the embossed substrates.

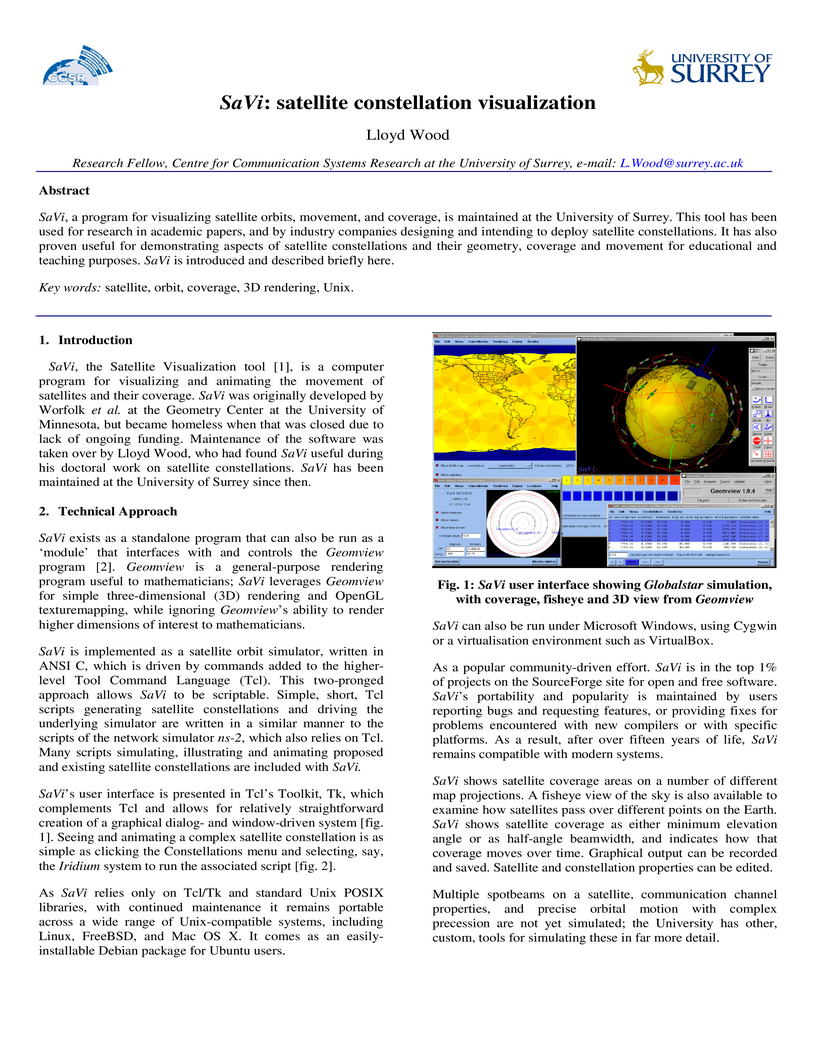

SaVi, a program for visualizing satellite orbits, movement, and coverage, is

maintained at the University of Surrey. This tool has been used for research in

academic papers, and by industry companies designing and intending to deploy

satellite constellations. It has also proven useful for demonstrating aspects

of satellite constellations and their geometry, coverage and movement for

educational and teaching purposes. SaVi is introduced and described briefly

here.

The online sports gambling industry employs teams of data analysts to build forecast models that turn the odds at sports games in their favour. While several betting strategies have been proposed to beat bookmakers, from expert prediction models and arbitrage strategies to odds bias exploitation, their returns have been inconsistent and it remains to be shown that a betting strategy can outperform the online sports betting market. We designed a strategy to beat football bookmakers with their own numbers. Instead of building a forecasting model to compete with bookmakers predictions, we exploited the probability information implicit in the odds publicly available in the marketplace to find bets with mispriced odds. Our strategy proved profitable in a 10-year historical simulation using closing odds, a 6-month historical simulation using minute to minute odds, and a 5-month period during which we staked real money with the bookmakers (we made code, data and models publicly available). Our results demonstrate that the football betting market is inefficient - bookmakers can be consistently beaten across thousands of games in both simulated environments and real-life betting. We provide a detailed description of our betting experience to illustrate how the sports gambling industry compensates these market inefficiencies with discriminatory practices against successful clients.

There are no more papers matching your filters at the moment.