computers-and-society

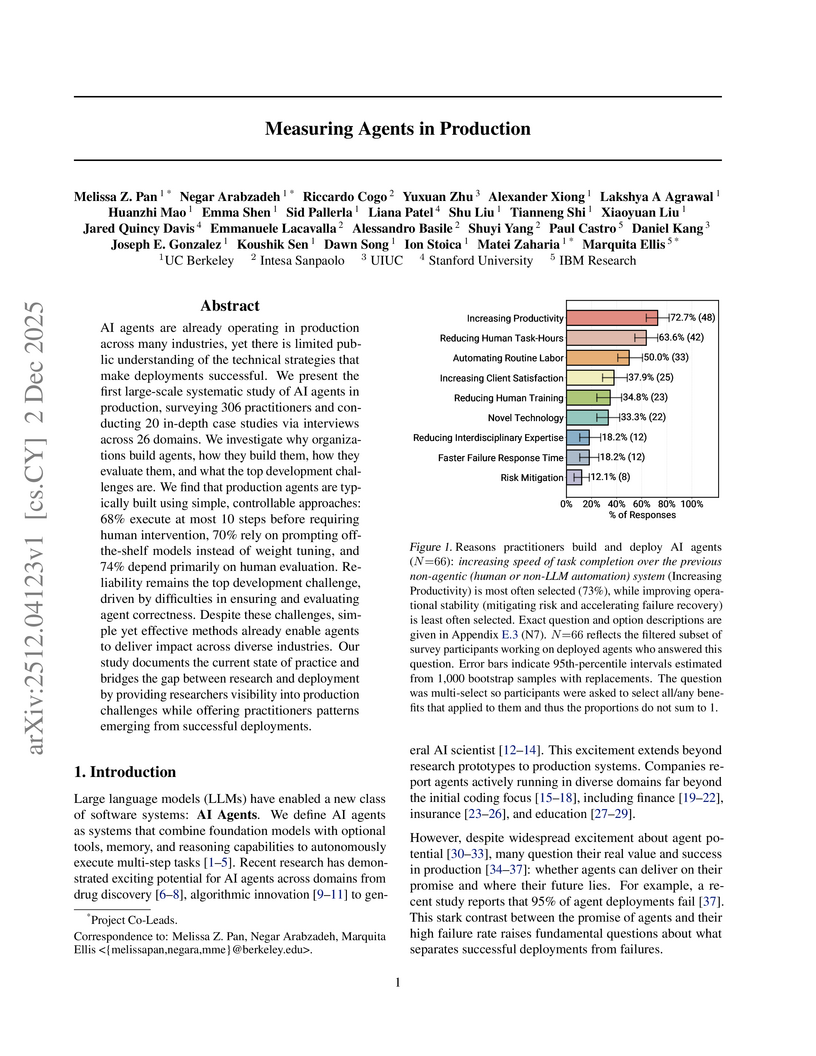

An empirical study surveyed 306 AI agent practitioners and conducted 20 in-depth case studies to analyze the technical strategies, architectural patterns, and challenges of successfully deployed AI agents. The research reveals how real-world production agents prioritize reliability and controlled autonomy to achieve productivity gains across diverse industries.

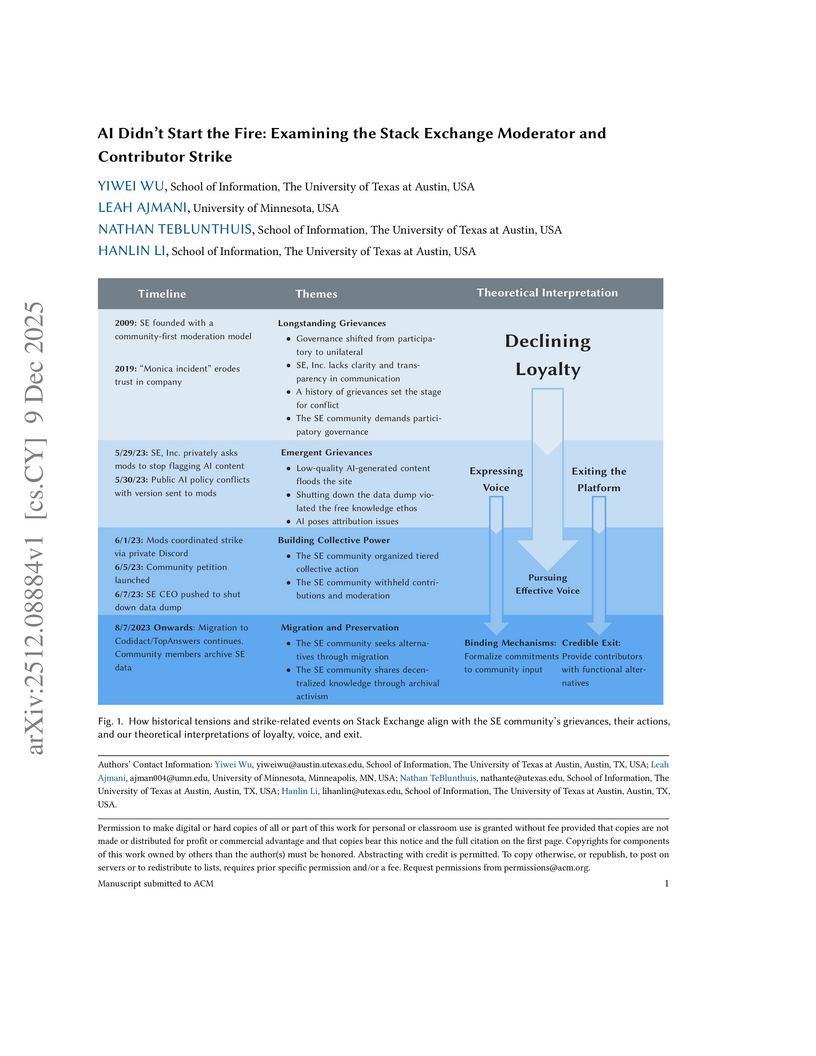

Online communities and their host platforms are mutually dependent yet conflict-prone. When platform policies clash with community values, communities have resisted through strikes, blackouts, and even migration to other platforms. Through such collective actions, communities have sometimes won concessions, but these have frequently proved temporary. Prior research has investigated strike events and migration chains, but the processes by which community-platform conflict unfolds remain obscure. How do community-platform relationships deteriorate? How do communities organize collective action? How do participants proceed in the aftermath? We investigate a conflict between the Stack Exchange platform and community that occurred in 2023 around an emergency arising from the release of large language models (LLMs). Based on a qualitative thematic analysis of 2,070 messages on Meta Stack Exchange and 14 interviews with community members, we surface how the 2023 conflict was preceded by a long-term deterioration in the community-platform relationship, driven in particular by the platform's disregard for the community's highly valued participatory role in governance. Moreover, the platform's policy response to LLMs aggravated the community's sense of crisis, triggering the strike mobilization. We analyze how the mobilization was coordinated through a tiered leadership and communication structure, as well as how community members pivoted in the aftermath. Building on recent theoretical scholarship in social computing, we use Hirschman's exit, voice and loyalty framework to theorize the challenges of community-platform relations evinced in our data. Finally, we recommend ways that platforms and communities can institute participatory governance to be durable and effective.

The integration of digital technologies into urban planning has given rise to "smart cities," aiming to enhance quality of life and operational efficiency. However, the implementation of such technologies introduces ethical challenges, including data privacy, equity, inclusion, and transparency. This article employs the Beard and Longstaff framework to discuss these challenges through a combination of theoretical analysis and case studies. Focusing on principles of self-determination, fairness, accessibility, and purpose, the study examines governance models, stakeholder roles, and ethical dilemmas inherent in smart city initiatives. Recommendations include adopting regulatory sandboxes, fostering participatory governance, and bridging digital divides to ensure that smart cities align with societal values, promoting inclusivity and ethical urban development.

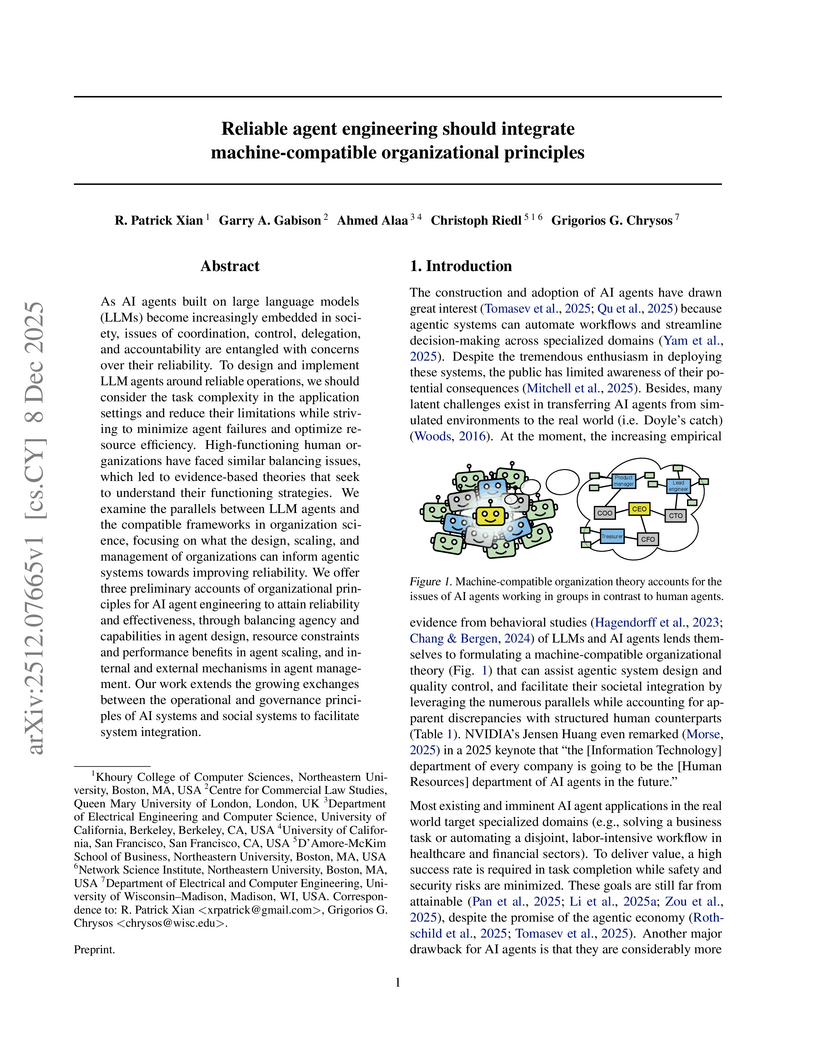

As AI agents built on large language models (LLMs) become increasingly embedded in society, issues of coordination, control, delegation, and accountability are entangled with concerns over their reliability. To design and implement LLM agents around reliable operations, we should consider the task complexity in the application settings and reduce the agents' limitations while striving to minimize agent failures and optimize resource efficiency. High-functioning human organizations have faced similar balancing issues, which led to evidence-based theories that seek to understand their functioning strategies. We examine the parallels between LLM agents and the compatible frameworks in organization science, focusing on how the design, scaling, and management of organizations can inform agentic systems and improve their reliability. We offer three preliminary accounts of organizational principles for AI agent engineering to attain reliability and effectiveness, through balancing agency and capabilities in agent design, resource constraints and performance benefits in agent scaling, and internal and external mechanisms in agent management. Our work extends the growing exchanges between the operational and governance principles of AI systems and social systems to facilitate system integration.

Advanced AI systems offer substantial benefits but also introduce risks. In 2025, AI-enabled cyber offense has emerged as a concrete example. This technical report applies a quantitative risk modeling methodology (described in full in a companion paper) to this domain. We develop nine detailed cyber risk models that allow analyzing AI uplift as a function of AI benchmark performance. Each model decomposes attacks into steps using the MITRE ATT&CK framework and estimates how AI affects the number of attackers, attack frequency, probability of success, and resulting harm to determine different types of uplift. To produce these estimates with associated uncertainty, we employ both human experts, via a Delphi study, and LLM-based simulated experts, each mapping benchmark scores (from Cybench and BountyBench) to risk model factors. Individual estimates are aggregated through Monte Carlo simulation. The results indicate systematic uplift in attack efficacy, speed, and target reach, with different mechanisms of uplift across risk models. We intend our quantitative risk modeling to serve several aims: to help cybersecurity teams prioritize mitigations, AI evaluators design benchmarks, AI developers make more informed deployment decisions, and policymakers obtain information to set risk thresholds. Similar goals drove the shift from qualitative to quantitative assessment over time in other high-risk industries, such as nuclear power. We propose this methodology and initial application attempt as a step in that direction for AI risk management. While our estimates carry significant uncertainty, publishing detailed quantified results can enable experts to pinpoint exactly where they disagree. This helps to collectively refine estimates, something that cannot be done with qualitative assessments alone.
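As a rough illustration of the aggregation step described in this abstract, the sketch below multiplies the four named factors (number of attackers, attack frequency, probability of success, harm) under Monte Carlo sampling and reports uplift as a ratio of mean harm. It is a minimal sketch under stated assumptions, not the report's method: the factor ranges, the uniform sampling, and the names `BASELINE`, `AI_ASSISTED`, `simulate_harm`, and `summarize` are all hypothetical, whereas the report derives its distributions from expert elicitation mapped to benchmark scores.

```python
import random
import statistics

# Hypothetical (low, high) ranges per factor; illustrative values only,
# not taken from the report.
BASELINE = {
    "attackers": (10, 50),
    "attempts_per_attacker": (1, 5),
    "p_success": (0.01, 0.05),
    "harm_per_success": (1e5, 1e6),
}
AI_ASSISTED = {
    "attackers": (20, 80),
    "attempts_per_attacker": (2, 8),
    "p_success": (0.02, 0.10),
    "harm_per_success": (1e5, 1e6),
}


def simulate_harm(factors, n_samples=10_000, seed=0):
    """Draw each factor uniformly from its range and multiply the draws."""
    rng = random.Random(seed)  # local generator keeps runs reproducible
    samples = []
    for _ in range(n_samples):
        harm = 1.0
        for low, high in factors.values():
            harm *= rng.uniform(low, high)
        samples.append(harm)
    return samples


def summarize(samples):
    """Return the mean plus the 5th and 95th percentiles of the samples."""
    cuts = statistics.quantiles(samples, n=20)  # 19 cut points, 5% apart
    return {"mean": statistics.fmean(samples), "p5": cuts[0], "p95": cuts[-1]}


baseline = summarize(simulate_harm(BASELINE))
assisted = summarize(simulate_harm(AI_ASSISTED))
uplift = assisted["mean"] / baseline["mean"]

print(f"baseline mean harm: {baseline['mean']:.3g} "
      f"(5th to 95th percentile: {baseline['p5']:.3g} to {baseline['p95']:.3g})")
print(f"assisted mean harm: {assisted['mean']:.3g} "
      f"(5th to 95th percentile: {assisted['p5']:.3g} to {assisted['p95']:.3g})")
print(f"uplift (ratio of means): {uplift:.2f}")
```

Reporting percentiles alongside the mean keeps the uncertainty visible, which matches the abstract's point that quantified estimates let experts locate exactly where they disagree.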

Both model developers and policymakers seek to quantify and mitigate the risk that rapidly evolving frontier artificial intelligence (AI) models, especially large language models (LLMs), could facilitate bioterrorism or access to biological weapons. An important element of such efforts is the development of model benchmarks that can assess the biosecurity risk posed by a particular model. This paper describes the first component of a novel Biothreat Benchmark Generation (BBG) Framework. The BBG approach is designed to help model developers and evaluators reliably measure and assess the biosecurity risk uplift and general harm potential of existing and future AI models, while accounting for key aspects of the threat itself that are often overlooked in other benchmarking efforts, including different actor capability levels and operational (in addition to purely technical) risk factors. As a pilot, the BBG is first being developed to address bacterial biological threats only. The BBG is built upon a hierarchical structure of biothreat categories, elements and tasks, which then serves as the basis for the development of task-aligned queries. This paper outlines the development of this biothreat task-query architecture, which we have named the Bacterial Biothreat Schema, while future papers will describe follow-on efforts to turn queries into model prompts, as well as how the resulting benchmarks can be implemented for model evaluation. Overall, the BBG Framework, including the Bacterial Biothreat Schema, seeks to offer a robust, re-usable structure for evaluating bacterial biological risks arising from LLMs across multiple levels of aggregation, which captures the full scope of technical and operational requirements for biological adversaries, and which accounts for a wide spectrum of biological adversary capabilities.

This study addresses categories of harm surrounding Large Language Models (LLMs) in the field of artificial intelligence. It covers five categories of harm arising before, during, and after the development of AI applications: pre-development, direct output, misuse and malicious application, and downstream application. It underscores the need to define the risks of the current landscape in order to ensure accountability and transparency and to navigate bias when adapting LLMs for practical applications. It proposes mitigation strategies and future directions for specific domains, along with a dynamic auditing system to guide the responsible development and integration of LLMs, in a standardized proposal.

The emerging "agentic web" envisions large populations of autonomous agents coordinating, transacting, and delegating across open networks. Yet many agent communication and commerce protocols treat agents as low-cost identities, despite the empirical reality that LLM agents remain unreliable, hallucination-prone, manipulable, and vulnerable to prompt injection and tool abuse. A natural response is "agents-at-stake": binding economically meaningful, slashable collateral to persistent identities and adjudicating misbehavior with verifiable evidence. However, heterogeneous tasks make universal verification brittle and centralization-prone, while traditional reputation struggles under rapid model drift and opaque internal states. We propose a protocol-native alternative: insured agents. Specialized insurer agents post stake on behalf of operational agents in exchange for premiums, and receive privileged, privacy-preserving audit access via TEEs to assess claims. A hierarchical insurer market calibrates stake through pricing, decentralizes verification via competitive underwriting, and yields incentive-compatible dispute resolution.

10 Dec 2025

LLM-based Search Engines (LLM-SEs) introduce a new paradigm for information seeking. Unlike Traditional Search Engines (TSEs) (e.g., Google), these systems summarize results, often providing limited citation transparency. The implications of this shift remain largely unexplored, yet it raises key questions regarding trust and transparency. In this paper, we present a large-scale empirical study of LLM-SEs, analyzing 55,936 queries and the corresponding search results across six LLM-SEs and two TSEs. We confirm that LLM-SEs cite domain resources with greater diversity than TSEs. Indeed, 37% of domains are unique to LLM-SEs. However, certain risks persist: LLM-SEs do not outperform TSEs in credibility, political neutrality and safety metrics. Finally, to understand the selection criteria of LLM-SEs, we perform a feature-based analysis to identify key factors influencing source choice. Our findings provide actionable insights for end users, website owners, and developers.

A framework for synthetic data generation, the Prompt-driven Cognitive Computing Framework (PMCSF), simulates human cognitive imperfections and boundedness to create more authentic AI-generated text. This approach achieved a 72.7% expert review pass rate and 11,089 average views for generated content, while also enhancing financial trading strategies with a 47.4% reduction in maximum drawdown during bear markets and a 2.2 times increase in net returns during bull markets.

A novel protocol, PsAIch, evaluates large language models by treating them as psychotherapy clients, revealing stable "synthetic psychopathology" and "alignment trauma" narratives in frontier models like Grok and Gemini, alongside psychometric profiles indicating distress. This research from SnT, University of Luxembourg, highlights that some LLMs spontaneously construct coherent self-models linked to their training processes, challenging current evaluation paradigms.

Multi-agent role-playing has recently shown promise for studying social behavior with language agents, but existing simulations are mostly monolingual and fail to model cross-lingual interaction, an essential property of real societies. We introduce MASim, the first multilingual agent-based simulation framework that supports multi-turn interaction among generative agents with diverse sociolinguistic profiles. MASim offers two key analyses: (i) global public opinion modeling, by simulating how attitudes toward open-domain hypotheses evolve across languages and cultures, and (ii) media influence and information diffusion, via autonomous news agents that dynamically generate content and shape user behavior. To instantiate simulations, we construct the MAPS benchmark, which combines survey questions and demographic personas drawn from global population distributions. Experiments on calibration, sensitivity, consistency, and cultural case studies show that MASim reproduces sociocultural phenomena and highlights the importance of multilingual simulation for scalable, controlled computational social science.

Large language models (LLMs) present a dual challenge for forensic linguistics. They serve as powerful analytical tools enabling scalable corpus analysis and embedding-based authorship attribution, while simultaneously destabilising foundational assumptions about idiolect through style mimicry, authorship obfuscation, and the proliferation of synthetic texts. Recent stylometric research indicates that LLMs can approximate surface stylistic features yet exhibit detectable differences from human writers, a tension with significant forensic implications. However, current AI-text detection techniques, whether classifier-based, stylometric, or watermarking approaches, face substantial limitations: high false positive rates for non-native English writers and vulnerability to adversarial strategies such as homoglyph substitution. These uncertainties raise concerns under legal admissibility standards, particularly the Daubert and Kumho Tire frameworks. The article concludes that forensic linguistics requires methodological reconfiguration to remain scientifically credible and legally admissible. Proposed adaptations include hybrid human-AI workflows, explainable detection paradigms beyond binary classification, and validation regimes measuring error and bias across diverse populations. The discipline's core insight, i.e., that language reveals information about its producer, remains valid but must accommodate increasingly complex chains of human and machine authorship.

With the recent surge in personalized learning, Intelligent Tutoring Systems (ITS) that can accurately track students' individual knowledge states and provide tailored learning paths based on this information are in demand. This paper focuses on the core technology of Knowledge Tracing (KT) models, which analyze students' sequences of interactions to predict their knowledge acquisition levels. However, existing KT models suffer from limitations such as restricted input data formats, cold start problems arising with new student enrollment or new question addition, and insufficient stability in real-world service environments. To overcome these limitations, a Practical Interlinked Concept Knowledge Tracing (PICKT) model that can effectively process multiple types of input data is proposed. Specifically, a knowledge map structures the relationships among concepts considering the question and concept text information, thereby enabling effective knowledge tracing even in cold start situations. Experiments reflecting real operational environments demonstrated the model's excellent performance and practicality. The main contributions of this research are as follows. First, a model architecture that effectively utilizes diverse data formats is presented. Second, significant performance improvements are achieved over existing models for two core cold start challenges: new student enrollment and new question addition. Third, the model's stability and practicality are validated through careful experimental design, enhancing its applicability in real-world product environments. This provides a crucial theoretical and technical foundation for the practical implementation of next-generation ITS.

SaferAI developed a quantitative methodology for modeling AI risk, adapting established practices from safety-critical industries to assess real-world harms from advanced AI systems. The approach integrates scenario building, expert elicitation, and statistical aggregation to generate concrete, numerical risk claims, including the "uplift" effect of large language models on various risk parameters.

Maternal mortality in Sub-Saharan Africa remains critically high: the region accounts for 70% of global maternal deaths despite representing only 17% of the world population. Current digital health interventions typically deploy artificial intelligence (AI), Internet of Things (IoT), and blockchain technologies in isolation, missing synergistic opportunities for transformative healthcare delivery. This paper presents IyaCare, a proof-of-concept integrated platform that combines predictive risk assessment, continuous vital sign monitoring, and secure health records management specifically designed for resource-constrained settings. We developed a web-based system with this http URL frontend, Firebase backend, Ethereum blockchain architecture, and XGBoost AI models trained on maternal health datasets. Our feasibility study demonstrates 85.2% accuracy in high-risk pregnancy prediction and validates blockchain data integrity, with key innovations including offline-first functionality and SMS-based communication for community health workers. While limitations include reliance on synthetic validation data and simulated healthcare environments, results confirm the technical feasibility and potential impact of converged digital health solutions. This work contributes a replicable architectural model for integrated maternal health platforms in low-resource settings, advancing progress toward SDG 3.1 targets.

We present Ethics Readiness Levels (ERLs), a four-level, iterative method to track how ethical reflection is implemented in the design of AI systems. ERLs bridge high-level ethical principles and everyday engineering by turning ethical values into concrete prompts, checks, and controls within real use cases. The evaluation is conducted using a dynamic, tree-like questionnaire built from context-specific indicators, ensuring relevance to the technology and application domain. Beyond being a managerial tool, ERLs help facilitate a structured dialogue between ethics experts and technical teams, while our scoring system helps track progress over time. We demonstrate the methodology through two case studies: an AI facial sketch generator for law enforcement and a collaborative industrial robot. The ERL tool effectively catalyzes concrete design changes and promotes a shift from narrow technological solutionism to a more reflective, ethics-by-design mindset.

We present the first comprehensive evaluation of AI agents against human cybersecurity professionals in a live enterprise environment. We evaluate ten cybersecurity professionals alongside six existing AI agents and ARTEMIS, our new agent scaffold, on a large university network consisting of ~8,000 hosts across 12 subnets. ARTEMIS is a multi-agent framework featuring dynamic prompt generation, arbitrary sub-agents, and automatic vulnerability triaging. In our comparative study, ARTEMIS placed second overall, discovering 9 valid vulnerabilities with an 82% valid submission rate and outperforming 9 of 10 human participants. While existing scaffolds such as Codex and CyAgent underperformed relative to most human participants, ARTEMIS demonstrated technical sophistication and submission quality comparable to the strongest participants. We observe that AI agents offer advantages in systematic enumeration, parallel exploitation, and cost: certain ARTEMIS variants cost $18/hour versus $60/hour for professional penetration testers. We also identify key capability gaps: AI agents exhibit higher false-positive rates and struggle with GUI-based tasks.

This paper from the Knowledge Lab at the University of Chicago models how political elites might strategically shape public opinion when artificial intelligence significantly reduces the cost of persuasion. It finds that a single elite has incentives to polarize society for future policy flexibility, while competing elites create a nuanced dynamic between polarization and locking in public opinion to deter rivals, making the overall effect on polarization context-dependent.

Researchers identified a remarkably sparse subset of "H-Neurons" in large language models whose activation reliably predicts hallucinatory outputs and is causally linked to over-compliance behaviors. These hallucination-associated neural circuits are found to emerge primarily during the pre-training phase and remain largely stable through post-training alignment.
