other-quantitative-biology

Post-deployment monitoring of artificial intelligence (AI) systems in health care is essential to ensure their safety, quality, and sustained benefit, and to support governance decisions about which systems to update, modify, or decommission. Motivated by these needs, we developed a framework for monitoring deployed AI systems grounded in the mandate to take specific actions when they fail to behave as intended. This framework, which is now actively used at Stanford Health Care, is organized around three complementary principles: system integrity, performance, and impact. System integrity monitoring focuses on maximizing system uptime, detecting runtime errors, and identifying when changes to the surrounding IT ecosystem have unintended effects. Performance monitoring focuses on maintaining accurate system behavior in the face of changing health care practices (and thus input data) over time. Impact monitoring assesses whether a deployed system continues to have value in the form of benefit to clinicians and patients. Drawing on examples of deployed AI systems at our academic medical center, we provide practical guidance, for both traditional and generative AI, on creating monitoring plans based on these principles that specify which metrics to measure, when those metrics should be reviewed, who is responsible for acting when metrics change, and what concrete follow-up actions should be taken. We also discuss challenges to implementing this framework, including the effort and cost of monitoring for health systems with limited resources and the difficulty of incorporating data-driven monitoring practices into complex organizations where conflicting priorities and definitions of success often coexist. This framework offers a practical template and starting point for health systems seeking to ensure that AI deployments remain safe and effective over time.
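As a minimal sketch of the plan structure described above (which metric, when it is reviewed, who acts, and what they do), the Python below encodes each plan entry as a record and lists the follow-up actions triggered by observed values. The class name `MonitoringCheck`, the metric names, owners, cadences, and numeric thresholds are hypothetical illustrations, not values used at Stanford Health Care.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class MonitoringCheck:
    """One entry of a hypothetical monitoring plan."""
    principle: str         # "system integrity", "performance", or "impact"
    metric: str            # what to measure
    review_cadence: str    # when the metric is reviewed
    owner: str             # who is responsible for acting when it changes
    threshold: float       # illustrative alert threshold (an assumption)
    higher_is_worse: bool  # direction in which the metric degrades
    action: str            # concrete follow-up action

    def breached(self, value: float) -> bool:
        """True if the observed value crosses the threshold in the degrading direction."""
        return value > self.threshold if self.higher_is_worse else value < self.threshold


def review(plan: List[MonitoringCheck], observed: Dict[str, float]) -> List[str]:
    """Return the follow-up actions triggered by the observed metric values."""
    triggered = []
    for check in plan:
        value = observed.get(check.metric)
        if value is not None and check.breached(value):
            triggered.append(f"{check.owner}: {check.action} ({check.metric}={value})")
    return triggered


# Hypothetical plan: one check per monitoring principle.
PLAN = [
    MonitoringCheck("system integrity", "failed_runs_per_day", "daily",
                    "IT on-call", 5, True, "investigate runtime errors"),
    MonitoringCheck("performance", "auroc", "monthly",
                    "data science team", 0.75, False, "evaluate recalibration or retraining"),
    MonitoringCheck("impact", "clinician_acceptance_rate", "quarterly",
                    "governance committee", 0.20, False, "decide whether to modify or decommission"),
]

if __name__ == "__main__":
    for line in review(PLAN, {"failed_runs_per_day": 12, "auroc": 0.81,
                              "clinician_acceptance_rate": 0.15}):
        print(line)
```

Keeping the owner and action next to each metric mirrors the framework's emphasis that a monitoring plan is only useful if a metric change maps to a named person and a concrete step.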

Free-ranging dogs (Canis lupus familiaris) constitute the majority of the global dog population and rely heavily on human-derived resources. Studies have reported varying responses to human cues such as food, petting, and gazing. However, the relative importance that dogs assign to these rewards in driving their interactions with unfamiliar humans remains understudied. Understanding how these dogs prioritize different rewards, ranging from food to social contact, can offer insights into their adaptive strategies within human-dominated ecosystems and help reduce conflict. We investigated the motivational value of different reward types in 150 adult free-ranging dogs in West Bengal, India. Using a between-subjects design, unfamiliar experimenters offered one of five rewards: high-value food (chicken), low-value food (biscuit), social interaction (petting), human gaze only, or human presence only. Motivation was assessed by measuring the number of rewards accepted, approach latency, duration of proximity, and behaviour via a Socialization Index (SI). High-value food was the most potent driver of approach behaviour and sustained proximity. While petting elicited higher SI scores, indicating affiliative engagement, it was associated with more rapid satiation than food. Human gaze alone functioned as a subtle reinforcer, maintaining dogs' attention longer than passive presence alone. These findings suggest that free-ranging dogs prioritize high-energy intake over social interaction with strangers, consistent with an optimal foraging strategy. This behavioural flexibility enables them to balance energy needs against potential risks, demonstrating the sophisticated decision-making crucial for survival in urban environments where humans act as both resource providers and potential threats.

Clinical dialogue represents a complex duality requiring both the empathetic fluency of natural conversation and the rigorous precision of evidence-based medicine. While Large Language Models possess unprecedented linguistic capabilities, their architectural reliance on reactive and stateless processing often favors probabilistic plausibility over factual veracity. This structural limitation has catalyzed a paradigm shift in medical AI from generative text prediction to agentic autonomy, where the model functions as a central reasoning engine capable of deliberate planning and persistent memory. Moving beyond existing reviews that primarily catalog downstream applications, this survey provides a first-principles analysis of the cognitive architecture underpinning this shift. We introduce a novel taxonomy structured along the orthogonal axes of knowledge source and agency objective to delineate the provenance of clinical knowledge against the system's operational scope. This framework facilitates a systematic analysis of the intrinsic trade-offs between creativity and reliability by categorizing methods into four archetypes: *Latent Space Clinicians*, *Emergent Planners*, *Grounded Synthesizers*, and *Verifiable Workflow Automators*. For each paradigm, we deconstruct the technical realization across the entire cognitive pipeline, encompassing strategic planning, memory management, action execution, collaboration, and evolution, to reveal how distinct architectural choices balance the tension between autonomy and safety.

Coral colonies exhibit complex, self-similar branching architectures shaped by biochemical interactions and environmental constraints. To model their growth and calcification dynamics, we propose a novel p-adic reaction-diffusion framework defined over p-adic ultrametric spaces. The model incorporates biologically grounded reactions involving calcium and bicarbonate ions, whose interplay drives the precipitation of calcium carbonate (CaCO3). Nonlocal diffusion is governed by the Vladimirov operator over the p-adic integers, naturally capturing the hierarchical geometry of branching coral structures. Discretization over p-adic balls yields a high-dimensional nonlinear ODE system, which we solve numerically to examine how environmental and kinetic parameters, particularly CO2 concentration, influence morphogenetic outcomes. The resulting simulations reproduce structurally diverse and biologically plausible branching patterns. This approach bridges non-Archimedean analysis with morphogenesis modeling and provides a mathematically rigorous framework for investigating hierarchical structure formation in developmental biology.
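The discretization step can be illustrated with a small Python sketch under simplifying assumptions of our own: the p-adic integers are truncated to the p**N balls of radius p**-N, labelled by the residues 0, ..., p**N - 1; nonlocal diffusion uses the ultrametric kernel |x - y|_p^-(alpha+1) that appears in the Vladimirov operator, with its normalization constant and any boundary term omitted; and calcification follows a hypothetical mass-action rate k[Ca][HCO3] with the stoichiometry Ca2+ + 2 HCO3- → CaCO3 + CO2 + H2O. The function names and parameter values are illustrative, not the authors' model or parameterization.

```python
def p_adic_valuation(n: int, p: int) -> int:
    """Largest k such that p**k divides the nonzero integer n."""
    k = 0
    while n % p == 0:
        n //= p
        k += 1
    return k


def p_adic_distance(i: int, j: int, p: int) -> float:
    """Ultrametric distance |i - j|_p between ball labels i and j."""
    return 0.0 if i == j else float(p) ** (-p_adic_valuation(i - j, p))


def nonlocal_diffusion(u, p, alpha):
    """Discretized ultrametric diffusion (normalization constant omitted):
    (L u)_i = sum_{j != i} (u_j - u_i) * |i - j|_p**-(alpha + 1) * vol,
    where vol = p**-N is the Haar measure of each ball."""
    n = len(u)
    vol = 1.0 / n
    out = []
    for i in range(n):
        s = 0.0
        for j in range(n):
            if j != i:
                s += (u[j] - u[i]) * p_adic_distance(i, j, p) ** (-(alpha + 1)) * vol
        out.append(s)
    return out


def euler_step(ca, hco3, caco3, p, alpha, d_ca, d_hco3, k, dt):
    """One forward-Euler step of the resulting ODE system."""
    lap_ca = nonlocal_diffusion(ca, p, alpha)
    lap_h = nonlocal_diffusion(hco3, p, alpha)
    new_ca, new_h, new_c = [], [], []
    for i in range(len(ca)):
        r = k * ca[i] * hco3[i]  # hypothetical mass-action precipitation rate
        new_ca.append(ca[i] + dt * (d_ca * lap_ca[i] - r))
        new_h.append(hco3[i] + dt * (d_hco3 * lap_h[i] - 2.0 * r))
        new_c.append(caco3[i] + dt * r)  # CaCO3 is deposited in place and does not diffuse
    return new_ca, new_h, new_c


if __name__ == "__main__":
    p, N = 2, 4  # 16 balls of radius 2**-4
    n_balls = p ** N
    ca = [1.0] * n_balls
    hco3 = [2.0 if i == 0 else 0.5 for i in range(n_balls)]  # bicarbonate pulse in one ball
    caco3 = [0.0] * n_balls
    for _ in range(100):
        ca, hco3, caco3 = euler_step(ca, hco3, caco3, p, 0.5, 0.1, 0.1, 0.2, 0.01)
    print([round(c, 4) for c in caco3])
```

Because the kernel depends only on the ultrametric distance, balls that share a deeper common ancestor in the p-adic tree exchange material faster, which is how this kind of operator encodes hierarchical geometry. Total calcium (free plus precipitated) is conserved by the scheme, a useful sanity check.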

CNRS; University of Cambridge; Heidelberg University; Imperial College London; University of Zurich; University College London; The Chinese University of Hong Kong; University of Pennsylvania; TUD Dresden University of Technology; Sorbonne Université; Inria; Helmholtz-Zentrum Dresden-Rossendorf (HZDR); INSERM; University Hospital Cologne; Western University; German Cancer Research Center (DKFZ) Heidelberg; Heidelberg University Hospital; University of Strasbourg; National Center for Tumor Diseases (NCT); Lawson Health Research Institute; Helmholtz Imaging; AP-HP; Institut du Cerveau – Paris Brain Institute - ICM; Balgrist University Hospital; Children’s National Hospital; Reutlingen University; UCL Hawkes Institute; University College London Hospital; EnAcuity Ltd.; University Hospital Leipzig; HIDSS4Health - Helmholtz Information and Data Science School for Health; AP-HP, Hôpital de la Pitié-Salpêtrière; Else Kröner Fresenius Center for Digital Health; Faculty of Medicine and University Hospital Carl Gustav Carus; AI Health Innovation Cluster; University Medical Center Heidelberg; Scialytics; DZHK Partnersite Heidelberg-Mannheim; Verb Surgical Inc.; Children; DAN; Queens’ University

Surgical data science (SDS) is rapidly advancing, yet clinical adoption of artificial intelligence (AI) in surgery remains severely limited, with inadequate validation emerging as a key obstacle. In fact, existing validation practices often neglect the temporal and hierarchical structure of intraoperative videos, producing misleading, unstable, or clinically irrelevant results. In a pioneering, consensus-driven effort, we introduce the first comprehensive catalog of validation pitfalls in AI-based surgical video analysis, derived from a multi-stage Delphi process with 91 international experts. The collected pitfalls span three categories: (1) data (e.g., incomplete annotation, spurious correlations), (2) metric selection and configuration (e.g., neglect of temporal stability, mismatch with clinical needs), and (3) aggregation and reporting (e.g., clinically uninformative aggregation, failure to account for frame dependencies in hierarchical data structures). A systematic review of surgical AI papers reveals that these pitfalls are widespread in current practice, with the majority of studies failing to account for temporal dynamics or hierarchical data structure, or relying on clinically uninformative metrics. Experiments on real surgical video datasets provide the first empirical evidence that ignoring temporal and hierarchical data structures can lead to drastic understatement of uncertainty, obscure critical failure modes, and even alter algorithm rankings. This work establishes a framework for the rigorous validation of surgical video analysis algorithms, providing a foundation for safe clinical translation, benchmarking, regulatory review, and future reporting standards in the field.
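To make the hierarchical-data pitfall concrete, the following Python sketch (our own illustration on synthetic data, not the authors' experiments) compares a naive binomial standard error that treats every frame as an independent sample with a percentile bootstrap that resamples whole videos. The function names, the 10-video setup, and the per-video accuracies are hypothetical.

```python
import random
import statistics
from collections import defaultdict


def per_video_accuracy(frame_correct, video_ids):
    """Aggregate 0/1 frame-level correctness into one accuracy value per video."""
    groups = defaultdict(list)
    for correct, vid in zip(frame_correct, video_ids):
        groups[vid].append(correct)
    return {vid: sum(vals) / len(vals) for vid, vals in groups.items()}


def naive_frame_se(frame_correct):
    """Binomial standard error that (wrongly) treats every frame as independent."""
    n = len(frame_correct)
    p = sum(frame_correct) / n
    return (p * (1 - p) / n) ** 0.5


def video_bootstrap_ci(frame_correct, video_ids, n_boot=2000, seed=0):
    """95% percentile interval for mean per-video accuracy, resampling whole videos."""
    rng = random.Random(seed)
    scores = list(per_video_accuracy(frame_correct, video_ids).values())
    means = sorted(statistics.fmean(rng.choices(scores, k=len(scores))) for _ in range(n_boot))
    return means[int(0.025 * n_boot)], means[int(0.975 * n_boot) - 1]


# Synthetic example: 10 videos x 200 frames; the model does well on some videos, poorly on others.
video_acc = [0.95] * 5 + [0.40] * 5
frame_correct, video_ids = [], []
for vid, acc in enumerate(video_acc):
    n_correct = round(acc * 200)
    frame_correct += [1] * n_correct + [0] * (200 - n_correct)
    video_ids += [vid] * 200

if __name__ == "__main__":
    se = naive_frame_se(frame_correct)
    lo, hi = video_bootstrap_ci(frame_correct, video_ids)
    print(f"naive frame-level 95% CI width: {2 * 1.96 * se:.3f}")
    print(f"video-level bootstrap 95% CI: ({lo:.3f}, {hi:.3f})")
```

Because frames within a video are strongly correlated, the video-level interval in this toy setup is several times wider than the naive frame-level one, which is the kind of understated uncertainty the catalog describes.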

Bioinformatic analysis of the microbiota revealed that certain metabolic pathways, such as the amino-acid biosynthesis pathway and the aminoacyl-tRNA synthesis pathway, are associated with low and high residual feed intake (LRFI and HRFI). The latter pathway is associated with increased propionate production. Yet, in vitro fermentation-profile analyses revealed that LRFI pigs, from the most efficient genetic line, produced more acetate (+15%) and propionate (+56%) from the insoluble fraction (IF), which contains the insoluble dietary fibre recovered after simulated upper gastrointestinal digestion. Valerate was also more abundant in LRFI pigs (P < 0.01). 16S sequencing of the microbes responsible for fermentation suggested that propionate obtained from the fraction of feed that is indigestible by the host is produced mainly by Prevotella and Lactobacillus. This production was strongly correlated with backfat thickness in LRFI pigs (Spearman's correlation = 0.80), whereas butyrate production was moderately correlated with feed efficiency in HRFI pigs (Spearman's correlation = 0.44). These results indicate that propionate production is related to fat metabolism, suggesting that activation of the GPR43 receptor by propionate could play a physiological role in adipose cells in RFI pigs. These observations highlight significant functional differences between the microbiota of HRFI and LRFI pigs, as well as variability within more efficient pigs that could be exploited to improve performance.
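Spearman's correlation, used above to relate propionate production to backfat thickness, is Pearson's correlation computed on ranks. A minimal pure-Python sketch with average ranks for ties follows; the function names are our own and the example values are made up for illustration, not data from the study.

```python
def average_ranks(values):
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = mean_rank
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


if __name__ == "__main__":
    # Hypothetical propionate (mM) and backfat (mm) values for five animals.
    print(spearman([1, 2, 3, 4, 5], [2, 1, 4, 3, 5]))  # ≈ 0.8
```

Working on ranks makes the coefficient sensitive only to monotone association, which suits small animal cohorts where the relationship need not be linear.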

Living systems exhibit a range of fundamental characteristics: they are active, self-referential, self-modifying systems. This paper explores how these characteristics create challenges for conventional scientific approaches and why they require new theoretical and formal frameworks. We introduce a distinction between 'natural time', the continuing present of physical processes, and 'representational time', with its framework of past, present and future that emerges with life itself. Representational time enables memory, learning and prediction, functions of living systems essential for their survival. Through examples from evolution, embryogenesis and metamorphosis we show how living systems navigate the apparent contradictions arising from self-reference as natural time unwinds self-referential loops into developmental spirals. Conventional mathematical and computational formalisms struggle to model self-referential and self-modifying systems without running into paradox. We identify promising new directions for modelling self-referential systems, including domain theory, co-algebra, genetic programming, and self-modifying algorithms. There are broad implications for biology, cognitive science and social sciences, because self-reference and self-modification are not problems to be avoided but core features of living systems that must be modelled to understand life's open-ended creativity.

A groundbreaking multi-institutional collaboration introduces an AI co-scientist system built on Gemini 2.0 that successfully generates and validates novel scientific hypotheses across biomedical domains, achieving experimental validation of drug repurposing predictions and independently discovering unpublished findings in bacterial evolution.

Laboratory research is a complex, collaborative process that involves several stages, including hypothesis formulation, experimental design, data generation and analysis, and manuscript writing. Although reproducibility and data sharing are increasingly prioritized at the publication stage, integrating these principles at earlier stages of laboratory research has been hampered by the lack of broadly applicable solutions. Here, we propose that the workflow used in modern software development offers a robust framework for enhancing reproducibility and collaboration in laboratory research. In particular, we show that GitHub, a platform widely used for collaborative software projects, can be effectively adapted to organize and document all aspects of a research project's lifecycle in a molecular biology laboratory. We outline a three-step approach for incorporating the GitHub ecosystem into laboratory research workflows: (1) designing and organizing experiments using issues and project boards, (2) documenting experiments and data analyses with a version control system, and (3) ensuring reproducible software environments for data analyses and writing tasks with containerized packages. The versatility, scalability, and affordability of this approach make it suitable for various scenarios, ranging from small research groups to large, cross-institutional collaborations. Adopting this framework from a project's outset can increase the efficiency and fidelity of knowledge transfer within and across research laboratories. An example GitHub repository based on this approach is available at this https URL, and a template repository that can be copied is available at this https URL.
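The containerized environments in step 3 are the authors' mechanism for reproducibility. As a lightweight complement, not a replacement, a hypothetical Python helper like the one below can record the interpreter and installed-package versions so that the snapshot can be committed alongside an analysis under version control. The function names and the `environment.json` file name are our own choices.

```python
import json
import platform
from importlib import metadata


def environment_snapshot() -> dict:
    """Collect interpreter, OS, and installed-package versions for the running environment."""
    packages = {}
    for dist in metadata.distributions():
        meta = dist.metadata
        name = meta.get("Name") if meta is not None else None
        if name:
            packages[name] = dist.version
    return {
        "python": platform.python_version(),
        "implementation": platform.python_implementation(),
        "platform": platform.platform(),
        "packages": dict(sorted(packages.items(), key=lambda kv: kv[0].lower())),
    }


def write_snapshot(path: str = "environment.json") -> None:
    """Write the snapshot as JSON so it can be version-controlled with the analysis."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(environment_snapshot(), fh, indent=2)


if __name__ == "__main__":
    snap = environment_snapshot()
    print(snap["python"], len(snap["packages"]), "packages")
```

Calling `write_snapshot()` at the end of an analysis script and committing the resulting file records the software versions used for each result, which also helps when a container image is later rebuilt.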

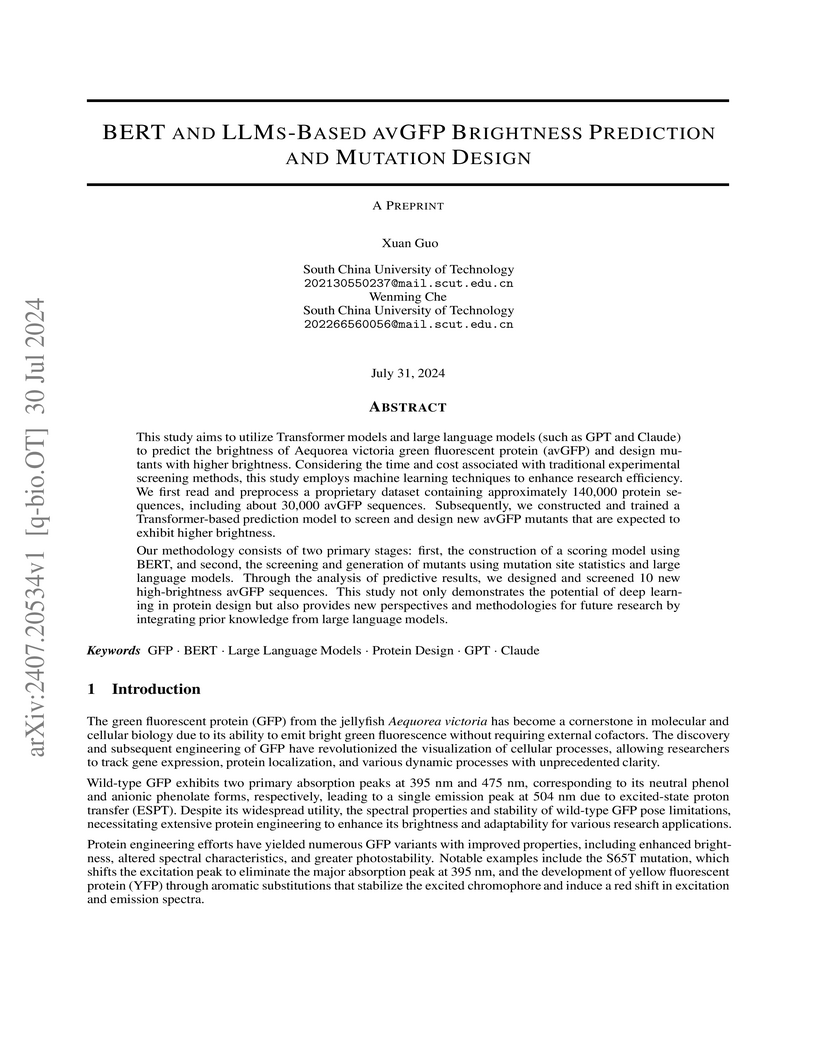

This study aims to use Transformer models and large language models (such as GPT and Claude) to predict the brightness of Aequorea victoria green fluorescent protein (avGFP) and to design mutants with higher brightness. Given the time and cost of traditional experimental screening, this study employs machine learning techniques to improve research efficiency. We first read and preprocessed a proprietary dataset containing approximately 140,000 protein sequences, including about 30,000 avGFP sequences. We then constructed and trained a Transformer-based prediction model to screen and design new avGFP mutants expected to exhibit higher brightness.

Our methodology consists of two primary stages: first, the construction of a scoring model using BERT, and second, the screening and generation of mutants using mutation site statistics and large language models. By analyzing the predictions, we designed and screened 10 new high-brightness avGFP sequences. This study not only demonstrates the potential of deep learning in protein design but also provides new perspectives and methodologies for future research by integrating prior knowledge from large language models.
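Of the two stages, the mutation-site statistics are the simplest to illustrate. The Python sketch below is a hypothetical version of our own; neither the BERT scorer nor the LLM-based generation is reproduced. It lists substitutions relative to a wild-type sequence and ranks each substitution by the fraction of its occurrences that fall among the brightest variants. The toy sequences and brightness values are made up.

```python
from collections import Counter


def substitutions(wild_type: str, variant: str):
    """(1-based position, wild-type residue, mutant residue) for aligned, equal-length sequences."""
    if len(wild_type) != len(variant):
        raise ValueError("sequences must be aligned and of equal length")
    return [(i + 1, a, b) for i, (a, b) in enumerate(zip(wild_type, variant)) if a != b]


def enrichment_table(wild_type, variants, brightness, top_fraction=0.2):
    """Rank substitutions by how often they occur among the top `top_fraction` brightest variants."""
    ranked = sorted(zip(variants, brightness), key=lambda vb: vb[1], reverse=True)
    n_top = max(1, int(len(ranked) * top_fraction))
    seen, in_top = Counter(), Counter()
    for rank, (seq, _) in enumerate(ranked):
        for sub in substitutions(wild_type, seq):
            seen[sub] += 1
            if rank < n_top:
                in_top[sub] += 1
    # Rows are (enrichment, total occurrences, substitution), most enriched first.
    return sorted(((in_top[s] / seen[s], seen[s], s) for s in seen), reverse=True)


if __name__ == "__main__":
    wt = "MSKGEEL"  # toy wild-type fragment
    variants = ["MSKGEEF", "MSKGEEF", "MSRGEEL", "MSKGEEL", "ASKGEEL"]
    brightness = [5.0, 4.2, 1.1, 2.0, 0.4]  # made-up measurements
    for row in enrichment_table(wt, variants, brightness, top_fraction=0.4):
        print(row)
```

Highly enriched substitutions could then be combined into candidate sequences and passed to a learned scoring model for ranking, which is the general shape of the screen-then-score pipeline described above.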

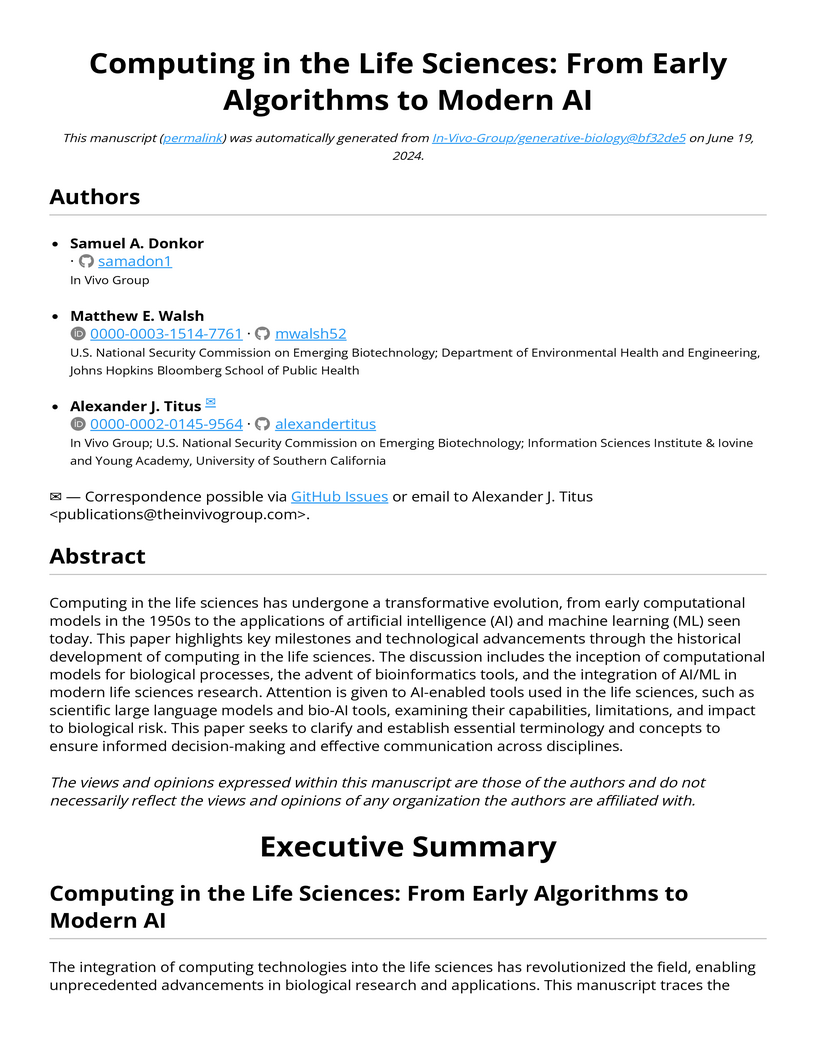

Computing in the life sciences has undergone a transformative evolution, from early computational models in the 1950s to the artificial intelligence (AI) and machine learning (ML) applications seen today. This paper traces the historical development of computing in the life sciences, highlighting key milestones and technological advances. The discussion includes the inception of computational models for biological processes, the advent of bioinformatics tools, and the integration of AI/ML into modern life sciences research. Attention is given to AI-enabled tools used in the life sciences, such as scientific large language models and bio-AI tools, examining their capabilities, limitations, and impact on biological risk. This paper seeks to clarify and establish essential terminology and concepts to ensure informed decision-making and effective communication across disciplines.

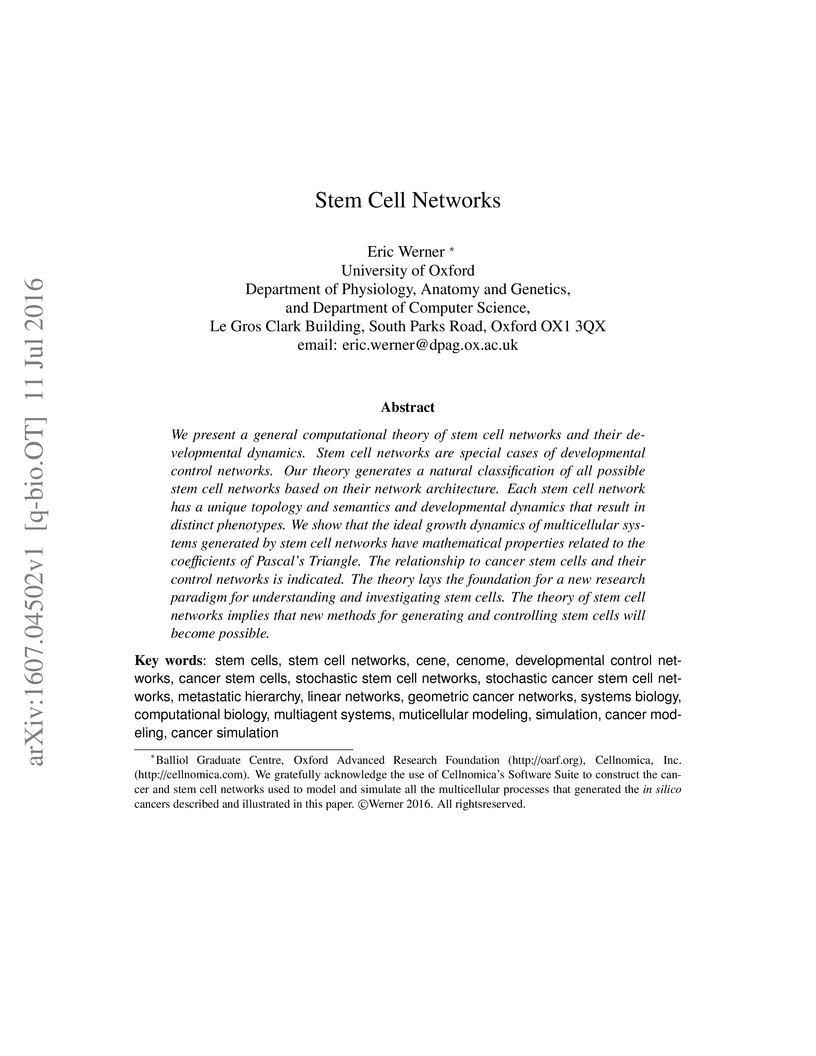

We present a general computational theory of stem cell networks and their developmental dynamics. Stem cell networks are special cases of developmental control networks. Our theory generates a natural classification of all possible stem cell networks based on their network architecture. Each stem cell network has a unique topology, semantics, and developmental dynamics that result in distinct phenotypes. We show that the ideal growth dynamics of multicellular systems generated by stem cell networks have mathematical properties related to the coefficients of Pascal's Triangle. We also indicate the relationship to cancer stem cells and their control networks. The theory lays the foundation for a new research paradigm for understanding and investigating stem cells, and it implies that new methods for generating and controlling stem cells will become possible.
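One simple way to see how Pascal's Triangle can arise in such growth dynamics is a toy model of our own, not necessarily the authors' network semantics: each cell divides once per generation into one daughter that keeps its parent's developmental level and one that advances to the next level. The number of cells at level k after t generations then obeys n(t+1, k) = n(t, k) + n(t, k-1), which is Pascal's recurrence, so n(t, k) = C(t, k). The function name `level_counts` is hypothetical.

```python
from math import comb


def level_counts(generations: int):
    """Toy growth model: each cell divides once per generation into one daughter that keeps
    its parent's level and one daughter that moves to the next level.
    Returns the number of cells at each level after `generations` divisions."""
    counts = [1]  # a single founding stem cell at level 0
    for _ in range(generations):
        nxt = [0] * (len(counts) + 1)
        for level, n in enumerate(counts):
            nxt[level] += n      # daughter staying at this level
            nxt[level + 1] += n  # daughter advancing one level
        counts = nxt
    return counts


if __name__ == "__main__":
    for t in range(5):
        row = level_counts(t)
        assert row == [comb(t, k) for k in range(t + 1)]  # rows of Pascal's Triangle
        print(row)
```

Summing each row gives 2**t cells, the ideal exponential growth of unconstrained division; the binomial coefficients describe how that growth is distributed across developmental levels.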

G-quadruplexes are a relatively new subject in molecular biology, and their role inside the cell remains mysterious. We investigate a possible correlation with mRNA localization. In particular, we hypothesize that G-quadruplexes influence fluid dynamics.

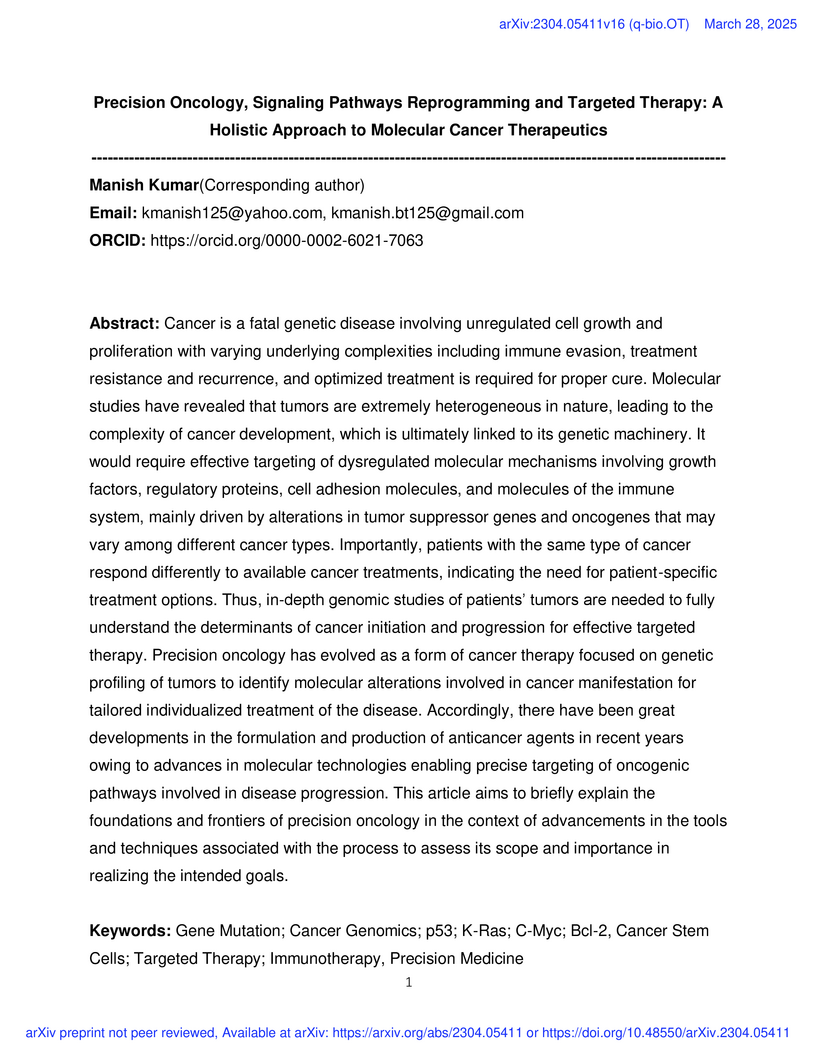

Cancer is a potentially fatal genetic disease involving unregulated cell growth and proliferation, with underlying complexities that include immune evasion, treatment resistance, and recurrence, and a cure requires optimized treatment. Molecular studies have revealed that tumors are extremely heterogeneous, which contributes to the complexity of cancer development and is ultimately linked to their genetic machinery. Effective treatment therefore requires targeting dysregulated molecular mechanisms involving growth factors, regulatory proteins, cell adhesion molecules, and molecules of the immune system, which are mainly driven by alterations in tumor suppressor genes and oncogenes that may vary among cancer types. Importantly, patients with the same type of cancer respond differently to available treatments, indicating the need for patient-specific treatment options. In-depth genomic studies of patients' tumors are thus needed to fully understand the determinants of cancer initiation and progression for effective targeted therapy. Precision oncology has evolved as a form of cancer therapy focused on the genetic profiling of tumors to identify the molecular alterations involved in cancer manifestation and to tailor treatment to the individual patient. Accordingly, the formulation and production of anticancer agents have advanced greatly in recent years, owing to molecular technologies that enable precise targeting of the oncogenic pathways involved in disease progression. This article briefly explains the foundations and frontiers of precision oncology in the context of advances in its associated tools and techniques, and assesses its scope and importance in realizing its intended goals.

Our intuitive, binary understanding of life and lifelikeness is good enough for daily life, but not for research in the natural sciences. Here we propose an operational definition of the lifeness of a particular entity as a scalar: the product of its defining information (algorithmic complexity) integrated over its lifetime. We provide a parameter-free dynamical filtering algorithm that efficiently fits, on the fly, configurations of entities composed of moving particles to a tree structure that can model a wide range of hierarchical entities, while extracting and calculating the complexity, lifespan, and lifeness of the entity and all of its constituent subtrees or subentities. We simulated 41 interacting particle worlds and found preliminary evidence suggesting that the lifeness of entities is associated with the distance to criticality, as roughly measured by the range of the pairwise interaction forces of the elemental particles of the worlds they inhabit. This study is a proof of concept that defining, measuring, and quantifying lifeness is (1) feasible and useful for (2) simplifying theoretical discussions, (3) hierarchically assessing biotic properties such as the number of hierarchical levels and predicted longevity, and (4) classifying both artificial and biological entities, via computer simulations and via a combination of evolutionary and molecular biology approaches, respectively.
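Algorithmic complexity is uncomputable, so any measurement needs a proxy. A common practical choice, assumed here and not necessarily the authors' estimator, is compressed length. The sketch below integrates that proxy over a sequence of serialized snapshots of an entity's configuration with a rectangle rule; it does not reproduce the authors' tree-fitting filter, and the function names are hypothetical.

```python
import zlib


def complexity_proxy(state: bytes) -> int:
    """Compressed length in bytes: a computable upper-bound proxy for algorithmic complexity."""
    return len(zlib.compress(state, 9))


def lifeness(snapshots, dt: float = 1.0) -> float:
    """Rectangle-rule integral of the complexity proxy over an entity's lifetime.
    `snapshots` holds one serialized configuration per time step of length `dt`."""
    return float(sum(complexity_proxy(s) for s in snapshots)) * dt


if __name__ == "__main__":
    simple_long = [b"ab" * 128] * 20      # repetitive structure, long-lived
    rich_short = [bytes(range(256))] * 5  # less compressible structure, short-lived
    print(lifeness(simple_long), lifeness(rich_short))
```

Under this definition an entity scores highly either by carrying a lot of incompressible structure or by persisting for a long time, and the example shows the two factors trading off.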

Although it has been notoriously difficult to pin down precisely what makes life so distinctive and remarkable, there is general agreement that its informational aspect is one key property, perhaps the key property. The unique informational narrative of living systems suggests that life may be characterized by context-dependent causal influences, and in particular, that top-down (or downward) causation, where higher levels influence and constrain the dynamics of lower levels in organizational hierarchies, may be a major contributor to the hierarchical structure of living systems. Here we propose that the origin of life may correspond to a physical transition associated with a shift in causal structure, where information gains direct and context-dependent causal efficacy over the matter in which it is instantiated. Such a transition may be akin to more traditional physical transitions (e.g., thermodynamic phase transitions), with the crucial distinction that determining which phase (non-life or life) a given system is in requires dynamical information and can therefore only be inferred by identifying causal architecture. We discuss some potential novel research directions based on this hypothesis, including potential measures of such a transition that may be amenable to laboratory study, and how the proposed mechanism corresponds to the onset of the unique mode of (algorithmic) information processing characteristic of living systems.

Sea shells are a rich natural source of calcium carbonate. They are made up mainly of CaCO3 in the aragonite form, which occurs as columnar, fibrous, or microsphere-structured crystals. In this work, the bioactivity of sea shell nanoparticles was studied. The collected sea shells were thoroughly washed, dried, and pulverized. The powder was sieved, and particles in the range of 45 to 63 microns were collected. The powdered sea shells were characterized using X-ray diffraction (XRD) and field emission scanning electron microscopy (FESEM). The XRD data showed that the particles were mainly microspheres. Traces of calcite and vaterite were also present. Experiments were conducted to study the loading of aspirin and strontium ranelate into the sea shell powder using the soak-and-dry method. Drug solutions of different concentrations were prepared in ethanol and water. The shell powder was soaked in the drug solutions for 48 h with intermittent ultrasonication, and the mixture was then gently dried in a vacuum oven. In vitro drug release studies were performed in phosphate-buffered saline. The FESEM images showed a distribution of particles of different sizes and shapes. Owing to their natural porosity and crystallinity, sea shells are expected to be useful for drug delivery. Entrapment efficiencies of about 50% for aspirin and 39% for strontium ranelate were observed. A burst release of 80% of the drug occurred within two hours for both drugs, and the rest was released slowly over 19 h. Further modification of the sea shell with non-toxic polymers is also planned as part of this work. These advantages make sea shell powder a potential candidate for drug delivery.
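The abstract reports entrapment efficiency and cumulative release without stating the formulas. Assuming the conventional definitions (entrapment efficiency as the share of the added drug that is not left free in solution, and cumulative release as the running total released relative to the amount loaded), the calculations can be sketched as follows; the function names and example amounts are hypothetical, chosen only to echo the reported 50% and 80% figures.

```python
def entrapment_efficiency(total_drug_mg: float, free_drug_mg: float) -> float:
    """Entrapment efficiency (%) = (drug added - drug left unentrapped) / drug added * 100."""
    if total_drug_mg <= 0:
        raise ValueError("total drug must be positive")
    return (total_drug_mg - free_drug_mg) / total_drug_mg * 100.0


def cumulative_release_percent(released_per_interval_mg, loaded_mg: float):
    """Cumulative release (%) at each sampling time, relative to the amount loaded."""
    running, out = 0.0, []
    for amount in released_per_interval_mg:
        running += amount
        out.append(running / loaded_mg * 100.0)
    return out


if __name__ == "__main__":
    # Hypothetical amounts: 10 mg of drug added, 5 mg recovered unentrapped from the supernatant.
    print(entrapment_efficiency(10.0, 5.0))
    # Hypothetical release profile for 5 mg loaded: burst in the first 2 h, then slow release.
    print(cumulative_release_percent([4.0, 0.5, 0.5], loaded_mg=5.0))
```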

In this review we summarize theoretical progress in the field of active matter, placing it in the context of recent experiments. Our approach offers a unified framework for the mechanical and statistical properties of living matter: biofilaments and molecular motors in vitro or in vivo, collections of motile microorganisms, animal flocks, and chemical or mechanical imitations. A major goal of the review is to integrate the several approaches proposed in the literature, from semi-microscopic to phenomenological. In particular, we first consider "dry" systems, defined as those where momentum is not conserved due to friction with a substrate or an embedding porous medium, and clarify the differences and similarities between two types of orientationally ordered states, the nematic and the polar. We then consider the active hydrodynamics of a suspension, and relate it to, as well as contrast it with, the dry case. We further highlight various large-scale instabilities of these nonequilibrium states of matter. We discuss and connect various semi-microscopic derivations of the continuum theory, highlighting the unifying and generic nature of the continuum model. Throughout the review, we discuss the experimental relevance of these theories for describing bacterial swarms and suspensions, the cytoskeleton of living cells, and vibrated granular materials. We suggest promising extensions towards greater realism in specific contexts from cell biology to ethology, and remark on some exotic active-matter analogues. Lastly, we summarize the outlook for a quantitative understanding of active matter, through the interplay of detailed theory with controlled experiments on simplified systems, with living or artificial constituents.
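The Vicsek model is a standard minimal example of a dry, polar active system of the kind discussed above: self-propelled particles move at constant speed without momentum conservation and align with their neighbours in the presence of noise. The pure-Python sketch below is our own illustration rather than a model taken from the review; the box size, interaction radius, speed, noise amplitudes, and function names are arbitrary choices. The polar order parameter is close to 1 in a flocking state and near 0 in a disordered one.

```python
import math
import random


def vicsek_step(pos, theta, L, r, v0, eta, rng):
    """One synchronous update of the Vicsek model in a periodic L x L box."""
    cos_t = [math.cos(t) for t in theta]
    sin_t = [math.sin(t) for t in theta]
    new_theta = []
    for xi, yi in pos:
        sx = sy = 0.0
        for j, (xj, yj) in enumerate(pos):
            dx = xj - xi
            dy = yj - yi
            dx -= L * round(dx / L)  # minimum-image convention for periodic boundaries
            dy -= L * round(dy / L)
            if dx * dx + dy * dy <= r * r:  # the neighbourhood includes the particle itself
                sx += cos_t[j]
                sy += sin_t[j]
        # Align with the mean neighbour heading, plus uniform noise in [-eta/2, eta/2).
        new_theta.append(math.atan2(sy, sx) + eta * (rng.random() - 0.5))
    # Constant-speed self-propulsion; momentum is not conserved ("dry" system).
    new_pos = [((x + v0 * math.cos(t)) % L, (y + v0 * math.sin(t)) % L)
               for (x, y), t in zip(pos, new_theta)]
    return new_pos, new_theta


def polar_order(theta):
    """Magnitude of the mean heading vector: near 1 when flocking, near 0 when disordered."""
    n = len(theta)
    return math.hypot(sum(math.cos(t) for t in theta) / n,
                      sum(math.sin(t) for t in theta) / n)


def simulate(n=60, L=5.0, r=1.5, v0=0.03, eta=0.2, steps=100, seed=1):
    """Run from random positions and headings; return the final polar order parameter."""
    rng = random.Random(seed)
    pos = [(rng.uniform(0, L), rng.uniform(0, L)) for _ in range(n)]
    theta = [rng.uniform(-math.pi, math.pi) for _ in range(n)]
    for _ in range(steps):
        pos, theta = vicsek_step(pos, theta, L, r, v0, eta, rng)
    return polar_order(theta)


if __name__ == "__main__":
    print("low noise:", simulate(eta=0.2))
    print("high noise:", simulate(eta=6.0))
```

Lowering the noise amplitude `eta` should push the system toward the ordered, flocking (polar) phase; where the transition falls depends on density, interaction radius, and run length.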

The rise of advanced chatbots, such as ChatGPT, has sparked curiosity in the scientific community. ChatGPT is a general-purpose chatbot powered by the large language models (LLMs) GPT-3.5 and GPT-4, with the potential to impact numerous fields, including computational biology. In this article, we offer ten tips based on our experience with ChatGPT to assist computational biologists in optimizing their workflows. We have collected relevant prompts and reviewed the nascent literature in the field, compiling tips we expect to remain pertinent for future ChatGPT and LLM iterations, ranging from code refactoring to scientific writing to prompt engineering. We hope our work will help bioinformaticians to complement their workflows while staying aware of the various implications of using this technology. Additionally, to track new and creative applications of bioinformatics tools such as ChatGPT, we have established a GitHub repository at this https URL. We believe that the ethical use of ChatGPT and other LLMs will increase the efficiency of computational biologists, ultimately advancing the pace of scientific discovery in the life sciences.

Because obesity is a risk factor for many serious illnesses such as diabetes, a better understanding of obesity and eating disorders has been attracting attention in neurobiology, psychiatry, and neuroeconomics. This paper presents future study directions by unifying (i) the economic theory of addiction and obesity (Becker and Murphy, 1988; Levy, 2002; Dragone, 2009) and (ii) recent empirical findings in the neuroeconomics and neurobiology of obesity and addiction. It is suggested that neurobiological substrates such as adiponectin, dopamine (D2 receptors), endocannabinoids, ghrelin, leptin, nesfatin-1, norepinephrine, orexin, oxytocin, serotonin, vasopressin, CCK, GLP-1, MCH, PYY, and stress hormones (e.g., CRF) in the brain (e.g., OFC, VTA, NAcc, and the hypothalamus) may determine parameters in the economic theory of obesity. The importance of introducing time-inconsistent and gain/loss-asymmetrical temporal discounting (intertemporal choice) models based on Tsallis' statistics, and of incorporating time-perception parameters into the neuroeconomic theory, is also emphasized. Future directions in the application of the theory to studies in the neuroeconomics and neuropsychiatry of obesity at the molecular level, which may help medical/psychopharmacological treatments of obesity (e.g., with sibutramine), are discussed.
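The Tsallis-statistics discount models mentioned above are usually written with the q-exponential function. A minimal sketch, assuming the common form V(D)/V(0) = 1/[1 + (1 - q)kD]^(1/(1 - q)) with our own parameter and function names (not taken from this paper): q = 1 recovers time-consistent exponential discounting exp(-kD), and q = 0 gives hyperbolic discounting 1/(1 + kD), the textbook time-inconsistent case. The reward amounts and delays in `prefers_sooner` are hypothetical.

```python
import math


def q_exponential_discount(delay: float, k: float, q: float) -> float:
    """Discount factor V(D)/V(0) = 1 / [1 + (1 - q) k D]^(1 / (1 - q)).
    q = 1 gives exponential discounting exp(-k D); q = 0 gives hyperbolic 1 / (1 + k D)."""
    if abs(1.0 - q) < 1e-12:
        return math.exp(-k * delay)
    base = 1.0 + (1.0 - q) * k * delay
    if base <= 0:
        raise ValueError("delay lies outside the support of the q-exponential for this q")
    return base ** (-1.0 / (1.0 - q))


def prefers_sooner(front_delay, k, q, small=100.0, large=150.0, gap=30.0):
    """True if a smaller-sooner reward is valued above a larger reward arriving `gap` later."""
    v_small = small * q_exponential_discount(front_delay, k, q)
    v_large = large * q_exponential_discount(front_delay + gap, k, q)
    return v_small > v_large


if __name__ == "__main__":
    for q in (0.0, 0.5, 1.0):
        print(q, [prefers_sooner(d, 0.05, q) for d in (0, 30, 60)])
```

With exponential discounting (q = 1) the preference does not change as both rewards are pushed into the future, whereas with hyperbolic discounting (q = 0) it reverses, the kind of time inconsistency that such models use to describe impulsive eating choices.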
