symbolic-computation

A novel symbolic regression framework combines multimodal large language models with Kolmogorov-Arnold Networks to infer mathematical expressions from data plots, achieving higher success rates than Mathematica's FindFormula on randomly generated functions while demonstrating effectiveness with both commercial and open-source LLMs.

Peking University researchers develop AI-Newton, a concept-driven framework that autonomously discovers physical laws from experimental data without prior knowledge, successfully rediscovering fundamental laws of Newtonian mechanics while identifying approximately 90 physical concepts and 50 general laws through an iterative knowledge building process.

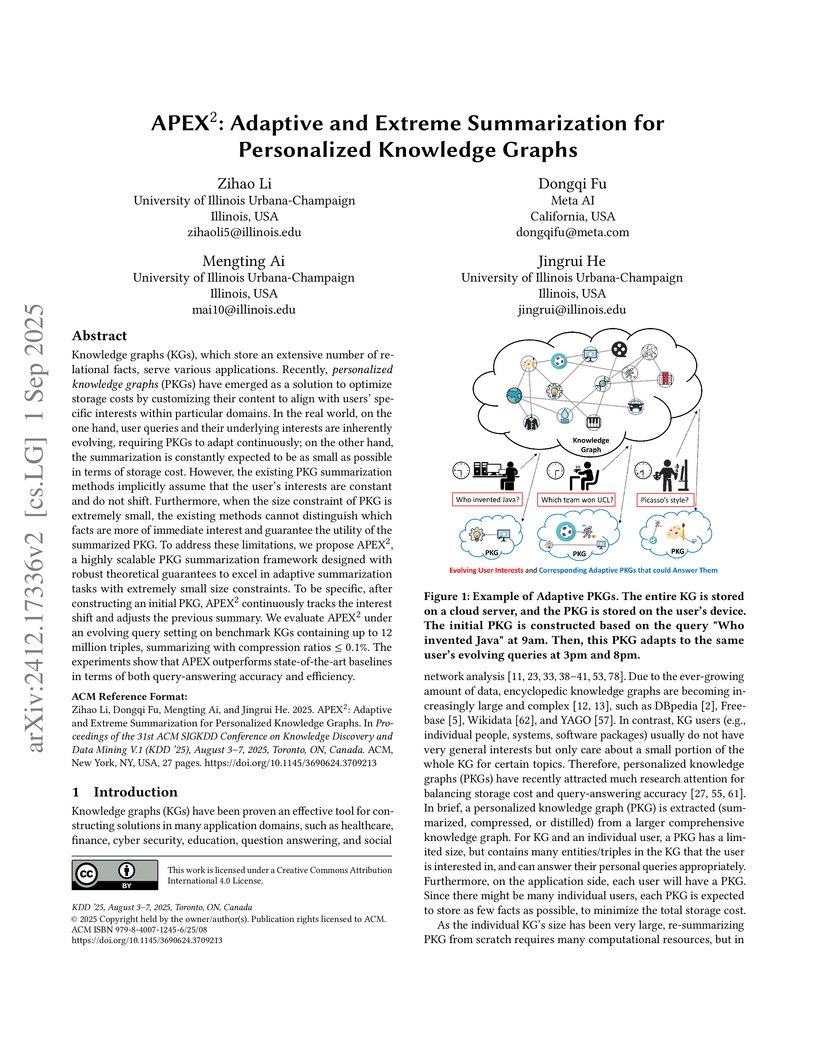

Knowledge graphs (KGs), which store an extensive number of relational facts, serve various applications. Recently, personalized knowledge graphs (PKGs) have emerged as a solution to optimize storage costs by customizing their content to align with users' specific interests within particular domains. In the real world, on one hand, user queries and their underlying interests are inherently evolving, requiring PKGs to adapt continuously; on the other hand, the summarization is constantly expected to be as small as possible in terms of storage cost. However, the existing PKG summarization methods implicitly assume that the user's interests are constant and do not shift. Furthermore, when the size constraint of PKG is extremely small, the existing methods cannot distinguish which facts are more of immediate interest and guarantee the utility of the summarized PKG. To address these limitations, we propose APEX2, a highly scalable PKG summarization framework designed with robust theoretical guarantees to excel in adaptive summarization tasks with extremely small size constraints. To be specific, after constructing an initial PKG, APEX2 continuously tracks the interest shift and adjusts the previous summary. We evaluate APEX2 under an evolving query setting on benchmark KGs containing up to 12 million triples, summarizing with compression ratios ≤0.1%. The experiments show that APEX outperforms state-of-the-art baselines in terms of both query-answering accuracy and efficiency. Code is available at this https URL.

We present a novel method for symbolic regression (SR), the task of searching for compact programmatic hypotheses that best explain a dataset. The problem is commonly solved using genetic algorithms; we show that we can enhance such methods by inducing a library of abstract textual concepts. Our algorithm, called LaSR, uses zero-shot queries to a large language model (LLM) to discover and evolve concepts occurring in known high-performing hypotheses. We discover new hypotheses using a mix of standard evolutionary steps and LLM-guided steps (obtained through zero-shot LLM queries) conditioned on discovered concepts. Once discovered, hypotheses are used in a new round of concept abstraction and evolution. We validate LaSR on the Feynman equations, a popular SR benchmark, as well as a set of synthetic tasks. On these benchmarks, LaSR substantially outperforms a variety of state-of-the-art SR approaches based on deep learning and evolutionary algorithms. Moreover, we show that LaSR can be used to discover a novel and powerful scaling law for LLMs.

Researchers from Princeton University introduce CoALA, a conceptual framework that leverages historical cognitive architectures and production systems to organize and guide the design of language agents. It demonstrates CoALA's utility by systematically categorizing existing agents and identifying directions for developing more capable AI systems.

For various 2≤n,m≤6, we propose some new algorithms for multiplying an n×m matrix with an m×6 matrix over a possibly noncommutative coefficient ring.

PySR is an open-source library for practical symbolic regression, a type of

machine learning which aims to discover human-interpretable symbolic models.

PySR was developed to democratize and popularize symbolic regression for the

sciences, and is built on a high-performance distributed back-end, a flexible

search algorithm, and interfaces with several deep learning packages. PySR's

internal search algorithm is a multi-population evolutionary algorithm, which

consists of a unique evolve-simplify-optimize loop, designed for optimization

of unknown scalar constants in newly-discovered empirical expressions. PySR's

backend is the extremely optimized Julia library SymbolicRegression.jl, which

can be used directly from Julia. It is capable of fusing user-defined operators

into SIMD kernels at runtime, performing automatic differentiation, and

distributing populations of expressions to thousands of cores across a cluster.

In describing this software, we also introduce a new benchmark,

"EmpiricalBench," to quantify the applicability of symbolic regression

algorithms in science. This benchmark measures recovery of historical empirical

equations from original and synthetic datasets.

We investigate the local integrability and linearizability of a family of

three-dimensional polynomial systems with the matrix of the linear

approximation having the eigenvalues 1,ζ,ζ2, where ζ is a

primitive cubic root of unity. We establish a criterion for the convergence of

the Poincar\'e--Dulac normal form of the systems and examine the relationship

between the normal form and integrability. Additionally, we introduce an

efficient algorithm to determine the necessary conditions for the integrability

of the systems. This algorithm is then applied to a quadratic subfamily of the

systems to analyze its integrability and linearizability. Our findings offer

insights into the integrability properties of three-dimensional polynomial

systems.

Real-world phenomena can often be conveniently described by dynamical systems (that is, ODE systems in the state-space form). However, if one observes the state of the system only partially, the observed quantities (outputs) and the inputs of the system can typically be related by more complicated differential-algebraic equations (DAEs). Therefore, a natural question (referred to as the realizability problem) is: given a differential-algebraic equation (say, fitted from data), does it come from a partially observed dynamical system? A special case in which the functions involved in the dynamical system are rational is of particular interest. For a single differential-algebraic equation in a single output variable, Forsman has shown that it is realizable by a rational dynamical system if and only if the corresponding hypersurface is unirational, and he turned this into an algorithm in the first-order case.

In this paper, we study a more general case of single-input-single-output equations. We show that if a realization by a rational dynamical system exists, the system can be taken to have the dimension equal to the order of the DAE. We provide a complete algorithm for first-order DAEs. We also show that the same approach can be used for higher-order DAEs using several examples from the literature.

A framework uses Large Language Models to automatically discover explicit mathematical equations for nonlinear dynamical systems directly from observational data. It accurately identifies governing equations for various PDEs and ODEs, achieving high reconstruction and generalization accuracy while significantly reducing the need for handcrafted algorithms.

We show how polynomial path orders can be employed efficiently in conjunction

with weak innermost dependency pairs to automatically certify polynomial

runtime complexity of term rewrite systems and the polytime computability of

the functions computed. The established techniques have been implemented and we

provide ample experimental data to assess the new method.

This expository and review paper deals with the Diamond Lemma for ring

theory, which is proved in the first section of G. M. Bergman, The Diamond

Lemma for Ring Theory, Advances in Mathematics, 29 (1978), pp. 178-218. No

originality of the present note is claimed on the part of the author, except

for some suggestions and figures. Throughout this paper, I shall mostly use

Bergman's expressions in his paper. In Remarks and Notes, the reader will find

some useful information on this topic.

We consider the problem of determining multiple steady states for positive

real values in models of biological networks. Investigating the potential for

these in models of the mitogen-activated protein kinases (MAPK) network has

consumed considerable effort using special insights into the structure of

corresponding models. Here we apply combinations of symbolic computation

methods for mixed equality/inequality systems, specifically virtual

substitution, lazy real triangularization and cylindrical algebraic

decomposition. We determine multistationarity of an 11-dimensional MAPK network

when numeric values are known for all but potentially one parameter. More

precisely, our considered model has 11 equations in 11 variables and 19

parameters, 3 of which are of interest for symbolic treatment, and furthermore

positivity conditions on all variables and parameters.

Explainable Artificial Intelligence (or xAI) has become an important research

topic in the fields of Machine Learning and Deep Learning. In this paper, we

propose a Genetic Programming (GP) based approach, named Genetic Programming

Explainer (GPX), to the problem of explaining decisions computed by AI systems.

The method generates a noise set located in the neighborhood of the point of

interest, whose prediction should be explained, and fits a local explanation

model for the analyzed sample. The tree structure generated by GPX provides a

comprehensible analytical, possibly non-linear, symbolic expression which

reflects the local behavior of the complex model. We considered three machine

learning techniques that can be recognized as complex black-box models: Random

Forest, Deep Neural Network and Support Vector Machine in twenty data sets for

regression and classifications problems. Our results indicate that the GPX is

able to produce more accurate understanding of complex models than the state of

the art. The results validate the proposed approach as a novel way to deploy GP

to improve interpretability.

How are emotions formed? Through extensive debate and the promulgation of diverse theories , the theory of constructed emotion has become prevalent in recent research on emotions. According to this theory, an emotion concept refers to a category formed by interoceptive and exteroceptive information associated with a specific emotion. An emotion concept stores past experiences as knowledge and can predict unobserved information from acquired information. Therefore, in this study, we attempted to model the formation of emotion concepts using a constructionist approach from the perspective of the constructed emotion theory. Particularly, we constructed a model using multilayered multimodal latent Dirichlet allocation , which is a probabilistic generative model. We then trained the model for each subject using vision, physiology, and word information obtained from multiple people who experienced different visual emotion-evoking stimuli. To evaluate the model, we verified whether the formed categories matched human subjectivity and determined whether unobserved information could be predicted via categories. The verification results exceeded chance level, suggesting that emotion concept formation can be explained by the proposed model.

Computational context understanding refers to an agent's ability to fuse disparate sources of information for decision-making and is, therefore, generally regarded as a prerequisite for sophisticated machine reasoning capabilities, such as in artificial intelligence (AI). Data-driven and knowledge-driven methods are two classical techniques in the pursuit of such machine sense-making capability. However, while data-driven methods seek to model the statistical regularities of events by making observations in the real-world, they remain difficult to interpret and they lack mechanisms for naturally incorporating external knowledge. Conversely, knowledge-driven methods, combine structured knowledge bases, perform symbolic reasoning based on axiomatic principles, and are more interpretable in their inferential processing; however, they often lack the ability to estimate the statistical salience of an inference. To combat these issues, we propose the use of hybrid AI methodology as a general framework for combining the strengths of both approaches. Specifically, we inherit the concept of neuro-symbolism as a way of using knowledge-bases to guide the learning progress of deep neural networks. We further ground our discussion in two applications of neuro-symbolism and, in both cases, show that our systems maintain interpretability while achieving comparable performance, relative to the state-of-the-art.

We propose an effective method for primary decomposition of symmetric ideals. Let K[X]=K[x1,…,xn] be the n-valuables polynomial ring over a field K and Sn the symmetric group of order n. We consider the canonical action of Sn on K[X] i.e. σ(f(x1,…,xn))=f(xσ(1),…,xσ(n)) for σ∈Sn. For an ideal I of K[X], I is called {\em symmetric} if σ(I)=I for any σ∈Sn. For a minimal primary decomposition I=Q1∩⋯∩Qr of a symmetric ideal I, σ(I)=σ(Q1)∩⋯∩σ(Qr) is a minimal primary decomposition of I for any σ∈Sn. We utilize this property to compute a full primary decomposition of I efficiently from partial primary components. We investigate the effectiveness of our algorithm by implementing it in the computer algebra system Risa/Asir.

In this report, we summarize the takeaways from the first NeurIPS 2021 NetHack Challenge. Participants were tasked with developing a program or agent that can win (i.e., 'ascend' in) the popular dungeon-crawler game of NetHack by interacting with the NetHack Learning Environment (NLE), a scalable, procedurally generated, and challenging Gym environment for reinforcement learning (RL). The challenge showcased community-driven progress in AI with many diverse approaches significantly beating the previously best results on NetHack. Furthermore, it served as a direct comparison between neural (e.g., deep RL) and symbolic AI, as well as hybrid systems, demonstrating that on NetHack symbolic bots currently outperform deep RL by a large margin. Lastly, no agent got close to winning the game, illustrating NetHack's suitability as a long-term benchmark for AI research.

Traditional logic programming relies on symbolic computation on the CPU, which can limit performance for large-scale inference tasks. Recent advances in GPU hardware enable high-throughput matrix operations, motivating a shift toward parallel logic inference. Boolean Matrix Logic Programming (BMLP) introduces a novel approach to datalog query evaluation using Boolean matrix algebra, well-suited to GPU acceleration. Building on this paradigm, we present two GPU-accelerated BMLP algorithms for bottom-up inference over linear dyadic recursive datalog programs. We further extend the BMLP theoretical framework to support general linear recursion with binary predicates. Empirical evaluations on reachability queries in large directed graphs and the Freebase 15K dataset show that our methods achieve 1-4 orders of magnitude speed up over state-of-the-art systems. These results demonstrate that Boolean matrix-based reasoning can significantly advance the scalability and efficiency of logic programming on modern hardware. Source code is available on this https URL.

We present an explicit algorithmic method for computing square roots in quaternion algebras over global fields of characteristic different from 2.

There are no more papers matching your filters at the moment.