causal-inference

This research disentangles the causal effects of pre-training, mid-training, and reinforcement learning (RL) on language model reasoning using a controlled synthetic task framework. It establishes that RL extends reasoning capabilities only under specific conditions of pre-training exposure and data calibration, with mid-training playing a crucial role in bridging training stages and improving generalization.

The DEMOCRITUS system establishes a new framework for building large causal models (LCMs) by extracting and structuring textual knowledge from Large Language Models (LLMs) across diverse domains. It leverages a Geometric Transformer to embed and organize vast causal claims into coherent, navigable manifolds, which, unlike raw LLM outputs, exhibit global causal coherence and interpretable local structures.

Video unified models exhibit strong capabilities in understanding and generation, yet they struggle with reason-informed visual editing even when equipped with powerful internal vision-language models (VLMs). We attribute this gap to two factors: 1) existing datasets are inadequate for training and evaluating reasoning-aware video editing, and 2) an inherent disconnect between the models' reasoning and editing capabilities, which prevents the rich understanding from effectively instructing the editing process. Bridging this gap requires an integrated framework that connects reasoning with visual transformation. To this end, we introduce the Reason-Informed Video Editing (RVE) task, which requires reasoning about physical plausibility and causal dynamics during editing. To support systematic evaluation, we construct RVE-Bench, a comprehensive benchmark with two complementary subsets: Reasoning-Informed Video Editing and In-Context Video Generation. These subsets cover diverse reasoning dimensions and real-world editing scenarios. Building upon this foundation, we propose ReViSE, a Self-Reflective Reasoning (SRF) framework that unifies generation and evaluation within a single architecture. The model's internal VLM provides intrinsic feedback by assessing whether the edited video logically satisfies the given instruction. This differential feedback refines the generator's reasoning behavior during training. Extensive experiments on RVE-Bench demonstrate that ReViSE significantly enhances editing accuracy and visual fidelity, achieving a 32% improvement in the Overall score on the reasoning-informed video editing subset over state-of-the-art methods.

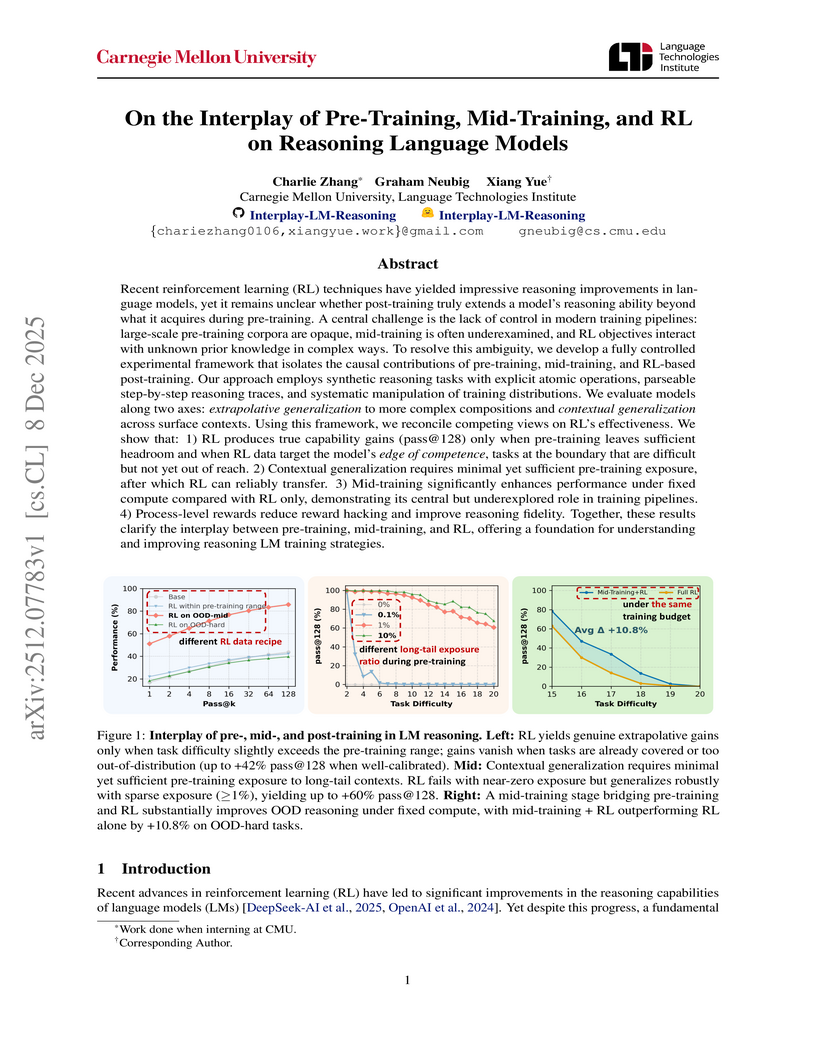

Recent reinforcement learning (RL) techniques have yielded impressive reasoning improvements in language models, yet it remains unclear whether post-training truly extends a model's reasoning ability beyond what it acquires during pre-training. A central challenge is the lack of control in modern training pipelines: large-scale pre-training corpora are opaque, mid-training is often underexamined, and RL objectives interact with unknown prior knowledge in complex ways. To resolve this ambiguity, we develop a fully controlled experimental framework that isolates the causal contributions of pre-training, mid-training, and RL-based post-training. Our approach employs synthetic reasoning tasks with explicit atomic operations, parseable step-by-step reasoning traces, and systematic manipulation of training distributions. We evaluate models along two axes: extrapolative generalization to more complex compositions and contextual generalization across surface contexts. Using this framework, we reconcile competing views on RL's effectiveness. We show that: 1) RL produces true capability gains (pass@128) only when pre-training leaves sufficient headroom and when RL data target the model's edge of competence, tasks at the boundary that are difficult but not yet out of reach. 2) Contextual generalization requires minimal yet sufficient pre-training exposure, after which RL can reliably transfer. 3) Mid-training significantly enhances performance under fixed compute compared with RL only, demonstrating its central but underexplored role in training pipelines. 4) Process-level rewards reduce reward hacking and improve reasoning fidelity. Together, these results clarify the interplay between pre-training, mid-training, and RL, offering a foundation for understanding and improving reasoning LM training strategies.
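The abstract above reports capability gains in terms of pass@128. As a minimal sketch of how a pass@k figure can be computed from sampled generations, the block below uses the widely used unbiased estimator 1 - C(n-c, k) / C(n, k). The abstract does not say which estimator the authors use, so the estimator choice and the function name `pass_at_k` are assumptions made for illustration.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k from n sampled solutions, c of which are correct.

    Illustrative helper (hypothetical name): returns the probability that
    at least one of k samples drawn without replacement from the n
    generations is correct.
    """
    if not 0 <= c <= n:
        raise ValueError("c must satisfy 0 <= c <= n")
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= n")
    # Fewer than k incorrect samples: every draw of k contains a correct one.
    if n - c < k:
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)
```

For example, `pass_at_k(256, 3, 128)` estimates pass@128 for a problem where 3 of 256 sampled solutions were correct.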

08 Dec 2025

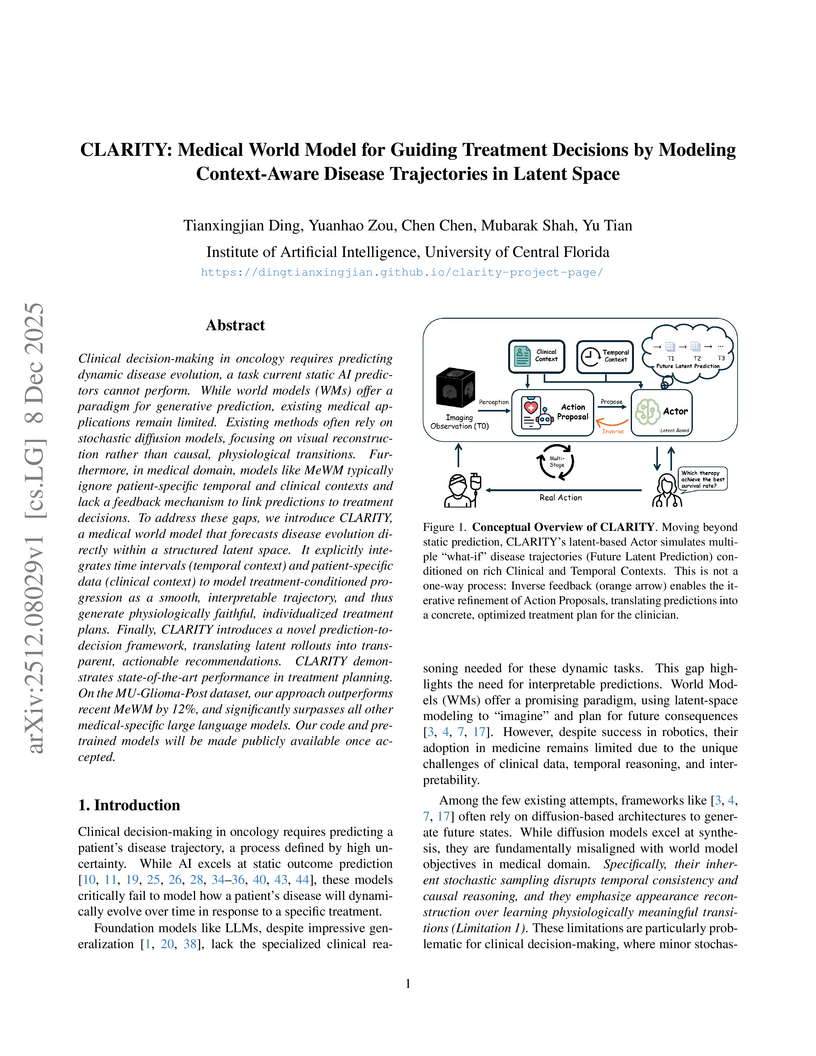

A medical world model named CLARITY, developed by researchers at the University of Central Florida, dynamically simulates treatment-conditioned disease trajectories and refines therapy decisions. It achieves state-of-the-art performance in treatment exploration with a 9.2% F1 improvement over leading baselines on glioma datasets and provides significantly improved survival risk stratification while being 9-15x more computationally efficient than diffusion-based alternatives.

Traffic accidents result in millions of injuries and fatalities globally, with a significant number occurring at intersections each year. Traffic Signal Control (TSC) is an effective strategy for enhancing safety at these urban junctures. Despite the growing popularity of Reinforcement Learning (RL) methods in optimizing TSC, these methods often prioritize driving efficiency over safety, thus failing to address the critical balance between these two aspects. Additionally, these methods usually lack interpretability. CounterFactual (CF) learning is a promising approach for various causal analysis fields. In this study, we introduce a novel framework to improve RL for safety aspects in TSC. This framework introduces a novel method based on CF learning to address the question: "What if, when an unsafe event occurs, we backtrack to perform alternative actions, and will this unsafe event still occur in the subsequent period?" To answer this question, we propose a new structural causal model to predict the result after executing different actions, and we propose a new CF module that integrates with additional "X" modules to promote safe RL practices. Our new algorithm, CFLight, which is derived from this framework, effectively tackles challenging safety events and significantly improves safety at intersections through a near-zero collision control strategy. Through extensive numerical experiments on both real-world and synthetic datasets, we demonstrate that CFLight reduces collisions and improves overall traffic performance compared to conventional RL methods and the recent safe RL model. Moreover, our method represents a generalized and safe framework for RL methods, opening possibilities for applications in other domains. The data and code are available on GitHub at this https URL.

09 Dec 2025

Learning about the causal structure of the world is a fundamental problem for human cognition. Causal models and especially causal learning have proved to be difficult for large pretrained models using standard techniques of deep learning. In contrast, cognitive scientists have applied advances in our formal understanding of causation in computer science, particularly within the Causal Bayes Net formalism, to understand human causal learning. In the very different tradition of reinforcement learning, researchers have described an intrinsic reward signal called "empowerment" which maximizes mutual information between actions and their outcomes. "Empowerment" may be an important bridge between classical Bayesian causal learning and reinforcement learning and may help to characterize causal learning in humans and enable it in machines. If an agent learns an accurate causal world model, it will necessarily increase its empowerment, and increasing empowerment will lead to a more accurate causal world model. Empowerment may also explain distinctive features of children's causal learning and provide a more tractable computational account of how that learning is possible. In an empirical study, we systematically test how children and adults use cues to empowerment to infer causal relations and design effective causal interventions.
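The abstract above describes empowerment in terms of mutual information between actions and their outcomes. The sketch below computes that mutual information, in bits, for a small discrete setting with a fixed action distribution. It is an illustration only: the function name `mutual_information` and the table layout are assumptions, and a full empowerment calculation would additionally maximize this quantity over the action distribution, which is omitted here.

```python
from math import log2


def mutual_information(p_action, p_outcome_given_action):
    """Mutual information I(A; O) in bits for discrete actions and outcomes.

    p_action[a] is the probability of taking action a.
    p_outcome_given_action[a][o] is the probability of outcome o after a.
    Both names are hypothetical and chosen for this sketch.
    """
    n_outcomes = len(p_outcome_given_action[0])
    # Marginal outcome distribution p(o) = sum_a p(a) * p(o | a).
    p_outcome = [
        sum(pa * row[o] for pa, row in zip(p_action, p_outcome_given_action))
        for o in range(n_outcomes)
    ]
    mi = 0.0
    for pa, row in zip(p_action, p_outcome_given_action):
        for o in range(n_outcomes):
            joint = pa * row[o]
            if joint > 0.0:
                mi += joint * log2(row[o] / p_outcome[o])
    return mi
```

With two equally likely actions that each deterministically produce a different outcome, the result is 1 bit; if the outcome distribution is the same whatever the action, the result is 0, meaning the actions give the agent no control over what happens.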

Extreme weather events are widely studied in fields such as agriculture, ecology, and meteorology. The spatio-temporal co-occurrence of extreme events can strengthen or weaken under changing climate conditions. In this paper, we propose a novel approach to model spatio-temporal extremes by integrating climate indices via a conditional variational autoencoder (cXVAE). A convolutional neural network (CNN) is embedded in the decoder to convolve climatological indices with the spatial dependence within the latent space, thereby allowing the decoder to be dependent on the climate variables. There are three main contributions here. First, we demonstrate through extensive simulations that the proposed conditional XVAE accurately emulates spatial fields and recovers spatially and temporally varying extremal dependence with very low computational cost post training. Second, we provide a simple, scalable approach to detecting condition-driven shifts and whether the dependence structure is invariant to the conditioning variable. Third, when dependence is found to be condition-sensitive, the conditional XVAE supports counterfactual experiments allowing intervention on the climate covariate and propagating the associated change through the learned decoder to quantify differences in joint tail risk, co-occurrence ranges, and return metrics. To demonstrate the practical utility and performance of the model in real-world scenarios, we apply our method to analyze the monthly maximum Fire Weather Index (FWI) over eastern Australia from 2014 to 2024 conditioned on the El Niño/Southern Oscillation (ENSO) index.

As artificial intelligence and machine learning tools become more accessible, and scientists face new obstacles to data collection (e.g., rising costs, declining survey response rates), researchers increasingly use predictions from pre-trained algorithms as substitutes for missing or unobserved data. Though appealing for financial and logistical reasons, using standard tools for inference can misrepresent the association between independent variables and the outcome of interest when the true, unobserved outcome is replaced by a predicted value. In this paper, we characterize the statistical challenges inherent to drawing inference with predicted data (IPD) and show that high predictive accuracy does not guarantee valid downstream inference. We show that all such failures reduce to statistical notions of (i) bias, when predictions systematically shift the estimand or distort relationships among variables, and (ii) variance, when uncertainty from the prediction model and the intrinsic variability of the true data are ignored. We then review recent methods for conducting IPD and discuss how this framework is deeply rooted in classical statistical theory. Finally, we comment on some open questions and interesting avenues for future work in this area, and end with some comments on how to use predicted data in scientific studies in a way that is both transparent and statistically principled.

28 Nov 2025

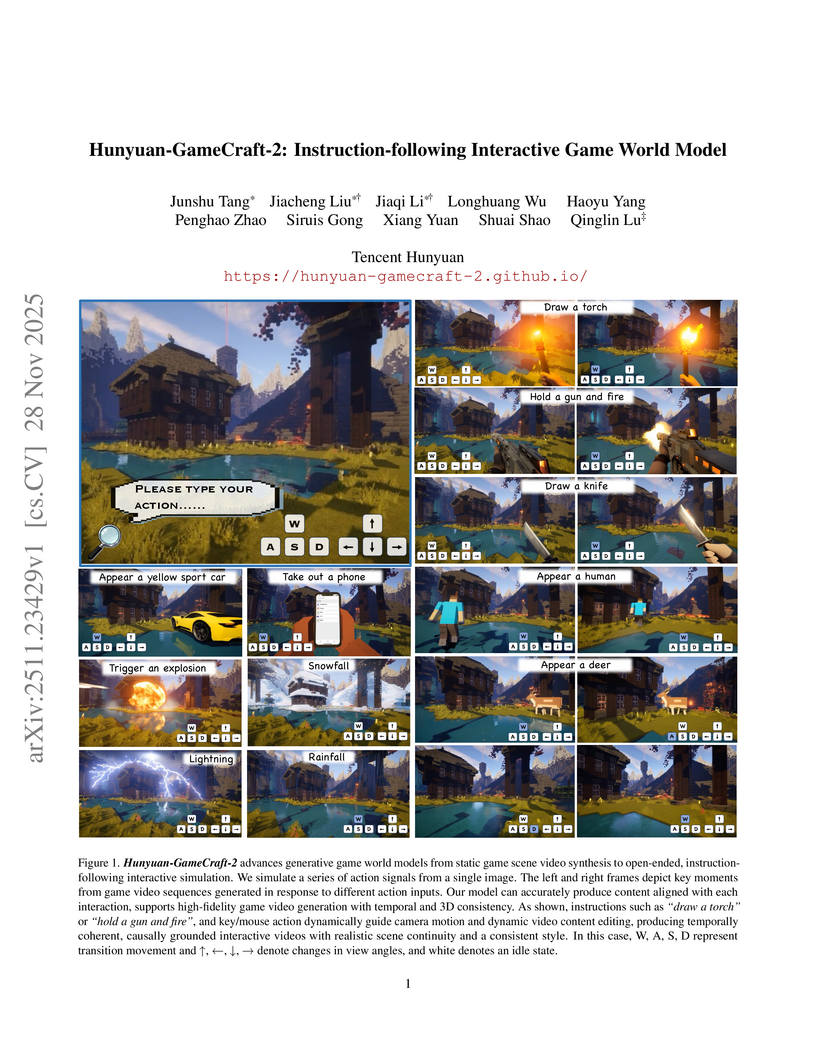

Tencent Hunyuan developed Hunyuan-GameCraft-2, an instruction-following interactive game world model that generates dynamic video content responsive to natural language and traditional game inputs. The model achieved high interaction trigger rates (e.g., 0.983 for Actor Actions) and superior physics correctness on a new InterBench evaluation, operating in real-time at 16 FPS.

Large language models for code (LLM4Code) have greatly improved developer productivity but also raise privacy concerns due to their reliance on open-source repositories containing abundant personally identifiable information (PII). Prior work shows that commercial models can reproduce sensitive PII, yet existing studies largely treat PII as a single category and overlook the heterogeneous risks among different types. We investigate whether distinct PII types vary in their likelihood of being learned and leaked by LLM4Code, and whether this relationship is causal. Our methodology includes building a dataset with diverse PII types, fine-tuning representative models of different scales, computing training dynamics on real PII data, and formulating a structural causal model to estimate the causal effect of learnability on leakage. Results show that leakage risks differ substantially across PII types and correlate with their training dynamics: easy-to-learn instances such as IP addresses exhibit higher leakage, while harder types such as keys and passwords leak less frequently. Ambiguous types show mixed behaviors. This work provides the first causal evidence that leakage risks are type-dependent and offers guidance for developing type-aware and learnability-aware defenses for LLM4Code.

08 Dec 2025

LLM-based agents are rapidly being plugged into expert decision-support, yet in messy, high-stakes settings they rarely make the team smarter: human-AI teams often underperform the best individual, experts oscillate between verification loops and over-reliance, and the promised complementarity does not materialise. We argue this is not just a matter of accuracy, but a fundamental gap in how we conceive AI assistance: expert decisions are made through collaborative cognitive processes where mental models, goals, and constraints are continually co-constructed, tested, and revised between human and AI. We propose Collaborative Causal Sensemaking (CCS) as a research agenda and organizing framework for decision-support agents: systems designed as partners in cognitive work, maintaining evolving models of how particular experts reason, helping articulate and revise goals, co-constructing and stress-testing causal hypotheses, and learning from the outcomes of joint decisions so that both human and agent improve over time. We sketch challenges around training ecologies that make collaborative thinking instrumentally valuable, representations and interaction protocols for co-authored models, and evaluation centred on trust and complementarity. These directions can reframe MAS research around agents that participate in collaborative sensemaking and act as AI teammates that think with their human partners.

We consider an adaptive experiment for treatment choice and design a minimax and Bayes optimal adaptive experiment with respect to regret. Given binary treatments, the experimenter's goal is to choose the treatment with the highest expected outcome through an adaptive experiment, in order to maximize welfare. We consider adaptive experiments that consist of two phases, the treatment allocation phase and the treatment choice phase. The experiment starts with the treatment allocation phase, where the experimenter allocates treatments to experimental subjects to gather observations. During this phase, the experimenter can adaptively update the allocation probabilities using the observations obtained in the experiment. After the allocation phase, the experimenter proceeds to the treatment choice phase, where one of the treatments is selected as the best. For this adaptive experimental procedure, we propose an adaptive experiment that splits the treatment allocation phase into two stages, where we first estimate the standard deviations and then allocate each treatment proportionally to its standard deviation. We show that this experiment, often referred to as Neyman allocation, is minimax and Bayes optimal in the sense that its regret upper bounds exactly match the lower bounds that we derive. To show this optimality, we derive minimax and Bayes lower bounds for the regret using change-of-measure arguments. Then, we evaluate the corresponding upper bounds using the central limit theorem and large deviation bounds.
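The two-stage procedure described above (estimate the standard deviations first, then allocate each treatment in proportion to its standard deviation) can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the authors' procedure: the function names, the use of the sample standard deviation from a pilot stage, and the rounding rule are all choices made for the example.

```python
import statistics


def neyman_shares(pilot_a, pilot_b):
    """Allocation shares proportional to estimated standard deviations.

    pilot_a and pilot_b are first-stage outcomes for the two treatments
    (hypothetical names); each needs at least two observations.
    """
    sd_a = statistics.stdev(pilot_a)
    sd_b = statistics.stdev(pilot_b)
    total = sd_a + sd_b
    if total == 0:
        # No observed variability: fall back to an even split.
        return 0.5, 0.5
    return sd_a / total, sd_b / total


def second_stage_counts(pilot_a, pilot_b, remaining):
    """Split the remaining subjects between treatments using the shares."""
    share_a, _ = neyman_shares(pilot_a, pilot_b)
    n_a = round(share_a * remaining)
    return n_a, remaining - n_a


def choose_treatment(outcomes_a, outcomes_b):
    """Treatment choice phase: pick the arm with the higher sample mean."""
    if statistics.mean(outcomes_a) >= statistics.mean(outcomes_b):
        return "a"
    return "b"
```

For instance, if the pilot stage suggests treatment b is three times as noisy as treatment a, `second_stage_counts` sends roughly three quarters of the remaining subjects to b, since the noisier arm needs more observations to estimate its mean equally well.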

09 Dec 2025

This paper introduces the Impact-Driven AI Framework (IDAIF), a novel architectural methodology that integrates Theory of Change (ToC) principles with modern artificial intelligence system design. As AI systems increasingly influence high-stakes domains including healthcare, finance, and public policy, the alignment problem--ensuring AI behavior corresponds with human values and intentions--has become critical. Current approaches predominantly optimize technical performance metrics while neglecting the sociotechnical dimensions of AI deployment. IDAIF addresses this gap by establishing a systematic mapping between ToC's five-stage model (Inputs-Activities-Outputs-Outcomes-Impact) and corresponding AI architectural layers (Data Layer-Pipeline Layer-Inference Layer-Agentic Layer-Normative Layer). Each layer incorporates rigorous theoretical foundations: multi-objective Pareto optimization for value alignment, hierarchical multi-agent orchestration for outcome achievement, causal directed acyclic graphs (DAGs) for hallucination mitigation, and adversarial debiasing with Reinforcement Learning from Human Feedback (RLHF) for fairness assurance. We provide formal mathematical formulations for each component and introduce an Assurance Layer that manages assumption failures through guardian architectures. Three case studies demonstrate IDAIF application across healthcare, cybersecurity, and software engineering domains. This framework represents a paradigm shift from model-centric to impact-centric AI development, providing engineers with concrete architectural patterns for building ethical, trustworthy, and socially beneficial AI systems.

Researchers from Shanghai Artificial Intelligence Laboratory developed Envision, a benchmark and evaluation metric to assess how well multimodal generative models understand and simulate dynamic, causally-linked events across multiple images. Their analysis revealed that current models universally struggle with spatial-temporal consistency and physical plausibility in generating these sequences, highlighting a core limitation in their comprehension of real-world dynamics.

This paper from the Knowledge Lab at the University of Chicago models how political elites might strategically shape public opinion when artificial intelligence significantly reduces the cost of persuasion. It finds that a single elite has incentives to polarize society for future policy flexibility, while competing elites create a nuanced dynamic between polarization and locking in public opinion to deter rivals, making the overall effect on polarization context-dependent.

Researchers identified a remarkably sparse subset of "H-Neurons" in large language models whose activation reliably predicts hallucinatory outputs and is causally linked to over-compliance behaviors. These hallucination-associated neural circuits are found to emerge primarily during the pre-training phase and remain largely stable through post-training alignment.

Recent advances in natural language processing have enabled the increasing use of text data in causal inference, particularly for adjusting confounding factors in treatment effect estimation. Although high-dimensional text can encode rich contextual information, it also poses unique challenges for causal identification and estimation. In particular, the positivity assumption, which requires sufficient treatment overlap across confounder values, is often violated at the observational level, when massive text is represented in feature spaces. Redundant or spurious textual features inflate dimensionality, producing extreme propensity scores, unstable weights, and inflated variance in effect estimates. We address these challenges with Confounding-Aware Token Rationalization (CATR), a framework that selects a sparse necessary subset of tokens using a residual-independence diagnostic designed to preserve confounding information sufficient for unconfoundedness. By discarding irrelevant texts while retaining key signals, CATR mitigates observational-level positivity violations and stabilizes downstream causal effect estimators. Experiments on synthetic data and a real-world study using the MIMIC-III database demonstrate that CATR yields more accurate, stable, and interpretable causal effect estimates than existing baselines.
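To make the link between extreme propensity scores and unstable weights concrete, the sketch below computes standard inverse-propensity weights and Kish's effective sample size. It illustrates the general problem the abstract describes, not CATR itself; the function names and toy inputs are assumptions introduced for the example.

```python
def ipw_weights(treatments, propensities):
    """Inverse-propensity weights: 1/e for treated units, 1/(1-e) for controls.

    treatments holds 0/1 indicators and propensities the estimated
    probabilities of treatment (both hypothetical inputs for this sketch).
    """
    weights = []
    for t, e in zip(treatments, propensities):
        if not 0.0 < e < 1.0:
            raise ValueError("propensity must lie strictly between 0 and 1")
        weights.append(1.0 / e if t == 1 else 1.0 / (1.0 - e))
    return weights


def effective_sample_size(weights):
    """Kish effective sample size: (sum of weights)^2 / sum of squared weights."""
    total = sum(weights)
    return total * total / sum(w * w for w in weights)
```

With balanced propensities of 0.5, two units keep an effective sample size of 2. If one treated unit instead has a propensity of 0.01, its weight jumps to 100 and the effective sample size falls to about 1, which is the kind of variance inflation that near-violations of positivity produce.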

Large language models (LLMs) can reliably distinguish grammatical from ungrammatical sentences, but how grammatical knowledge is represented within the models remains an open question. We investigate whether different syntactic phenomena recruit shared or distinct components in LLMs. Using a functional localization approach inspired by cognitive neuroscience, we identify the LLM units most responsive to 67 English syntactic phenomena in seven open-weight models. These units are consistently recruited across sentences containing the phenomena and causally support the models' syntactic performance. Critically, different types of syntactic agreement (e.g., subject-verb, anaphor, determiner-noun) recruit overlapping sets of units, suggesting that agreement constitutes a meaningful functional category for LLMs. This pattern holds in English, Russian, and Chinese; and further, in a cross-lingual analysis of 57 diverse languages, structurally more similar languages share more units for subject-verb agreement. Taken together, these findings reveal that syntactic agreement, a critical marker of syntactic dependencies, constitutes a meaningful category within LLMs' representational spaces.

Researchers from Technische Universität München and UC Berkeley introduce a causal inference framework to transition from descriptive "what-is" analyses to interventional "what-if" insights in human-factor analysis for post-occupancy evaluations. Applying this approach to a CBE Occupant Survey dataset, they reduced the analytical scope from 124 to 28 causally associated factors, revealing a causal hierarchy and identifying "satisfaction with glare and reflections on screens" as a primary causal driver for overall satisfaction, enabling more targeted interventions.
