AIP Labs

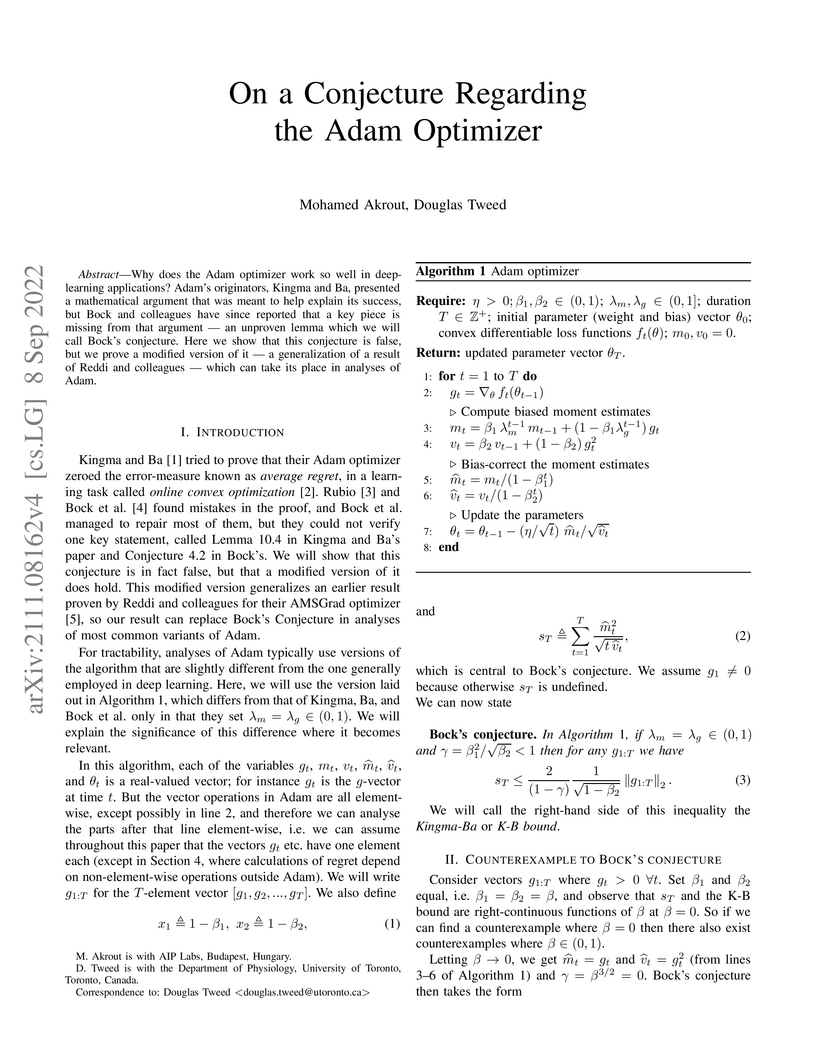

Why does the Adam optimizer work so well in deep-learning applications? Adam's originators, Kingma and Ba, presented a mathematical argument that was meant to help explain its success, but Bock and colleagues have since reported that a key piece is missing from that argument: an unproven lemma, which we will call Bock's conjecture. Here we show that this conjecture is false, but we prove a modified version of it, a generalization of a result of Reddi and colleagues, which can take its place in analyses of Adam.
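The update rule whose convergence analysis is at stake can be sketched as follows. This is a minimal pure-Python rendering of the standard Adam step from Kingma and Ba's paper, using their suggested default hyperparameters. The helper name `adam_step` and the toy quadratic objective are illustrative assumptions. The sketch is not taken from the work summarized above and says nothing about Bock's conjecture itself.

```python
import math

def adam_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a list of scalar parameters (hypothetical helper).

    t is the 1-based step count used for bias correction.
    Returns the new parameters and the updated moment estimates.
    """
    new_theta, new_m, new_v = [], [], []
    for p, g, mi, vi in zip(theta, grad, m, v):
        mi = beta1 * mi + (1 - beta1) * g        # exponential average of gradients
        vi = beta2 * vi + (1 - beta2) * g * g    # exponential average of squared gradients
        m_hat = mi / (1 - beta1 ** t)            # bias-corrected first moment
        v_hat = vi / (1 - beta2 ** t)            # bias-corrected second moment
        new_theta.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
        new_m.append(mi)
        new_v.append(vi)
    return new_theta, new_m, new_v

# Toy usage: minimize f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
x, m, v = [0.0], [0.0], [0.0]
for t in range(1, 5001):
    g = [2 * (x[0] - 3.0)]
    x, m, v = adam_step(x, g, m, v, t, lr=0.01)
print(round(x[0], 3))
```

Because of the bias correction, the very first step has magnitude close to `lr` regardless of the gradient's scale. Per-coordinate step sizes of this kind are central to the convergence arguments discussed in the abstract.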

Despite continued advances in recent years, deep neural networks still rely on large amounts of training data to avoid overfitting. However, labeled training data for real-world applications such as healthcare is limited and difficult to access, given longstanding privacy concerns and strict data-sharing policies. Existing data augmentation techniques, which manipulate image datasets in the pixel or feature space, are one effective way to increase the quantity and diversity of training data. Here, we aim to advance augmentation techniques by building on the emerging success of text-to-image diffusion probabilistic models to augment the training samples of our macroscopic skin disease dataset. We do so by enabling fine-grained control of the image generation process via input text prompts. We demonstrate that this generative data augmentation approach keeps the classification accuracy of the visual classifier at a similar level, even when the classifier is trained on a fully synthetic skin disease dataset. In line with recent applications of generative models, our study suggests that diffusion models are indeed effective at generating high-quality skin images that do not sacrifice classifier performance, and that they can improve the augmentation of training datasets after curation.
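Classic pixel-space augmentation, the baseline this abstract builds on, can be sketched in a few lines. This minimal illustration applies label-preserving horizontal flips and brightness jitter to grayscale images. It assumes images are stored as nested lists of floats in [0, 1], and the helper names `augment_pixels` and `expand_dataset` and the label `"class_a"` are hypothetical. It is not the diffusion-based, prompt-controlled method the authors propose.

```python
import random

def augment_pixels(image, rng, flip_prob=0.5, max_brightness_shift=0.2):
    """Return an augmented copy of a grayscale image (list of rows, values in [0, 1]).

    Applies a random horizontal flip and a random brightness scaling,
    clipping results back into [0, 1]. The input image is not modified.
    """
    out = [row[:] for row in image]
    if rng.random() < flip_prob:
        out = [row[::-1] for row in out]  # horizontal (left-right) flip
    scale = 1.0 + rng.uniform(-max_brightness_shift, max_brightness_shift)
    return [[min(1.0, max(0.0, px * scale)) for px in row] for row in out]

def expand_dataset(images, labels, copies, seed=0):
    """Keep the originals and add `copies` augmented variants of every labelled image."""
    rng = random.Random(seed)
    aug_images, aug_labels = list(images), list(labels)
    for img, lab in zip(images, labels):
        for _ in range(copies):
            aug_images.append(augment_pixels(img, rng))
            aug_labels.append(lab)  # pixel-level edits are assumed not to change the class
    return aug_images, aug_labels

# Toy usage with a single 2x2 image.
images = [[[0.1, 0.5], [0.9, 1.0]]]
labels = ["class_a"]
aug_x, aug_y = expand_dataset(images, labels, copies=3)
print(len(aug_x), aug_y)
```

Transformations like these can only recombine what is already in the data. That limitation is what motivates generating new samples with a diffusion model instead.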

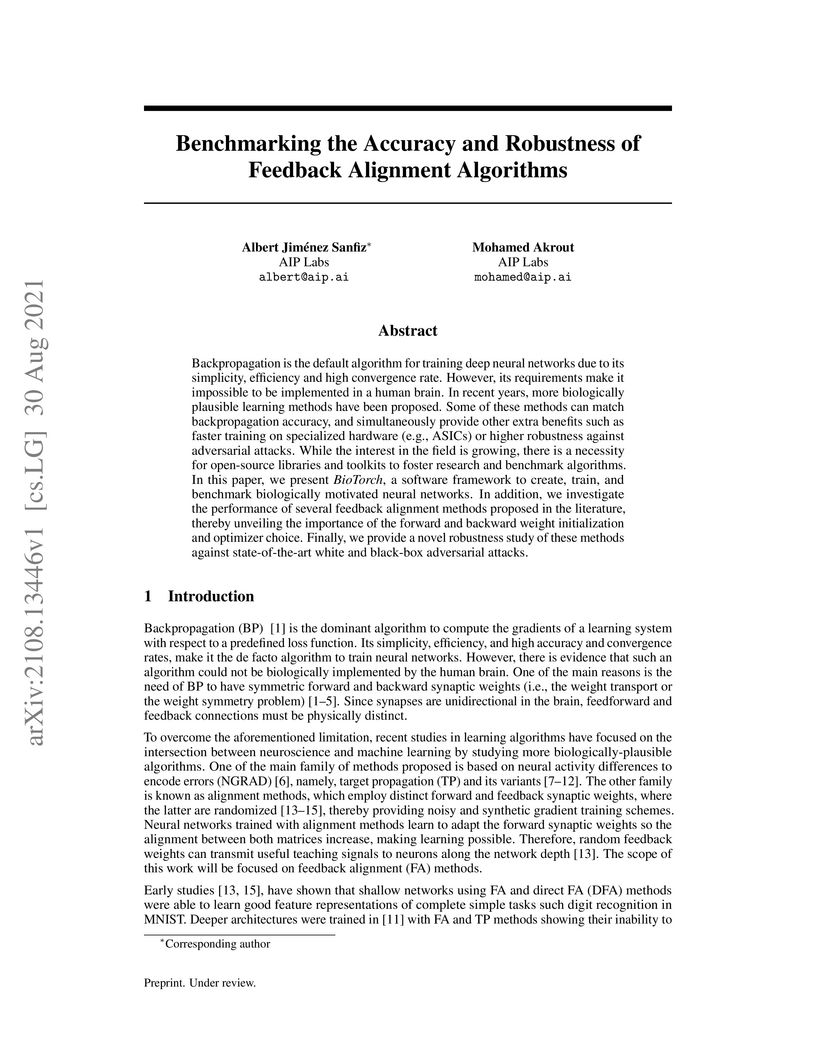

Backpropagation is the default algorithm for training deep neural networks due to its simplicity, efficiency, and high convergence rate. However, its requirements make it impossible to implement in the human brain. In recent years, more biologically plausible learning methods have been proposed. Some of these methods can match the accuracy of backpropagation while providing extra benefits such as faster training on specialized hardware (e.g., ASICs) or higher robustness against adversarial attacks. While interest in the field is growing, open-source libraries and toolkits are needed to foster research and benchmark algorithms. In this paper, we present BioTorch, a software framework to create, train, and benchmark biologically motivated neural networks. In addition, we investigate the performance of several feedback alignment methods proposed in the literature, revealing the importance of forward and backward weight initialization and of the choice of optimizer. Finally, we provide a novel robustness study of these methods against state-of-the-art white-box and black-box adversarial attacks.
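The core idea behind the feedback alignment family that BioTorch benchmarks can be sketched in NumPy. This is a generic two-layer illustration of the original feedback alignment rule of Lillicrap and colleagues. In that rule, the backward pass sends the output error through a fixed random matrix `B` instead of the transpose of the forward weights. The function `train_two_layer`, the toy linear regression target, and all hyperparameters are hypothetical choices for illustration, and this is not BioTorch's API.

```python
import numpy as np

def train_two_layer(X, T, hidden=32, lr=0.02, steps=3000, feedback_alignment=True, seed=0):
    """Train a tanh -> linear network on squared error; return the loss per step.

    With feedback_alignment=True the hidden-layer error signal uses a fixed random
    matrix B; with False it uses W2.T, which is ordinary backpropagation.
    """
    rng = np.random.default_rng(seed)
    n, d_in = X.shape
    d_out = T.shape[1]
    W1 = rng.normal(0, 1 / np.sqrt(d_in), size=(hidden, d_in))
    W2 = rng.normal(0, 1 / np.sqrt(hidden), size=(d_out, hidden))
    B = rng.normal(0, 1 / np.sqrt(hidden), size=(hidden, d_out))  # fixed, never trained
    losses = []
    for _ in range(steps):
        H = np.tanh(X @ W1.T)  # hidden activations, shape (n, hidden)
        Y = H @ W2.T           # outputs, shape (n, d_out)
        E = Y - T              # output error
        losses.append(0.5 * np.mean(np.sum(E ** 2, axis=1)))
        feedback = B if feedback_alignment else W2.T     # shape (hidden, d_out)
        delta_H = (E @ feedback.T) * (1 - H ** 2)         # error sent back through tanh'
        W2 -= lr * (E.T @ H) / n                          # output layer: true gradient
        W1 -= lr * (delta_H.T @ X) / n                    # hidden layer: FA or BP signal
    return losses

# Toy usage: learn a random linear map with both learning rules.
data_rng = np.random.default_rng(1)
X = data_rng.normal(size=(256, 4))
A = data_rng.normal(size=(2, 4))
T = X @ A.T
fa_losses = train_two_layer(X, T, feedback_alignment=True)
bp_losses = train_two_layer(X, T, feedback_alignment=False)
print(fa_losses[0], fa_losses[-1], bp_losses[-1])
```

Both runs share the same initialization seed, so comparing them isolates the effect of the backward weights. Varying how `B` is initialized is one simple way to probe the sensitivity to backward weight initialization that the abstract highlights.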

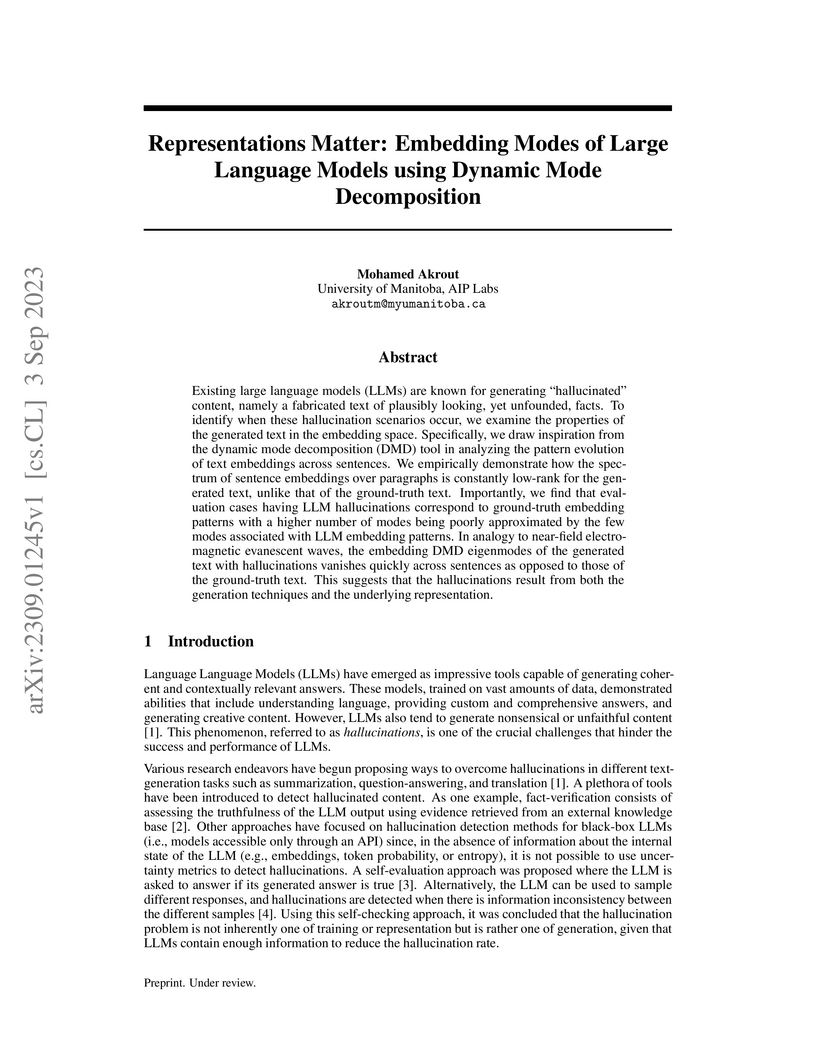

Existing large language models (LLMs) are known for generating "hallucinated" content, namely fabricated text of plausible-looking yet unfounded facts. To identify when these hallucination scenarios occur, we examine the properties of the generated text in the embedding space. Specifically, we draw inspiration from the dynamic mode decomposition (DMD) tool in analyzing the pattern evolution of text embeddings across sentences. We empirically demonstrate that the spectrum of sentence embeddings over paragraphs is consistently low-rank for the generated text, unlike that of the ground-truth text. Importantly, we find that evaluation cases with LLM hallucinations correspond to ground-truth embedding patterns that have a higher number of modes, which are poorly approximated by the few modes associated with LLM embedding patterns. In analogy to near-field electromagnetic evanescent waves, the embedding DMD eigenmodes of generated text with hallucinations vanish quickly across sentences, as opposed to those of the ground-truth text. This suggests that hallucinations result from both the generation techniques and the underlying representation.
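The DMD analysis described above can be sketched with NumPy using the standard "exact DMD" recipe applied to a sequence of sentence embeddings. This is a minimal illustration under several assumptions. The embeddings are given as a matrix with one row per sentence, consecutive sentences are treated as successive time steps, and the truncation rank `rank` is chosen by hand. It is not the authors' code, and the function name `dmd` is hypothetical.

```python
import numpy as np

def dmd(embeddings, rank):
    """Exact dynamic mode decomposition of a sequence of embedding vectors.

    embeddings: array of shape (num_sentences, dim); row k embeds sentence k.
    rank: number of singular values kept (truncation rank r).
    Returns (eigenvalues, modes); modes has shape (dim, rank).
    """
    snapshots = np.asarray(embeddings, dtype=float).T  # columns are sentences in order
    X, Y = snapshots[:, :-1], snapshots[:, 1:]          # Y is X advanced by one sentence
    U, s, Vh = np.linalg.svd(X, full_matrices=False)
    U_r, s_r, V_r = U[:, :rank], s[:rank], Vh[:rank].conj().T
    # Reduced operator: the best-fit linear map Y ~= A X projected onto the top-r basis.
    A_tilde = U_r.conj().T @ Y @ V_r / s_r
    eigvals, W = np.linalg.eig(A_tilde)
    modes = (Y @ V_r / s_r) @ W  # exact DMD modes in the embedding space
    return eigvals, modes

# Synthetic check: three components that shrink by 0.9, 0.5 and 0.2 per sentence.
emb = np.array([[0.9 ** k, 0.5 ** k, 0.2 ** k] for k in range(10)])
eigvals, modes = dmd(emb, rank=3)
print(np.sort(np.abs(eigvals)))
```

In this kind of analysis, an eigenvalue whose magnitude is well below 1 marks a mode that decays quickly from one sentence to the next. The abstract describes this behavior for the eigenmodes of hallucinated text. The number of modes needed to reconstruct the embeddings well is one way to read off the "low-rank" behavior it mentions.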

Variability in medical image segmentation, arising from annotator preferences, expertise, and choice of tools, has been well documented. While most multi-annotator segmentation approaches focus on modeling annotator-specific preferences, they require annotator-segmentation correspondence. In this work, we introduce the problem of segmentation style discovery and propose StyleSeg, a segmentation method that learns plausible, diverse, and semantically consistent segmentation styles from a corpus of image-mask pairs without any knowledge of annotator correspondence. StyleSeg consistently outperforms competing methods on four publicly available skin lesion segmentation (SLS) datasets. We also curate ISIC-MultiAnnot, the largest multi-annotator SLS dataset with annotator correspondence, and our results show strong alignment between the predicted styles and annotator preferences, as measured by our newly proposed measure AS2. The code and the dataset are available at this https URL
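The inter-annotator variability that motivates this work is commonly quantified with pairwise overlap scores between masks of the same image. The sketch below uses the standard Dice coefficient on binary masks as an assumed, generic measure. It is not the AS2 measure proposed in the paper, whose definition is not given here. The helper names `dice` and `mean_pairwise_dice` are hypothetical.

```python
from itertools import combinations

def dice(mask_a, mask_b):
    """Dice similarity between two equal-sized binary masks given as nested lists of 0/1."""
    a = [px for row in mask_a for px in row]
    b = [px for row in mask_b for px in row]
    intersection = sum(1 for x, y in zip(a, b) if x and y)
    total = sum(a) + sum(b)
    return 1.0 if total == 0 else 2 * intersection / total  # two empty masks agree fully

def mean_pairwise_dice(masks):
    """Average Dice over all pairs of annotations of one image (1.0 = perfect agreement)."""
    pairs = list(combinations(masks, 2))
    return sum(dice(a, b) for a, b in pairs) / len(pairs)

# Toy usage: three annotations of a 2x2 image, two of which agree exactly.
m1 = [[1, 1], [0, 0]]
m2 = [[1, 0], [0, 0]]
print(dice(m1, m2), mean_pairwise_dice([m1, m2, m1]))
```

A low average agreement across annotators of the same image is the kind of variability that style-aware methods try to model rather than average away.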
