CERMICS

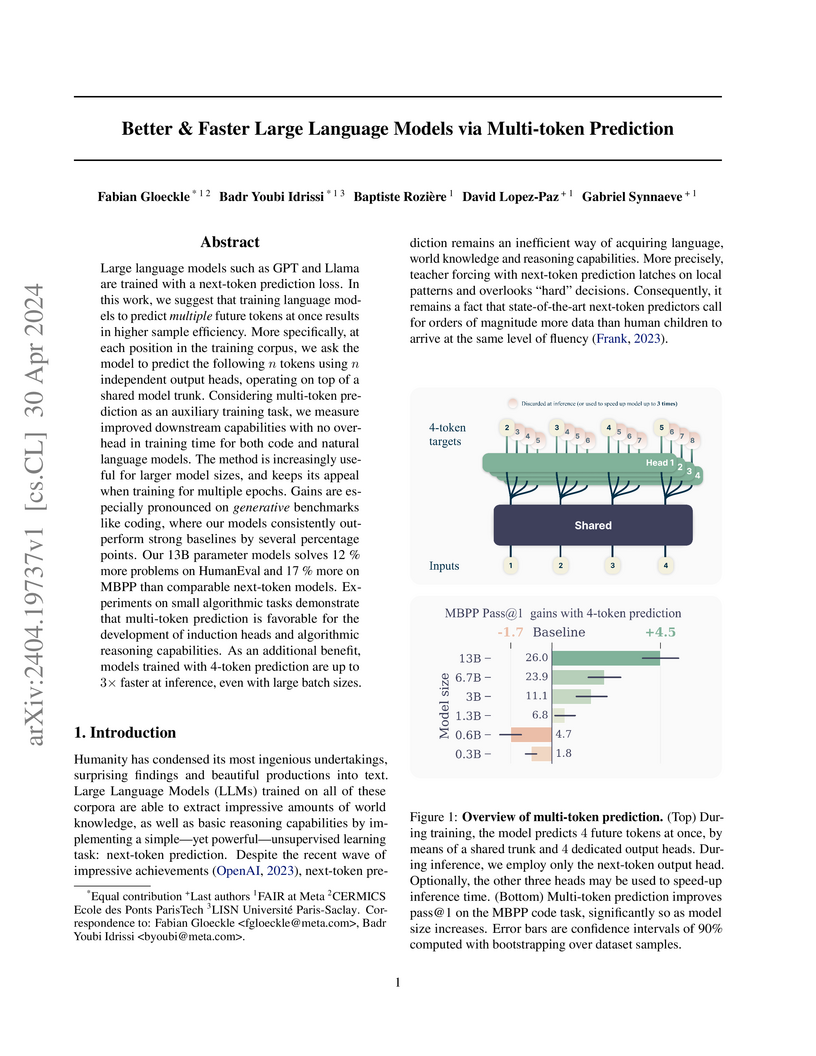

Researchers at FAIR at Meta and affiliated institutions introduced a method to train large language models by predicting multiple future tokens simultaneously, rather than just the immediate next one. This approach improves model performance, especially in code generation tasks with gains up to +7.5 percentage points on HumanEval, enhances reasoning capabilities, and accelerates inference speed by up to 3 times without requiring core architectural changes.

18 Sep 2024

We consider the electrons of a molecule in the adiabatic time-dependent density functional theory approximation. We establish the well-posedness of the time evolution and its linear response close to a non-degenerate ground state, and prove the appearance of resonances at relevant frequencies. The main mathematical difficulty is due to the structure of the linearized equations, which are not complex-linear. We bypass this difficulty by reformulating the linearized problem as a real Hamiltonian system, whose stability is ensured by the second-order optimality conditions on the energy.

16 Jul 2025

The definition of metastable states is a ubiquitous task in the design and analysis of molecular simulation, and is a crucial input in a variety of acceleration methods for the sampling of long configurational trajectories.

Although standard definitions based on local energy minimization procedures can sometimes be used, these definitions are typically suboptimal, or entirely inadequate when entropic effects are significant, or when the lowest energy barriers are quickly overcome by thermal fluctuations.

In this work, we propose an approach to the definition of metastable states, based on the shape-optimization of a local separation of timescale metric directly linked to the efficiency of a class of accelerated molecular dynamics algorithms.

To realize this approach, we derive analytic expressions for shape-variations of Dirichlet eigenvalues for a class of operators associated with reversible elliptic diffusions, and use them to construct a local ascent algorithm, explicitly treating the case of multiple eigenvalues.

We propose two strategies to make our method tractable in high-dimensional systems: one based on dynamical coarse-graining, the other on recently obtained low-temperature shape-sensitive spectral asymptotics.

We validate our method on a benchmark biomolecular system, showcasing a significant improvement over conventional definitions of metastable states.

02 Oct 2023

This article provides the first mathematical analysis of the Density Matrix Embedding Theory (DMET) method. We prove that, under certain assumptions, (i) the exact ground-state density matrix is a fixed-point of the DMET map for non-interacting systems, (ii) there exists a unique physical solution in the weakly-interacting regime, and (iii) DMET is exact at first order in the coupling parameter. We provide numerical simulations to support our results and comment on the physical meaning of the assumptions under which they hold true. We show that the violation of these assumptions may yield multiple solutions of the DMET equations. We moreover introduce and discuss a specific N-representability problem inherent to DMET.

30 Jun 2025

In this paper, we first show continuity of both Wasserstein projections in the convex order when they are unique. We also check that, in arbitrary dimension d, the quadratic Wasserstein projection of a probability measure μ on the set of probability measures dominated by ν in the convex order is non-expansive in μ and Hölder continuous with exponent 1/2 in ν. When μ and ν are Gaussian, we check that this projection is Gaussian and also consider the quadratic Wasserstein projection on the set of probability measures ν dominating μ in the convex order. In the case when d≥2 and ν is not absolutely continuous with respect to the Lebesgue measure, where uniqueness of the latter projection was not known, we check that there is always a unique Gaussian projection and characterize when non-Gaussian projections with the same covariance matrix also exist. Still for Gaussian distributions, we characterize the covariance matrices of the two projections. It turns out that there exists an orthogonal transformation of space under which the computations are similar to the easy case when the covariance matrices of μ and ν are diagonal.

07 Feb 2025

Overdamped Langevin dynamics are reversible stochastic differential equations which are commonly used to sample probability measures in high-dimensional spaces, such as the ones appearing in computational statistical physics and Bayesian inference. By varying the diffusion coefficient, there are in fact infinitely many overdamped Langevin dynamics which are reversible with respect to the target probability measure at hand. This suggests optimizing the diffusion coefficient in order to increase the convergence rate of the dynamics, as measured by the spectral gap of the generator associated with the stochastic differential equation. We analytically study this problem here, obtaining in particular necessary conditions on the optimal diffusion coefficient. We also derive an explicit expression of the optimal diffusion in some appropriate homogenized limit. Numerical results, both relying on discretizations of the spectral gap problem and Monte Carlo simulations of the stochastic dynamics, demonstrate the increased quality of the sampling arising from an appropriate choice of the diffusion coefficient.
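To make the idea of a position-dependent diffusion coefficient concrete, here is a minimal one-dimensional sketch, not the method of the paper. It assumes the standard form dX = (−D(X)V′(X) + D′(X)) dt + √(2D(X)) dW, which is reversible with respect to the density proportional to exp(−V), and discretizes it with the Euler-Maruyama scheme. The potential V(x) = x²/2, the diffusion D(x) = 1 + 0.5 sin(x), and all function names are illustrative assumptions.

```python
import math
import numpy as np

def grad_potential(x):
    # Assumed toy potential V(x) = x^2 / 2, so the target is a standard Gaussian.
    return x

def diffusion(x):
    # Assumed (hypothetical) diffusion coefficient, bounded between 0.5 and 1.5.
    return 1.0 + 0.5 * math.sin(x)

def diffusion_prime(x):
    # Derivative of the diffusion coefficient above.
    return 0.5 * math.cos(x)

def simulate(n_steps=100_000, dt=0.01, seed=0):
    """Euler-Maruyama discretization of the 1D overdamped Langevin dynamics
    with multiplicative noise; returns the whole trajectory."""
    rng = np.random.default_rng(seed)
    noise = rng.standard_normal(n_steps)
    traj = np.empty(n_steps)
    x = 0.0
    for k in range(n_steps):
        d = diffusion(x)
        # The D'(x) correction keeps exp(-V) invariant for any smooth positive D.
        drift = -d * grad_potential(x) + diffusion_prime(x)
        x = x + drift * dt + math.sqrt(2.0 * d * dt) * noise[k]
        traj[k] = x
    return traj
```

Whatever positive D is chosen, the long-time empirical mean and variance of the trajectory should stay close to those of the target (0 and 1 here), up to time-step bias and statistical error; only the speed of convergence changes with D.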

The purpose of this paper is to revisit the Bianchi identities existing for the Riemann and Weyl tensors in the combined framework of the formal theory of systems of partial differential equations (Spencer cohomology, differential systems, formal integrability) and Algebraic Analysis (homological algebra, differential modules, duality). In particular, we prove that the n²(n² − 1)(n − 2)/24 generating Bianchi identities for the Riemann tensor are first order and can be easily described by means of the Spencer cohomology of the first order Killing symbol in arbitrary dimension n ≥ 2. Similarly, the n(n² − 1)(n + 2)(n − 4)/24 generating Bianchi identities for the Weyl tensor are first order and can be easily described by means of the Spencer cohomology of the first order conformal Killing symbol in arbitrary dimension n ≥ 5. As a most surprising result, the 9 generating Bianchi identities for the Weyl tensor are of second order in dimension n = 4 while the analogue of the Weyl tensor has 5 components of third order in the metric with 3 first order generating Bianchi identities in dimension n = 3. The above results, which could not be obtained otherwise, are valid for any non-degenerate metric of constant Riemannian curvature and do not depend on any conformal factor. They are checked in an Appendix produced by Alban Quadrat (INRIA, Lille) by means of computer algebra. We finally explain why the work of Lanczos and followers is not coherent with these results and must therefore be also revisited.

We analyze the regularity of the optimal exercise boundary for the American Put option when the underlying asset pays a discrete dividend at a known time t_d during the lifetime of the option. The ex-dividend asset price process is assumed to follow Black-Scholes dynamics and the dividend amount is a deterministic function of the ex-dividend asset price just before the dividend date. The solution to the associated optimal stopping problem can be characterised in terms of an optimal exercise boundary which, in contrast to the case when there are no dividends, may no longer be monotone. In this paper we prove that when the dividend function is positive and concave, then the boundary is non-increasing in a left-hand neighbourhood of t_d, and tends to 0 as time tends to t_d− with a speed that we can characterize. When the dividend function is linear in a neighbourhood of zero, then we show continuity of the exercise boundary and a high contact principle in the left-hand neighbourhood of t_d. When it is globally linear, then right-continuity of the boundary and the high contact principle are proved to hold globally. Finally, we show how all the previous results can be extended to multiple dividend payment dates in that case.

30 Jun 2025

In this note, we give a simple derivation of the formula obtained in Dowson and Landau (1982), Olkin and Pukelsheim (1982) and Givens and Shortt (1984) for the quadratic Wasserstein distance between two Gaussian distributions on ℝ^d with respective covariance matrices Σ_μ and Σ_ν. This derivation relies on the existence of an orthogonal matrix O such that O∗Σ_μO and O∗Σ_νO share the same correlation matrix and on the simplicity of optimal couplings in the case with the same correlation matrix and therefore the same copula.
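For readers who want to evaluate the formula numerically, the sketch below uses the classical closed form W₂² = |m₁ − m₂|² + tr(Σ₁ + Σ₂ − 2(Σ₁^{1/2} Σ₂ Σ₁^{1/2})^{1/2}) for Gaussians with means m₁, m₂ and covariances Σ₁, Σ₂. It does not reproduce the derivation of the note; the function names `psd_sqrt` and `gaussian_w2_squared` are illustrative, and the matrix square root is computed with an eigendecomposition under the assumption that the inputs are symmetric positive semidefinite.

```python
import numpy as np

def psd_sqrt(a):
    """Symmetric square root of a symmetric positive semidefinite matrix."""
    a = 0.5 * (a + a.T)                 # remove round-off asymmetry
    w, v = np.linalg.eigh(a)
    w = np.clip(w, 0.0, None)           # clip tiny negative eigenvalues
    return (v * np.sqrt(w)) @ v.T

def gaussian_w2_squared(m1, s1, m2, s2):
    """Squared quadratic Wasserstein distance between N(m1, s1) and N(m2, s2)."""
    m1, m2 = np.asarray(m1, float), np.asarray(m2, float)
    s1, s2 = np.asarray(s1, float), np.asarray(s2, float)
    root1 = psd_sqrt(s1)
    cross = psd_sqrt(root1 @ s2 @ root1)
    return float(np.sum((m1 - m2) ** 2) + np.trace(s1 + s2 - 2.0 * cross))
```

When the two covariance matrices are diagonal in the same basis, the trace term reduces to the sum of squared differences of the standard deviations, which gives a quick sanity check.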

07 Jan 2015

This note presents an elementary and direct proof for the convexity of the Choquet integral when the corresponding set function is submodular.
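As a small numerical illustration of the statement, and not of the proof in the note, the sketch below evaluates the discrete Choquet integral in its telescoping form (also known as the Lovász extension) and measures midpoint convexity. The set function v(S) = √|S|, which is submodular because it is a concave function of the cardinality, and the helper names are assumptions chosen for the example.

```python
import math
import numpy as np

def sqrt_cardinality(subset):
    # Assumed example of a submodular set function with v(empty set) = 0.
    return math.sqrt(len(subset))

def choquet_integral(x, v):
    """Discrete Choquet integral of the vector x with respect to the set
    function v: sort coordinates in decreasing order and weight each one
    by the marginal gain of adding its index to the set of larger ones."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(-x)
    total, previous, chosen = 0.0, 0.0, []
    for i in order:
        chosen.append(int(i))
        current = v(frozenset(chosen))
        total += x[i] * (current - previous)
        previous = current
    return total

def midpoint_gap(x, y, v):
    """(f(x) + f(y)) / 2 - f((x + y) / 2); nonnegative when f is convex."""
    x, y = np.asarray(x, float), np.asarray(y, float)
    mid = choquet_integral(0.5 * (x + y), v)
    return 0.5 * (choquet_integral(x, v) + choquet_integral(y, v)) - mid
```

For a submodular v the gap returned by `midpoint_gap` should never be negative beyond round-off, whereas a supermodular choice such as v(S) = |S|² can produce negative gaps.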

12 Apr 2023

We provide a formal derivation of a reduced model for twisted bilayer graphene (TBG) from Density Functional Theory. Our derivation is based on a variational approximation of the TBG Kohn-Sham Hamiltonian and asymptotic limit techniques. In contrast with other approaches, it does not require the introduction of an intermediate tight-binding model. The so-obtained model is similar to the Bistritzer-MacDonald (BM) model but contains additional terms. Its parameters can be easily computed from Kohn-Sham calculations on single-layer graphene and untwisted bilayer graphene with different stackings. It allows one in particular to estimate the parameters w_AA and w_AB of the BM model from first principles. The resulting numerical values, namely w_AA = w_AB ≃ 126 meV for the experimental interlayer mean distance, are in good agreement with the empirical values w_AA = w_AB = 110 meV obtained by fitting to experimental data. We also show that if the BM parameters are set to w_AA = w_AB ≃ 126 meV, the BM model is an accurate approximation of our reduced model.

13 Jun 2017

This paper aims to revisit the mathematical foundations of both General Relativity and Electromagnetism after one century, in the light of the formal theory of systems of partial differential equations and Lie pseudogroups (D.C. Spencer, 1970) or Algebraic Analysis, namely a mixture of differential geometry and homological algebra (M. Kashiwara, 1970). Among the new results obtained, we may quote: 1) In dimension 4 only, the 9 Bianchi identities that must be satisfied by the 10 components of the Weyl tensor are described by a second order operator and have thus nothing to do with the 20 first order Bianchi identities for the 20 components of the Riemann tensor. This result, not known after one century, has been recently confirmed by A. Quadrat (INRIA) using new computer algebra packages. 2) The Ricci tensor R is a section of the Ricci bundle of symmetric covariant 2-tensors which is the kernel of the canonical projection of the Riemann bundle onto the Weyl bundle, induced by the canonical inclusion of the classical Killing system (Poincaré group) into the conformal Killing system (Conformal group). It has only to do with the second order jets (elations) of the conformal Killing system because any 1-form with value in the bundle of elations can be decomposed in the direct sum (R,F) where the electromagnetic field F is a section of the vector bundle of skew-symmetric covariant 2-tensors. It follows therefore that electromagnetism and gravitation have only to do with second order jets. 3) The 10 linearized second order Einstein equations are parametrizing the 4 first order Cauchy stress equations but cannot be parametrized themselves. As a byproduct of this negative result, these 4 Cauchy stress equations have nothing to do with the 4 divergence-type equations usually obtained from the 20 Bianchi identities by contraction of indices.

22 Aug 2022

Wasserstein projections in the convex order were first considered in the framework of weak optimal transport, and found application in various problems such as concentration inequalities and martingale optimal transport. In dimension one, it is well-known that the set of probability measures with a given mean is a lattice w.r.t. the convex order. Our main result is that, contrary to the minimum and maximum in the convex order, the Wasserstein projections are Lipschitz continuous w.r.t. the Wasserstein distance in dimension one. Moreover, we provide examples that show sharpness of the obtained bounds for the 1-Wasserstein distance.

31 Jul 2020

Feynman-Kac semigroups appear in various areas of mathematics: non-linear filtering, large deviations theory, spectral analysis of Schrödinger operators among others. Their long time behavior provides important information, for example in terms of ground state energy of Schrödinger operators, or scaled cumulant generating function in large deviations theory. In this paper, we propose a simple and natural extension of the stability of Markov chains for these non-linear evolutions. As other classical ergodicity results, it relies on two assumptions: a Lyapunov condition that induces some compactness, and a minorization condition ensuring some mixing. Illustrative examples are provided, where the stability of the non-linear semigroup arises either from the underlying dynamics or from the Feynman-Kac weight function. We also use our technique to provide uniform in the time step convergence estimates for discretizations of stochastic differential equations.

This paper focuses on an extension of the Limit Order Book (LOB) model with general shape introduced by Alfonsi, Fruth and Schied. Here, the additional feature allows a time-varying LOB depth. We solve the optimal execution problem in this framework for both discrete and continuous time strategies. This gives in particular sufficient conditions to exclude Price Manipulations in the sense of Huberman and Stanzl or Transaction-Triggered Price Manipulations (see Alfonsi, Schied and Slynko). These conditions give interesting qualitative insights on how market makers may create or not price manipulations.

25 Mar 2024

We prove an ergodic theorem for Markov chains indexed by the Ulam-Harris-Neveu tree over large subsets with arbitrary shape under two assumptions: with high probability, two vertices in the large subset are far from each other and have their common ancestor close to the root. The assumption on the common ancestor can be replaced by some regularity assumption on the Markov transition kernel. We verify that those assumptions are satisfied for some usual trees. Finally, with Markov-Chain Monte-Carlo considerations in mind, we prove when the underlying Markov chain is stationary and reversible that the Markov chain, that is the line graph, yields minimal variance for the empirical average estimator among trees with a given number of nodes.

06 Mar 2023

Transport coefficients, such as the mobility, thermal conductivity and shear viscosity, are quantities of prime interest in statistical physics. At the macroscopic level, transport coefficients relate an external forcing of magnitude η, with η≪1, acting on the system to an average response expressed through some steady-state flux. In practice, steady-state averages involved in the linear response are computed as time averages over a realization of some stochastic differential equation. Variance reduction techniques are of paramount interest in this context, as the linear response is scaled by a factor of 1/η, leading to large statistical error. One way to limit the increase in the variance is to allow for larger values of η by increasing the range of values of the forcing for which the nonlinear part of the response is sufficiently small. In theory, one can add an extra forcing to the physical perturbation of the system, called synthetic forcing, as long as this extra forcing preserves the invariant measure of the reference system. The aim is to find synthetic perturbations that reduce the nonlinear part of the response as much as possible. We present a mathematical framework for quantifying the quality of synthetic forcings, in the context of linear response theory, and discuss various possible choices for them. Our findings are illustrated with numerical results in low-dimensional systems.

28 Dec 2015

A standard approach to computing expectations with respect to a given target measure is to introduce an overdamped Langevin equation which is reversible with respect to the target distribution, and to approximate the expectation by a time-averaging estimator. As has been noted in recent papers, introducing an appropriately chosen nonreversible component to the dynamics is beneficial, both in terms of reducing the asymptotic variance and of speeding up convergence to the target distribution. In this paper we present a detailed study of the dependence of the asymptotic variance on the deviation from reversibility. Our theoretical findings are supported by numerical simulations.

In this paper, we are interested in continuous time models in which the index level induces some feedback on the dynamics of its composing stocks. More precisely, we propose a model in which the log-returns of each stock may be decomposed into a systemic part proportional to the log-returns of the index plus an idiosyncratic part. We show that, when the number of stocks in the index is large, this model may be approximated by a local volatility model for the index and a stochastic volatility model for each stock with volatility driven by the index. This result is useful from a calibration perspective: it suggests that one should first calibrate the local volatility of the index and then calibrate the dynamics of each stock. We explain how to do so in the limiting simplified model and in the original model.

This article addresses the probabilistic nature of fatigue life in structures subjected to cyclic loading with variable amplitude. Drawing on the formalisation of Miner's cumulative damage rule that we introduced in the recent article [Cartiaux, Ehrlacher, Legoll, Libal and Reygner, Prob. Eng. Mech. 2023], we apply our methodology to estimate the survival probability of an industrial structure using experimental data. The study considers both the randomness in the initial state of the structure and in the amplitude of loading cycles. The results indicate that the variability of loading cycles can be captured through the concept of deterministic equivalent damage, providing a computationally efficient method for assessing the fatigue life of the structure. Furthermore, the article highlights that the usual combination of Miner's rule and of the weakest link principle systematically overestimates the structure's fatigue life. On the case study that we consider, this overestimation reaches a multiplicative factor of more than two. We then describe how the probabilistic framework that we have introduced offers a remedy to this overestimation.
