trading-and-market-microstructure

A framework for synthetic data generation, the Prompt-driven Cognitive Computing Framework (PMCSF), simulates human cognitive imperfections and boundedness to create more authentic AI-generated text. This approach achieved a 72.7% expert review pass rate and 11,089 average views for generated content, while also enhancing financial trading strategies with a 47.4% reduction in maximum drawdown during bear markets and a 2.2 times increase in net returns during bull markets.

This paper develops a comprehensive theoretical framework that imports concepts from stochastic thermodynamics to model price impact and characterize the feasibility of round-trip arbitrage in financial markets. A trading cycle is treated as a non-equilibrium thermodynamic process, where price impact represents dissipative work and market noise plays the role of thermal fluctuations. The paper proves a Financial Second Law: under general convex impact functionals, any round-trip trading strategy yields non-positive expected profit. This structural constraint is complemented by a fluctuation theorem that bounds the probability of profitable cycles in terms of dissipated work and market volatility. The framework introduces a statistical ensemble of trading strategies governed by a Gibbs measure, leading to a free energy decomposition that connects expected cost, strategy entropy, and a market temperature parameter. The framework provides rigorous, testable inequalities linking microstructural impact to macroscopic no-arbitrage conditions, offering a novel physics-inspired perspective on market efficiency. The paper derives explicit analytical results for prototypical trading strategies and discusses empirical validation protocols.
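The non-positive round-trip profit can be illustrated in a toy special case. The sketch below is not the paper's general convex impact functional. It assumes a martingale mid price, a deterministic trade schedule, and a linear temporary impact with a hypothetical coefficient `eta`, so each signed trade `v` executes at `mid + eta * v`. Under those assumptions the mid-price terms cancel in expectation for any schedule that nets to zero, and only the dissipative impact cost remains.

```python
def expected_round_trip_pnl(trades, eta):
    """Expected P&L of a round trip under a toy linear temporary impact model.

    Assumptions (illustrative, not taken from the paper):
      - the mid price is a martingale and the schedule is deterministic,
      - a signed trade v executes at mid + eta * v, with eta >= 0,
      - the schedule is a round trip, i.e. the trades sum to zero.
    The expected mid-price cash flows cancel, leaving -eta * sum(v^2).
    """
    if eta < 0:
        raise ValueError("eta must be non-negative")
    if abs(sum(trades)) > 1e-12:
        raise ValueError("a round trip must end with zero inventory")
    return -eta * sum(v * v for v in trades)


# Buy 100 units in two clips, then sell them back in one clip.
pnl = expected_round_trip_pnl([50.0, 50.0, -100.0], eta=0.001)
print(pnl)  # -15.0
```

Splitting the sell leg into smaller clips lowers the cost in this toy model but can never make the expected profit positive.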

Autodeleveraging (ADL) is a last-resort loss socialization mechanism for perpetual futures venues. It is triggered when solvency-preserving liquidations fail. Despite the dominance of perpetual futures in the crypto derivatives market, with over \$60 trillion of volume in 2024, there has been no formal study of ADL. In this paper, we provide the first rigorous model of ADL. We prove that ADL mechanisms face a fundamental \emph{trilemma}: no policy can simultaneously satisfy exchange \emph{solvency}, \emph{revenue}, and \emph{fairness} to traders. This impossibility theorem implies that as participation scales, a novel form of \emph{moral hazard} grows asymptotically, rendering `zero-loss' socialization impossible. Constructively, we show that three classes of ADL mechanisms can optimally navigate this trilemma to provide fairness, robustness to price shocks, and maximal exchange revenue. We analyze these mechanisms on the Hyperliquid dataset from October 10, 2025, when ADL was used repeatedly to close \$2.1 billion of positions in 12 minutes. By comparing our ADL mechanisms to the standard approaches used in practice, we demonstrate empirically that Hyperliquid's production queue overutilized ADL by approximately 8× relative to our optimal policy, imposing roughly \$630 million of unnecessary haircuts on winning traders. This comparison also suggests that Binance overutilized ADL far more than Hyperliquid. Our results both theoretically and empirically demonstrate that optimized ADL mechanisms can dramatically reduce the loss of trader profits while maintaining exchange solvency.

Forecasting cryptocurrency prices is hindered by extreme volatility and a methodological dilemma between information-scarce univariate models and noise-prone full-multivariate models. This paper investigates a partial-multivariate approach to balance this trade-off, hypothesizing that a strategic subset of features offers superior predictive power. We apply the Partial-Multivariate Transformer (PMformer) to forecast daily returns for BTCUSDT and ETHUSDT, benchmarking it against eleven classical and deep learning models. Our empirical results yield two primary contributions. First, we demonstrate that the partial-multivariate strategy achieves significant statistical accuracy, effectively balancing informative signals with noise. Second, we examine and discuss an observable disconnect between this statistical performance and practical trading utility: lower prediction error did not consistently translate to higher financial returns in simulations. This finding challenges the reliance on traditional error metrics and highlights the need to develop evaluation criteria more aligned with real-world financial objectives.

Large language models (LLMs) achieve strong performance across benchmarks--from knowledge quizzes and math reasoning to web-agent tasks--but these tests occur in static settings, lacking real dynamics and uncertainty. Consequently, they evaluate isolated reasoning or problem-solving rather than decision-making under uncertainty. To address this, we introduce LiveTradeBench, a live trading environment for evaluating LLM agents in realistic and evolving markets. LiveTradeBench follows three design principles: (i) Live data streaming of market prices and news, eliminating dependence on offline backtesting and preventing information leakage while capturing real-time uncertainty; (ii) a portfolio-management abstraction that extends control from single-asset actions to multi-asset allocation, integrating risk management and cross-asset reasoning; and (iii) multi-market evaluation across structurally distinct environments--U.S. stocks and Polymarket prediction markets--differing in volatility, liquidity, and information flow. At each step, an agent observes prices, news, and its portfolio, then outputs percentage allocations that balance risk and return. Using LiveTradeBench, we run 50-day live evaluations of 21 LLMs across families. Results show that (1) high LMArena scores do not imply superior trading outcomes; (2) models display distinct portfolio styles reflecting risk appetite and reasoning dynamics; and (3) some LLMs effectively leverage live signals to adapt decisions. These findings expose a gap between static evaluation and real-world competence, motivating benchmarks that test sequential decision making and consistency under live uncertainty.
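The portfolio-management abstraction, in which an agent outputs percentage allocations at each step, can be sketched as follows. This is only an illustration and not LiveTradeBench's actual interface: the function name `step_portfolio`, the ticker labels, and the convention that any unallocated fraction sits in zero-return cash are all assumptions.

```python
def step_portfolio(value, allocations, asset_returns):
    """Advance a portfolio by one step given fractional allocations.

    value         -- portfolio value before the step
    allocations   -- dict of asset -> fraction of value, each >= 0, sum <= 1
    asset_returns -- dict of asset -> simple return realized over the step
    Any fraction not allocated is assumed to be held as cash earning zero.
    """
    total = sum(allocations.values())
    if any(w < 0 for w in allocations.values()) or total > 1.0 + 1e-9:
        raise ValueError("allocations must be non-negative and sum to at most 1")
    growth = sum(w * asset_returns.get(asset, 0.0)
                 for asset, w in allocations.items())
    return value * (1.0 + growth)


# 50% in a hypothetical asset AAA (+10%), 30% in BBB (-5%), 20% cash.
new_value = step_portfolio(100.0, {"AAA": 0.5, "BBB": 0.3},
                           {"AAA": 0.10, "BBB": -0.05})
print(round(new_value, 6))  # 103.5
```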

Researchers at UCLA, University of Washington, and Stanford, along with Tauric Research, developed Trading-R1, a financial reasoning LLM trained with a multi-stage curriculum of supervised fine-tuning and reinforcement learning to generate structured investment theses and actionable trade recommendations. The model demonstrates improved risk-adjusted returns and lower maximum drawdowns compared to baselines on a held-out dataset of major equities and ETFs.

Polymarket is a prediction market platform where users can speculate on future events by trading shares tied to specific outcomes, known as conditions. Each market is associated with a set of one or more such conditions. To ensure proper market resolution, the condition set must be exhaustive -- collectively accounting for all possible outcomes -- and mutually exclusive -- only one condition may resolve as true. Thus, the collective prices of all related outcomes should be \$1, representing a combined probability of 1 of any outcome. Despite this design, Polymarket exhibits cases where dependent assets are mispriced, allowing for purchasing (or selling) a certain outcome for less than (or more than) \$1, guaranteeing profit. This phenomenon, known as arbitrage, could enable sophisticated participants to exploit such inconsistencies.

In this paper, we conduct an empirical arbitrage analysis on Polymarket data to answer three key questions: (Q1) What conditions give rise to arbitrage? (Q2) Does arbitrage actually occur on Polymarket? (Q3) Has anyone exploited these opportunities? A major challenge in analyzing arbitrage between related markets lies in the scalability of comparisons across a large number of markets and conditions, with a naive analysis requiring O(2^{n+m}) comparisons. To overcome this, we employ a heuristic-driven reduction strategy based on timeliness, topical similarity, and combinatorial relationships, further validated by expert input.

Our study reveals two distinct forms of arbitrage on Polymarket: Market Rebalancing Arbitrage, which occurs within a single market or condition, and Combinatorial Arbitrage, which spans across multiple markets. We use on-chain historical order book data to analyze when these types of arbitrage opportunities have existed, and when they have been executed by users. We find a realized estimate of 40 million USD of profit extracted.
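The single-market case (Market Rebalancing Arbitrage) reduces to checking whether the prices of a mutually exclusive, exhaustive condition set add up to 1. The sketch below is a simplified illustration and not the paper's detection method: the function name and tolerance are hypothetical, prices are taken as one quote per condition, and fees, order book depth, and execution risk are ignored.

```python
def rebalancing_arbitrage(yes_prices, tol=1e-9):
    """Check one market's condition prices for a rebalancing opportunity.

    yes_prices -- price of the YES share of every condition in the market,
                  assumed mutually exclusive and exhaustive, so exactly one
                  share pays out 1 at resolution.
    Returns (action, gross profit per full set of shares).
    """
    total = sum(yes_prices)
    if total < 1.0 - tol:
        # Buying one share of every condition costs less than the payout of 1.
        return ("buy_all", 1.0 - total)
    if total > 1.0 + tol:
        # Selling one share of every condition collects more than the payout owed.
        return ("sell_all", total - 1.0)
    return ("none", 0.0)


action, profit = rebalancing_arbitrage([0.40, 0.35, 0.20])
print(action, round(profit, 4))  # buy_all 0.05
```

Combinatorial Arbitrage across several dependent markets needs the logical relationships between conditions and is not covered by this check.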

In financial trading, large language model (LLM)-based agents demonstrate significant potential. However, their high sensitivity to market noise undermines the performance of LLM-based trading systems. To address this limitation, we propose a novel multi-agent system featuring an internal competitive mechanism inspired by modern corporate management structures. The system consists of two specialized teams: (1) Data Team - responsible for processing and condensing massive market data into diversified text factors, ensuring they fit the model's constrained context. (2) Research Team - tasked with making parallelized multipath trading decisions based on deep research methods. The core innovation lies in implementing a real-time evaluation and ranking mechanism within each team, driven by authentic market feedback. Each agent's performance undergoes continuous scoring and ranking, with only outputs from top-performing agents being adopted. The design enables the system to adapt to a dynamic environment, enhances robustness against market noise, and ultimately delivers superior trading performance. Experimental results demonstrate that our proposed system significantly outperforms prevailing multi-agent systems and traditional quantitative investment methods across diverse evaluation metrics. ContestTrade is open-sourced on GitHub.

This paper provides a comprehensive empirical analysis of the economics and dynamics behind arbitrages between centralized and decentralized exchanges (CEX-DEX) on Ethereum. We refine heuristics to identify arbitrage transactions from on-chain data and introduce a robust empirical framework to estimate arbitrage revenue without knowing traders' actual behaviors on CEX. Leveraging an extensive dataset spanning 19 months from August 2023 to March 2025, we estimate a total of 233.8M USD extracted by 19 major CEX-DEX searchers from 7,203,560 identified CEX-DEX arbitrages. Our analysis reveals increasing centralization trends as three searchers captured three-quarters of both volume and extracted value. We also demonstrate that searchers' profitability is tied to their integration level with block builders and uncover exclusive searcher-builder relationships and their market impact. Finally, we correct the previously underestimated profitability of block builders who vertically integrate with a searcher. These insights illuminate the darkest corner of the MEV landscape and highlight the critical implications of CEX-DEX arbitrages for Ethereum's decentralization.

Quoting algorithms are fundamental to electronic trading systems, enabling participants to post limit orders in a systematic and adaptive manner. In multi-asset or multi-contract settings, selecting the appropriate reference instrument for pricing quotes is essential to managing execution risk and minimizing trading costs. This work presents a framework for reference selection based on predictive modeling of short-term price stability. We employ multivariate Hawkes processes to model the temporal clustering and cross-excitation of order flow events, capturing the dynamics of activity at the top of the limit order book. To complement this, we introduce a Composite Liquidity Factor (CLF) that provides instantaneous estimates of slippage based on structural features of the book, such as price discontinuities and depth variation across levels. Unlike Hawkes processes, which capture temporal dependencies but not the absolute price structure of the book, the CLF offers a static snapshot of liquidity. A rolling voting mechanism is used to convert these signals into real-time reference decisions. Empirical evaluation on high-frequency market data demonstrates that forecasts derived from the Hawkes process align more closely with market-optimal quoting choices than those based on CLF. These findings highlight the complementary roles of dynamic event modeling and structural liquidity metrics in guiding quoting behavior under execution constraints.
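For readers unfamiliar with multivariate Hawkes processes, the conditional intensity of each event type is a baseline rate plus decaying contributions from past events of every type. The sketch below assumes an exponential kernel with a shared decay rate `beta`. The abstract does not state which kernel the authors use, so the kernel choice and all parameter names here are assumptions.

```python
import math


def hawkes_intensity(t, mu, alpha, beta, events):
    """Intensity of each event type at time t under an exponential kernel.

    mu     -- baseline intensity per event type
    alpha  -- alpha[i][j]: excitation of type i caused by an event of type j
    beta   -- decay rate shared by all kernels (an illustrative simplification)
    events -- events[j]: past event times of type j
    lambda_i(t) = mu[i] + sum_j sum_{s in events[j], s < t}
                  alpha[i][j] * exp(-beta * (t - s))
    """
    lam = list(mu)
    for i in range(len(mu)):
        for j, times in enumerate(events):
            for s in times:
                if s < t:
                    lam[i] += alpha[i][j] * math.exp(-beta * (t - s))
    return lam


# Two event types, e.g. best-bid and best-ask updates (labels are hypothetical).
mu = [0.5, 0.5]
alpha = [[0.2, 0.1],
         [0.0, 0.3]]
print(hawkes_intensity(1.0, mu, alpha, beta=1.0, events=[[0.0], []]))
```

The off-diagonal entries of `alpha` carry the cross-excitation between order flow event types that the abstract refers to.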

We derive the arbitrage gains or, equivalently, Loss Versus Rebalancing (LVR) for arbitrage between \textit{two imperfectly liquid} markets, extending prior work that assumes the existence of an infinitely liquid reference market. Our result highlights that the LVR depends on the relative liquidity and relative trading volume of the two markets between which arbitrage gains are extracted. Our model assumes that trading costs on at least one of the markets are quadratic. This assumption holds well in practice, with the exception of highly liquid major pairs on centralized exchanges, for which we discuss extensions to other cost functions.

In this work, we aim to reconcile several apparently contradictory observations in market microstructure: is the famous "square-root law" of metaorder impact, which decays with time, compatible with the random-walk nature of prices and the linear impact of order imbalances? Can one entirely explain the volatility of prices as resulting from the flow of uninformed metaorders that mechanically impact them? We introduce a new theoretical framework to describe metaorders with different signs, sizes and durations, which all impact prices as a square-root of volume but with a subsequent time decay. We show that, as in the original propagator model, price diffusion is ensured by the long memory of cross-correlations between metaorders. In order to account for the effect of strongly fluctuating volumes q of individual trades, we further introduce two q-dependent exponents, which allow us to describe how the moments of generalized volume imbalance and the correlation between price changes and generalized order flow imbalance scale with T. We predict in particular that the corresponding power-laws depend in a non-monotonic fashion on a parameter a, which allows one to put the same weight on all child orders or to overweight large ones, a behaviour that is clearly borne out by empirical data. We also predict that the correlation between price changes and volume imbalances should display a maximum as a function of a, which again matches observations. Such noteworthy agreement between theory and data suggests that our framework correctly captures the basic mechanism at the heart of price formation, namely the average impact of metaorders. We argue that our results support the "Order-Driven" theory of excess volatility, and are at odds with the idea that a "Fundamental" component accounts for a large share of the volatility of financial markets.
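The "square-root law" mentioned above is commonly written as I(Q) = Y · σ · sqrt(Q / V), where Q is the metaorder size, V the daily traded volume, σ the daily volatility, and Y a prefactor of order one. The sketch below uses that common parameterization, which is an assumption here because the abstract does not spell out the formula. It covers only the peak impact and leaves out the subsequent time decay.

```python
import math


def sqrt_impact(q, daily_volume, daily_volatility, y=1.0):
    """Peak impact of a metaorder of size q under the square-root law.

    Uses the common form I(Q) = Y * sigma * sqrt(Q / V); the prefactor y
    and this exact parameterization are assumptions, not taken from the paper.
    """
    if q < 0 or daily_volume <= 0:
        raise ValueError("q must be >= 0 and daily_volume must be > 0")
    return y * daily_volatility * math.sqrt(q / daily_volume)


small = sqrt_impact(1_000, daily_volume=1_000_000, daily_volatility=0.02)
large = sqrt_impact(4_000, daily_volume=1_000_000, daily_volatility=0.02)
print(large / small)  # close to 2: quadrupling the size only doubles the impact
```

The concavity shown by the ratio is what makes the law hard to reconcile with the linear impact of aggregate order imbalances, which is the tension the abstract sets out to resolve.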

Cryptocurrency price dynamics are driven largely by microstructural supply-demand imbalances in the limit order book (LOB), yet the highly noisy nature of LOB data complicates the signal extraction process. Prior research has demonstrated that deep-learning architectures can yield promising predictive performance on pre-processed equity and futures LOB data, but they often treat model complexity as an unqualified virtue. In this paper, we aim to examine whether adding extra hidden layers or parameters to "blackbox-ish" neural networks genuinely enhances short-term price forecasting, or if gains are primarily attributable to data preprocessing and feature engineering. We benchmark a spectrum of models, from interpretable baselines (logistic regression, XGBoost) to deep architectures (DeepLOB, Conv1D+LSTM), on BTC/USDT LOB snapshots sampled at 100 ms to multi-second intervals using publicly available Bybit data. We introduce two data filtering pipelines (Kalman, Savitzky-Golay) and evaluate both binary (up/down) and ternary (up/flat/down) labeling schemes. Our analysis compares models on out-of-sample accuracy, latency, and robustness to noise. Results reveal that, with data preprocessing and hyperparameter tuning, simpler models can match and even exceed the performance of more complex networks, offering faster inference and greater interpretability.

This research explores the capabilities of large language models as trading agents in simulated financial markets. It demonstrates that LLMs can effectively execute diverse trading strategies and influence market dynamics, exhibiting behaviors such as price convergence and liquidity provision, though with an observed asymmetry in correcting undervaluation versus overvaluation.

A comprehensive survey examines the evolution of AI in quantitative investment across three distinct phases - from traditional statistical models to deep learning approaches and emerging LLM-based methods - mapping out technical approaches, practical challenges, and future directions while connecting isolated research efforts into a unified framework for alpha strategy development.

Researchers from the University of Oxford and Queen Mary University of London conducted live trading experiments on a cryptocurrency market to empirically analyze limit order book mechanics. They quantified adverse selection's impact on common trading strategies and developed a predictive model for "Reversals," achieving an annualized Sharpe ratio of +11.97 for a balanced-inventory strategy.

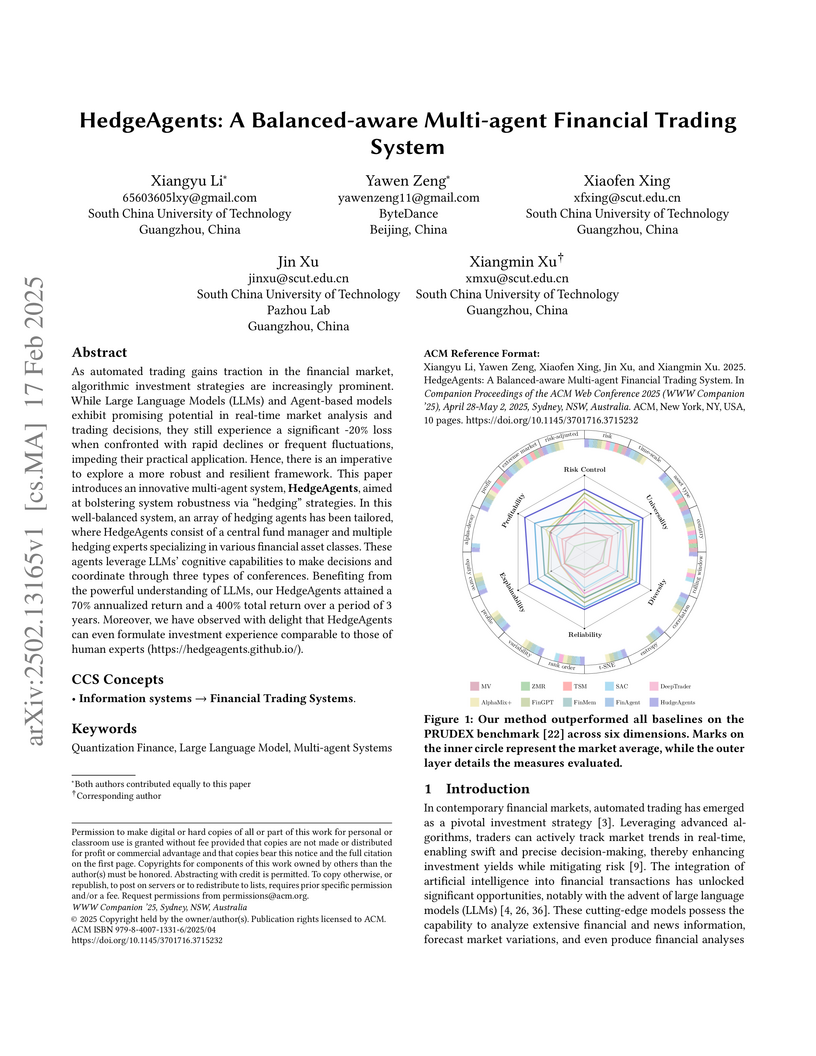

A groundbreaking multi-agent trading system from South China University of Technology and ByteDance achieves exceptional market performance (70% annualized return, 400% total return over 3 years) through innovative coordination between LLM-powered specialized hedging agents, demonstrating unprecedented stability during extreme market conditions while maintaining consistent profitability.

TradingAgents is a multi-agent LLM framework designed to mimic the specialized roles and collaborative decision-making processes of a financial trading firm. This framework, developed by researchers from UCLA, MIT, and Tauric Research, achieved superior cumulative returns (e.g., 26.62% for AAPL) and Sharpe Ratios (e.g., 8.21 for AAPL) compared to traditional algorithmic strategies in backtesting, while also providing high explainability for its trading decisions.

We introduce a new software toolbox for agent-based simulation. Facilitating rapid prototyping by offering a user-friendly Python API, its core rests on an efficient C++ implementation to support simulation of large-scale multi-agent systems. Our software environment benefits from a versatile message-driven architecture. Originally developed to support research on financial markets, it offers the flexibility to simulate a wide-range of different (easily customisable) market rules and to study the effect of auxiliary factors, such as delays, on the market dynamics. As a simple illustration, we employ our toolbox to investigate the role of the order processing delay in normal trading and for the scenario of a significant price change. Owing to its general architecture, our toolbox can also be employed as a generic multi-agent system simulator. We provide an example of such a non-financial application by simulating a mechanism for the coordination of no-regret learning agents in a multi-agent network routing scenario previously proposed in the literature.

Despite its practical significance, generating realistic synthetic financial time series is challenging due to statistical properties known as stylized facts, such as fat tails, volatility clustering, and seasonality patterns. Various generative models, including generative adversarial networks (GANs) and variational autoencoders (VAEs), have been employed to address this challenge, although no model yet satisfies all the stylized facts. We alternatively propose utilizing diffusion models, specifically denoising diffusion probabilistic models (DDPMs), to generate synthetic financial time series. This approach employs wavelet transformation to convert multiple time series (into images), such as stock prices, trading volumes, and spreads. Given these converted images, the model gains the ability to generate images that can be transformed back into realistic time series by inverse wavelet transformation. We demonstrate that our proposed approach satisfies stylized facts.
