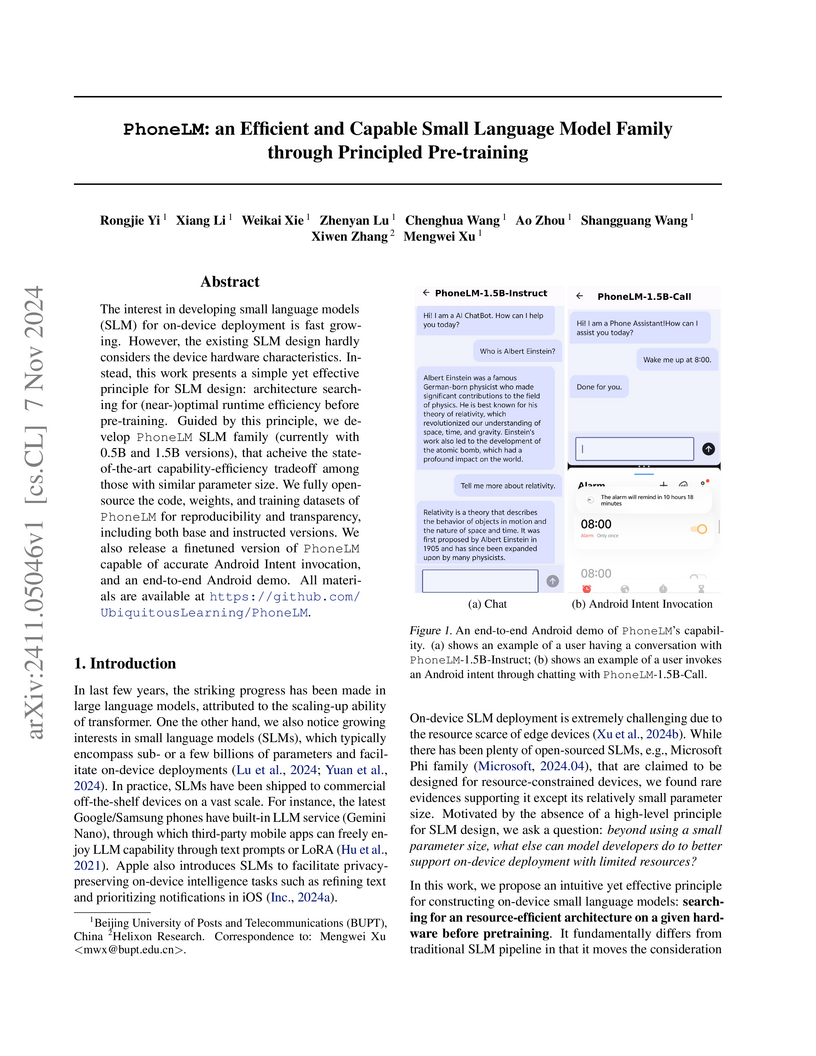

This work presents a comprehensive survey of 70 small language models (SLMs) and benchmarks their capabilities and on-device runtime costs on edge AI devices and smartphones. It reveals that SLMs are rapidly improving, with some models approaching or even surpassing the performance of 7-billion-parameter models on various tasks.

The paper introduces InstaFlow, a novel pipeline that transforms multi-step Stable Diffusion into an ultra-fast, high-quality one-step generator for text-to-image synthesis by leveraging Rectified Flow's reflow procedure and knowledge distillation. InstaFlow-0.9B achieves an FID of 23.4 on MS COCO 2017-5k in 0.09 seconds, outperforming previous one-step diffusion distillation methods and competing with GAN-based models in speed while maintaining diffusion model quality.

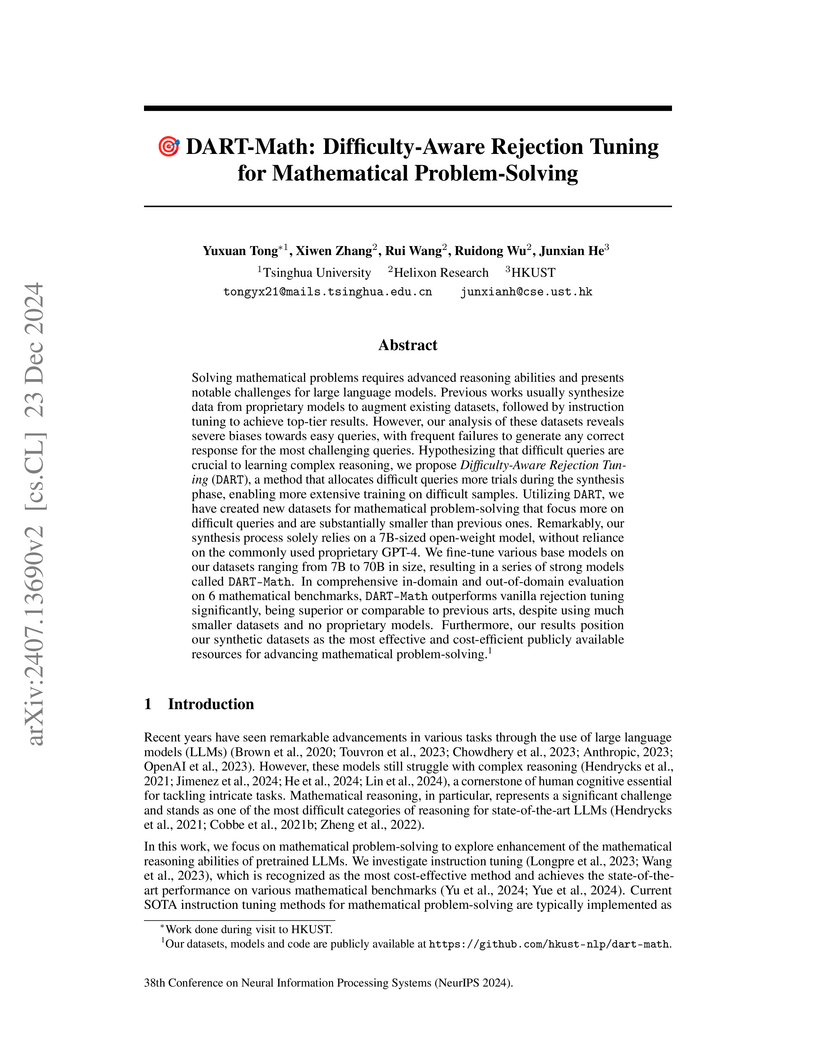

DART-Math introduces a Difficulty-Aware Rejection Tuning (DART) method to synthesize instruction tuning data, improving large language models' mathematical reasoning by counteracting the bias towards easy problems in synthetic datasets. This approach enables training high-performing open-weight models that achieve competitive results on various math benchmarks without relying on proprietary data generation models like GPT-4.

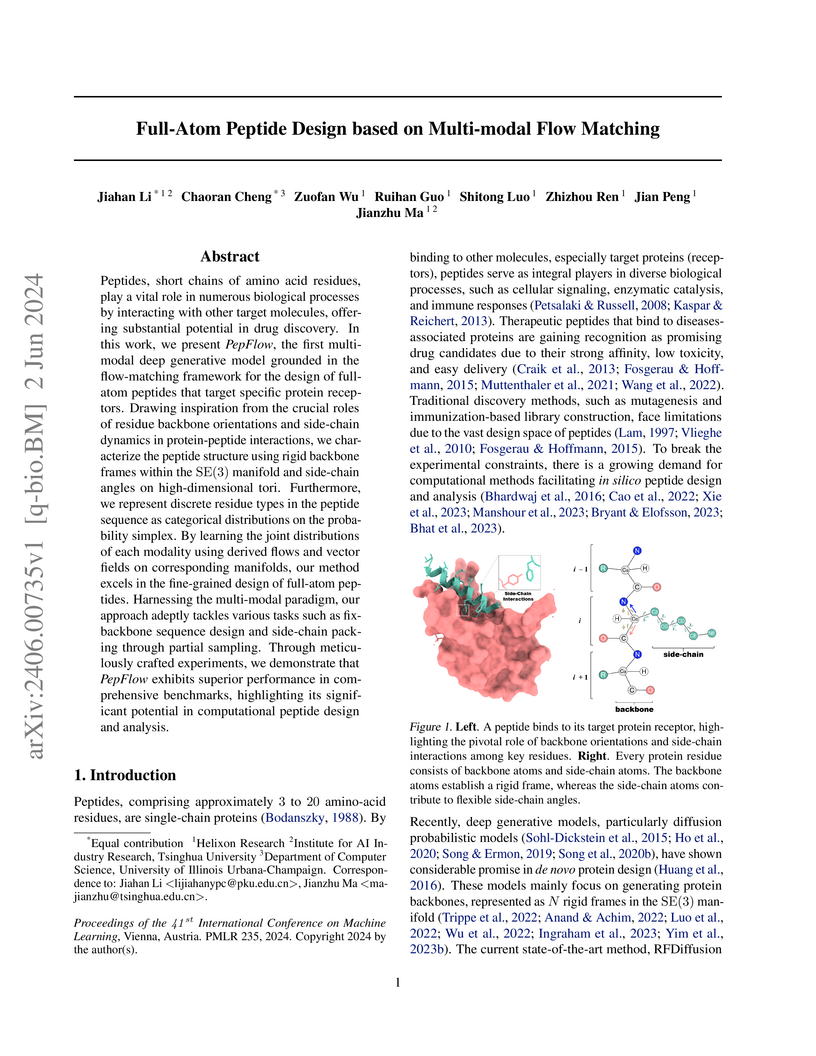

This paper presents PepFlow, a multi-modal flow matching approach that simultaneously generates peptide sequence and full-atom structure, conditioned on a target protein. PepFlow achieves lower RMSD and more native-like binding energy profiles compared to existing methods, demonstrating improved accuracy in peptide design.

University of Cambridge

University of Texas at Austin

Tsinghua University

HKUST

University of Illinois at Urbana-Champaign

Shanghai Jiao Tong University

The Chinese University of Hong Kong

University of Washington

Peking University