Hongkong University

Recently, text-guided scalable vector graphics (SVGs) synthesis has shown

promise in domains such as iconography and sketch. However, existing

text-to-SVG generation methods lack editability and struggle with visual

quality and result diversity. To address these limitations, we propose a novel

text-guided vector graphics synthesis method called SVGDreamer. SVGDreamer

incorporates a semantic-driven image vectorization (SIVE) process that enables

the decomposition of synthesis into foreground objects and background, thereby

enhancing editability. Specifically, the SIVE process introduces

attention-based primitive control and an attention-mask loss function for

effective control and manipulation of individual elements. Additionally, we

propose a Vectorized Particle-based Score Distillation (VPSD) approach to

address issues of shape over-smoothing, color over-saturation, limited

diversity, and slow convergence of the existing text-to-SVG generation methods

by modeling SVGs as distributions of control points and colors. Furthermore,

VPSD leverages a reward model to re-weight vector particles, which improves

aesthetic appeal and accelerates convergence. Extensive experiments are

conducted to validate the effectiveness of SVGDreamer, demonstrating its

superiority over baseline methods in terms of editability, visual quality, and

diversity. Project page: this https URL

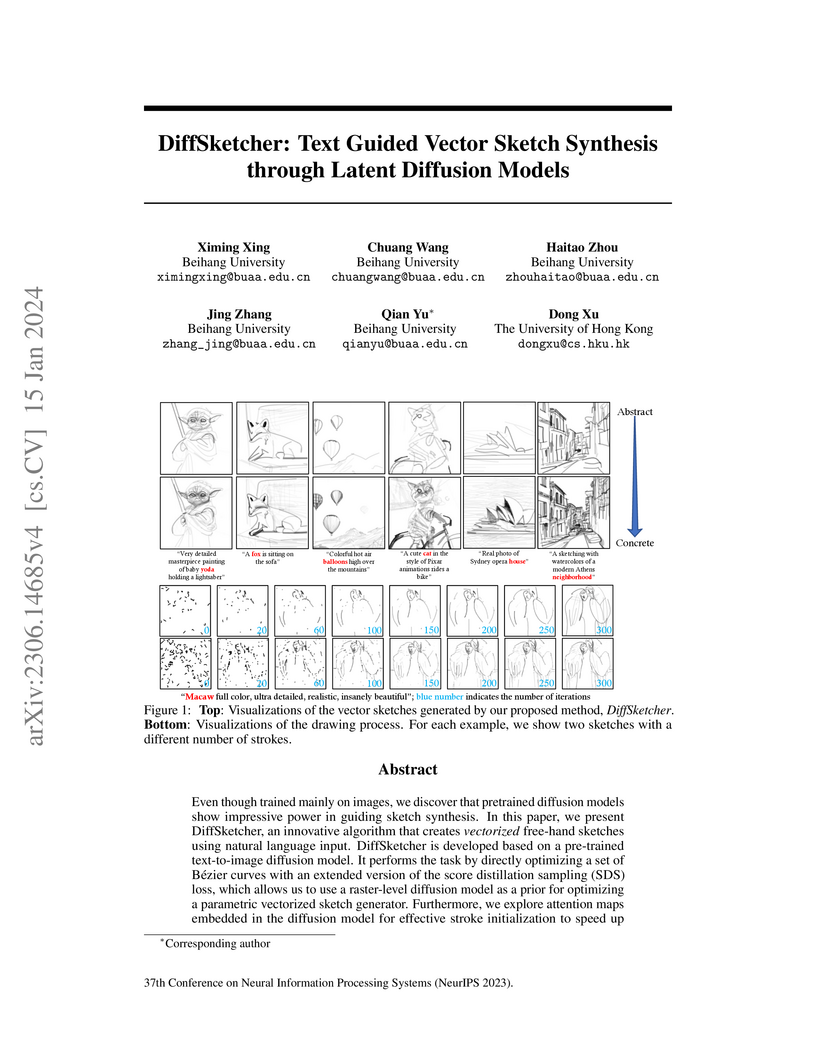

Even though trained mainly on images, we discover that pretrained diffusion models show impressive power in guiding sketch synthesis. In this paper, we present DiffSketcher, an innovative algorithm that creates \textit{vectorized} free-hand sketches using natural language input. DiffSketcher is developed based on a pre-trained text-to-image diffusion model. It performs the task by directly optimizing a set of Bézier curves with an extended version of the score distillation sampling (SDS) loss, which allows us to use a raster-level diffusion model as a prior for optimizing a parametric vectorized sketch generator. Furthermore, we explore attention maps embedded in the diffusion model for effective stroke initialization to speed up the generation process. The generated sketches demonstrate multiple levels of abstraction while maintaining recognizability, underlying structure, and essential visual details of the subject drawn. Our experiments show that DiffSketcher achieves greater quality than prior work. The code and demo of DiffSketcher can be found at this https URL.

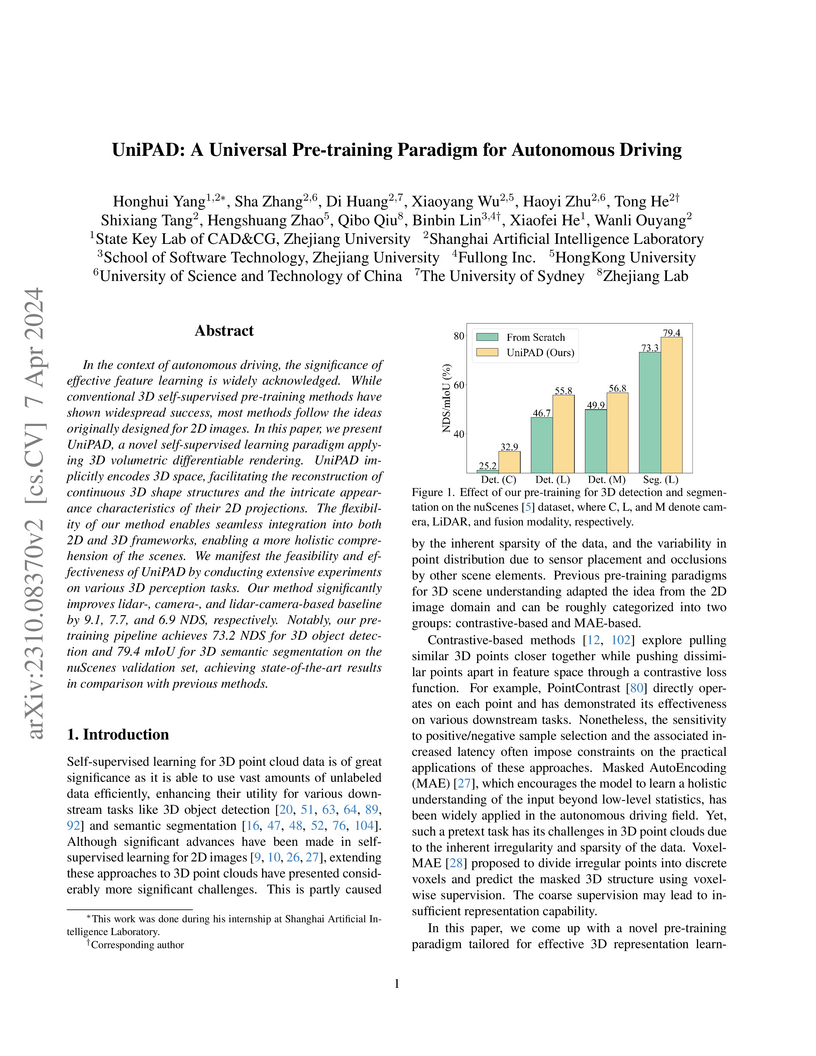

UniPAD introduces a self-supervised pre-training paradigm for autonomous driving that leverages 3D volumetric differentiable rendering to learn universal and continuous 3D scene representations. This approach enhances performance across diverse 3D object detection and semantic segmentation tasks for LiDAR, camera, and fusion modalities, achieving state-of-the-art comparable results on the nuScenes dataset.

Researchers from Tsinghua, Hongkong, and Stanford Universities introduced Task-aware Lipschitz Data Augmentation (TLDA), a method that selectively applies visual augmentations to only task-irrelevant pixels in reinforcement learning observations. This approach enhances policy generalization and training stability by preserving critical visual information, outperforming existing methods on various benchmarks like DMC-GB and CARLA.

Magnetic frustration has been recognized as pivotal to investigating new phases of matter in correlation-driven Kondo breakdown quantum phase transitions that are not clearly associated with broken symmetry. The nature of these new phases, however, remains underexplored. Here, we report quantum criticalities emerging from a cluster spin-glass in the heavy-fermion metal TiFexCu2x−1Sb, where frustration originates from intrinsic disorder. Specific heat and magnetic Grüneisen parameter measurements under varying magnetic fields exhibit quantum critical scaling, indicating a quantum critical point near 0.13 Tesla. As the magnetic field increases, the cluster spin-glass phase is progressively suppressed. Upon crossing the quantum critical point, resistivity and Hall effect measurements reveal enhanced screening of local moments and an expanding Fermi surface, consistent with the Kondo breakdown scenario.

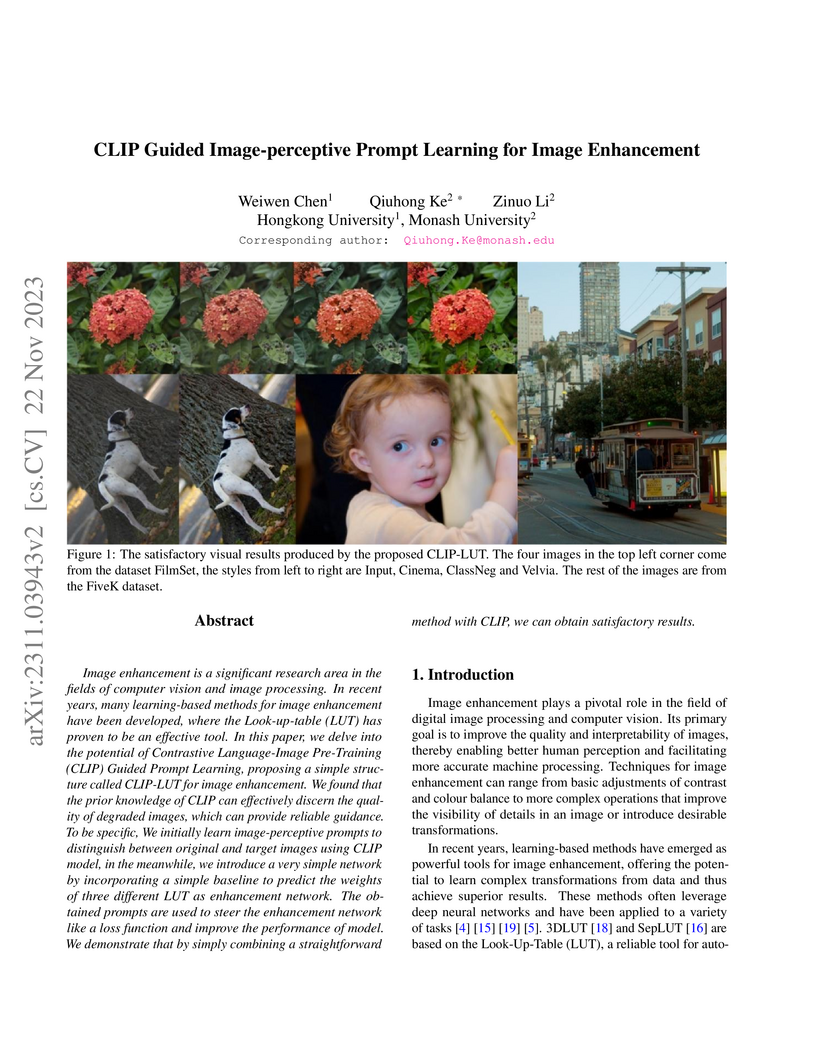

Image enhancement is a significant research area in the fields of computer vision and image processing. In recent years, many learning-based methods for image enhancement have been developed, where the Look-up-table (LUT) has proven to be an effective tool. In this paper, we delve into the potential of Contrastive Language-Image Pre-Training (CLIP) Guided Prompt Learning, proposing a simple structure called CLIP-LUT for image enhancement. We found that the prior knowledge of CLIP can effectively discern the quality of degraded images, which can provide reliable guidance. To be specific, We initially learn image-perceptive prompts to distinguish between original and target images using CLIP model, in the meanwhile, we introduce a very simple network by incorporating a simple baseline to predict the weights of three different LUT as enhancement network. The obtained prompts are used to steer the enhancement network like a loss function and improve the performance of model. We demonstrate that by simply combining a straightforward method with CLIP, we can obtain satisfactory results.

Researchers developed Kuaiji, the first open-source Chinese accounting Large Language Model, and CAtAcctQA, the first Chinese accounting dataset. This model demonstrates enhanced accuracy and comprehensive response capabilities for complex accounting queries, outperforming general LLMs by continuous pre-training and supervised fine-tuning on domain-specific data.

There are no more papers matching your filters at the moment.