IOTA Foundation

The study of group testing aims to develop strategies to identify a small set of defective items among a large population using a few pooled tests. The established techniques have been highly beneficial in a broad spectrum of applications ranging from channel communication to identifying COVID-19-infected individuals efficiently. Despite significant research on group testing and its variants since the 1940s, testing strategies robust to deletion noise have yet to be studied. Many practical systems exhibit deletion errors, for instance, wireless communication and data storage systems. Such deletions of test outcomes lead to asynchrony between the tests, which current group testing strategies cannot handle. In this work, we initiate the study of non-adaptive group testing strategies resilient to deletion noise. We characterize the necessary and sufficient conditions to successfully identify the defective items even after the adversarial deletion of certain test outputs. We also provide constructions of testing matrices along with an efficient recovery algorithm.
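To make the asynchrony problem concrete, here is a minimal sketch of ordinary noiseless non-adaptive group testing and of how a single deleted outcome breaks a standard decoder. It is not the deletion-resilient construction from the paper: the 4-test, 6-item matrix, the function names, and the choice of the simple COMP decoder are all assumptions made for illustration.

```python
# Illustrative design (an assumption, not taken from the paper):
# each of the 6 items joins a distinct pair of the 4 tests,
# which is enough to identify a single defective item.
MATRIX = [
    [1, 1, 1, 0, 0, 0],  # test 0 pools items 0, 1, 2
    [1, 0, 0, 1, 1, 0],  # test 1 pools items 0, 3, 4
    [0, 1, 0, 1, 0, 1],  # test 2 pools items 1, 3, 5
    [0, 0, 1, 0, 1, 1],  # test 3 pools items 2, 4, 5
]

def run_tests(matrix, defectives):
    """A pooled test is positive iff its pool contains a defective item."""
    return [any(row[j] for j in defectives) for row in matrix]

def comp_decode(matrix, outcomes):
    """COMP decoding: every item that appears in a negative test is cleared."""
    n = len(matrix[0])
    candidates = set(range(n))
    for row, positive in zip(matrix, outcomes):
        if not positive:
            candidates -= {j for j in range(n) if row[j]}
    return candidates

outcomes = run_tests(MATRIX, {3})
recovered = comp_decode(MATRIX, outcomes)

# Deleting the first outcome shifts every later outcome onto the wrong test,
# so the decoder clears the wrong items.
shifted = outcomes[1:]
recovered_after_deletion = comp_decode(MATRIX, shifted)
```

With all four outcomes present the decoder returns the defective item; once the first outcome is dropped, the remaining outcomes are matched against the wrong pools and the true defective is cleared by mistake.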

29 Jan 2024

This work focuses on non-adaptive group testing, with a primary goal of efficiently identifying a set of at most d defective elements among a given set of elements using the fewest possible number of tests. Non-adaptive combinatorial group testing often employs disjunctive codes and union-free codes. This paper discusses union-free codes with fast decoding (UFFD codes), a recently introduced class of union-free codes that combine the best of both worlds -- the linear complexity decoding of disjunctive codes and the fewest number of tests of union-free codes. In our study, we distinguish two subclasses of these codes -- one subclass, denoted as (=d)-UFFD codes, can be used when the number of defectives d is a priori known, whereas (≤d)-UFFD codes work for any subset of at most d defectives. Previous studies have established a lower bound on the rate of these codes for d=2. Our contribution lies in deriving new lower bounds on the rate for both (=d)- and (≤d)-UFFD codes for an arbitrary number d≥2 of defectives. Our results show that for d→∞, the rate of (=d)-UFFD codes is twice as large as the best-known lower bound on the rate of d-disjunctive codes. In addition, the rate of (≤d)-UFFD codes is shown to be better than the known lower bound on the rate of d-disjunctive codes for small values of d.

13 Jul 2021

Primality generation is the cornerstone of several essential cryptographic systems. The problem has been a subject of deep investigations, but there is still substantial room for improvement. Typically, the algorithms used have two parts: trial divisions aimed at eliminating numbers with small prime factors, and primality tests based on an easy-to-compute statement that is valid for primes and invalid for composites. In this paper, we showcase a technique that eliminates the first phase of the primality testing algorithms. The computational simulations show a reduction of the primality generation time by about 30% in the case of 1024-bit RSA key pairs. This can be particularly beneficial in decentralized environments for shared RSA keys, as the initial trial division part of the key generation algorithms can be avoided at no cost. This also significantly reduces the communication complexity. Another essential contribution of the paper is the introduction of a new one-way function that is computationally simpler than the existing ones used in public-key cryptography. This function can be used to create new random number generators, and it could potentially be used for designing entirely new public-key encryption systems.
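For context, the conventional two-phase check that the abstract describes can be sketched as follows. This shows only the baseline structure, not the paper's technique for removing the first phase; the list of small primes, the use of Miller-Rabin as the second phase, the round count, and all function names are assumptions chosen for illustration.

```python
import random

# Phase 1 sieve: an arbitrary short list of small primes (an assumption).
SMALL_PRIMES = [2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37]

def passes_trial_division(n):
    """Reject n if a small prime divides it (unless n is that prime)."""
    for p in SMALL_PRIMES:
        if n % p == 0:
            return n == p
    return True

def miller_rabin(n, rounds=20):
    """Probabilistic primality test; a composite passes with tiny probability."""
    if n < 2:
        return False
    if n in (2, 3):
        return True
    if n % 2 == 0:
        return False
    # Write n - 1 as d * 2**s with d odd.
    d, s = n - 1, 0
    while d % 2 == 0:
        d //= 2
        s += 1
    for _ in range(rounds):
        a = random.randrange(2, n - 1)
        x = pow(a, d, n)
        if x in (1, n - 1):
            continue
        for _ in range(s - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False  # a is a witness that n is composite
    return True

def is_probable_prime(n):
    """Cheap trial division first, then the more expensive test."""
    return n >= 2 and passes_trial_division(n) and miller_rabin(n)
```

In this baseline, trial division exists purely to discard most candidates before the costlier modular exponentiations; that filtering step is the part the paper proposes to avoid.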

Following the design of more efficient blockchain consensus algorithms, the execution layer has emerged as the new performance bottleneck of blockchains, especially under high contention. Current parallel execution frameworks rely either on optimistic concurrency control (OCC) or on pessimistic concurrency control (PCC), both of which see their performance decrease when workloads are highly contended, albeit for different reasons. In this work, we present NEMO, a new blockchain execution engine that combines OCC with the object data model to address this challenge. NEMO introduces four core innovations: (i) a greedy commit rule for transactions using only owned objects; (ii) refined handling of dependencies to reduce re-executions; (iii) the use of incomplete but statically derivable read/write hints to guide execution; and (iv) a priority-based scheduler that favors transactions that unblock others. Through simulated execution experiments, we demonstrate that NEMO significantly reduces redundant computation and achieves higher throughput than representative approaches. For example, with 16 workers, NEMO's throughput is up to 42% higher than that of Block-STM, the state-of-the-art OCC approach, and 61% higher than that of the PCC baseline used.

This paper investigates the issue of fairness in Distributed Ledger Technology (DLT), specifically focusing on the shortcomings observed in current blockchain systems due to Miner Extractable Value (MEV) phenomena and systemic centralization. We explore the potential of Directed Acyclic Graphs (DAGs) as a solution to address or mitigate these fairness concerns. Our objective is to gain a comprehensive understanding of fairness in DAG-based DLTs by examining its different aspects and measurement metrics. We aim to establish a shared knowledge base that facilitates accurate fairness assessment and allows for an evaluation of whether DAG-based DLTs offer a more equitable design. We describe the various dimensions of fairness and conduct a comparative analysis to examine how they relate to different components of DLTs. This analysis serves as a catalyst for further research, encouraging the development of cryptographic systems that promote fairness.

In many smart contract architectures, every contract or object is mutably shared by default. The Sui smart contract platform bears the unique feature of distinguishing between shared and owned objects. While transactions operating on shared objects require consensus to sequence reads and writes, those involving only owned objects are independent and may bypass consensus; thus, the latter are less prone to this throughput bottleneck. However, it may not always be possible or desirable to avoid using shared objects. This article aims at identifying and investigating decentralized applications that require shared objects. Utilizing the Sui Rust SDK to query programmable transaction blocks, we analyze the frequency of transactions involving shared objects, shared resource contention levels, and the most "popular" applications that contain shared objects. The presented results are reproducible and show the extensive usage of shared objects in Sui, low contention levels, and moderate dependency among shared objects in atomic transactions. This novel study of shared object use cases in a relatively new smart contract platform is important for improving the efficiency of such object-based architectures. This work is relevant for smart contract platform designers and smart contract developers.

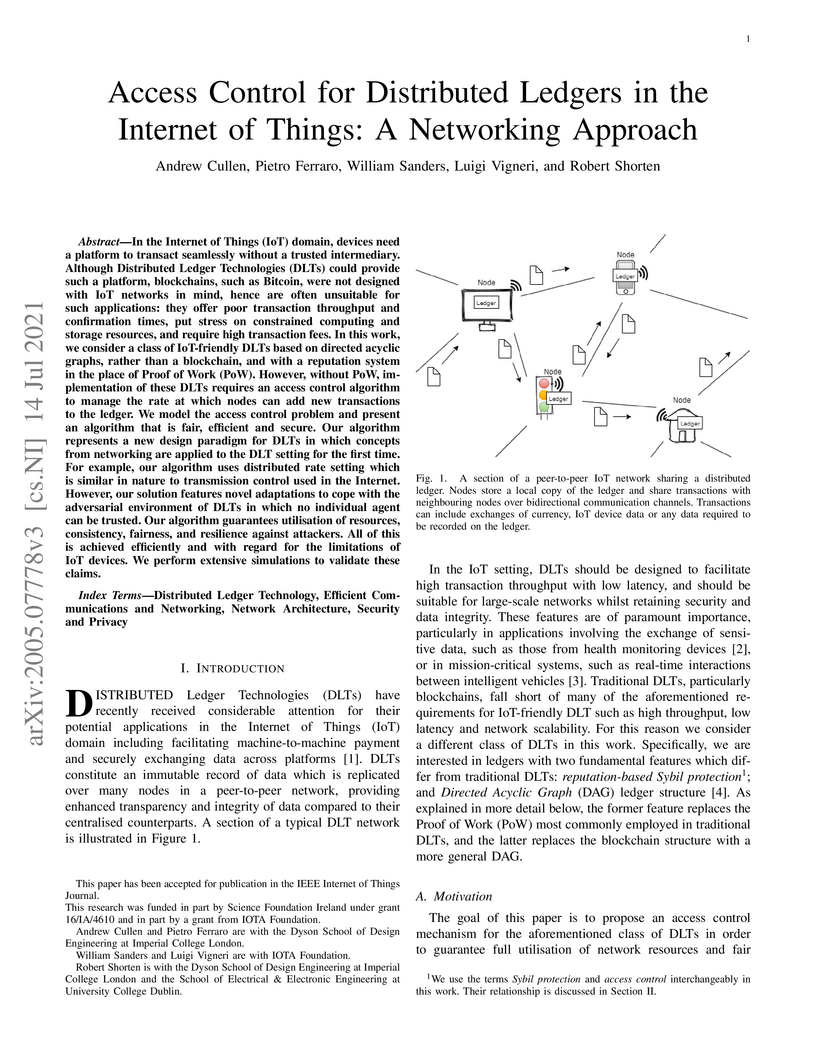

In the Internet of Things (IoT) domain, devices need a platform to transact seamlessly without a trusted intermediary. Although Distributed Ledger Technologies (DLTs) could provide such a platform, blockchains, such as Bitcoin, were not designed with IoT networks in mind, hence are often unsuitable for such applications: they offer poor transaction throughput and confirmation times, put stress on constrained computing and storage resources, and require high transaction fees. In this work, we consider a class of IoT-friendly DLTs based on directed acyclic graphs, rather than a blockchain, and with a reputation system in the place of Proof of Work (PoW). However, without PoW, implementation of these DLTs requires an access control algorithm to manage the rate at which nodes can add new transactions to the ledger. We model the access control problem and present an algorithm that is fair, efficient and secure. Our algorithm represents a new design paradigm for DLTs in which concepts from networking are applied to the DLT setting for the first time. For example, our algorithm uses distributed rate setting which is similar in nature to transmission control used in the Internet. However, our solution features novel adaptations to cope with the adversarial environment of DLTs in which no individual agent can be trusted. Our algorithm guarantees utilisation of resources, consistency, fairness, and resilience against attackers. All of this is achieved efficiently and with regard for the limitations of IoT devices. We perform extensive simulations to validate these claims.

Distributed Ledger Technologies (DLTs) promise decentralization, transparency, and security, yet the reality often falls short due to fundamental governance flaws. Poorly designed governance frameworks leave these systems vulnerable to coercion, vote-buying, centralization of power, and malicious protocol exploits: threats that undermine the very principles of fairness and equity these technologies seek to uphold. This paper surveys the state of DLT governance, identifies critical vulnerabilities, and highlights the absence of universally accepted best practices for good governance. By bridging insights from cryptography, social choice theory, and e-voting systems, we not only present a comprehensive taxonomy of governance properties essential for safeguarding DLTs but also point to technical solutions that can deliver these properties in practice. This work underscores the urgent need for robust, transparent, and enforceable governance mechanisms. Ensuring good governance is not merely a technical necessity but a societal imperative to protect the public interest, maintain trust, and realize the transformative potential of DLTs for social good.

This paper introduces Slipstream, a Byzantine Fault Tolerance (BFT) protocol where nodes concurrently propose blocks to be added to a Directed Acyclic Graph (DAG) and aim to agree on block ordering. Slipstream offers two types of block orderings: an optimistic ordering, which is live and secure in a sleepy model under up to 50% Byzantine nodes, and a final ordering, which is a prefix of the optimistic ordering and ensures safety and liveness in an eventual lock-step synchronous model under up to 33% Byzantine nodes. Additionally, Slipstream integrates a payment system that allows for fast UTXO transaction confirmation independently of block ordering. Transactions are confirmed in three rounds during synchrony, and unconfirmed double spends are resolved in a novel way using the DAG structure.

This paper is a Systematization of Knowledge (SoK) on Directed Acyclic Graph (DAG)-based consensus protocols, analyzing their performance and trade-offs within the framework of consistency, availability, and partition tolerance inspired by the CAP theorem. We classify DAG-based consensus protocols into availability-focused and consistency-focused categories, exploring their design principles, core functionalities, and associated trade-offs. Furthermore, we examine key properties, attack vectors, and recent developments, providing insights into security, scalability, and fairness challenges. Finally, we identify research gaps and outline directions for advancing DAG-based consensus mechanisms.

26 Aug 2025

An n-vertex graph G is weakly F-saturated if G contains no copy of F and there exists an ordering of all edges in E(K_n)∖E(G) such that, when added one at a time, each edge creates a new copy of F. The minimum size of a weakly F-saturated graph G is called the weak saturation number wsat(n,F). We obtain exact values and new bounds for wsat(n,K_{s,t}) in the previously unaddressed range s+t < n < 3t-3, where 3≤s≤t. To prove lower bounds, we introduce a new method that takes into account connectivity properties of subgraphs of the complement G′ of a weakly saturated graph G. We construct an auxiliary hypergraph and show that a linear combination of its parameters always increases as edges of G′ are deleted. This gives a lower bound that is tight up to an additive constant.

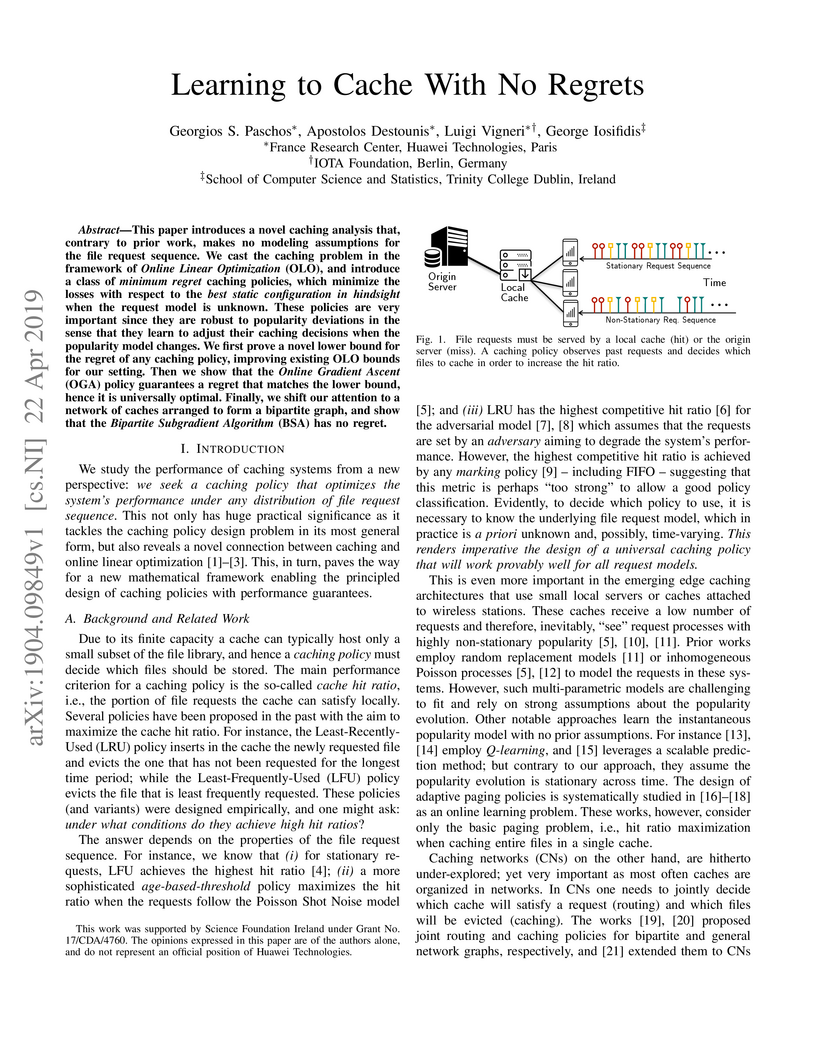

This paper introduces a novel caching analysis that, contrary to prior work, makes no modeling assumptions for the file request sequence. We cast the caching problem in the framework of Online Linear Optimization (OLO), and introduce a class of minimum regret caching policies, which minimize the losses with respect to the best static configuration in hindsight when the request model is unknown. These policies are important because they are robust to popularity deviations: they learn to adjust their caching decisions when the popularity model changes. We first prove a novel lower bound for the regret of any caching policy, improving existing OLO bounds for our setting. Then we show that the Online Gradient Ascent (OGA) policy guarantees a regret that matches the lower bound, hence it is universally optimal. Finally, we shift our attention to a network of caches arranged to form a bipartite graph, and show that the Bipartite Subgradient Algorithm (BSA) has no regret.

This paper presents a novel leaderless protocol (FPC-BI: Fast Probabilistic Consensus within Byzantine Infrastructures) with low communication complexity that allows a set of nodes to come to a consensus on the value of a single bit. The paper assumes that some of the nodes are Byzantine and thus controlled by an adversary who intends either to delay the consensus or to break it (meaning that at least two honest nodes come to different conclusions). We prove that, nevertheless, the protocol works with high probability when its parameters are suitably chosen. Along with this, the paper provides explicit estimates of the probability that the protocol finalizes in the consensus state within a given time. This protocol could be applied to reaching consensus in decentralized cryptocurrency systems. A special feature is that it makes use of a sequence of random numbers which are either provided by a trusted source or generated by the nodes themselves using some decentralized random number generating protocol. This increases the overall trustworthiness of the infrastructure. A core contribution of the paper is that it uses a very weak consensus to obtain a strong consensus on the value of a bit, which can relate to the validity of a transaction.

Consensus plays a crucial role in distributed ledger systems, impacting both scalability and decentralization. Many blockchain systems use a weighted lottery based on a scarce resource such as a stake, storage, memory, or computing power to select a committee whose members drive the consensus and are responsible for adding new information to the ledger. Therefore, ensuring a robust and fair committee selection process is essential for maintaining security, efficiency, and decentralization. There are two main approaches to randomized committee selection. In one approach, each validator candidate locally checks whether they are elected to the committee and reveals their proof during the consensus phase. In contrast, in the second approach, a sortition algorithm decides a fixed-sized committee that is globally verified. This paper focuses on the latter approach, with cryptographic sortition as a method for fair committee selection that guarantees a constant committee size. Our goal is to develop deterministic guarantees that strengthen decentralization. We introduce novel methods that provide deterministic bounds on the influence of adversaries within the committee, as evidenced by numerical experiments. This approach overcomes the limitations of existing protocols that only offer probabilistic guarantees, often providing large committees that are impractical for many quorum-based applications like atomic broadcast and randomness beacon protocols.

Rapidly growing distributed ledger technologies (DLTs) have recently received attention among researchers in both industry and academia. While many analyses exist, mainly of the Bitcoin and Ethereum networks, measurements for other crypto projects are lacking. This article addresses questions about tokenomics and wealth distributions in cryptocurrencies. We analyze the time-dependent statistical properties of top cryptocurrency holders for 14 different distributed ledger projects. The provided metrics include the approximated Zipf coefficient, Shannon entropy, Gini coefficient, and Nakamoto coefficient. We show that there are quantitative differences between coins (cryptocurrencies operating on their own independent network) and tokens (which operate on top of a smart contract platform). The presented results show that coins and tokens have different values of the approximated Zipf coefficient and different centralization levels. This work is relevant for DLTs as it might be useful in modeling and improving the committee selection process, especially in decentralized autonomous organizations (DAOs) and delegated proof of stake (DPoS) blockchains.
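Three of the metrics named above can be computed from a list of holder balances as in the sketch below. The paper's exact definitions and data pipeline are not given in the abstract, so the formulas are the common textbook ones, and the 50% threshold for the Nakamoto coefficient, the function names, and the sample balances are assumptions for illustration.

```python
import math

def gini(balances):
    """Gini coefficient: 0 for perfect equality, approaching 1 for concentration."""
    xs = sorted(balances)
    n, total = len(xs), sum(xs)
    weighted = sum((i + 1) * x for i, x in enumerate(xs))
    return 2 * weighted / (n * total) - (n + 1) / n

def shannon_entropy(balances):
    """Entropy in bits of the balance shares; higher means more evenly spread."""
    total = sum(balances)
    return -sum((b / total) * math.log2(b / total) for b in balances if b > 0)

def nakamoto_coefficient(balances, threshold=0.5):
    """Smallest number of top holders whose combined share exceeds the threshold."""
    total = sum(balances)
    running = 0
    for k, b in enumerate(sorted(balances, reverse=True), start=1):
        running += b
        if running > threshold * total:
            return k
    return len(balances)

# Hypothetical holder balances, not data from the study.
equal = [1, 1, 1, 1]
concentrated = [97, 1, 1, 1]
```

On the two sample distributions, the evenly spread one yields a zero Gini coefficient and needs three holders to pass half of the supply, while the concentrated one is dominated by a single holder.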

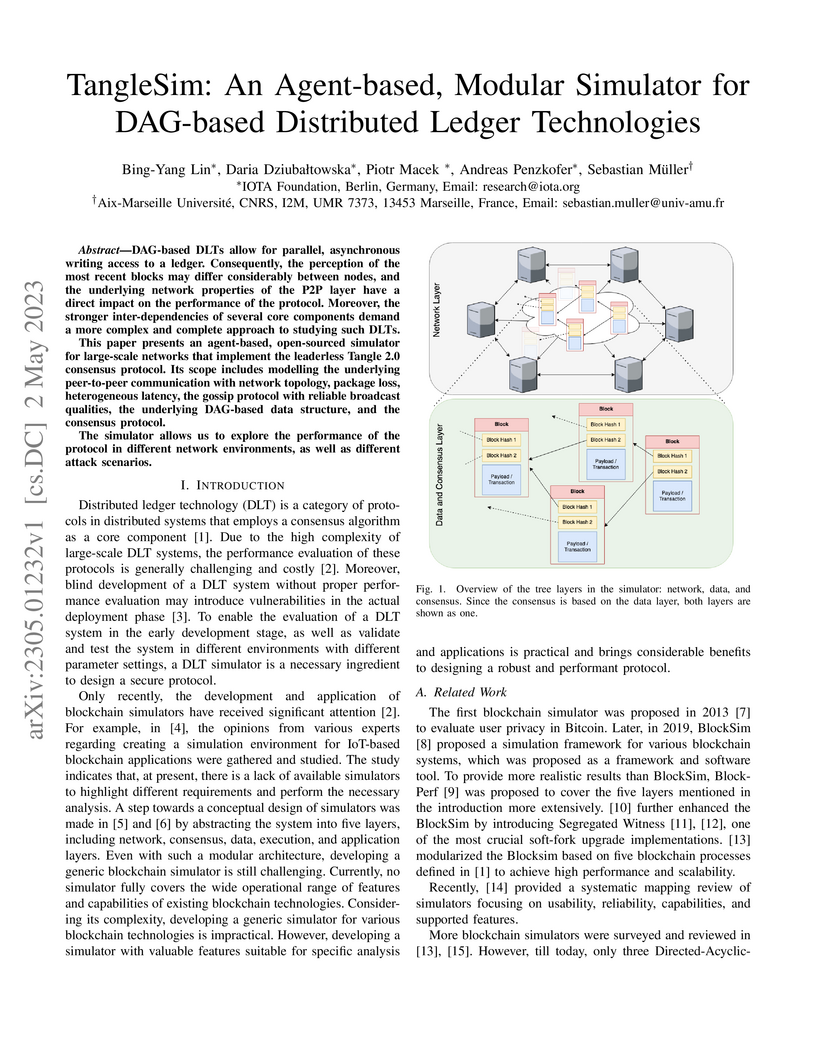

DAG-based DLTs allow for parallel, asynchronous write access to a ledger. Consequently, the perception of the most recent blocks may differ considerably between nodes, and the underlying network properties of the P2P layer have a direct impact on the performance of the protocol. Moreover, the stronger inter-dependencies of several core components demand a more complex and complete approach to studying such DLTs. This paper presents an agent-based, open-sourced simulator for large-scale networks that implement the leaderless Tangle 2.0 consensus protocol. Its scope includes modelling the underlying peer-to-peer communication with network topology, packet loss, heterogeneous latency, the gossip protocol with reliable broadcast qualities, the underlying DAG-based data structure, and the consensus protocol. The simulator allows us to explore the performance of the protocol in different network environments, as well as different attack scenarios.

A significant portion of research on distributed ledgers has focused on circumventing the limitations of leader-based blockchains, mainly in terms of scalability, decentralization and power consumption. Leaderless architectures based on directed acyclic graphs (DAGs) avoid many of these limitations altogether, but their increased flexibility and performance come at the cost of increased design complexity, so their potential has remained largely unexplored. Management of write access to these ledgers presents a major challenge because ledger updates may be made in parallel, hence transactions cannot simply be serialised and prioritised according to token fees paid to validators. In this work, we propose an access control scheme for leaderless DAG-based ledgers which is based on consuming credits rather than paying fees in the base token. We outline a general model for this new approach and provide some simulation results showing promising performance boosts.
