MetaGPT

02 Aug 2025

Google DeepMind, University of Illinois at Urbana-Champaign, Université de Montréal, University of Southern California, Stanford University, Mila - Quebec AI Institute, The Hong Kong Polytechnic University, Yale University, University of Georgia, Nanyang Technological University, Microsoft, Argonne National Laboratory, Duke University, HKUST, King Abdullah University of Science and Technology, University of Sydney, The Ohio State University, Penn State University, MetaGPT

A comprehensive, brain-inspired framework integrates diverse research areas of LLM-based intelligent agents, encompassing individual architecture, collaborative systems, and safety. The framework formally conceptualizes agent components, maps AI capabilities to human cognition to identify research gaps, and outlines a roadmap for developing autonomous, adaptive, and safe AI.

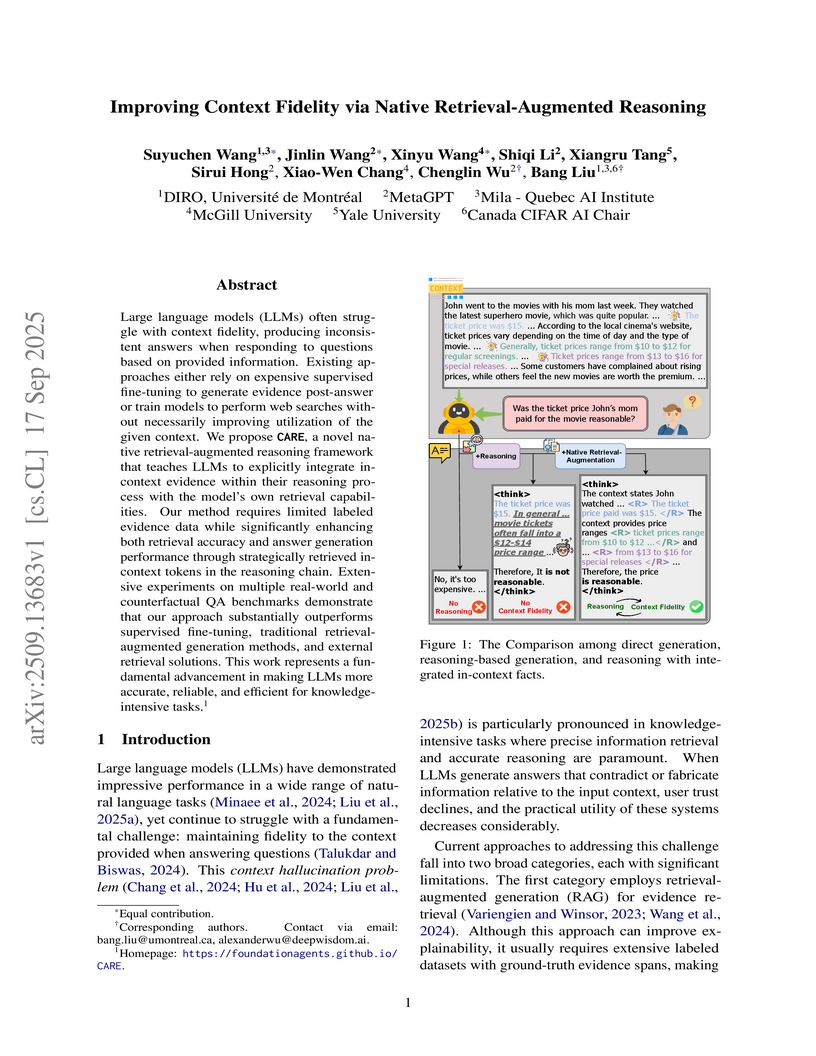

Large language models (LLMs) often struggle with context fidelity, producing inconsistent answers when responding to questions based on provided information. Existing approaches either rely on expensive supervised fine-tuning to generate evidence after the answer, or train models to perform web searches without necessarily improving how they use the given context. We propose CARE, a novel native retrieval-augmented reasoning framework that teaches LLMs to explicitly integrate in-context evidence into their reasoning process by using the model's own retrieval capabilities. Our method requires only limited labeled evidence data while significantly enhancing both retrieval accuracy and answer generation performance through strategically retrieved in-context tokens in the reasoning chain. Extensive experiments on multiple real-world and counterfactual QA benchmarks demonstrate that our approach substantially outperforms supervised fine-tuning, traditional retrieval-augmented generation methods, and external retrieval solutions. This work represents a fundamental advancement in making LLMs more accurate, reliable, and efficient for knowledge-intensive tasks.
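The CARE abstract describes reasoning chains that explicitly quote evidence from the provided context and reports improved retrieval accuracy. The snippet below is a minimal sketch of how such in-context retrieval could be checked. It assumes that evidence is wrapped in hypothetical `<retrieval>...</retrieval>` tags, since the abstract does not name a format. It measures the fraction of retrieved spans that actually occur in the context. The function names are illustrative, and this is not CARE's training objective or reward.

```python
import re

# Hypothetical delimiter: the abstract does not say how retrieved evidence is
# marked in the reasoning chain, so <retrieval>...</retrieval> is assumed here.
RETRIEVAL_SPAN = re.compile(r"<retrieval>(.*?)</retrieval>", re.DOTALL)


def extract_evidence(reasoning: str) -> list[str]:
    """Return the non-empty evidence spans quoted inside the reasoning chain."""
    spans = (s.strip() for s in RETRIEVAL_SPAN.findall(reasoning))
    return [s for s in spans if s]


def _normalize(text: str) -> str:
    # Collapse runs of whitespace so line breaks in the context do not cause misses.
    return " ".join(text.split())


def evidence_fidelity(reasoning: str, context: str) -> float:
    """Fraction of retrieved spans found verbatim (up to whitespace) in the context.

    Returns 0.0 when the chain retrieves nothing, so reasoning that never
    grounds itself in the provided context earns no credit.
    """
    spans = extract_evidence(reasoning)
    if not spans:
        return 0.0
    ctx = _normalize(context)
    hits = sum(1 for s in spans if _normalize(s) in ctx)
    return hits / len(spans)
```

For example, given the context "The Eiffel Tower was completed in 1889. It is located in Paris.", a chain that quotes one sentence from the context and one invented sentence scores 0.5. The check uses exact substring matching because the abstract stresses fidelity to the given context. A fuzzier matcher would tolerate paraphrase, but it would no longer distinguish true retrieval from hallucinated evidence.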


The advent of large language models (LLMs) has catalyzed a transformative shift in artificial intelligence, paving the way for advanced intelligent agents capable of sophisticated reasoning, robust perception, and versatile action across diverse domains. As these agents increasingly drive AI research and practical applications, their design, evaluation, and continuous improvement present intricate, multifaceted challenges. This book provides a comprehensive overview, framing intelligent agents within modular, brain-inspired architectures that integrate principles from cognitive science, neuroscience, and computational research. We structure our exploration into four interconnected parts. First, we investigate the modular foundation of intelligent agents, systematically mapping their cognitive, perceptual, and operational modules onto analogous human brain functionalities and elucidating core components such as memory, world modeling, reward processing, goals, and emotion. Second, we discuss self-enhancement and adaptive evolution mechanisms, exploring how agents autonomously refine their capabilities, adapt to dynamic environments, and achieve continual learning through automated optimization paradigms. Third, we examine multi-agent systems, investigating the collective intelligence that emerges from agent interactions, cooperation, and societal structures. Finally, we address the critical imperative of building safe and beneficial AI systems, emphasizing intrinsic and extrinsic security threats, ethical alignment, robustness, and the practical mitigation strategies necessary for trustworthy real-world deployment. By synthesizing modular AI architectures with insights from different disciplines, this survey identifies key research challenges and opportunities, encouraging innovations that harmonize technological advancement with meaningful societal benefit.
