Prince of Songkla University

13 Sep 2024

Researchers at Prince of Songkla University developed QED, a three-phase static analysis toolchain to assist organizations in identifying quantum-vulnerable cryptography within software executables without source code. The tool significantly reduces the manual workload for post-quantum cryptography migration by over 90% and demonstrates high accuracy in detecting vulnerable dependencies in both synthetic and real-world binaries.

13 Jan 2025

Superconducting nanowire single-photon detectors (SNSPDs) are the current leading technology for the detection of single photons in the near-infrared (NIR) and short-wave infrared (SWIR) spectral regions, due to record performance in terms of detection efficiency, low dark count rate, minimal timing jitter, and high maximum count rates. The various geometry and design parameters of SNSPDs are often carefully tailored to specific applications, resulting in challenges in optimising each performance characteristic without adversely impacting others. In particular, when scaling to larger array formats, the key challenge is to manage the heat load generated by the many readout cables in the cryogenic cooling system. Here we demonstrate a practical, self-contained 64-pixel SNSPD array system which exhibits high performance of all operational parameters, for use in the strategically important SWIR spectral region. The detector is an 8×8 array of 27.5 × 27.8 μm pixels on a 30 μm pitch, which leads to an 80–85% fill factor. At a wavelength of 1550 nm, a uniform average per-pixel photon detection efficiency of 77.7% was measured and the observed system detection efficiency (SDE) across the entire array was 65%. A full performance characterisation is presented, including a dark count rate of 20 cps per pixel, full-width-half-maximum (FWHM) jitter of 100 ps per pixel, a 3-dB maximum count rate of 645 Mcps and no evidence of crosstalk at the 0.1% level. This camera system therefore facilitates a variety of picosecond time-resolved measurement-based applications that include biomedical imaging, quantum communications, and long-range single-photon light detection and ranging (LiDAR) and 3D imaging.

27 Jan 2025

Entanglement distribution in quantum networks will enable next-generation technologies for quantum-secured communications, distributed quantum computing and sensing. Future quantum networks will require dense connectivity, allowing multiple users to share entanglement in a reconfigurable and multiplexed manner, while long-distance connections are established through the teleportation of entanglement, or entanglement swapping. While several recent works have demonstrated fully connected, local multi-user networks based on multiplexing, extending this to a global network architecture of interconnected local networks remains an outstanding challenge. Here we demonstrate the next stage in the evolution of multiplexed quantum networks: a prototype global reconfigurable network where entanglement is routed and teleported in a flexible and multiplexed manner between two local multi-user networks composed of four users each. At the heart of our network is a programmable 8x8-dimensional multi-port circuit that harnesses the natural mode-mixing process inside a multi-mode fibre to implement on-demand high-dimensional operations on two independent photons carrying eight transverse-spatial modes. Our circuit design allows us to break away from the limited planar geometry and bypass the control and fabrication challenges of conventional integrated photonic platforms. Our demonstration showcases the potential of this architecture for enabling large-scale, global quantum networks that offer versatile connectivity while being fully compatible with an existing communications infrastructure.

In a normal indoor environment, Raman spectra are affected by noise that often conceals spectral peaks, making the spectra difficult to interpret. This paper proposes a deep learning (DL) based noise reduction technique for Raman spectroscopy. The proposed DL network is developed with several training and test sets of noisy Raman spectra. The proposed technique is applied to denoise spectra, and its performance is compared with that of different wavelet noise reduction methods. Output signal-to-noise ratio (SNR), root-mean-square error (RMSE) and mean absolute percentage error (MAPE) are the performance evaluation indices. It is shown that the output SNR of the proposed noise reduction technique is 10.24 dB greater than that of the wavelet noise reduction method, while the RMSE and the MAPE are 292.63 and 10.09, which are much better than those of the wavelet method.
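The three evaluation indices named above can be sketched as follows. This is a minimal illustration using common textbook definitions, which are an assumption here since the abstract does not state the exact formulas; the function names and the toy spectra are hypothetical.

```python
import math

def output_snr_db(clean, denoised):
    """Output SNR in dB: reference signal power over residual error power."""
    signal = sum(c * c for c in clean)
    residual = sum((c - d) ** 2 for c, d in zip(clean, denoised))
    return 10.0 * math.log10(signal / residual)

def rmse(clean, denoised):
    """Root-mean-square error between reference and denoised spectra."""
    squared = sum((c - d) ** 2 for c, d in zip(clean, denoised))
    return math.sqrt(squared / len(clean))

def mape(clean, denoised):
    """Mean absolute percentage error; assumes no reference value is zero."""
    ratios = sum(abs((c - d) / c) for c, d in zip(clean, denoised))
    return 100.0 * ratios / len(clean)

# Toy intensities (hypothetical, not data from the paper).
clean = [100.0, 200.0, 400.0]
denoised = [110.0, 190.0, 400.0]
print(output_snr_db(clean, denoised), rmse(clean, denoised), mape(clean, denoised))
```

A higher output SNR and lower RMSE and MAPE indicate better denoising, which is the sense in which the abstract compares the DL network against wavelet methods.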

15 Oct 2015

A quantum algorithm is an algorithm for solving mathematical problems using quantum systems encoded as information, which is found to outperform classical algorithms in some specific cases. The objective of this study is to develop a quantum algorithm for finding the roots of nth degree polynomials where n is any positive integer. In classical algorithms, the resources required for solving this problem increase drastically when n increases, and it would be impossible to practically solve the problem when n is large. It was found that any polynomial can be rearranged into a corresponding companion matrix, whose eigenvalues are the roots of the polynomial. This leads to the possibility of a quantum algorithm in which the amount of computational resources increases as a polynomial of n. In this study, we construct a quantum circuit representing the companion matrix and use an eigenvalue estimation technique to find the roots of the polynomial.
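The companion-matrix fact that the abstract relies on can be checked classically. The sketch below is not the quantum circuit from the paper; it only builds the companion matrix of a monic polynomial with NumPy and confirms that its eigenvalues are the roots. The helper names and the example polynomial are illustrative assumptions.

```python
import numpy as np

def companion_matrix(coeffs):
    """Companion matrix of x^n + c[n-1] x^(n-1) + ... + c[1] x + c[0].

    coeffs = [c0, c1, ..., c_{n-1}] (the leading coefficient is assumed to be 1).
    """
    c = np.asarray(coeffs, dtype=float)
    n = c.size
    m = np.zeros((n, n))
    m[1:, :-1] = np.eye(n - 1)  # ones on the subdiagonal
    m[:, -1] = -c               # last column holds the negated coefficients
    return m

def roots_via_companion(coeffs):
    """Roots of the monic polynomial, obtained as eigenvalues of its companion matrix."""
    return np.linalg.eigvals(companion_matrix(coeffs))

# x^3 - 6x^2 + 11x - 6 = (x - 1)(x - 2)(x - 3)
roots = np.sort(roots_via_companion([-6.0, 11.0, -6.0]).real)
print(roots)
```

In the quantum setting described above, the classical eigenvalue solver would be replaced by eigenvalue estimation on a circuit encoding this matrix.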

25 Apr 2023

Close encounters between stars in star forming regions are important as they can perturb or destroy protoplanetary discs, young planetary systems, and stellar multiple systems. We simulate simple, virialised, equal-mass N-body star clusters and find that both the rate and total number of encounters between stars vary by factors of several in statistically identical clusters due to the stochastic/chaotic details of orbits and stellar dynamics. Encounters tend to rapidly 'saturate' in the core of a cluster, with stars there each having many encounters, while more distant stars have none. However, we find that the fraction of stars that have had at least one encounter within a particular distance grows in the same way (scaling with crossing time and half-mass radius) in all clusters, and we present a new (empirical) way of estimating the fraction of stars that have had at least one encounter at a particular distance.

13 Oct 2023

We consider dark energy models obtained from the general conformal transformation of the Kropina metric, representing an (α,β) type Finslerian geometry, constructed as the ratio of the square of a Riemannian metric α, and of the one-form β. Conformal symmetries do appear in many fields of physics, and they may play a fundamental role in the understanding of the Universe. We investigate the possibility of obtaining conformal theories of gravity in the osculating Barthel-Kropina geometric framework, where gravitation is described by an extended Finslerian type model, with the metric tensor depending on both the base space coordinates, and on a vector field. We show that it is possible to formulate a family of conformal Barthel-Kropina theories in an osculating geometry with second-order field equations, depending on the properties of the conformal factor, whose presence leads to the appearance of an effective scalar field, of geometric origin, in the gravitational field equations. The cosmological implications of the theory are investigated in detail, by assuming a specific relation between the component of the one-form of the Kropina metric, and the conformal factor. The cosmological evolution is thus determined by the initial conditions of the scalar field, and a free parameter of the model. We analyze in detail three cosmological models, corresponding to different values of the theory parameters. Our results show that the conformal Barthel-Kropina model could give an acceptable description of the observational data, and may represent a theoretically attractive alternative to the standard ΛCDM cosmology.

This study investigates the dynamics of a non-minimally coupled (NMC) scalar field in modified gravity, employing the Noether gauge symmetry (NGS) approach to systematically derive exact cosmological solutions. By formulating a point-like Lagrangian and analyzing the corresponding Euler-Lagrange equations, conserved quantities were identified, reducing the complexity of the dynamical system. Through the application of Noether symmetry principles, the scalar field potential was found to follow a power-law form, explicitly dependent on the coupling parameter ξ, influencing the evolution of the universe. The study further explores inflationary dynamics, showing that for specific values of ξ, the potential resembles the Higgs-like structure, contributing to a deeper understanding of early cosmic expansion. To enhance the theoretical framework, the Eisenhart lift method was introduced, providing a geometric interpretation of the system by embedding the dynamical variables within an extended field space. This approach established a connection between the kinetic terms and Killing vectors, offering an alternative perspective on the conserved quantities. The study also derived geodesic equations governing the evolution of the system, reinforcing the link between symmetry-based techniques and fundamental cosmological properties.

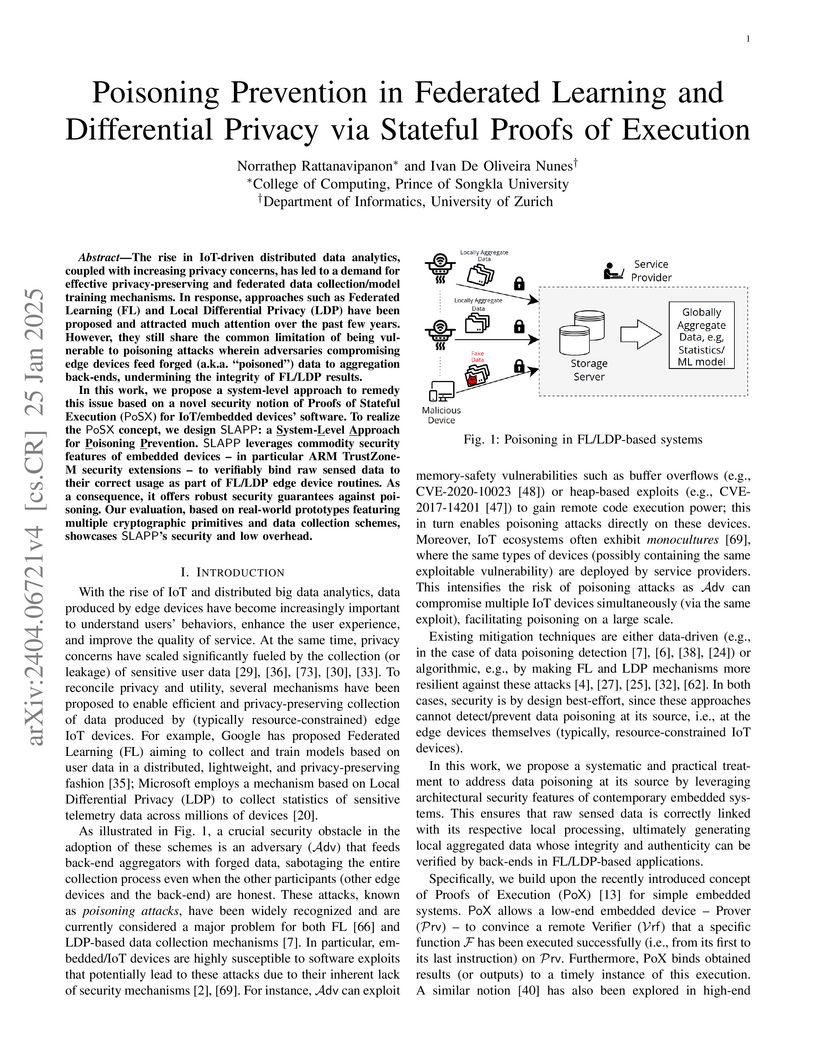

Poisoning Prevention in Federated Learning and Differential Privacy via Stateful Proofs of Execution

The rise in IoT-driven distributed data analytics, coupled with increasing privacy concerns, has led to a demand for effective privacy-preserving and federated data collection/model training mechanisms. In response, approaches such as Federated Learning (FL) and Local Differential Privacy (LDP) have been proposed and attracted much attention over the past few years. However, they still share the common limitation of being vulnerable to poisoning attacks wherein adversaries compromising edge devices feed forged (a.k.a. poisoned) data to aggregation back-ends, undermining the integrity of FL/LDP results.

In this work, we propose a system-level approach to remedy this issue based on a novel security notion of Proofs of Stateful Execution (PoSX) for IoT/embedded devices' software. To realize the PoSX concept, we design SLAPP: a System-Level Approach for Poisoning Prevention. SLAPP leverages commodity security features of embedded devices, in particular ARM TrustZone-M security extensions, to verifiably bind raw sensed data to their correct usage as part of FL/LDP edge device routines. As a consequence, it offers robust security guarantees against poisoning. Our evaluation, based on real-world prototypes featuring multiple cryptographic primitives and data collection schemes, showcases SLAPP's security and low overhead.
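The poisoning problem described above can be illustrated with a toy LDP mechanism. The sketch below uses binary randomized response, chosen here only as a simple, well-known LDP scheme; it is not SLAPP and not a mechanism taken from the paper. All names, the truth probability, and the population sizes are hypothetical.

```python
import random

def randomized_response(bit, p_truth, rng):
    """Report the true bit with probability p_truth, otherwise report its flip."""
    return bit if rng.random() < p_truth else 1 - bit

def estimate_fraction(reports, p_truth):
    """Debias the observed rate of 1s to estimate the true fraction of 1s."""
    observed = sum(reports) / len(reports)
    return (observed - (1.0 - p_truth)) / (2.0 * p_truth - 1.0)

rng = random.Random(0)                     # fixed seed for repeatability
p_truth = 0.75
true_bits = [1] * 6000 + [0] * 14000       # true fraction of 1s is 0.30

# Honest devices perturb their real data before reporting.
honest = [randomized_response(b, p_truth, rng) for b in true_bits]

# Compromised devices skip the mechanism and always report 1 (forged data).
poisoned = honest + [1] * 2000

honest_estimate = estimate_fraction(honest, p_truth)
poisoned_estimate = estimate_fraction(poisoned, p_truth)
print(honest_estimate, poisoned_estimate)
```

Because the aggregator cannot tell forged reports from honestly perturbed ones, a modest number of compromised devices shifts the estimate noticeably. This is the gap that binding sensed data to a proof of correct execution is meant to close.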

We consider a length-preserving biconnection gravitational theory, inspired by information geometry, which extends general relativity by using the mutual curvature as the fundamental object describing gravity. The two connections used to build up the theory are the Schrödinger connection, and its dual. It can be seen that the dual of a non-metric Schrödinger connection possesses torsion, even if the Schrödinger connection itself does not, and consequently the pair (M, g, ∇*) is a quasi-statistical manifold. The field equations are postulated to have the form of the standard Einstein equations, but with the Ricci tensor and scalar replaced with the mutual curvature tensor and scalar, resulting in additional torsion-dependent terms. The covariant divergence of the matter energy-momentum does not vanish in this theory. We derive the equation of motion for massive particles, which shows the presence of an extra force. The Newtonian limit of the equations of motion is also considered. We explore the cosmological implications by deriving the generalized Friedmann equations for the FLRW geometry. They contain additional terms that can be interpreted as describing an effective, geometric type dark energy. We examine two cosmological models: one with conserved matter, and one where dark energy and pressure are related by a linear equation of state. The predictions of both models are compared with a set of observational values of the Hubble function, and with the standard ΛCDM model. Length-preserving biconnection gravity models fit the observational data well, and also align with ΛCDM at low redshifts (z<3). The obtained results suggest that a modified biconnection geometry could explain the late-time acceleration, as well as the formation of the supermassive black holes, since they predict a different age of our Universe as compared to standard cosmology.

28 Aug 2024

The use of high-dimensional systems for quantum communication opens interesting perspectives, such as increased information capacity and noise resilience. In this context, it is crucial to certify that a given quantum channel can reliably transmit high-dimensional quantum information. Here we develop efficient methods for the characterization of high-dimensional quantum channels. We first present a notion of dimensionality of quantum channels, and develop efficient certification methods for this quantity. We consider a simple prepare-and-measure setup, and provide witnesses for both a fully and a partially trusted scenario. In turn we apply these methods to a photonic experiment and certify dimensionalities up to 59 for a commercial graded-index multi-mode optical fiber. Moreover, we present extensive numerical simulations of the experiment, providing an accurate noise model for the fiber and exploring the potential of more sophisticated witnesses. Our work demonstrates the efficient characterization of high-dimensional quantum channels, a key ingredient for future quantum communication technologies.

27 Sep 2024

Control Flow Attestation (CFA) offers a means to detect control flow hijacking attacks on remote devices, enabling verification of their runtime trustworthiness. CFA generates a trace (CFLog) containing the destination of all branching instructions executed. This allows a remote Verifier (Vrf) to inspect the execution control flow on a potentially compromised Prover (Prv) before trusting that a value/action was correctly produced/performed by Prv. However, while CFA can be used to detect runtime compromises, it cannot guarantee the eventual delivery of the execution evidence (CFLog) to Vrf. In turn, a compromised Prv may refuse to send CFLog to Vrf, preventing its analysis to determine the exploit's root cause and appropriate remediation actions.

In this work, we propose TRACES: TEE-based Runtime Auditing for Commodity Embedded Systems. TRACES guarantees reliable delivery of periodic runtime reports even when Prv is compromised. This enables secure runtime auditing in addition to best-effort delivery of evidence in CFA. TRACES also supports a guaranteed remediation phase, triggered upon compromise detection to ensure that identified runtime vulnerabilities can be reliably patched. To the best of our knowledge, TRACES is the first system to provide this functionality on commodity devices (i.e., without requiring custom hardware modifications). To that end, TRACES leverages support from the ARM TrustZone-M Trusted Execution Environment (TEE). To assess practicality, we implement and evaluate a fully functional (open-source) prototype of TRACES atop the commodity ARM Cortex-M33 micro-controller unit.
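The Verifier-side check that a CFLog enables can be sketched as a replay of logged branch destinations against the program's control flow graph. This is a simplified illustration, not the TRACES implementation: the graph encoding, block labels, and function name are hypothetical, and a real scheme would also authenticate the log and track return addresses.

```python
def verify_cflog(cfg_edges, entry, cflog):
    """Replay a log of branch destinations against the allowed CFG edges.

    cfg_edges maps each basic-block label to the set of labels it may branch to.
    Returns (True, None) if every transition is allowed, otherwise
    (False, index_of_first_illegal_entry).
    """
    current = entry
    for step, dest in enumerate(cflog):
        if dest not in cfg_edges.get(current, set()):
            return False, step
        current = dest
    return True, None

# Hypothetical control flow graph of a small sensing routine.
cfg = {
    "main": {"read_sensor"},
    "read_sensor": {"check"},
    "check": {"report", "error"},
    "report": set(),
    "error": set(),
}

benign_log = ["read_sensor", "check", "report"]
hijacked_log = ["read_sensor", "check", "shellcode"]

print(verify_cflog(cfg, "main", benign_log))
print(verify_cflog(cfg, "main", hijacked_log))
```

The check only works if the log actually reaches the Verifier, which is the delivery guarantee the abstract identifies as missing from plain CFA.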

04 Jul 2025

Remote run-time attestation methods, including Control Flow Attestation (CFA) and Data Flow Attestation (DFA), have been proposed to generate precise evidence of execution's control flow path (in CFA) and optionally execution data inputs (in DFA) on a remote and potentially compromised embedded device, hereafter referred to as a Prover (Prv). Recent advances in run-time attestation architectures are also able to guarantee that a remote Verifier (Vrf) reliably receives this evidence from Prv, even when Prv's software state is fully compromised. This, in theory, enables secure "run-time auditing" in addition to best-effort attestation, i.e., it guarantees that Vrf can examine execution evidence to identify previously unknown compromises as soon as they are exploited, pinpoint their root cause(s), and remediate them. However, prior work has for the most part focused on securely implementing Prv's root of trust (responsible for generating authentic run-time evidence), leaving Vrf's perspective in this security service unexplored. In this work, we argue that run-time attestation and auditing are only truly useful if Vrf can effectively analyze received evidence. From this premise, we characterize different types of evidence produced by existing run-time attestation/auditing architectures in terms of Vrf's ability to detect and remediate (previously unknown) vulnerabilities. As a case study for practical uses of run-time evidence by Vrf, we propose SABRE: a Security Analysis and Binary Repair Engine. SABRE showcases how Vrf can systematically leverage run-time evidence to detect control flow attacks, pinpoint corrupted control data and specific instructions used to corrupt them, and leverage this evidence to automatically generate binary patches to buffer overflow and use-after-free vulnerabilities without source code knowledge.

23 Nov 2024

This paper introduces an analytical formula for the fractional-order conditional moments of nonlinear drift constant elasticity of variance (NLD-CEV) processes under regime switching, governed by continuous-time finite-state irreducible Markov chains. By employing a hybrid system approach, we derive exact closed-form expressions for these moments across arbitrary fractional orders and regime states, thereby enhancing the analytical tractability of NLD-CEV models under stochastic regimes. Our methodology hinges on formulating and solving a complex system of interconnected partial differential equations derived from the Feynman-Kac formula for switching diffusions. To illustrate the practical relevance of our approach, Monte Carlo simulations for processes with Markovian switching are applied to validate the accuracy and computational efficiency of the analytical formulas. Furthermore, we apply our findings to the valuation of financial derivatives within a dynamic nonlinear mean-reverting regime-switching framework, which demonstrates significant improvements over traditional methods. This work offers substantial contributions to financial modeling and derivative pricing by providing a robust tool for practitioners and researchers who are dealing with complex stochastic environments.

In this study, we investigate traversable wormholes within the framework of Einstein-Euler-Heisenberg (EEH) nonlinear electrodynamics. By employing the Einstein field equations with quantum corrections from the Euler-Heisenberg Lagrangian, we derive wormhole solutions and examine their geometric, physical, and gravitational properties. Two redshift function models are analyzed: one with a constant redshift function and another with a radial-dependent function Φ = r₀/r. Our analysis demonstrates that the inclusion of quantum corrections significantly influences the wormhole geometry, particularly by mitigating the need for exotic matter. The shape function and energy density are derived and examined in both models, revealing that the energy conditions, including the weak and null energy conditions (WEC and NEC), are generally violated at the wormhole throat. However, satisfaction of the strong energy condition (SEC) is observed, consistent with the nature of traversable wormholes. The Arnowitt-Deser-Misner (ADM) mass of the EEH wormhole is calculated, showing contributions from geometric, electromagnetic, and quantum corrections. The mass decreases with the Euler-Heisenberg correction parameter, indicating that quantum effects contribute significantly to the wormhole mass. Furthermore, we investigate gravitational lensing within the EEH wormhole geometry using the Gauss-Bonnet theorem, revealing that the deflection angle is influenced by both the electric charge and the nonlinear parameter. The nonlinear electrodynamic corrections enhance the gravitational lensing effect, particularly at smaller impact parameters.

20 May 2025

Embedded devices are increasingly ubiquitous and vital, often supporting safety-critical functions. However, due to strict cost and energy constraints, they are typically implemented with Micro-Controller Units (MCUs) that lack advanced architectural security features. Within this space, recent efforts have created low-cost architectures capable of generating Proofs of Execution (PoX) of software on potentially compromised MCUs. This capability can ensure the integrity of sensor data from the outset, by binding sensed results to an unforgeable cryptographic proof of execution on edge sensor MCUs. However, the security of existing PoX requires the proven execution to occur atomically. This requirement precludes the application of PoX to (1) time-shared systems, and (2) applications with real-time constraints, creating a direct conflict between execution integrity and the real-time availability needs of several embedded system uses.

In this paper, we formulate a new security goal called Real-Time Proof of Execution (RT-PoX) that retains the integrity guarantees of classic PoX while enabling its application to existing real-time systems. This is achieved by relaxing the atomicity requirement of PoX while dispatching interference attempts from other potentially malicious tasks (or compromised operating systems) executing on the same device. To realize the RT-PoX goal, we develop Provable Execution Architecture for Real-Time Systems (PEARTS). To the best of our knowledge, PEARTS is the first PoX system that can be directly deployed alongside a commodity embedded real-time operating system (FreeRTOS). This enables both real-time scheduling and execution integrity guarantees on commodity MCUs. To showcase this capability, we develop a PEARTS open-source prototype atop FreeRTOS on a single-core ARM Cortex-M33 processor. We evaluate and report on PEARTS security and (modest) overheads.

We review recent developments in cosmological models based on Finsler geometry and extensions of general relativity within this framework. Finsler geometry generalizes Riemannian geometry by allowing the metric tensor to depend on position and an additional internal degree of freedom, typically represented by a vector field at each point of the spacetime manifold. We explore whether Finsler-type geometries can describe gravitational interaction and cosmological dynamics. In particular, we examine the Barthel connection and (α,β) geometries, where α is a Riemannian metric and β is a one-form. For a specific construction of β, the Barthel connection coincides with the Levi-Civita connection of the associated Riemann metric. We review gravitational field and cosmological evolution in three geometries: Barthel-Randers (F = α + β), Barthel-Kropina (F = α²/β), and the conformally transformed Barthel-Kropina geometry. After presenting the mathematical foundations of Finslerian-type modified gravity theories, we derive generalized Friedmann equations in these geometries assuming a Friedmann-Lemaître-Robertson-Walker type metric. We also present the matter-energy balance equations, interpreting them from the perspective of thermodynamics with particle creation. The cosmological properties of Barthel-Randers and Barthel-Kropina models are explored in detail. The additional geometric terms in these models can be interpreted as an effective dark energy component, generating an effective cosmological constant. Several cosmological solutions are compared with observational data (Cosmic Chronometers, Type Ia Supernovae, Baryon Acoustic Oscillations) using MCMC analysis. A comparison with the ΛCDM model shows that Finslerian models fit observational data well, suggesting they offer a viable alternative to general relativity.

The degree of malignancy of osteosarcoma and its tendency to metastasize/spread mainly depend on the pathological grade (determined by observing the morphology of the tumor under a microscope). The purpose of this study is to use artificial intelligence to classify osteosarcoma histological images and to assess tumor survival and necrosis, which will help doctors reduce their workload, improve the accuracy of osteosarcoma cancer detection, and make a better prognosis for patients. The study proposes a typical transformer image classification framework by integrating noise reduction convolutional autoencoder and feature cross fusion learning (NRCA-FCFL) to classify osteosarcoma histological images. Noise reduction convolutional autoencoder could well denoise histological images of osteosarcoma, resulting in more pure images for osteosarcoma classification. Moreover, we introduce feature cross fusion learning, which integrates two scale image patches, to sufficiently explore their interactions by using additional classification tokens. As a result, a refined fusion feature is generated, which is fed to the residual neural network for label predictions. We conduct extensive experiments to evaluate the performance of the proposed approach. The experimental results demonstrate that our method outperforms the traditional and deep learning approaches on various evaluation metrics, with an accuracy of 99.17% to support osteosarcoma diagnosis.

05 Dec 2008

An octopus program is demonstrated to generate electron energy levels in three-dimensional geometrical potential wells. The wells are modeled to have shapes similar to cone, pyramid and truncated-pyramid. To simulate the electron energy levels in quantum mechanical scheme like the ones in parabolic band approximation scheme, the program is run initially to find a suitable electron mass fraction that can produce ground-state energies in the wells as close to those in quantum dots as possible and further to simulate excited-state energies. The programs also produce wavefunctions for exploring and determining their degeneracies and vibrational normal modes.

23 Aug 2023

Widespread adoption and growing popularity of embedded/IoT/CPS devices make them attractive attack targets. On low-to-mid-range devices, security features are typically few or none due to various constraints. Such devices are thus subject to malware-based compromise. One popular defensive measure is Remote Attestation (RA) which allows a trusted entity to determine the current software integrity of an untrusted remote device.

For higher-end devices, RA is achievable via secure hardware components. For low-end (bare metal) devices, minimalistic hybrid (hardware/software) RA is effective, which incurs some hardware modifications. That leaves certain mid-range devices (e.g., ARM Cortex-A family) equipped with standard hardware components, e.g., a memory management unit (MMU) and perhaps a secure boot facility. In this space, seL4 (a verified microkernel with guaranteed process isolation) is a promising platform for attaining RA. HYDRA made a first step towards this, albeit without achieving any verifiability or provable guarantees.

This paper picks up where HYDRA left off by constructing a PARseL architecture that separates all user-dependent components from the TCB. This leads to much stronger isolation guarantees, based on seL4 alone, and facilitates formal verification. In PARseL, we use formal verification to obtain several security properties for the isolated RA TCB, including: memory safety, functional correctness, and secret independence. We implement PARseL in F* and specify/prove expected properties using Hoare logic. Next, we automatically translate the F* implementation to C using KaRaMeL, which preserves verified properties of PARseL C implementation (atop seL4). Finally, we instantiate and evaluate PARseL on a commodity platform, a SabreLite embedded device.
