Rolls-Royce plc

04 Jan 2021

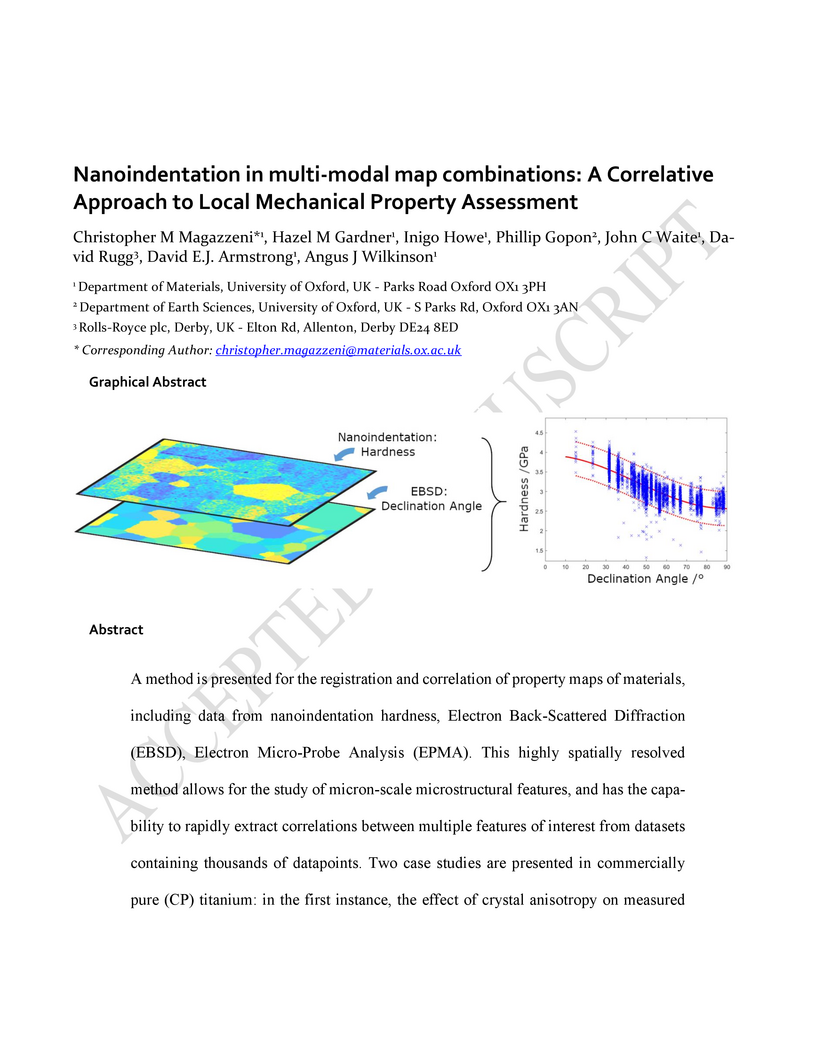

A method is presented for the registration and correlation of intrinsic property maps of materials, including data from nanoindentation hardness, Electron Back-Scattered Diffraction (EBSD) and Electron Micro-Probe Analysis (EPMA). This highly spatially resolved method allows for the study of micron-scale microstructural features, and has the capability to rapidly extract correlations between multiple features of interest from datasets containing thousands of datapoints. Two case studies are presented in commercially pure (CP) titanium: in the first instance, the effect of crystal anisotropy on measured hardness and, in the second instance, the effect of an oxygen diffusion layer on hardness. The independently collected property maps are registered using affine geometric transformations and are interpolated to allow for direct correlation. The results show strong agreement with trends observed in the literature, as well as providing a large dataset to facilitate future statistical analysis of microstructure-dependent mechanisms.
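As a rough illustration of the registration step described above, the sketch below fits a 2D affine transformation from three matched control points and uses it to map a coordinate from one map into the frame of another. The control points and the function names (`solve3`, `fit_affine`, `apply_affine`) are hypothetical and not taken from the paper, which may well use more points with a least-squares fit; the interpolation step is not shown.

```python
def solve3(a, b):
    """Solve the 3x3 linear system a @ x = b using Cramer's rule."""
    def det(m):
        return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
                - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
                + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

    d = det(a)
    if abs(d) < 1e-12:
        raise ValueError("control points are collinear; affine fit is undefined")
    solution = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        solution.append(det(m) / d)
    return solution


def fit_affine(src, dst):
    """Fit x' = ax*x + bx*y + cx and y' = ay*x + by*y + cy from 3 point pairs."""
    a = [[x, y, 1.0] for x, y in src]
    row_x = solve3(a, [p[0] for p in dst])
    row_y = solve3(a, [p[1] for p in dst])
    return row_x, row_y


def apply_affine(params, point):
    """Map a point from the source map frame into the target map frame."""
    (ax, bx, cx), (ay, by, cy) = params
    x, y = point
    return (ax * x + bx * y + cx, ay * x + by * y + cy)


# Hypothetical matched features, e.g. fiducial marks seen in two maps.
src = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
dst = [(2.0, 3.0), (2.0, 5.0), (1.0, 3.0)]

params = fit_affine(src, dst)
mapped = apply_affine(params, (1.0, 1.0))
```

Once every map is expressed in a common frame, values can be interpolated onto a shared grid so that, for example, hardness and orientation can be compared point by point.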

16 Sep 2022

Implicit methods are attractive for hybrid quantum-classical CFD solvers as the flow equations are combined into a single coupled matrix that is solved on the quantum device, leaving only the CFD discretisation and matrix assembly on the classical device. In this paper, an implicit hybrid solver is investigated using emulated HHL circuits. The hybrid solutions are compared with classical solutions including full eigen-system decompositions. A thorough analysis is made of how the number of qubits in the HHL eigenvalue inversion circuit affects the CFD solver's convergence rates. Losses of precision in the minimum and maximum eigenvalues have different effects, which are understood by relating the corresponding eigenvectors to error waves in the CFD solver. An iterative feed-forward mechanism is identified that allows loss of precision in the HHL circuit to amplify the associated error waves. These results will be relevant to early fault tolerant CFD applications where every (logical) qubit will count. The importance of good classical estimators for the minimum and maximum eigenvalues is also relevant to the calculation of condition number for Quantum Singular Value Transformation approaches to matrix inversion.

03 Jun 2016

The Automatic Identification System (AIS) tracks vessel movement by means of electronic exchange of navigation data between vessels with onboard transceivers and terrestrial and/or satellite base stations. The gathered data contains a wealth of information useful for maritime safety, security and efficiency. This paper surveys AIS data sources and relevant aspects of navigation in which such data is or could be exploited for safety of seafaring, namely traffic anomaly detection, route estimation, collision prediction and path planning.

12 Nov 2025

Understanding how protective oxide scales evolve over time is necessary for improving the long-term resistance of superalloys. This work investigates the time-dependent oxidation behavior of an ingot-processable Co/Ni-based superalloy oxidized in air at 800 °C for 20, 100, and 1000 h. Mass-gain and white-light interferometry measurements quantified oxidation kinetics, surface roughness, and spallation, while high-resolution STEM-EDX characterized oxide morphology and nanoscale elemental partitioning. Atom probe tomography captured the key transition regions between the chromia and alumina scales, and X-ray diffraction was used to identify a gradual transition from NiO and (Ni,Co)-spinel phases to a compact, dual-phase chromia and alumina-rich scale. The oxidation rate evolved from near-linear to parabolic behavior with time, consistent with diffusion-controlled growth once a continuous Cr2O3/α-Al2O3 scale formed. These observations help link kinetics, structure and chemistry, showing how an originally porous spinel layer transforms into a dense, adherent chromia + alumina scale that provides long-term protection in wrought Co/Ni-based superalloys.

27 Feb 2024

Three block encoding methods are evaluated for solving linear systems of equations using QSVT (Quantum Singular Value Transformation). These are ARCSIN, FABLE and PREPARE-SELECT. The performance of the encoders is evaluated using a suite of 30 test cases including 1D, 2D and 3D Laplacians and 2D CFD matrices. A subset of cases is used to characterise how the degree of the polynomial approximation to 1/x influences the performance of QSVT. The results are used to guide the evaluation of QSVT as the linear solver in hybrid non-linear pressure correction and coupled implicit CFD solvers. The performance of QSVT is shown to be resilient to polynomial approximation errors. For both CFD solvers, error tolerances of 10⁻² are more than sufficient in most cases and in some cases 10⁻¹ is sufficient. The pressure correction solver allows subnormalised condition numbers, κ_s, as low as half the theoretical values to be used, reducing the number of phase factors needed. PREPARE-SELECT encoding relies on a unitary decomposition, e.g. Pauli strings, that has significant classical preprocessing costs. Both ARCSIN and FABLE have much lower costs, particularly for coupled solvers. However, their subnormalisation factors, which are based on the rank of the matrix, can be many times higher than those of PREPARE-SELECT, leading to more phase factors being needed. For both the pressure correction and coupled CFD calculations, QSVT is more stable than previous HHL results due to the polynomial approximation errors only affecting long wavelength CFD errors. Given that lowering κ_s increases the success probability, optimising the performance of QSVT within a CFD code is a function of the number of QSVT phase factors, the number of non-linear iterations and the number of shots. Although phase factor files can be reused, the time taken to generate them impedes scaling QSVT to larger test cases.

14 Aug 2023

Sapphire fiber can withstand around 2000°C, but it is multimoded, giving poor precision sensors. We demonstrate a single-mode sapphire fiber Bragg grating temperature sensor operating up to 1200°C. The repeatability above 1000°C is within ±0.08%.

30 Aug 2019

Functional linear regression is a widely used approach to model functional responses with respect to functional inputs. However, classical functional linear regression models can be severely affected by outliers. We therefore introduce a Fisher-consistent robust functional linear regression model that is able to effectively fit data in the presence of outliers. The model is built using robust functional principal component and least squares regression estimators. The performance of the functional linear regression model depends on the number of principal components used. We therefore introduce a consistent robust model selection procedure to choose the number of principal components. Our robust functional linear regression model can be used alongside an outlier detection procedure to effectively identify abnormal functional responses. A simulation study shows our method is able to effectively capture the regression behaviour in the presence of outliers, and is able to find the outliers with high accuracy. We demonstrate the usefulness of our method on jet engine sensor data. We identify outliers that would not be found if the functional responses were modelled independently of the functional input, or using non-robust methods.

The mechanical performance of Directed Energy Deposition Additive Manufactured (DED-AM) components can be highly material dependent. Through in situ and operando synchrotron X-ray imaging we capture the underlying phenomena controlling build quality of stainless steel (SS316) and titanium alloy (Ti6242 or Ti-6Al-2Sn-4Zr-2Mo). We reveal three mechanisms influencing the build efficiency of titanium alloys compared to stainless steel: blown powder sintering; reduced melt-pool wetting due to the sinter; and pore pushing in the melt-pool. The former two directly increase lack of fusion porosity, while the latter causes end of track porosity. Each phenomenon influences the melt-pool characteristics, wetting of the substrate and hence build efficacy and undesirable microstructural feature formation. We demonstrate that porosity is related to powder characteristics, pool flow, and solidification front morphology. Our results clarify DED-AM process dynamics, illustrating why each alloy builds differently, facilitating the wider application of additive manufacturing to new materials.

07 Aug 2023

This paper investigates robust tube-based Model Predictive Control (MPC) of a tiltwing Vertical Take-Off and Landing (VTOL) aircraft subject to wind disturbances and model uncertainty. Our approach is based on a Difference of Convex (DC) function decomposition of the dynamics to develop a computationally tractable optimisation with robust tubes for the system trajectories. We consider a case study of a VTOL aircraft subject to wind gusts and whose aerodynamics is defined from data.

16 Feb 2018

Hot salt stress corrosion cracking in Ti 6246 alloy has been investigated to elucidate the chemical mechanisms that occur. Cracking was found to initiate beneath salt particles in the presence of oxidation. The observed transgranular fracture was suspected to be due to hydrogen charging; XRD and high-resolution transmission electron microscopy detected the presence of hydrides that were precipitated on cooling. SEM-EDS showed oxygen enrichment near salt particles, alongside chlorine and sodium. Aluminium and zirconium were also involved in the oxidation reactions. The roles of intermediate corrosion products such as Na2TiO3, Al2O3, ZrO2, TiCl2 and TiH are discussed.

A new computational tool has been developed to model, discover, and optimize new alloys that simultaneously satisfy up to eleven physical criteria. An artificial neural network is trained from pre-existing materials data that enables the prediction of individual material properties both as a function of composition and heat treatment routine, which allows it to optimize the material properties to search for the material with properties most likely to exceed the target criteria. We design a new polycrystalline nickel-base superalloy with the optimal combination of cost, density, gamma' phase content and solvus, phase stability, fatigue life, yield stress, ultimate tensile strength, stress rupture, oxidation resistance, and tensile elongation. Experimental data demonstrate that the proposed alloy fulfills the computational predictions, possessing multiple physical properties, particularly oxidation resistance and yield stress, that exceed those of existing commercially available alloys.

16 Sep 2020

The governing mechanistic behaviour of Directed Energy Deposition Additive Manufacturing (DED-AM) is revealed by a combined in situ and operando synchrotron X-ray imaging and diffraction study of a nickel-base superalloy, IN718. Using a unique process replicator, real-space phase-contrast imaging enables quantification of the melt-pool boundary and flow dynamics during solidification. This imaging knowledge informed precise diffraction measurements of temporally resolved microstructural phases during transformation and stress development with a spatial resolution of 100 μm. The diffraction-quantified thermal gradient enabled a dendritic solidification microstructure to be predicted and coupled to the stress orientation and magnitude. The fast cooling rate entirely suppressed the formation of secondary phases or recrystallisation in the solid-state. Upon solidification, the stresses rapidly increase to the yield strength during cooling. This insight, combined with IN718's large solidification range, suggests that the accumulated plasticity exhausts the alloy's ductility, causing liquation cracking. This study has revealed additional fundamental mechanisms governing the formation of highly non-equilibrium microstructures during DED-AM.

Many real-world applications demand accurate and fast predictions, as well as reliable uncertainty estimates. However, quantifying uncertainty on high-dimensional predictions is still a severely under-investigated problem, especially when input-output relationships are non-linear. To handle this problem, the present work introduces an innovative approach that combines autoencoder deep neural networks with the probabilistic regression capabilities of Gaussian processes. The autoencoder provides a low-dimensional representation of the solution space, while the Gaussian process is a Bayesian method that provides a probabilistic mapping between the low-dimensional inputs and outputs. We validate the proposed framework for its application to surrogate modeling of non-linear finite element simulations. Our findings highlight that the proposed framework is computationally efficient as well as accurate in predicting non-linear deformations of solid bodies subjected to external forces, all the while providing insightful uncertainty assessments.

19 Mar 2025

Quantum linear system solvers like the Quantum Singular Value Transformation (QSVT) require a block encoding of the system matrix A within a unitary operator U_A. Unfortunately, block encoding often results in significant subnormalisation and increase in the matrix's effective condition number κ, affecting the efficiency of solvers. Matrix preconditioning is a well-established classical technique to reduce κ by multiplying A by a preconditioner P. Here, we study quantum preconditioning for block encodings. We consider four preconditioners and two encoding approaches: (a) separately encoding A and its preconditioner P, followed by quantum multiplication, and (b) classically multiplying A and P before encoding the product in U_PA. Their impact on subnormalisation factors and condition number κ is analysed using practical matrices from Computational Fluid Dynamics (CFD). Our results show that (a) quantum multiplication introduces excessive subnormalisation factors, negating improvements in κ. We introduce preamplified quantum multiplication to reduce subnormalisation, which is of independent interest. Conversely, we see that (b) encoding of the classical product can significantly improve the effective condition number using the Sparse Approximate Inverse preconditioner with infill. Further, we introduce a new matrix filtering technique that reduces the circuit depth without adversely affecting the matrix solution. We apply these methods to reduce the number of QSVT phase factors by a factor of 25 for an example CFD matrix of size 1024 × 1024.
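To make the classical idea of reducing κ by forming the product PA concrete, here is a minimal sketch on a hypothetical 2×2 matrix with a simple diagonal (Jacobi) preconditioner. The matrix, the choice of Jacobi scaling and the helper names are assumptions for illustration only; the abstract itself considers other preconditioners, such as the Sparse Approximate Inverse, and the effects of block encoding are not modelled here.

```python
import math


def matmul2(p, a):
    """Multiply two 2x2 matrices given as nested lists."""
    return [[sum(p[i][k] * a[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def cond2(m):
    """2-norm condition number of a 2x2 matrix via eigenvalues of M^T M."""
    # Entries of the symmetric matrix G = M^T M.
    g00 = m[0][0] ** 2 + m[1][0] ** 2
    g11 = m[0][1] ** 2 + m[1][1] ** 2
    g01 = m[0][0] * m[0][1] + m[1][0] * m[1][1]
    trace = g00 + g11
    det = g00 * g11 - g01 ** 2
    disc = math.sqrt(max(trace ** 2 - 4.0 * det, 0.0))
    lam_max = 0.5 * (trace + disc)
    lam_min = det / lam_max  # product of eigenvalues equals det(G)
    return math.sqrt(lam_max / lam_min)


# Hypothetical badly scaled system matrix.
A = [[100.0, 1.0],
     [1.0, 1.0]]

# Jacobi preconditioner: inverse of the diagonal of A.
P = [[1.0 / A[0][0], 0.0],
     [0.0, 1.0 / A[1][1]]]

PA = matmul2(P, A)
kappa_a = cond2(A)
kappa_pa = cond2(PA)
print(f"cond(A) = {kappa_a:.2f}, cond(PA) = {kappa_pa:.2f}")
```

In this toy case the row scaling removes most of the ill-conditioning, which is the classical benefit that approach (b) above aims to carry through to the encoded product.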

Gas turbine engines are complex machines that typically generate a vast amount of data, and require careful monitoring to allow for cost-effective preventative maintenance. In aerospace applications, returning all measured data to ground is prohibitively expensive, often causing useful, high value, data to be discarded. The ability to detect, prioritise, and return useful data in real-time is therefore vital. This paper proposes that system output measurements, described by a convolutional neural network model of normality, are prioritised in real-time for the attention of preventative maintenance decision makers.

Due to the complexity of gas turbine engine time-varying behaviours, deriving accurate physical models is difficult, and often leads to models with low prediction accuracy and incompatibility with real-time execution. Data-driven modelling is a desirable alternative producing high accuracy, asset specific models without the need for derivation from first principles.

We present a data-driven system for online detection and prioritisation of anomalous data. Biased data assessment deriving from novel operating conditions is avoided by uncertainty management integrated into the deep neural predictive model. Testing is performed on real and synthetic data, showing sensitivity to both real and synthetic faults. The system is capable of running in real-time on low-power embedded hardware and is currently in deployment on the Rolls-Royce Pearl 15 engine flight trials.

A biological neural network is constituted by numerous subnetworks and modules with different functionalities. For an artificial neural network, the relationship between a network and its subnetworks is also important and useful for both theoretical and algorithmic research, e.g. it can be exploited to develop incremental or parallel network training algorithms. In this paper we explore the relationship between an ELM neural network and its subnetworks. To the best of our knowledge, we are the first to prove a theorem that shows an ELM neural network can be scattered into subnetworks and its optimal solution can be constructed recursively by the optimal solutions of these subnetworks. Based on the theorem we also present two algorithms to train a large ELM neural network efficiently: one is a parallel network training algorithm and the other is an incremental network training algorithm. The experimental results demonstrate the usefulness of the theorem and the validity of the developed algorithms.

25 Jul 2024

This paper develops a numerical procedure to accelerate the convergence of the Favre-averaged Non-Linear Harmonic (FNLH) method. The scheme provides a unified mathematical framework for solving the sparse linear systems formed by the mean flow and the time-linearized harmonic flows of FNLH in an explicit or implicit fashion. The approach explores the similarity of the sparse linear systems of FNLH and leads to a memory efficient procedure, so that its memory consumption does not depend on the number of harmonics to compute. The proposed method has been implemented in the industrial CFD solver HYDRA. Two test cases are used to conduct a comparative study of explicit and implicit schemes in terms of convergence, computational efficiency, and memory consumption. Comparisons show that the implicit scheme yields better convergence than the explicit scheme and is also roughly 7 to 10 times more computationally efficient than the explicit scheme with four levels of multigrid. Furthermore, the implicit scheme consumes only approximately 50% of the memory of the explicit scheme with four levels of multigrid. Compared with the full annulus unsteady Reynolds averaged Navier-Stokes (URANS) simulations, the implicit scheme produces comparable results to URANS with computational time and memory consumption that are two orders of magnitude smaller.

19 Jun 2016

This paper describes an optimisation methodology that has been specifically developed for engineering design problems. The methodology is based on a Tabu search (TS) algorithm that has been shown to find high quality solutions with a relatively low number of objective function evaluations. Whilst the methodology was originally intended for a small range of design problems it has since been successfully applied to problems from different domains with no alteration to the underlying method. This paper describes the method and its application to three different problems. The first is from the field of structural design, the second relates to the design of electromagnetic pole shapes and the third involves the design of turbomachinery blades.
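For readers unfamiliar with Tabu search, the following is a minimal textbook-style sketch, not the engineering-design variant described in the paper. It keeps a short memory of recently visited points and always moves to the best non-tabu neighbour, even when that move is uphill. The objective, neighbourhood and parameter names (`iterations`, `tenure`) are hypothetical.

```python
from collections import deque


def tabu_search(objective, start, neighbours, iterations=50, tenure=5):
    """Minimise `objective` using a basic Tabu search with a fixed-length tabu list."""
    current = start
    best, best_value = start, objective(start)
    tabu = deque([start], maxlen=tenure)  # oldest entries drop out automatically
    for _ in range(iterations):
        candidates = [n for n in neighbours(current) if n not in tabu]
        if not candidates:
            break
        # Move to the best admissible neighbour, even if it is worse than current.
        current = min(candidates, key=objective)
        tabu.append(current)
        value = objective(current)
        if value < best_value:
            best, best_value = current, value
    return best, best_value


def objective(p):
    """Toy design objective with its minimum at (3, -2)."""
    x, y = p
    return (x - 3) ** 2 + (y + 2) ** 2


def neighbours(p):
    """Unit steps along each design variable on an integer grid."""
    x, y = p
    return [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)]


best, value = tabu_search(objective, (0, 0), neighbours)
print(best, value)
```

The tabu list is what lets the search leave a local optimum instead of cycling back to it, and each iteration costs only a handful of objective evaluations.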

13 Jul 2022

This manuscript outlines an automated anomaly detection framework for jet engines. It is tailored for identifying spatial anomalies in steady-state temperature measurements at various axial stations in an engine. The framework rests upon ideas from optimal transport theory for Gaussian measures, which yields analytical solutions for both Wasserstein distances and barycenters. The anomaly detection framework proposed builds upon our prior efforts that view the spatial distribution of temperature as a Gaussian random field. We demonstrate the utility of our approach by training on a dataset from one engine family and applying it across a fleet of engines, successfully detecting anomalies while avoiding both false positives and false negatives. Although the primary application considered in this paper is the temperature measurements in engines, applications to other internal flows and related thermodynamic quantities are made lucid.
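The analytical solutions mentioned above are easiest to see in one dimension. For two univariate Gaussians the 2-Wasserstein distance is sqrt((m1 - m2)^2 + (s1 - s2)^2), and the barycenter of several univariate Gaussians is again Gaussian, with the weighted average of the means and of the standard deviations. The sketch below uses this simplified univariate case with hypothetical temperature statistics; the paper works with Gaussian random fields, where covariance matrices replace the scalar standard deviations.

```python
import math


def w2_gaussian_1d(m1, s1, m2, s2):
    """2-Wasserstein distance between N(m1, s1^2) and N(m2, s2^2)."""
    return math.sqrt((m1 - m2) ** 2 + (s1 - s2) ** 2)


def barycenter_gaussian_1d(params, weights):
    """Wasserstein barycenter of 1D Gaussians given as (mean, std) pairs."""
    total = sum(weights)
    mean = sum(w * m for w, (m, _) in zip(weights, params)) / total
    std = sum(w * s for w, (_, s) in zip(weights, params)) / total
    return mean, std


# Hypothetical (mean, std) temperature statistics from healthy engines.
fleet = [(600.0, 10.0), (610.0, 12.0), (590.0, 8.0)]
reference = barycenter_gaussian_1d(fleet, [1.0, 1.0, 1.0])

# Hypothetical new measurement; a large distance would flag a possible anomaly.
candidate = (630.0, 10.0)
distance = w2_gaussian_1d(candidate[0], candidate[1], reference[0], reference[1])
```

A detection threshold on `distance` would have to be chosen from training data; no threshold is suggested here.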

09 Nov 2021

Recent results in the theory and application of Newton-Puiseux expansions, i.e. fractional power series solutions of equations, suggest further developments within a more abstract algebraic-geometric framework, involving in particular the theory of toric varieties and ideals. Here, we present a number of such developments, especially in relation to the equations of van der Pol, Riccati, and Schrödinger. Some pure mathematical concepts we are led to are Graver, Gröbner, lattice and circuit bases, combinatorial geometry and differential algebra, and algebraic-differential equations. Two techniques are coordinated: classical dimensional analysis (DA) in applied mathematics and science, and a polynomial and differential-polynomial formulation of asymptotic expansions, referred to here as Newton-Puiseux (NP) expansions. The latter leads to power series with rational exponents, which depend on the choice of the dominant part of the equations, often referred to as the method of "dominant balance". The paper shows, using a new approach to DA based on toric ideals, how dimensionally homogeneous equations may best be non-dimensionalised, and then, with examples involving differential equations, uses recent work on NP expansions to show how non-dimensional parameters affect the results. Our approach finds a natural home within computational algebraic geometry, i.e. at the interface of abstract and algorithmic mathematics.
