Université Clermont Auvergne

12 Mar 2021

University of Washington; CNRS; California Institute of Technology; UC Berkeley; University of Edinburgh; University of Maryland; Stockholm University; Lawrence Berkeley National Laboratory; The Hebrew University of Jerusalem; Liverpool John Moores University; Texas Tech University; The Weizmann Institute of Science; University of Hawai’i; Humboldt-Universität zu Berlin; Miller Institute for Basic Research in Science; Université Clermont Auvergne; INAF Osservatorio Astronomico di Padova

Interaction-powered supernovae (SNe) explode within an optically-thick circumstellar medium (CSM) that could be ejected during eruptive events. To identify and characterize such pre-explosion outbursts we produce forced-photometry light curves for 196 interacting SNe, mostly of Type IIn, detected by the Zwicky Transient Facility between early 2018 and June 2020. Extensive tests demonstrate that we only expect a few false detections among the 70,000 analyzed pre-explosion images after applying quality cuts and bias corrections. We detect precursor eruptions prior to 18 Type IIn SNe and prior to the Type Ibn SN2019uo. Precursors become brighter and more frequent in the last months before the SN and month-long outbursts brighter than magnitude -13 occur prior to 25% (5 - 69%, 95% confidence range) of all Type IIn SNe within the final three months before the explosion. With radiative energies of up to $10^{49}$ erg, precursors could eject ~1 M⊙ of material. Nevertheless, SNe with detected precursors are not significantly more luminous than other SNe IIn and the characteristic narrow hydrogen lines in their spectra typically originate from earlier, undetected mass-loss events. The long precursor durations require ongoing energy injection and they could, for example, be powered by interaction or by a continuum-driven wind. Instabilities during the neon and oxygen burning phases are predicted to launch precursors in the final years to months before the explosion; however, the brightest precursor is 100 times more energetic than anticipated.

University of Washington; CNRS; University of Pittsburgh; SLAC National Accelerator Laboratory; Harvard University; Carnegie Mellon University; Stanford University; University of Arizona; Duke University; CEA; Princeton University; Princeton Plasma Physics Laboratory; Kavli Institute for Particle Astrophysics & Cosmology; NSF NOIRLab; Association of Universities for Research in Astronomy; Université Clermont Auvergne; Laboratoire de Physique des 2 Infinis Irène Joliot-Curie - IJCLab; Université Paris Cité; NSF–DOE Vera C. Rubin Observatory HQ

The Vera C. Rubin Observatory recently released Data Preview 1 (DP1) in advance of the upcoming Legacy Survey of Space and Time (LSST), which will enable boundless discoveries in time-domain astronomy over the next ten years. DP1 provides an ideal sandbox for validating innovative data analysis approaches for the LSST mission, whose scale challenges established software infrastructure paradigms. This note presents a pair of such pipelines for variability-finding, built on software infrastructure suited to LSST data, namely the HATS (Hierarchical Adaptive Tiling Scheme) format and the LSDB framework, developed by the LSST Interdisciplinary Network for Collaboration and Computing (LINCC) Frameworks team. It also describes the HATS catalog of DP1 data and preliminary results on detected variable objects, two of which are novel discoveries.

An implemented incremental Structure from Motion (SfM) pipeline provides a detailed, modular, and interpretable approach for 3D reconstruction from 2D images. This system generates accurate sparse 3D models and camera pose estimations, demonstrating performance comparable to the established COLMAP system on standard datasets.

StixelNExT++ introduces a lightweight, end-to-end neural network for 3D monocular scene segmentation and representation, achieving real-time inference at 10 ms per frame and a 63.8% F1-Score for holistic obstacle detection. The system generates a compressed 3D Stixel World from a single RGB image, suitable for collective perception by leveraging a depth-aware loss and non-linear depth discretization.

The Data Release 1 (DR1) of the Dark Energy Spectroscopic Instrument (DESI) is the largest sample to date for small-scale Lyα forest cosmology, accessed through its one-dimensional power spectrum (P1D). The Lyα forest P1D is extracted from quasar spectra that are highly inhomogeneous (both in wavelength and between quasars) in noise properties due to intrinsic properties of the quasar, atmospheric and astrophysical contamination, and also sensitive to low-level details of the spectral extraction pipeline. We employ two estimators in DR1 analysis to measure P1D: the optimal estimator and the fast Fourier transform (FFT) estimator. To ensure robustness of our DR1 measurements, we validate these two power spectrum and covariance matrix estimation methodologies against the challenging aspects of the data. First, using a set of 20 synthetic 1D realizations of DR1, we derive the masking bias corrections needed for the FFT estimator and the continuum fitting bias needed for both estimators. We demonstrate that both estimators, including their covariances, are unbiased with these corrections using the Kolmogorov-Smirnov test. Second, we substantially extend our previous suite of CCD image simulations to include 675,000 quasars, allowing us to accurately quantify the pipeline's performance. This set of simulations reveals biases at the highest k values, corresponding to a resolution error of a few percent. We base the resolution systematics error budget of DR1 P1D on these values, but do not derive corrections from them since the simulation fidelity is insufficient for precise corrections.

14 Oct 2025

We classify semistable abelian varieties over Q with bad reduction at exactly 19 up to isogeny over Q. The general strategy goes back to Fontaine and has been heavily refined by Schoof. At the beginning of this paper we include an overview of this strategy, proving various non-trivial background results along the way, as an introduction for readers unacquainted with this topic.

06 Jul 2019

This work determines the degree to which a standard Lambda-CDM analysis based on type Ia supernovae can identify deviations from a cosmological constant in the form of a redshift-dependent dark energy equation of state w(z). We introduce and apply a novel random curve generator to simulate instances of w(z) from constraint families with increasing distinction from a cosmological constant. After producing a series of mock catalogs of binned type Ia supernovae corresponding to each w(z) curve, we perform a standard Lambda-CDM analysis to estimate the corresponding posterior densities of the absolute magnitude of type Ia supernovae, the present-day matter density, and the equation of state parameter. Using the Kullback-Leibler divergence between posterior densities as a difference measure, we demonstrate that a standard type Ia supernova cosmology analysis has limited sensitivity to extensive redshift dependencies of the dark energy equation of state. In addition, we report that larger redshift-dependent departures from a cosmological constant do not necessarily manifest as more easily detectable incompatibilities with the Lambda-CDM model. Our results suggest that physics beyond the standard model may simply be hidden in plain sight.
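As a rough illustration of the difference measure named in this abstract, the Kullback-Leibler divergence has a closed form when both posteriors are approximated by one-dimensional Gaussians. The sketch below relies on that Gaussian approximation, which is an assumption made here for brevity; the function name `kl_gaussian` and the example posterior summaries are hypothetical and not taken from the paper.

```python
import math


def kl_gaussian(mu_p, sigma_p, mu_q, sigma_q):
    """KL(P || Q) in nats for 1D Gaussians P = N(mu_p, sigma_p^2) and Q = N(mu_q, sigma_q^2)."""
    if sigma_p <= 0 or sigma_q <= 0:
        raise ValueError("standard deviations must be positive")
    return (
        math.log(sigma_q / sigma_p)
        + (sigma_p ** 2 + (mu_p - mu_q) ** 2) / (2.0 * sigma_q ** 2)
        - 0.5
    )


# Hypothetical (mean, standard deviation) summaries of two posteriors
# for the equation-of-state parameter; illustrative numbers only.
reference = (-1.0, 0.05)
shifted = (-0.9, 0.05)

print(kl_gaussian(*reference, *reference))  # identical posteriors
print(kl_gaussian(*shifted, *reference))    # posterior shifted by two sigma
```

The divergence is zero for identical posteriors and grows with the shift in units of the reference width; note that it is not symmetric in its two arguments.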

21 Oct 2020

Mapping algorithms that rely on registering point clouds inevitably suffer from local drift, both in localization and in the built map. Applications that require accurate maps, such as environmental monitoring, benefit from additional sensor modalities that reduce such drift. In our work, we target the family of mappers based on the Iterative Closest Point (ICP) algorithm which use additional orientation sources such as the Inertial Measurement Unit (IMU). We introduce a new angular penalty term derived from Lie algebra. Our formulation avoids the need for tuning arbitrary parameters. Orientation covariance is used instead, and the resulting error term fits into the ICP cost function minimization problem. Experiments performed on our own real-world data and on the KITTI dataset show consistent behavior while suppressing the effect of outlying IMU measurements. We further discuss promising experiments, which should lead to optimal combination of all error terms in the ICP cost function minimization problem, allowing us to smoothly combine the geometric and inertial information provided by robot sensors.

Non-Rigid Structure-from-Motion (NRSfM) reconstructs a deformable 3D object from the correspondences established between monocular 2D images. Current NRSfM methods lack statistical robustness, which is the ability to cope with correspondence errors. This prevents one from using automatically established correspondences, which are prone to errors, thereby strongly limiting the scope of NRSfM. We propose a three-step automatic pipeline to solve NRSfM robustly by exploiting isometry. Step 1 computes the optical flow from correspondences, step 2 reconstructs each 3D point's normal vector using multiple reference images and integrates them to form surfaces with the best reference, and step 3 rejects the 3D points that break isometry in their local neighborhood. Importantly, each step is designed to discard or flag erroneous correspondences. Our contributions include the robustification of optical flow by warp estimation, new fast analytic solutions to local normal reconstruction and their robustification, and a new scale-independent measure of 3D local isometric coherence. Experimental results show that our robust NRSfM method consistently outperforms existing methods on both synthetic and real datasets.

Machine learning (ML) in high-energy physics (HEP) has moved in the LHC era from an internal detail of experiment software to an unavoidable public component of many physics data analyses. Scientific reproducibility thus requires that it be possible to accurately and stably preserve the behaviours of these sometimes very complex algorithms. We present and document the petrifyML package, which provides missing mechanisms to convert configurations from commonly used HEP ML tools to either the industry-standard ONNX format or to native Python or C++ code, enabling future re-use and re-interpretation of many ML-based experimental studies.

30 Aug 2022

The continuous development of new multiphase alloys with improved mechanical properties requires quantitative microstructure-resolved observation of the nanoscale deformation mechanisms at, e.g., multiphase interfaces. This calls for a combinatory approach beyond advanced testing methods such as microscale strain mapping on bulk material and micrometer sized deformation tests of single grains. We propose a nanomechanical testing framework that has been carefully designed to integrate several state-of-the-art testing and characterization methods: (i) well-defined nano-tensile testing of carefully selected and isolated multiphase specimens, (ii) front&rear-sided SEM-EBSD microstructural characterization combined with front&rear-sided in-situ SEM-DIC testing at very high resolution enabled by a recently developed InSn nano-DIC speckle pattern, (iii) optimized DIC strain mapping aided by application of SEM scanning artefact correction and DIC deconvolution for improved spatial resolution, (iv) a novel microstructure-to-strain alignment framework to deliver front&rear-sided, nanoscale, microstructure-resolved strain fields, and (v) direct comparison of microstructure, strain and SEM-BSE damage maps in the deformed configuration. Demonstration on a micrometer-sized dual-phase steel specimen, containing an incompatible ferrite-martensite interface, shows how the nanoscale deformation mechanisms can be unraveled. Discrete lath-boundary-aligned martensite strain localizations transit over the interface into diffuse ferrite plasticity, revealed by the nanoscale front&rear-sided microstructure-to-strain alignment and optimization of DIC correlations. The proposed framework yields front&rear-sided aligned microstructure and strain fields providing 3D interpretation of the deformation and opening new opportunities for unprecedented validation of advanced multiphase simulations.

02 Aug 2021

The abundant production of beauty and charm hadrons in the $5\times10^{12}$ $Z^0$ decays expected at FCC-ee offers outstanding opportunities in flavour physics that in general exceed those available at Belle II, and are complementary to the heavy-flavour programme of the LHC. A wide range of measurements will be possible in heavy-flavour spectroscopy, rare decays of heavy-flavoured particles and CP-violation studies, which will benefit from the low-background experimental environment, the high Lorentz boost, and the availability of the full spectrum of hadron species. This essay first surveys the important questions in heavy-flavour physics, and assesses the likely theoretical and experimental landscape at the turn-on of FCC-ee. From this, certain measurements are identified where the impact of FCC-ee will be particularly important. A full exploitation of the heavy-flavour potential of FCC-ee places specific constraints and challenges on detector design, which in some cases are in tension with those imposed by the other physics goals of the facility. These requirements and conflicts are discussed.

CNRS; California Institute of Technology; Chinese Academy of Sciences; University of California, Irvine; Peking University; Universidade de Lisboa; Space Telescope Science Institute; Leiden University; Shanghai Astronomical Observatory; Kavli Institute for Astronomy and Astrophysics; University of Wisconsin-Whitewater; California State Polytechnic University; Université Clermont Auvergne

Wide-field searches for young stellar objects (YSOs) can place useful constraints on the prevalence of clustered versus distributed star formation. The Spitzer/IRAC Candidate YSO (SPICY) catalog is one of the largest compilations of such objects (~120,000 candidates in the Galactic midplane). Many SPICY candidates are spatially clustered, but, perhaps surprisingly, approximately half the candidates appear spatially distributed. To better characterize this unexpected population and confirm its nature, we obtained Palomar/DBSP spectroscopy for 26 of the optically-bright (G<15 mag) "isolated" YSO candidates. We confirm the YSO classifications of all 26 sources based on their positions on the Hertzsprung-Russell diagram, H and Ca II line-emission from over half the sample, and robust detection of infrared excesses. This implies a contamination rate of <10% for SPICY stars that meet our optical selection criteria. Spectral types range from B4 to K3, with A-type stars most common. Spectral energy distributions, diffuse interstellar bands, and Galactic extinction maps indicate moderate to high extinction. Stellar masses range from ~1 to 7 M⊙, and the estimated accretion rates, ranging from $3\times10^{-8}$ to $3\times10^{-7}$ M⊙ yr$^{-1}$, are typical for YSOs in this mass range. The 3D spatial distribution of these stars, based on Gaia astrometry, reveals that the "isolated" YSOs are not evenly distributed in the Solar neighborhood but are concentrated in kpc-scale dusty Galactic structures that also contain the majority of the SPICY YSO clusters. Thus, the processes that produce large Galactic star-forming structures may yield nearly as many distributed as clustered YSOs.

Accurate photometry in astronomical surveys is challenged by image artefacts, which affect measurements and degrade data quality. Due to the large amount of available data, this task is increasingly handled using machine learning algorithms, which often require a labelled training set to learn data patterns. We present an expert-labelled dataset of 1127 artefacts with 1213 labels from 26 fields in ZTF DR3, along with a complementary set of nominal objects. The artefact dataset was compiled using the active anomaly detection algorithm PineForest, developed by the SNAD team. These datasets can serve as valuable resources for real-bogus classification, catalogue cleaning, anomaly detection, and educational purposes. Both artefacts and nominal images are provided in FITS format in two sizes (28 x 28 and 63 x 63 pixels). The datasets are publicly available for further scientific applications.

10 Oct 2025

An identifying code of a closed-twin-free graph $G$ is a dominating set $S$ of vertices of $G$ such that any two vertices in $G$ have a distinct intersection between their closed neighborhoods and $S$. It was conjectured that there exists an absolute constant $c$ such that for every connected graph $G$ of order $n$ and maximum degree $\Delta$, the graph $G$ admits an identifying code of size at most $\left(\frac{\Delta-1}{\Delta}\right)n + c$. We provide significant support for this conjecture by exactly characterizing every tree requiring a positive constant $c$, together with the exact value of the constant, thereby proving the conjecture for trees. For $\Delta = 2$ (the graph is a path or a cycle), it has long been known that $c = 3/2$ suffices. For trees, for each $\Delta \ge 3$, we show that $c = 1/\Delta \le 1/3$ suffices and that $c$ is required to have a positive value only for a finite number of trees. In particular, for $\Delta = 3$, there are 12 trees with a positive constant $c$ and, for each $\Delta \ge 4$, the only tree with positive constant $c$ is the $\Delta$-star. Our proof is based on induction and utilizes recent results from [F. Foucaud, T. Lehtilä. Revisiting and improving upper bounds for identifying codes. SIAM Journal on Discrete Mathematics, 2022]. We remark that there are infinitely many trees for which the bound is tight when $\Delta = 3$; for every $\Delta \ge 4$, we construct an infinite family of trees of order $n$ with identification number very close to the bound, namely $\left(\frac{\Delta-1+\frac{1}{\Delta-2}}{\Delta+\frac{2}{\Delta-2}}\right)n > \left(\frac{\Delta-1}{\Delta}\right)n - \frac{n}{\Delta^2}$. Furthermore, we also give a new tight upper bound for the identification number on trees by showing that the sum of the domination and identification numbers of any tree $T$ is at most its number of vertices.
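The definition of an identifying code given in this abstract can be checked by exhaustive search on small graphs. The sketch below is only an illustration of that definition, not the paper's inductive proof technique; the helper names (`closed_neighborhoods`, `is_identifying_code`, `identification_number`, `star`) are hypothetical, and the brute-force search is only practical for a handful of vertices.

```python
from fractions import Fraction
from itertools import combinations


def closed_neighborhoods(n, edges):
    """Return the closed neighborhood N[v] (v plus its neighbors) for vertices 0..n-1."""
    nbrs = [{v} for v in range(n)]
    for u, v in edges:
        nbrs[u].add(v)
        nbrs[v].add(u)
    return nbrs


def is_identifying_code(code, nbrs):
    """True if every N[v] & code is non-empty (domination) and all are pairwise distinct."""
    code = set(code)
    signatures = [frozenset(nb & code) for nb in nbrs]
    return all(signatures) and len(set(signatures)) == len(signatures)


def identification_number(n, edges):
    """Smallest identifying code size by brute force; None if the graph has closed twins."""
    nbrs = closed_neighborhoods(n, edges)
    for size in range(1, n + 1):
        for code in combinations(range(n), size):
            if is_identifying_code(code, nbrs):
                return size
    return None


def star(delta):
    """The delta-star: center 0 joined to leaves 1..delta."""
    return delta + 1, [(0, leaf) for leaf in range(1, delta + 1)]


delta = 4
n, edges = star(delta)
gamma_id = identification_number(n, edges)
# Bound from the abstract with c = 1/delta, kept exact with Fraction.
bound = Fraction(delta - 1, delta) * n + Fraction(1, delta)
print(gamma_id, bound)
```

For the 4-star (five vertices) the search returns an identification number of 4, which equals the bound with $c = 1/\Delta$, in line with the statement that the $\Delta$-star needs a positive constant.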

28 Oct 2019

University of Washington; California Institute of Technology; University of California, Santa Barbara; Northwestern University; NASA Goddard Space Flight Center; University of Maryland; Stockholm University; Duke University; Liverpool John Moores University; The Weizmann Institute of Science; Humboldt-Universität zu Berlin; The Adler Planetarium; Université Clermont Auvergne

The Zwicky Transient Facility (ZTF) is performing a three-day cadence survey of the visible Northern sky (~3π). The transient candidates found in this survey are announced via public alerts. As a supplementary product ZTF is also conducting a large spectroscopic campaign: the ZTF Bright Transient Survey (BTS). The goal of the BTS is to spectroscopically classify all extragalactic transients brighter than 18.5 mag at peak brightness and immediately announce those classifications to the public. Extragalactic discoveries from ZTF are predominantly supernovae (SNe). The BTS is the largest flux-limited SN survey to date. Here we present a catalog of the 761 SNe that were classified during the first nine months of the survey (2018 Apr. 1 to 2018 Dec. 31). The BTS SN catalog contains redshifts based on SN template matching and spectroscopic host galaxy redshifts when available. Based on this data we perform an analysis of the redshift completeness of local galaxy catalogs, dubbed the Redshift Completeness Fraction (RCF; the number of SN host galaxies with known spectroscopic redshift prior to SN discovery divided by the total number of SN hosts). In total, we identify the host galaxies of 512 Type Ia supernovae, 227 of which have known spectroscopic redshifts, yielding an RCF estimate of 44%±1%. We find a steady decrease in the RCF with increasing distance in the local universe. For z<0.05, or ~200 Mpc, we find RCF=0.6, which has important ramifications when searching for multimessenger astronomical events. Prospects for dramatically increasing the RCF are limited to new multi-fiber spectroscopic instruments, or wide-field narrowband surveys. We find that existing galaxy redshift catalogs are only 50% complete at r≈16.9 mag. Pushing this limit several magnitudes deeper will pay huge dividends when searching for electromagnetic counterparts to gravitational wave events.

15 Nov 2022

Cosmic voids and their corresponding redshift-projected mass densities, known as troughs, play an important role in our attempt to model the large-scale structure of the Universe. Understanding these structures enables us to compare the standard model with alternative cosmologies, constrain the dark energy equation of state, and distinguish between different gravitational theories. In this paper, we extend the subspace-constrained mean shift algorithm, a recently introduced method to estimate density ridges, and apply it to 2D weak lensing mass density maps from the Dark Energy Survey Y1 data release to identify curvilinear filamentary structures. We compare the obtained ridges with previous approaches to extract trough structure in the same data, and apply curvelets as an alternative wavelet-based method to constrain densities. We then invoke the Wasserstein distance between noisy and noiseless simulations to validate the denoising capabilities of our method. Our results demonstrate the viability of ridge estimation as a precursor for denoising weak lensing observables to recover the large-scale structure, paving the way for a more versatile and effective search for troughs.
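To make the validation metric mentioned in this abstract more concrete, the sketch below computes a Wasserstein-1 distance between two equal-size one-dimensional samples, for example flattened pixel values of a noiseless and a denoised map. Treating the maps as 1D samples of equal size is an assumption made here for illustration; the abstract does not specify how the distance is evaluated, and the function name and sample values are hypothetical.

```python
def wasserstein_1d(sample_a, sample_b):
    """Wasserstein-1 distance between two equal-size 1D empirical samples.

    For equal-size samples the optimal transport plan matches sorted values,
    so the distance is the mean absolute difference of the order statistics.
    """
    if len(sample_a) != len(sample_b) or not sample_a:
        raise ValueError("samples must be non-empty and of equal size")
    pairs = zip(sorted(sample_a), sorted(sample_b))
    return sum(abs(a - b) for a, b in pairs) / len(sample_a)


# Hypothetical pixel values: a noiseless map and two candidate reconstructions.
noiseless = [0.0, 1.0, 2.0, 3.0]
denoised = [0.1, 0.9, 2.1, 2.9]
noisy = [-1.0, 2.0, 0.5, 4.5]

print(wasserstein_1d(noiseless, denoised))
print(wasserstein_1d(noiseless, noisy))
```

A smaller distance to the noiseless sample indicates a closer match of the value distributions; the measure ignores where on the map each value sits, so it complements rather than replaces a spatial comparison.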

20 Dec 2021

We perform a systematic study of the α-particle excitation from its ground state $0_1^+$ to the $0_2^+$ resonance. The so-called monopole transition form factor is investigated via an electron scattering experiment in a broad $Q^2$-range (from 0.5 to 5.0 fm$^{-2}$). The precision of the new data dramatically supersedes that of older sets of data, each covering only a portion of the $Q^2$-range. The new data allow the determination of two coefficients in a low-momentum expansion, leading to a new puzzle. By confronting experiment with state-of-the-art theoretical calculations we observe that modern nuclear forces, including those derived within chiral effective field theory which are well tested on a variety of observables, fail to reproduce the excitation of the α-particle.

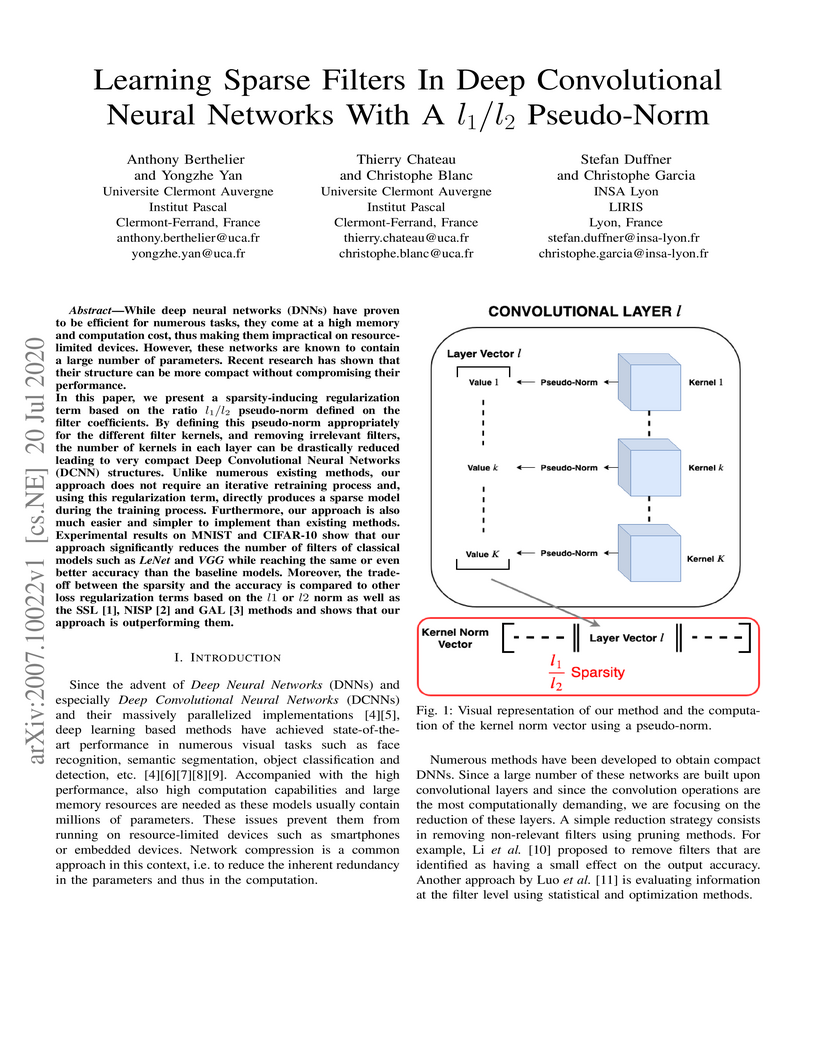

While deep neural networks (DNNs) have proven to be efficient for numerous tasks, they come at a high memory and computation cost, thus making them impractical on resource-limited devices. However, these networks are known to contain a large number of parameters. Recent research has shown that their structure can be more compact without compromising their performance. In this paper, we present a sparsity-inducing regularization term based on the ratio l1/l2 pseudo-norm defined on the filter coefficients. By defining this pseudo-norm appropriately for the different filter kernels, and removing irrelevant filters, the number of kernels in each layer can be drastically reduced leading to very compact Deep Convolutional Neural Networks (DCNN) structures. Unlike numerous existing methods, our approach does not require an iterative retraining process and, using this regularization term, directly produces a sparse model during the training process. Furthermore, our approach is also much easier and simpler to implement than existing methods. Experimental results on MNIST and CIFAR-10 show that our approach significantly reduces the number of filters of classical models such as LeNet and VGG while reaching the same or even better accuracy than the baseline models. Moreover, the trade-off between the sparsity and the accuracy is compared to other loss regularization terms based on the l1 or l2 norm as well as the SSL, NISP and GAL methods and shows that our approach is outperforming them.
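The l1/l2 ratio at the heart of this regularizer can be sketched in a few lines. The abstract does not give the exact per-kernel definition, so the version below simply sums the ratio over flattened filters and scales it by a strength factor; that aggregation, the `eps` guard, and the names `l1_over_l2` and `sparsity_penalty` are assumptions made for illustration.

```python
import math


def l1_over_l2(weights, eps=1e-12):
    """Ratio ||w||_1 / ||w||_2 for a flat list of filter coefficients.

    eps guards against division by zero for an all-zero filter.
    """
    l1 = sum(abs(w) for w in weights)
    l2 = math.sqrt(sum(w * w for w in weights))
    return l1 / (l2 + eps)


def sparsity_penalty(filters, strength=1e-3):
    """Assumed regularizer: strength times the summed l1/l2 ratio over all filters."""
    return strength * sum(l1_over_l2(f) for f in filters)


# Two hypothetical flattened filters with the same l2 norm.
dense = [0.5, 0.5, 0.5, 0.5]
sparse = [1.0, 0.0, 0.0, 0.0]

print(l1_over_l2(dense), l1_over_l2(sparse))
print(sparsity_penalty([dense, sparse]))
```

The ratio is invariant to rescaling a filter and is smallest when a single coefficient carries all the energy, which is why minimizing it favors sparse filters rather than merely small ones.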
