speech-synthesis

Researchers at Sharif University of Technology developed a service-oriented framework to integrate context-aware phonemization into real-time Text-to-Speech systems for Persian. This approach achieved an Ezafe F1 score of 90.08% and a homograph accuracy of 77.67%, significantly enhancing pronunciation naturalness while maintaining a real-time factor of 0.167.

A two-stage self-supervised framework integrates the Joint-Embedding Predictive Architecture (JEPA) with Density Adaptive Attention Mechanisms (DAAM) to learn robust speech representations. This approach generates efficient, reversible discrete speech tokens at an ultra-low rate of 47.5 tokens/sec, designed for seamless integration with large language models.

The RRPO framework mitigates reward hacking in LLM-based emotional Text-to-Speech systems by introducing a robust Reward Model that guides policy optimization. This approach yields superior perceptual quality, achieving E-MOS of 3.78 and N-MOS of 3.81, outperforming prior differentiable reinforcement learning and supervised fine-tuning methods.

08 Dec 2025

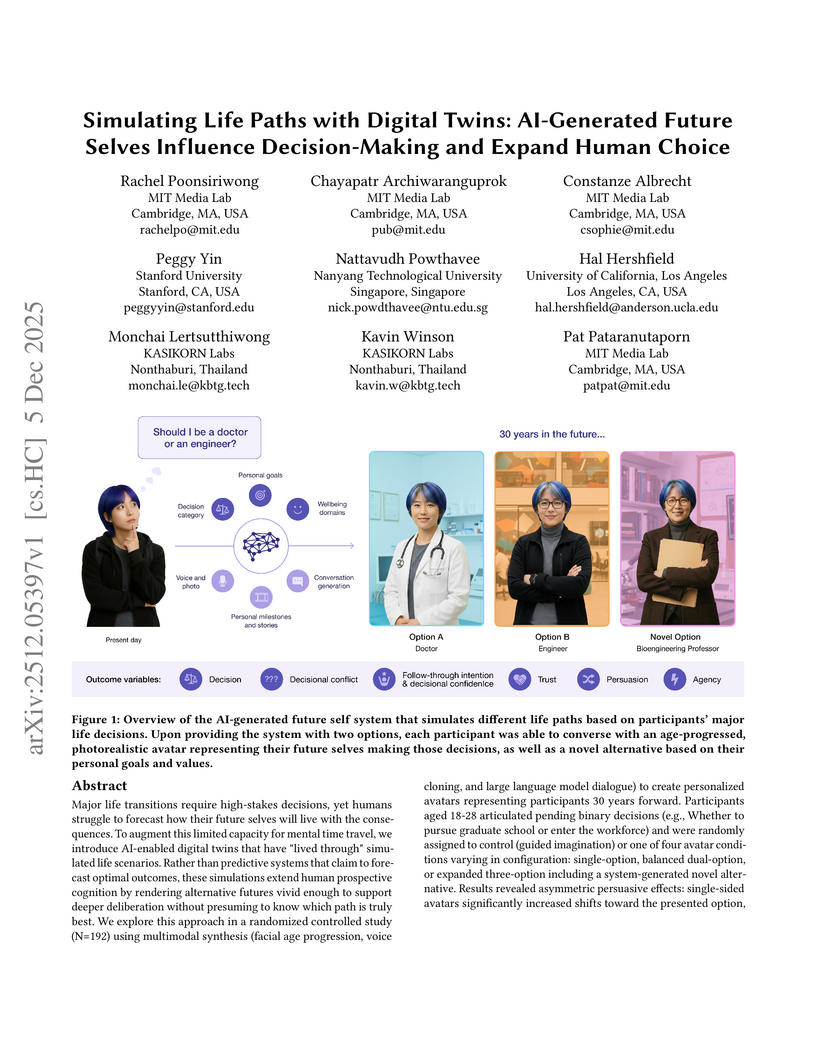

Researchers at MIT Media Lab and collaborators developed an AI system that generates personalized, multimodal future self avatars to help individuals explore major life decisions. The system demonstrates that interacting with these AI-generated digital twins can influence decision outcomes, expand the consideration of previously unthought-of choices, and highlights the importance of cognitive and meaning-making aspects over visual realism for effective decision support.

Recent advances in diffusion models have positioned them as powerful generative frameworks for speech synthesis, demonstrating substantial improvements in audio quality and stability. Nevertheless, their effectiveness in vocoders conditioned on mel spectrograms remains constrained, particularly when the conditioning diverges from the training distribution. The recently proposed GLA-Grad model introduced a phase-aware extension to the WaveGrad vocoder that integrated the Griffin-Lim algorithm (GLA) into the reverse process to reduce inconsistencies between generated signals and conditioning mel spectrogram. In this paper, we further improve GLA-Grad through an innovative choice in how to apply the correction. Particularly, we compute the correction term only once, with a single application of GLA, to accelerate the generation process. Experimental results demonstrate that our method consistently outperforms the baseline models, particularly in out-of-domain scenarios.

02 Dec 2025

We propose MAViD, a novel Multimodal framework for Audio-Visual Dialogue understanding and generation. Existing approaches primarily focus on non-interactive systems and are limited to producing constrained and unnatural human this http URL primary challenge of this task lies in effectively integrating understanding and generation capabilities, as well as achieving seamless multimodal audio-video fusion. To solve these problems, we propose a Conductor-Creator architecture that divides the dialogue system into two primary this http URL Conductor is tasked with understanding, reasoning, and generating instructions by breaking them down into motion and speech components, thereby enabling fine-grained control over interactions. The Creator then delivers interactive responses based on these this http URL, to address the difficulty of generating long videos with consistent identity, timbre, and tone using dual DiT structures, the Creator adopts a structure that combines autoregressive (AR) and diffusion models. The AR model is responsible for audio generation, while the diffusion model ensures high-quality video this http URL, we propose a novel fusion module to enhance connections between contextually consecutive clips and modalities, enabling synchronized long-duration audio-visual content this http URL experiments demonstrate that our framework can generate vivid and contextually coherent long-duration dialogue interactions and accurately interpret users' multimodal queries.

The globalization of education and rapid growth of online learning have made localizing educational content a critical challenge. Lecture materials are inherently multimodal, combining spoken audio with visual slides, which requires systems capable of processing multiple input modalities. To provide an accessible and complete learning experience, translations must preserve all modalities: text for reading, slides for visual understanding, and speech for auditory learning. We present \textbf{BOOM}, a multimodal multilingual lecture companion that jointly translates lecture audio and slides to produce synchronized outputs across three modalities: translated text, localized slides with preserved visual elements, and synthesized speech. This end-to-end approach enables students to access lectures in their native language while aiming to preserve the original content in its entirety. Our experiments demonstrate that slide-aware transcripts also yield cascading benefits for downstream tasks such as summarization and question answering. We release our Slide Translation code at this https URL and integrate it in Lecture Translator at this https URL}\footnote{All released code and models are licensed under the MIT License.

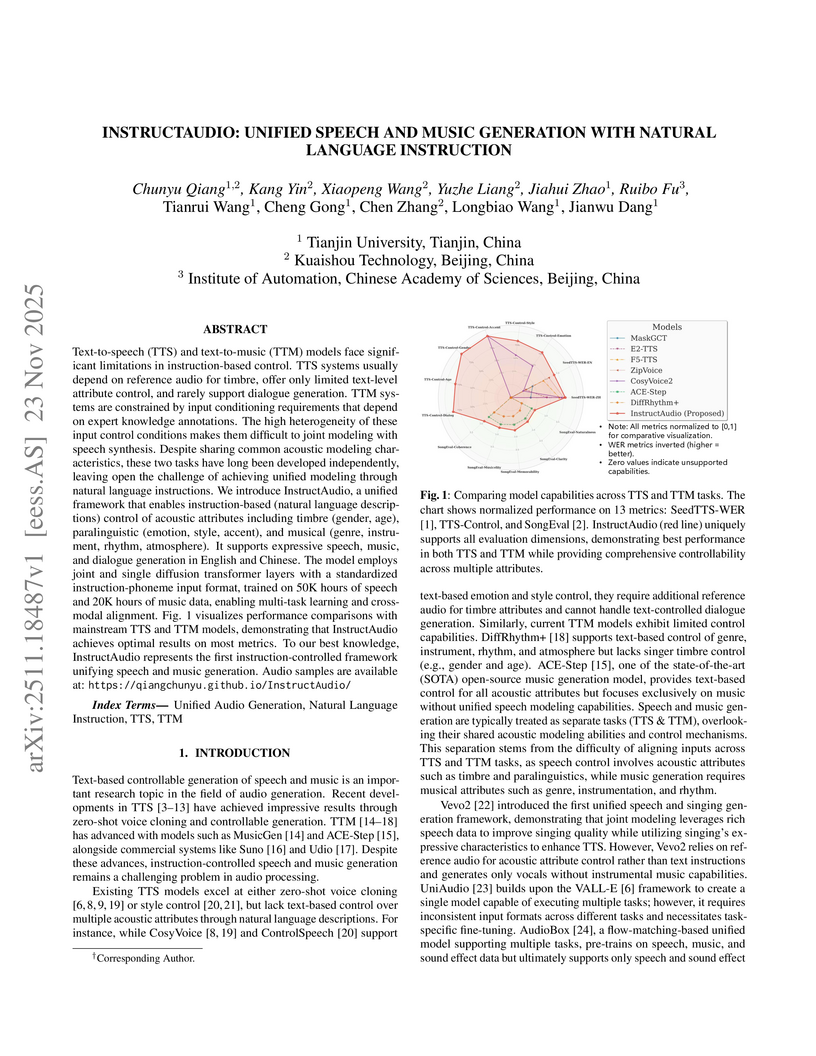

InstructAudio presents a unified framework for generating speech and music from natural language instructions, leveraging a Multimodal Diffusion Transformer to provide comprehensive control without requiring reference audio. The system achieves superior performance in instruction-based Text-to-Speech, including novel dialogue synthesis capabilities, and maintains competitive results in Text-to-Music.

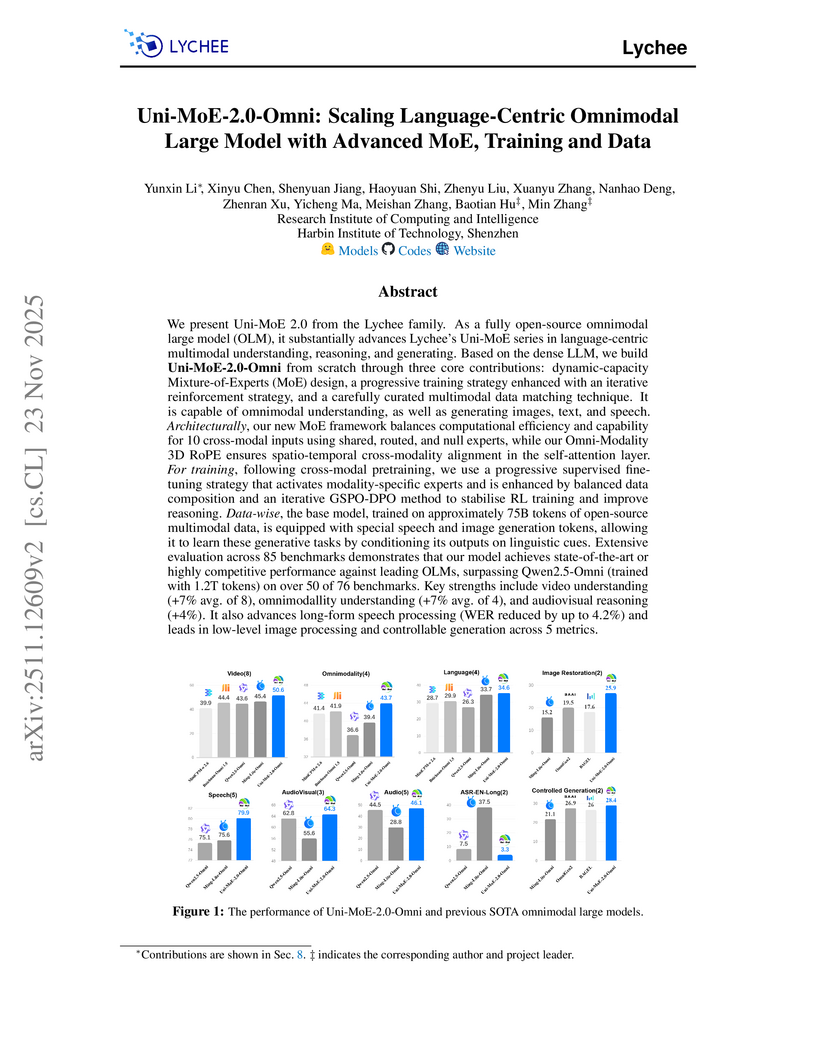

Uni-MoE-2.0-Omni, an open-source omnimodal large model from Harbin Institute of Technology, Shenzhen, unifies understanding and generation across text, images, audio, and video through an advanced Mixture-of-Experts architecture and progressive training. The model achieved state-of-the-art or competitive performance across 85 multimodal benchmarks, notably outperforming Qwen2.5-Omni on over 50 of 76 benchmarks and showing strong capabilities in complex reasoning, long-form speech understanding, and controllable image generation.

13 Nov 2025

MTR-DUPLEXBENCH introduces a comprehensive benchmark for evaluating Full-Duplex Speech Language Models (FD-SLMs) across multi-round conversations, utilizing a novel turn segmentation method and diverse metrics. Experiments show existing FD-SLMs face challenges in maintaining consistent dialogue quality, conversational feature handling, and instruction following over extended interactions.

Aligning large generative models with human feedback is a critical challenge. In speech synthesis, this is particularly pronounced due to the lack of a large-scale human preference dataset, which hinders the development of models that truly align with human perception. To address this, we introduce SpeechJudge, a comprehensive suite comprising a dataset, a benchmark, and a reward model centered on naturalness--one of the most fundamental subjective metrics for speech synthesis. First, we present SpeechJudge-Data, a large-scale human feedback corpus of 99K speech pairs. The dataset is constructed using a diverse set of advanced zero-shot text-to-speech (TTS) models across diverse speech styles and multiple languages, with human annotations for both intelligibility and naturalness preference. From this, we establish SpeechJudge-Eval, a challenging benchmark for speech naturalness judgment. Our evaluation reveals that existing metrics and AudioLLMs struggle with this task; the leading model, Gemini-2.5-Flash, achieves less than 70% agreement with human judgment, highlighting a significant gap for improvement. To bridge this gap, we develop SpeechJudge-GRM, a generative reward model (GRM) based on Qwen2.5-Omni-7B. It is trained on SpeechJudge-Data via a two-stage post-training process: Supervised Fine-Tuning (SFT) with Chain-of-Thought rationales followed by Reinforcement Learning (RL) with GRPO on challenging cases. On the SpeechJudge-Eval benchmark, the proposed SpeechJudge-GRM demonstrates superior performance, achieving 77.2% accuracy (and 79.4% after inference-time scaling @10) compared to a classic Bradley-Terry reward model (72.7%). Furthermore, SpeechJudge-GRM can be also employed as a reward function during the post-training of speech generation models to facilitate their alignment with human preferences.

We present Step-Audio-EditX, the first open-source LLM-based audio model excelling at expressive and iterative audio editing encompassing emotion, speaking style, and paralinguistics alongside robust zero-shot text-to-speech (TTS) capabilities. Our core innovation lies in leveraging only large-margin synthetic data, which circumvents the need for embedding-based priors or auxiliary modules. This large-margin learning approach enables both iterative control and high expressivity across voices, and represents a fundamental pivot from the conventional focus on representation-level disentanglement. Evaluation results demonstrate that Step-Audio-EditX surpasses both MiniMax-2.6-hd and Doubao-Seed-TTS-2.0 in emotion editing and other fine-grained control tasks.

UniAVGen introduces a unified framework for joint audio and video generation that uses asymmetric cross-modal interactions, face-aware modulation, and modality-aware classifier-free guidance. The model generates high-fidelity audio and video with robust lip synchronization and emotional consistency, outperforming previous joint methods while requiring 95% fewer training samples and demonstrating strong generalization to diverse visual styles.

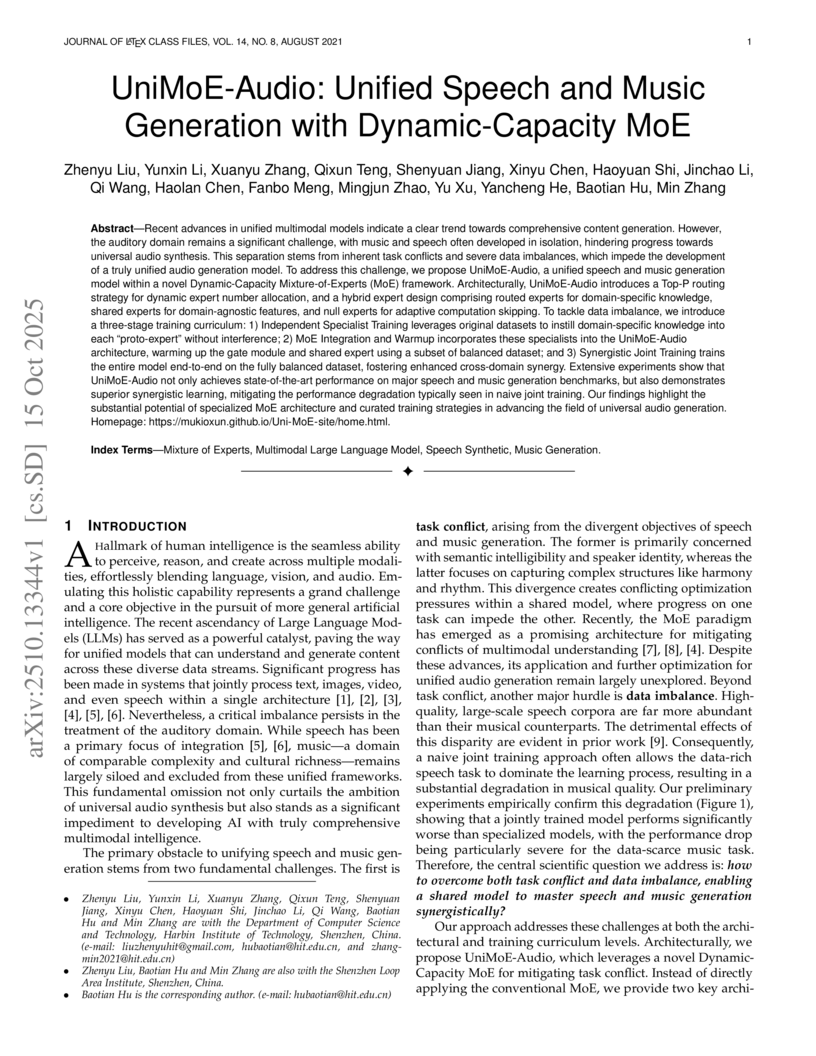

UniMoE-Audio achieves unified speech and music generation by employing a Dynamic-Capacity Mixture-of-Experts architecture and a three-stage training curriculum. This approach addresses task conflict and data imbalance, yielding state-of-the-art perceptual quality in speech synthesis (UTMOS 4.36 on SeedTTS-EN) and superior aesthetic scores in music generation.

NExT-OMNI introduces the first open-source omnimodal foundation model leveraging Discrete Flow Matching, achieving unified understanding, generation, and retrieval across text, image, video, and audio. It demonstrates superior performance over autoregressive models in multimodal retrieval, competitive results in multi-turn interactions, and improves inference speed by 1.2x.

Text-to-audio (TTA) generation with fine-grained control signals, e.g., precise timing control or intelligible speech content, has been explored in recent works. However, constrained by data scarcity, their generation performance at scale is still compromised. In this study, we recast controllable TTA generation as a multi-task learning problem and introduce a progressive diffusion modeling approach, ControlAudio. Our method adeptly fits distributions conditioned on more fine-grained information, including text, timing, and phoneme features, through a step-by-step strategy. First, we propose a data construction method spanning both annotation and simulation, augmenting condition information in the sequence of text, timing, and phoneme. Second, at the model training stage, we pretrain a diffusion transformer (DiT) on large-scale text-audio pairs, achieving scalable TTA generation, and then incrementally integrate the timing and phoneme features with unified semantic representations, expanding controllability. Finally, at the inference stage, we propose progressively guided generation, which sequentially emphasizes more fine-grained information, aligning inherently with the coarse-to-fine sampling nature of DiT. Extensive experiments show that ControlAudio achieves state-of-the-art performance in terms of temporal accuracy and speech clarity, significantly outperforming existing methods on both objective and subjective evaluations. Demo samples are available at: this https URL.

OVI presents a unified generative AI framework for synchronized audio-video content, employing a symmetric twin backbone Diffusion Transformer with blockwise cross-modal fusion. The model achieves clear human preference over existing open-source solutions for combined audio and video quality, along with improved synchronization.

02 Oct 2025

End-to-end speech-in speech-out dialogue systems are emerging as a powerful alternative to traditional ASR-LLM-TTS pipelines, generating more natural, expressive responses with significantly lower latency. However, these systems remain prone to hallucinations due to limited factual grounding. While text-based dialogue systems address this challenge by integrating tools such as web search and knowledge graph APIs, we introduce the first approach to extend tool use directly into speech-in speech-out systems. A key challenge is that tool integration substantially increases response latency, disrupting conversational flow. To mitigate this, we propose Streaming Retrieval-Augmented Generation (Streaming RAG), a novel framework that reduces user-perceived latency by predicting tool queries in parallel with user speech, even before the user finishes speaking. Specifically, we develop a post-training pipeline that teaches the model when to issue tool calls during ongoing speech and how to generate spoken summaries that fuse audio queries with retrieved text results, thereby improving both accuracy and responsiveness. To evaluate our approach, we construct AudioCRAG, a benchmark created by converting queries from the publicly available CRAG dataset into speech form. Experimental results demonstrate that our streaming RAG approach increases QA accuracy by up to 200% relative (from 11.1% to 34.2% absolute) and further enhances user experience by reducing tool use latency by 20%. Importantly, our streaming RAG approach is modality-agnostic and can be applied equally to typed input, paving the way for more agentic, real-time AI assistants.

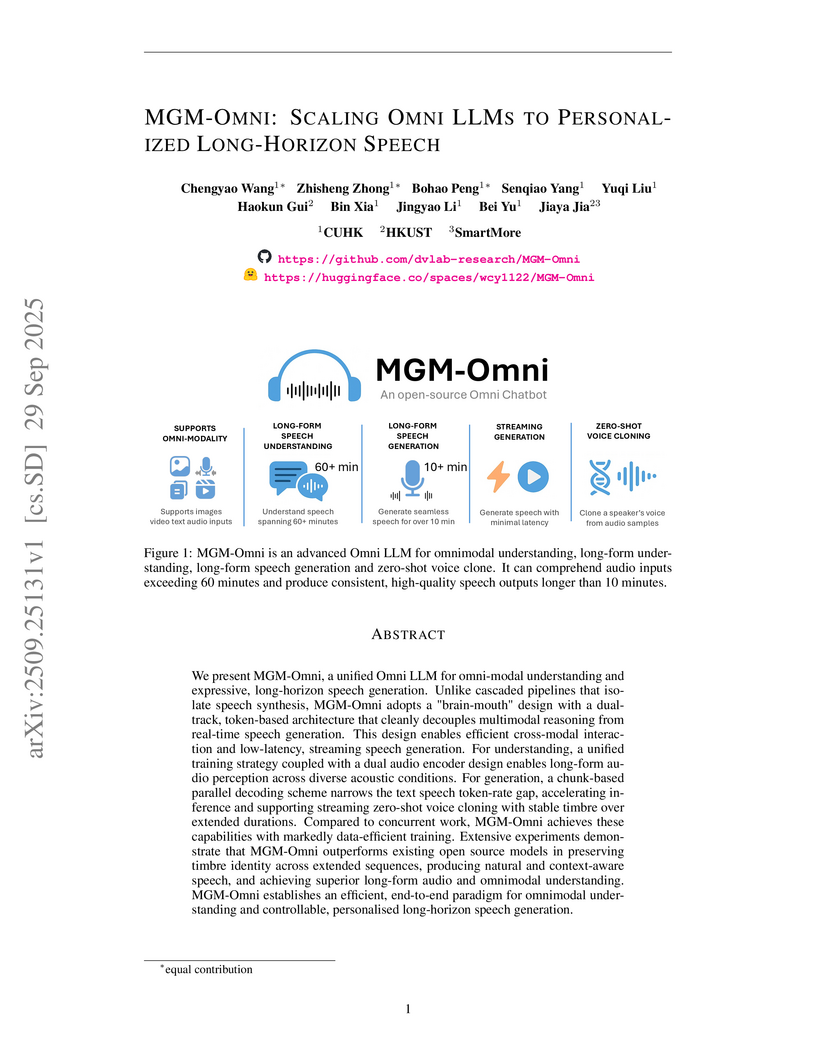

MGM-Omni presents a unified omni-modal large language model that excels in understanding diverse inputs, including long-form audio, and generating expressive, personalized speech. It achieves a 94% success rate in long audio understanding tests up to 75 minutes and generates speech with a low Real-Time Factor of 0.19, employing a data-efficient training approach with less than 400k hours of audio.

Researchers at Yonsei University developed UNIVERSR, a vocoder-free flow matching model for audio super-resolution that directly generates full-band spectral coefficients. The model achieves state-of-the-art perceptual quality across diverse audio types and upsampling factors, outperforming two-stage vocoder-based methods and showing improved efficiency over diffusion models.

There are no more papers matching your filters at the moment.