Ask or search anything...

Diffusion Soup introduces a method for combining multiple text-to-image diffusion models by simply averaging their weights, enabling efficient continual learning, machine unlearning, and zero-shot style mixing. The approach achieves superior performance over individual or monolithically trained models, generating higher quality images with improved text-to-image alignment and reduced memorization, all without increasing inference costs.

View blogDATAADVISOR introduces a dynamic, principles-guided framework for curating high-quality safety alignment data for Large Language Models. It continuously assesses an existing dataset, identifies weaknesses in safety coverage, and then generates new, targeted examples to address those gaps, leading to improved safety performance without compromising utility.

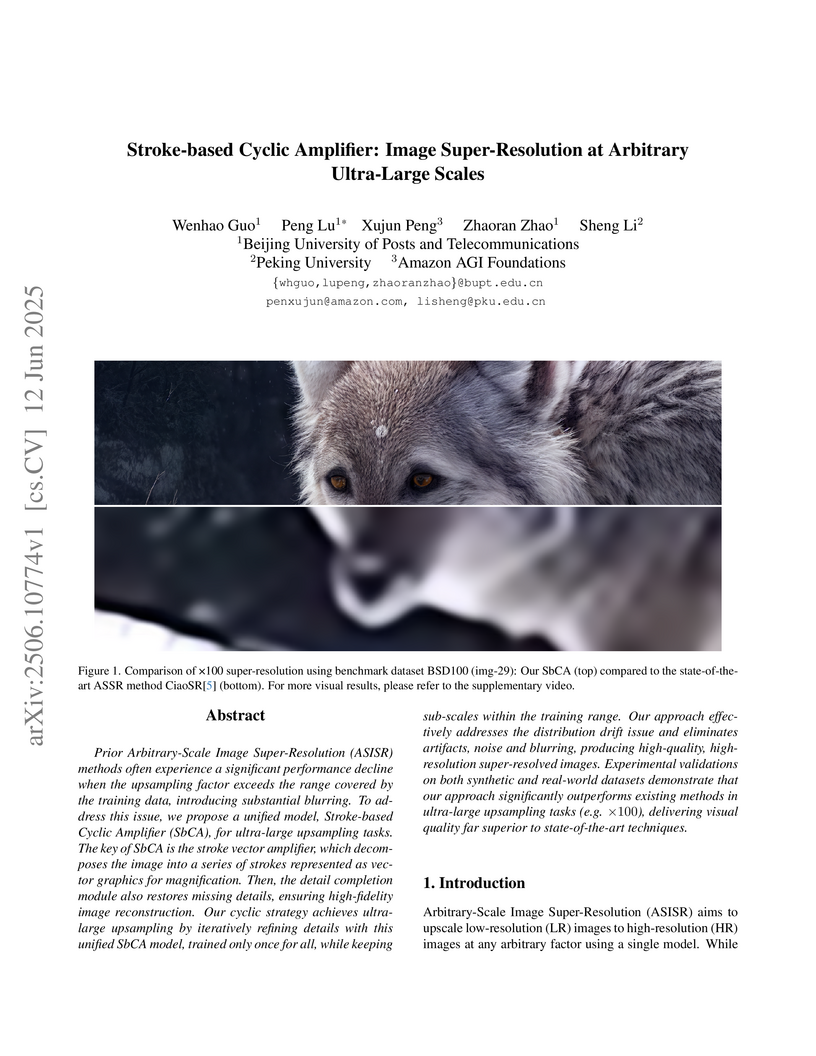

View blogResearchers from Beijing University of Posts and Telecommunications, Peking University, and Amazon AGI Foundations developed the Stroke-based Cyclic Amplifier (SbCA) for arbitrary ultra-large scale image super-resolution. The unified model uses a novel stroke-based approach to mitigate distribution drift and prevent artifact accumulation during cyclic amplification, achieving high perceptual quality for factors up to ×100 on both synthetic and real-world datasets.

View blogResearchers from Amazon AGI Foundations, Télécom Paris, UNC Chapel Hill, and UCLA introduced DECRIM, a self-correction pipeline that enhances large language models' ability to follow instructions with multiple constraints. DECRIM enables models like Mistral v0.2 to achieve constraint satisfaction rates comparable to or exceeding GPT-4 on a new benchmark, REALINSTRUCT, which is derived from real user interactions.

View blogThe COKE (Chain-of-Keywords) framework introduces a structured approach to automated creative story evaluation, addressing the challenges of subjective assessment and the "objective mismatch" found in large language models. This method utilizes diverse keyword sequences to provide fine-grained, customizable, and interpretable evaluations, achieving approximately 0.30 Pearson correlation with human population averages and outperforming larger LLMs with significantly fewer parameters.

View blogResearchers from Michigan State University and Amazon AGI Foundations investigated leveraging Large Language Models (LLMs) for automated dialogue quality assessment. The study identified that larger, instruction-tuned models, few-shot learning with semantically retrieved examples, supervised fine-tuning, and analysis-first chain-of-thought reasoning consistently improve LLM performance in aligning with human judgments for dialogue evaluation.

View blog

University of Southern California

University of Southern California

Peking University

Peking University

UCLA

UCLA

Michigan State University

Michigan State University