CHU Lille

In MRI studies, the aggregation of imaging data from multiple acquisition sites enhances sample size but may introduce site-related variabilities that hinder consistency in subsequent analyses. Deep learning methods for image translation have emerged as a solution for harmonizing MR images across sites. In this study, we introduce IGUANe (Image Generation with Unified Adversarial Networks), an original 3D model that leverages the strengths of domain translation and straightforward application of style transfer methods for multicenter brain MR image harmonization. IGUANe extends CycleGAN by integrating an arbitrary number of domains for training through a many-to-one architecture. The framework based on domain pairs enables the implementation of sampling strategies that prevent confusion between site-related and biological variabilities. During inference, the model can be applied to any image, even from an unknown acquisition site, making it a universal generator for harmonization. Trained on a dataset comprising T1-weighted images from 11 different scanners, IGUANe was evaluated on data from unseen sites. The assessments included the transformation of MR images with traveling subjects, the preservation of pairwise distances between MR images within domains, the evolution of volumetric patterns related to age and Alzheimer's disease (AD), and the performance in age regression and patient classification tasks. Comparisons with other harmonization and normalization methods suggest that IGUANe better preserves individual information in MR images and is more suitable for maintaining and reinforcing variabilities related to age and AD. Future studies may further assess IGUANe in other multicenter contexts, either using the same model or retraining it for applications to different image modalities. IGUANe is available at this https URL

Depression is a complex mental disorder characterized by a diverse range of observable and measurable indicators that go beyond traditional subjective assessments. Recent research has increasingly focused on objective, passive, and continuous monitoring using wearable devices to gain more precise insights into the physiological and behavioral aspects of depression. However, most existing studies primarily distinguish between healthy and depressed individuals, adopting a binary classification that fails to capture the heterogeneity of depressive disorders. In this study, we leverage wearable devices to predict depression subtypes, specifically unipolar and bipolar depression, aiming to identify distinctive biomarkers that could enhance diagnostic precision and support personalized treatment strategies. To this end, we introduce the CALYPSO dataset, designed for non-invasive detection of depression subtypes and symptomatology through physiological and behavioral signals, including blood volume pulse, electrodermal activity, body temperature, and three-axis acceleration. Additionally, we establish a benchmark on the dataset using well-known features and standard machine learning methods. Preliminary results indicate that features related to physical activity, extracted from accelerometer data, are the most effective in distinguishing between unipolar and bipolar depression, achieving an accuracy of 96.77%. Temperature-based features also showed high discriminative power, reaching an accuracy of 93.55%. These findings highlight the potential of physiological and behavioral monitoring for improving the classification of depressive subtypes, paving the way for more tailored clinical interventions.

25 Apr 2024

In a world increasingly awash with data, the need to extract meaningful insights from data has never been more crucial. Functional Data Analysis (FDA) goes beyond traditional data points, treating data as dynamic, continuous functions that capture the nuances of ever-changing phenomena. This article introduces FDA, which merges statistics with real-world complexity, and is intended for readers with mathematical skills but no background in FDA.
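As a minimal illustration of treating data as functions (not taken from the article), the sketch below turns irregular, noisy samples into a single curve by least-squares fitting of a small Fourier basis; the curve and its derivative can then be evaluated anywhere on [0, 1]. The basis type, its size (`N_HARMONICS`), and all function names are assumptions chosen for illustration.

```python
import numpy as np

N_HARMONICS = 3  # hypothetical basis size: 1 constant + 3 sin/cos pairs

def fourier_basis(t, n_harmonics=N_HARMONICS):
    """Evaluate a Fourier basis on [0, 1]: a constant plus sin/cos pairs."""
    t = np.asarray(t, dtype=float)
    cols = [np.ones_like(t)]
    for k in range(1, n_harmonics + 1):
        cols.append(np.sin(2 * np.pi * k * t))
        cols.append(np.cos(2 * np.pi * k * t))
    return np.column_stack(cols)

def fit_function(t_obs, y_obs, n_harmonics=N_HARMONICS):
    """Least-squares basis coefficients that turn discrete samples into a curve."""
    coef, *_ = np.linalg.lstsq(fourier_basis(t_obs, n_harmonics), y_obs, rcond=None)
    return coef

def evaluate(coef, t, n_harmonics=N_HARMONICS):
    """Value of the fitted curve at arbitrary points t."""
    return fourier_basis(t, n_harmonics) @ coef

def derivative(coef, t, n_harmonics=N_HARMONICS):
    """Analytic first derivative of the fitted curve (the constant term drops out)."""
    t = np.asarray(t, dtype=float)
    out = np.zeros_like(t)
    for k in range(1, n_harmonics + 1):
        a, b = coef[2 * k - 1], coef[2 * k]  # coefficients of sin and cos
        w = 2 * np.pi * k
        out += a * w * np.cos(w * t) - b * w * np.sin(w * t)
    return out

# Usage: 50 noisy, irregularly spaced samples of sin(2*pi*t) become one smooth function.
rng = np.random.default_rng(0)
t_obs = np.sort(rng.uniform(0, 1, 50))
y_obs = np.sin(2 * np.pi * t_obs) + 0.1 * rng.normal(size=t_obs.size)
coef = fit_function(t_obs, y_obs)
print(evaluate(coef, [0.25]))   # approximately 1
print(derivative(coef, [0.0]))  # roughly 2*pi
```

A Fourier basis suits periodic phenomena; B-splines are a common alternative for non-periodic curves.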

07 Mar 2023

Real World Data (RWD) hold great promise for improving the quality of care. However, specific infrastructures and methodologies are required to derive robust knowledge and bring innovations to the patient. Drawing on a national case study of the governance of the 32 French regional and university hospitals, we highlight key aspects of modern Clinical Data Warehouses (CDWs): governance, transparency, types of data, data reuse, technical tools, documentation, and data quality control processes. Semi-structured interviews and a review of reported studies on French CDWs were conducted from March to November 2022. Out of the 32 regional and university hospitals in France, 14 have a CDW in production, 5 are experimenting, 5 have a prospective CDW project, and 8 did not have any CDW project at the time of writing. The implementation of CDWs in France dates from 2011 and accelerated in late 2020. From this case study, we draw some general guidelines for CDWs. The current orientation of CDWs towards research requires efforts in governance stabilization, standardization of the data schema, and development of data quality and data documentation. Particular attention must be paid to the sustainability of the warehouse teams and to multi-level governance. The transparency of studies and of data transformation tools must improve to allow successful multicentric data reuse as well as innovations in routine care.

Image synthesis of three-dimensional (3D) medical images via Generative Adversarial Networks (GANs) has great potential that can be extended to many medical applications, such as image enhancement and disease progression modeling. However, current GAN technologies for 3D medical image synthesis need to be significantly improved to be readily adapted to real-world medical problems. In this paper, we extend the state-of-the-art StyleGAN2 model, which natively works with two-dimensional images, to enable 3D image synthesis. In addition to image synthesis, we investigate the controllability and interpretability of the 3D-StyleGAN via style vectors inherited from the original StyleGAN2, which are highly suitable for medical applications: (i) the latent space projection and reconstruction of unseen real images, and (ii) style mixing. We demonstrate the 3D-StyleGAN's performance and feasibility with ~12,000 three-dimensional full-brain MR T1 images, although it can be applied to any 3D volumetric images. Furthermore, we explore different hyperparameter configurations to investigate potential improvements of the image synthesis with larger networks. The code and pre-trained networks are available online: this https URL.
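In StyleGAN-type generators, a mapping network turns a latent code z into an intermediate vector w, and each synthesis layer is modulated by a style derived from w; style mixing feeds one w to the early (coarse) layers and another w to the later (fine) layers. The sketch below only illustrates that bookkeeping: the toy mapping network, the latent size, the layer count, and the crossover point are hypothetical, and no 3D synthesis network is included.

```python
import numpy as np

def mapping_network(z, weights):
    """Toy stand-in for the StyleGAN2 mapping network: an MLP from z to w."""
    h = z
    for W in weights:
        a = h @ W
        h = np.maximum(a, 0.2 * a)  # leaky ReLU with slope 0.2
    return h

def style_mixing(w_a, w_b, n_layers, crossover):
    """Per-layer style vectors: layers [0, crossover) receive w_a (coarse styles),
    layers [crossover, n_layers) receive w_b (finer styles)."""
    if not 0 <= crossover <= n_layers:
        raise ValueError("crossover must lie in [0, n_layers]")
    return np.stack([w_a if i < crossover else w_b for i in range(n_layers)])

# Usage with hypothetical sizes: 16-dim latents, 2 mapping layers, 10 synthesis layers.
rng = np.random.default_rng(0)
weights = [rng.normal(scale=0.25, size=(16, 16)) for _ in range(2)]
w_a = mapping_network(rng.normal(size=16), weights)
w_b = mapping_network(rng.normal(size=16), weights)
styles = style_mixing(w_a, w_b, n_layers=10, crossover=4)
print(styles.shape)  # (10, 16)
```

Moving the crossover earlier hands more of the layers to `w_b`, which is how style mixing trades coarse anatomy from one latent against finer detail from another.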

13 Apr 2020

The main goal of this paper is to construct a wavelet-type random series representation for a random field X, defined by a multistable stochastic integral, which generates a multifractional multistable Riemann-Liouville (mmRL) process Y. Such a representation provides, among other things, an efficient method for simulating paths of Y. To obtain it, we expand the integrand associated with X in the Haar basis and use some fundamental properties of multistable stochastic integrals. Then, thanks to Abel's summation rule and Doob's maximal inequality for discrete submartingales, we show that this wavelet-type random series representation of X converges in a strong sense: almost surely in some spaces of continuous functions. We also determine an estimate of its almost sure rate of convergence in these spaces.
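The abstract expands the integrand in the Haar basis. The deterministic sketch below is an illustration only (it does not simulate a multistable integral): it computes the coefficients of a function f on [0, 1] against the L²-normalised Haar wavelets ψ_{j,k}(t) = 2^{j/2} ψ(2^j t − k), where ψ = 1 on [0, 1/2) and −1 on [1/2, 1). Keeping levels j ≤ J reproduces the averages of f on dyadic cells of length 2^{−(J+1)}, which is the kind of truncation a simulation scheme relies on. The grid size and function names are assumptions.

```python
import numpy as np

def haar_psi(t, j, k):
    """L2-normalised Haar wavelet psi_{j,k}(t) = 2^{j/2} psi(2^j t - k)."""
    u = (2.0 ** j) * np.asarray(t, dtype=float) - k
    up = ((0.0 <= u) & (u < 0.5)).astype(float)
    down = ((0.5 <= u) & (u < 1.0)).astype(float)
    return 2.0 ** (j / 2) * (up - down)

def haar_coefficients(f, max_level, n_grid=2 ** 14):
    """Approximate <f, 1> and <f, psi_{j,k}> on [0, 1] with a midpoint rule."""
    t = (np.arange(n_grid) + 0.5) / n_grid
    ft = f(t)
    c0 = ft.mean()
    coeffs = {(j, k): np.mean(ft * haar_psi(t, j, k))
              for j in range(max_level + 1) for k in range(2 ** j)}
    return c0, coeffs

def haar_partial_sum(c0, coeffs, t):
    """Truncated Haar series c0 + sum_{j,k} c_{j,k} psi_{j,k}(t)."""
    t = np.asarray(t, dtype=float)
    out = np.full(t.shape, c0, dtype=float)
    for (j, k), c in coeffs.items():
        out += c * haar_psi(t, j, k)
    return out

c0, coeffs = haar_coefficients(lambda t: t, max_level=2)
print(haar_partial_sum(c0, coeffs, [0.3]))  # approximately [0.3125], the mean of t on [0.25, 0.375)
```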

31 Mar 2025

Hermite processes are paradigmatic examples of stochastic processes that can belong to a Wiener chaos of any order; the well-known fractional Brownian motion, which belongs to the Gaussian first-order Wiener chaos, and the Rosenblatt process, which belongs to the non-Gaussian second-order Wiener chaos, are two particular cases. Apart from these two particular cases, no simulation method for sample paths of Hermite processes has been available so far. The goal of our article is to introduce a new method that potentially allows the simulation of sample paths of any Hermite process, and even of any generalized Hermite process. Our starting point is the representation of the latter process as a random wavelet-type series, obtained in our very recent paper [3]. From it, we construct a "concrete" sequence of piecewise linear continuous random functions that almost surely approximate sample paths of this process for the uniform norm on any compact interval, and we provide an almost sure estimate of the approximation error. Then, for the Rosenblatt process and, more importantly, for the third-order Hermite process, we propose algorithms to implement this sequence and illustrate them with several simulations. Python routines implementing these synthesis procedures are available upon request.

Coronavirus disease 2019 (COVID-19) is an infectious disease whose first symptoms are similar to those of the flu. COVID-19 first appeared in China and very quickly spread to the rest of the world, causing the 2019-20 coronavirus pandemic. In many cases, this disease causes pneumonia. Since pulmonary infections can be observed in radiography images, this paper investigates deep learning methods for automatically analyzing query chest X-ray images, with the hope of bringing precision tools to health professionals for COVID-19 screening and the diagnosis of confirmed patients. In this context, training datasets, deep learning architectures, and analysis strategies have been experimented with on publicly available sets of chest X-ray images. Tailored deep learning models are proposed to detect pneumonia infection cases, notably viral cases. It is assumed that viral pneumonia cases detected during a COVID-19 epidemic are highly likely to be COVID-19 infections. Moreover, easy-to-apply health indicators are proposed for estimating infection status and predicting patient status from the detected pneumonia cases. Experimental results show that deep learning models can be trained on publicly available sets of chest X-ray images to screen for viral pneumonia. Chest X-ray test images of COVID-19-infected patients are successfully diagnosed by the detection models retained for their performance. The efficiency of the proposed health indicators is highlighted through simulated scenarios of patients presenting infections and health problems, combining real and synthetic health data.

Objective: Brain-predicted age difference (BrainAGE) is a neuroimaging biomarker reflecting brain health. However, training robust BrainAGE models requires large datasets, often restricted by privacy concerns. This study evaluates the performance of federated learning (FL) for BrainAGE estimation in ischemic stroke patients treated with mechanical thrombectomy, and investigates its association with clinical phenotypes and functional outcomes.

Methods: We used FLAIR brain images from 1674 stroke patients across 16 hospital centers. We implemented standard machine learning and deep learning models for BrainAGE estimates under three data management strategies: centralized learning (pooled data), FL (local training at each site), and single-site learning. We reported prediction errors and examined associations between BrainAGE and vascular risk factors (e.g., diabetes mellitus, hypertension, smoking), as well as functional outcomes at three months post-stroke. Logistic regression evaluated BrainAGE's predictive value for these outcomes, adjusting for age, sex, vascular risk factors, stroke severity, time between MRI and arterial puncture, prior intravenous thrombolysis, and recanalisation outcome.

Results: While centralized learning yielded the most accurate predictions, FL consistently outperformed single-site models. BrainAGE was significantly higher in patients with diabetes mellitus across all models. Comparisons between patients with good and poor functional outcomes, as well as multivariate predictions of these outcomes, showed a significant association between BrainAGE and post-stroke recovery.

Conclusion: FL enables accurate age predictions without data centralization. The strong association between BrainAGE, vascular risk factors, and post-stroke recovery highlights its potential for prognostic modeling in stroke care.
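BrainAGE is the predicted brain age minus the chronological age. The abstract does not name the FL aggregation scheme, so the sketch below assumes federated averaging (FedAvg) on a plain linear age model: each hypothetical site runs a few gradient steps on its own data, and only the model weights are shared and averaged, weighted by site size. The synthetic features stand in for image-derived inputs and are not FLAIR data.

```python
import numpy as np

def local_update(w, X, y, lr=0.1, epochs=5):
    """A few epochs of full-batch gradient descent on one site's mean squared error."""
    for _ in range(epochs):
        grad = X.T @ (X @ w - y) / len(y)
        w = w - lr * grad
    return w

def fedavg(sites, n_features, rounds=200, lr=0.1, epochs=5):
    """Federated averaging: only weight vectors leave each site, never the images."""
    w = np.zeros(n_features)
    sizes = np.array([len(y) for _, y in sites], dtype=float)
    for _ in range(rounds):
        local = [local_update(w.copy(), X, y, lr, epochs) for X, y in sites]
        w = np.average(local, axis=0, weights=sizes)  # sample-size-weighted mean
    return w

def brain_age_gap(w, X, chronological_age):
    """BrainAGE = predicted brain age - chronological age (positive: 'older-looking' brain)."""
    return X @ w - chronological_age

# Usage with synthetic data: three hypothetical centres of different sizes.
rng = np.random.default_rng(42)
true_w = np.array([65.0, 8.0, -3.0])  # intercept + two made-up image features
sites = []
for n in (120, 60, 30):
    X = np.column_stack([np.ones(n), rng.normal(size=(n, 2))])
    sites.append((X, X @ true_w + rng.normal(scale=2.0, size=n)))

w_fl = fedavg(sites, n_features=3)
X_all = np.vstack([X for X, _ in sites])
y_all = np.concatenate([y for _, y in sites])
w_central, *_ = np.linalg.lstsq(X_all, y_all, rcond=None)  # pooled-data reference
print(np.round(w_fl, 2), np.round(w_central, 2))
```

In this toy setting the FedAvg weights end up close to the pooled (centralized) fit; with heterogeneous sites and deep networks, the gap can be larger.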

13 Dec 2017

CNRS, Imperial College London, Technische Universität Dresden, Univ. Lille, INSERM, École Polytechnique Fédérale de Lausanne, Univ Montpellier, CHU Lille, University Hospital Schleswig-Holstein, CHU Montpellier, Institut Pasteur de Lille, Institut Cochin, German Center for Diabetes Research, German Institute of Human Nutrition Potsdam-Rehbrücke, Skåne University Hospital Malmö, Université Paris Descartes

Type 2 diabetes (T2D) is closely linked with non-alcoholic fatty liver disease (NAFLD) and hepatic insulin resistance, but the mechanisms involved are still elusive. Using DNA methylome and transcriptome analyses of livers from obese individuals, we found that both hypomethylation at a CpG site in PDGFA (encoding platelet-derived growth factor alpha) and PDGFA overexpression are associated with increased T2D risk, hyperinsulinemia, increased insulin resistance, and increased steatohepatitis risk. Both genetic risk score studies and human cell modeling pointed to a causal impact of high insulin levels on PDGFA CpG site hypomethylation, PDGFA overexpression, and increased PDGF-AA secretion from the liver. We found that PDGF-AA secretion further stimulates its own expression through protein kinase C activity and contributes to insulin resistance through decreased expression of both insulin receptor substrate 1 and the insulin receptor. Importantly, hepatocyte insulin sensitivity can be restored by PDGF-AA blocking antibodies, PDGF receptor inhibitors, and metformin. Conclusion: Therefore, in the liver of obese patients with T2D, increased PDGF-AA signaling contributes to insulin resistance, opening new therapeutic avenues against T2D and NAFLD.

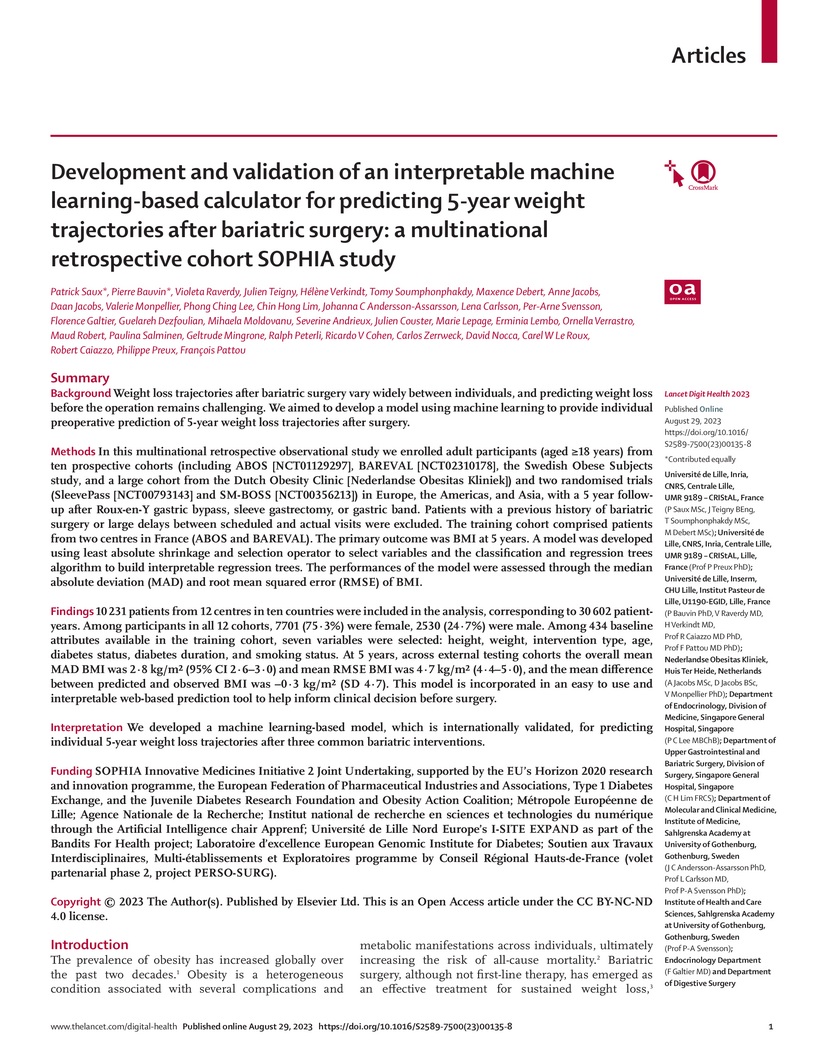

Background: Weight loss trajectories after bariatric surgery vary widely between individuals, and predicting weight loss before the operation remains challenging. We aimed to develop a machine learning model to provide individual preoperative predictions of 5-year weight loss trajectories after surgery.

Methods: In this multinational retrospective observational study, we enrolled adult participants (aged ≥18 years) from ten prospective cohorts (including ABOS [NCT01129297], BAREVAL [NCT02310178], the Swedish Obese Subjects study, and a large cohort from the Dutch Obesity Clinic [Nederlandse Obesitas Kliniek]) and two randomised trials (SleevePass [NCT00793143] and SM-BOSS [NCT00356213]) in Europe, the Americas, and Asia, with a 5-year follow-up after Roux-en-Y gastric bypass, sleeve gastrectomy, or gastric band. Patients with a previous history of bariatric surgery or large delays between scheduled and actual visits were excluded. The training cohort comprised patients from two centres in France (ABOS and BAREVAL). The primary outcome was BMI at 5 years. A model was developed using the least absolute shrinkage and selection operator to select variables and the classification and regression trees algorithm to build interpretable regression trees. The performance of the model was assessed through the median absolute deviation (MAD) and root mean squared error (RMSE) of BMI.

Findings: 10 231 patients from 12 centres in ten countries were included in the analysis, corresponding to 30 602 patient-years. Among participants in all 12 cohorts, 7701 (75∙3%) were female and 2530 (24∙7%) were male. Among 434 baseline attributes available in the training cohort, seven variables were selected: height, weight, intervention type, age, diabetes status, diabetes duration, and smoking status. At 5 years, across external testing cohorts, the overall mean MAD BMI was 2∙8 kg/m² (95% CI 2∙6-3∙0), the mean RMSE BMI was 4∙7 kg/m² (4∙4-5∙0), and the mean difference between predicted and observed BMI was −0∙3 kg/m² (SD 4∙7). This model is incorporated in an easy-to-use and interpretable web-based prediction tool to help inform clinical decisions before surgery.

Interpretation: We developed a machine learning-based model, internationally validated, for predicting individual 5-year weight loss trajectories after three common bariatric interventions.
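The abstract reports MAD, RMSE, and the mean (SD) difference between predicted and observed BMI. The sketch below computes these summaries for paired predictions, assuming MAD means the median of the absolute prediction errors (the abstract does not give the formula). The BMI values are invented for illustration and are not study data.

```python
import math
import statistics

def bmi_prediction_metrics(predicted, observed):
    """Error summaries for predicted vs observed BMI (kg/m^2)."""
    if len(predicted) != len(observed) or len(predicted) < 2:
        raise ValueError("need two equally long sequences with at least 2 values")
    errors = [p - o for p, o in zip(predicted, observed)]  # predicted minus observed
    return {
        "MAD": statistics.median([abs(e) for e in errors]),  # median absolute error
        "RMSE": math.sqrt(statistics.fmean([e * e for e in errors])),
        "mean_difference": statistics.fmean(errors),
        "SD_difference": statistics.stdev(errors),
    }

# Hypothetical 5-year BMI values for four patients, for illustration only.
metrics = bmi_prediction_metrics([32.0, 28.5, 35.0, 30.0], [30.0, 29.5, 38.0, 30.5])
print(metrics["MAD"], metrics["mean_difference"])  # 1.5 -0.625
```

The median-based MAD is less sensitive than RMSE to a few large errors, which is why reporting both gives a fuller picture of prediction quality.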

23 Jan 2024

Events of the same type that occur several times for one individual (recurrent events) arise in various domains (industrial system reliability, episodes of unemployment, political conflicts, episodes of chronic disease). The analysis of such data should account for the dynamics of the whole recurrence process rather than focusing only on the number of observed events. Statistical models for recurrent event analysis are developed within the probabilistic framework of counting processes. One of the most frequently used models is the Andersen-Gill model, a generalization of the well-known Cox model for durations, which assumes that the baseline intensity of the recurrence process is time-dependent and adjusted for covariates. For an individual $i$ with covariates $X_i$, the intensity is $\lambda_i(t;\theta) = \lambda_0(t)\exp(X_i\beta)$. The baseline intensity can be specified parametrically, for instance in Weibull form: $\lambda_0(t) = \gamma_1\gamma_2 t^{\gamma_2-1}$, with $\gamma_1$ the scale parameter and $\gamma_2$ the shape parameter. However, the observed covariates are often insufficient to explain the heterogeneity observed in the data. This is often the case for clinical trial data containing information on patients. In this article, a mixture model for recurrent event analysis is proposed. This model accounts for unobserved heterogeneity and clusters individuals according to their recurrence process. The intensity of the process is specified parametrically within each class and depends on observed covariates. Thus, the intensity becomes specific to class $k$: $\lambda_{ik}(t;\theta_k) = \gamma_{1k}\gamma_{2k} t^{\gamma_{2k}-1}\exp(X_i\beta_k)$. The model parameters are estimated by maximum likelihood using the EM algorithm. The BIC criterion is used to choose the optimal number of classes. Model feasibility is verified by Monte Carlo simulations. An application to real data on hospital readmissions of elderly patients is proposed.
The empirical results confirm the feasibility of the proposed model (the optimization algorithm converges and provides unbiased estimates). The real data application identifies two clinically relevant classes of patients.
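For the Weibull specification, the cumulative intensity has a closed form, $\Lambda_{ik}(t) = \int_0^t \lambda_{ik}(s;\theta_k)\,ds = \gamma_{1k} t^{\gamma_{2k}}\exp(X_i\beta_k)$, so the log-likelihood of one individual's event times $t_{i1}, \dots, t_{im}$ observed on $[0, \tau_i]$ is $\sum_j \log\lambda_{ik}(t_{ij};\theta_k) - \Lambda_{ik}(\tau_i)$. The sketch below codes these pieces, the E-step posterior class probabilities, and the BIC used to choose the number of classes. It is a simplified illustration, not the authors' estimation code: the M-step (numerical maximisation) is omitted, and using the number of individuals as n in the BIC is an assumption.

```python
import math

def _linear_predictor(x, beta):
    return sum(xi * bi for xi, bi in zip(x, beta))

def intensity(t, g1, g2, x, beta):
    """Class-specific Weibull intensity: g1 * g2 * t**(g2 - 1) * exp(x . beta), t > 0."""
    return g1 * g2 * t ** (g2 - 1) * math.exp(_linear_predictor(x, beta))

def cumulative_intensity(t, g1, g2, x, beta):
    """Closed-form integral of the intensity over [0, t]: g1 * t**g2 * exp(x . beta)."""
    return g1 * t ** g2 * math.exp(_linear_predictor(x, beta))

def individual_loglik(event_times, tau, params, x):
    """Log-likelihood of the event times observed on [0, tau] for one individual."""
    g1, g2, beta = params
    return (sum(math.log(intensity(t, g1, g2, x, beta)) for t in event_times)
            - cumulative_intensity(tau, g1, g2, x, beta))

def class_posteriors(event_times, tau, x, class_params, proportions):
    """E-step: P(class k | history) proportional to pi_k * L_k, computed in log space."""
    logs = [math.log(pi) + individual_loglik(event_times, tau, prm, x)
            for pi, prm in zip(proportions, class_params)]
    top = max(logs)
    unnorm = [math.exp(v - top) for v in logs]
    total = sum(unnorm)
    return [u / total for u in unnorm]

def bic(loglik, n_params, n_individuals):
    """BIC = -2 log L + (number of free parameters) * log(n); smaller is better."""
    return -2.0 * loglik + n_params * math.log(n_individuals)

# Usage: two hypothetical classes ("rare" vs "frequent" events), one covariate.
classes = [(0.1, 1.0, [0.0]), (2.0, 1.0, [0.0])]
post = class_posteriors([1.0, 2.0, 3.0, 4.0, 5.0], tau=5.0, x=[1.0],
                        class_params=classes, proportions=[0.5, 0.5])
print(post)  # the second, high-intensity class gets almost all the weight
```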

28 Dec 2020

We investigate parameter estimation for regression models with fixed group effects when the group variable is missing but group-related variables are available. This problem involves clustering to infer the missing group variable from the group-related variables, and regression to build a model of the target variable given the group and possibly additional variables. The problem can therefore be formulated as modeling the joint distribution of the target and the group-related variables. The usual parameter estimation strategy for this joint model is a two-step approach: first learning the group variable (clustering step), then plugging in its estimator to fit the regression model (regression step). However, this approach is suboptimal (in particular, it yields biased regression estimates) since it does not use the target variable for clustering. We therefore advocate a simultaneous estimation approach for both clustering and regression, in a semi-parametric framework. Numerical experiments illustrate the benefits of our proposal across wide ranges of distributions and regression models. The relevance of our new method is illustrated on real data dealing with problems associated with high blood pressure prevention.
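To show why using the target during clustering matters, the sketch below runs EM on a fully parametric stand-in for the joint model (an assumption; the article's approach is semi-parametric): a latent group Z, a Gaussian group-related variable x within each group, and a group-specific linear regression of the target y on a covariate w. Because the E-step weights each observation by the densities of both x and y, the target informs the group assignment, unlike a two-step cluster-then-regress pipeline. Function names and the synthetic data are hypothetical.

```python
import numpy as np

def normal_logpdf(v, mean, var):
    return -0.5 * (np.log(2 * np.pi * var) + (v - mean) ** 2 / var)

def joint_em(x, w, y, n_groups=2, n_iter=200, seed=0):
    """EM for latent Z: x | Z=k ~ N(m_k, v_k) and y | Z=k, w ~ N(a_k + b_k w, s2_k)."""
    rng = np.random.default_rng(seed)
    n = len(x)
    resp = rng.dirichlet(np.ones(n_groups), size=n)  # random soft initialisation
    D = np.column_stack([np.ones(n), w])              # design matrix: intercept and w
    history = []
    for _ in range(n_iter):
        # M-step: responsibility-weighted estimates for both sub-models.
        nk = resp.sum(axis=0)
        pi = nk / n
        m = (resp * x[:, None]).sum(axis=0) / nk
        v = np.maximum((resp * (x[:, None] - m) ** 2).sum(axis=0) / nk, 1e-6)
        coefs, s2 = [], []
        for k in range(n_groups):
            sw = np.sqrt(resp[:, k])
            c, *_ = np.linalg.lstsq(D * sw[:, None], y * sw, rcond=None)  # weighted least squares
            coefs.append(c)
            s2.append(max((resp[:, k] * (y - D @ c) ** 2).sum() / nk[k], 1e-6))
        # E-step: group membership is inferred from x AND from the target y.
        log_p = np.column_stack([
            np.log(pi[k]) + normal_logpdf(x, m[k], v[k]) + normal_logpdf(y, D @ coefs[k], s2[k])
            for k in range(n_groups)])
        top = log_p.max(axis=1, keepdims=True)
        history.append(float((top[:, 0] + np.log(np.exp(log_p - top).sum(axis=1))).sum()))
        resp = np.exp(log_p - top)
        resp /= resp.sum(axis=1, keepdims=True)
    return resp, np.array(coefs), np.array(history)

# Usage on synthetic data with two hidden groups.
rng = np.random.default_rng(1)
n = 400
z = rng.integers(0, 2, size=n)                     # hidden group labels
x = np.where(z == 0, 0.0, 4.0) + rng.normal(size=n)
w = rng.normal(size=n)
y = np.where(z == 0, 1.0 + 2.0 * w, 5.0 - 1.0 * w) + 0.3 * rng.normal(size=n)
resp, coefs, history = joint_em(x, w, y)
print(coefs[np.argsort(coefs[:, 0])])  # roughly [[1, 2], [5, -1]]
```

`history` holds the observed-data log-likelihood at each iteration, which EM keeps non-decreasing.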

This thesis aims to study some of the mathematical challenges that arise in the analysis of statistical sequential decision-making algorithms for postoperative patient follow-up. Stochastic bandits (multi-armed, contextual) model the learning of a sequence of actions (policy) by an agent in an uncertain environment in order to maximise observed rewards. To learn optimal policies, bandit algorithms have to balance the exploitation of current knowledge and the exploration of uncertain actions. Such algorithms have largely been studied and deployed in industrial applications with large datasets, low-risk decisions, and clear modelling assumptions, such as click-through rate maximisation in online advertising. By contrast, digital health recommendations call for a whole new paradigm of small samples, risk-averse agents, and complex, nonparametric modelling. To this end, we developed new safe, anytime-valid concentration bounds (Bregman, empirical Chernoff), introduced a new framework for risk-aware contextual bandits (with elicitable risk measures), and analysed a novel class of nonparametric bandit algorithms under weak assumptions (Dirichlet sampling). In addition to the theoretical guarantees, these results are supported by in-depth empirical evidence. Finally, as a first step towards personalised postoperative follow-up recommendations, we developed, with medical doctors and surgeons, an interpretable machine learning model to predict the long-term weight trajectories of patients after bariatric surgery.
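The abstract does not detail the Dirichlet sampling algorithms. As a loosely related sketch, the code below implements a simple index in the spirit of non-parametric Thompson sampling: each arm's observed rewards, plus one optimistic pseudo-reward at a known upper bound, are reweighted with Dirichlet(1, ..., 1) weights, and the arm with the largest randomised mean is pulled. The known reward bound, the Bernoulli test arms, and the function names are assumptions; this is not the thesis's algorithm.

```python
import numpy as np

def dirichlet_index(rewards, upper_bound, rng):
    """Dirichlet(1,...,1)-weighted mean of an arm's rewards plus one optimistic value."""
    augmented = np.append(np.asarray(rewards, dtype=float), upper_bound)
    weights = rng.dirichlet(np.ones(augmented.size))
    return float(weights @ augmented)

def run_bandit(arms, horizon, upper_bound=1.0, seed=0):
    """arms: callables rng -> reward in [0, upper_bound]; returns pull counts."""
    rng = np.random.default_rng(seed)
    history = [[] for _ in arms]
    for t in range(horizon):
        if t < len(arms):
            a = t  # initialisation: pull every arm once
        else:
            a = int(np.argmax([dirichlet_index(h, upper_bound, rng) for h in history]))
        history[a].append(arms[a](rng))
    return np.array([len(h) for h in history])

# Usage: two hypothetical Bernoulli arms with means 0.3 and 0.7.
arms = [lambda r: float(r.random() < 0.3), lambda r: float(r.random() < 0.7)]
pulls = run_bandit(arms, horizon=2000)
print(pulls)  # the second arm should receive the large majority of pulls
```

The pseudo-reward at the upper bound keeps exploration alive when an arm's few observed rewards happen to be low, which lets this kind of index work without a parametric reward model.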

Biclustering is an unsupervised machine-learning approach aiming to cluster rows and columns simultaneously in a data matrix. Several biclustering algorithms have been proposed for handling numeric datasets. However, real-world data mining problems often involve heterogeneous datasets with mixed attributes. To address this challenge, we introduce a biclustering approach called HBIC, capable of discovering meaningful biclusters in complex heterogeneous data, including numeric, binary, and categorical data. The approach comprises two stages: bicluster generation and bicluster model selection. In the initial stage, several candidate biclusters are generated iteratively by adding and removing rows and columns based on the frequency of values in the original matrix. In the second stage, we introduce two approaches for selecting the most suitable biclusters by considering their size and homogeneity. Through a series of experiments, we investigated the suitability of our approach on a synthetic benchmark and in a biomedical application involving clinical data of systemic sclerosis patients. The evaluation comparing our method to existing approaches demonstrates its ability to discover high-quality biclusters from heterogeneous data. Our biclustering approach is a starting point for heterogeneous bicluster discovery, leading to a better understanding of complex underlying data structures.

24 Jan 2022

Introduction: A phenotype of isolated parkinsonism mimicking Idiopathic Parkinson's Disease (IPD) is a rare clinical presentation of GRN and C9orf72 mutations, the major genetic causes of frontotemporal dementia (FTD). It remains controversial whether this association is fortuitous, and which clinical clues could reliably suggest a genetic FTD etiology in IPD patients. This study aims to describe the clinical characteristics of FTD mutation carriers presenting with an IPD phenotype, provide neuropathological evidence of the mutations' causality, and specifically address their "red flags" according to current IPD criteria.

Methods: Seven GRN and C9orf72 carriers with isolated parkinsonism at onset, and three patients from the literature, were included in this study. To better delineate their phenotype, the presence of supportive, exclusion, and "red flag" features from the MDS criteria was analyzed for each case.

Results: Among the ten patients (5 GRN, 5 C9orf72), seven fulfilled probable IPD criteria throughout the disease course, while behavioral/language or motoneuron dysfunctions occurred later in three. Disease duration was longer and dopa-responsiveness more sustained in C9orf72 than in GRN carriers. Subtle motor features, cognitive/behavioral changes, and a family history of dementia/ALS were suggestive clues for a genetic diagnosis. Importantly, neuropathological examination in one patient revealed typical TDP-43 inclusions without alpha-synucleinopathy, thus demonstrating the causal link between FTD mutations, TDP-43 pathology, and the PD phenotype.

Conclusion: Altogether, we showed that a family history of early-onset dementia/ALS, the presence of cognitive/behavioral dysfunction, and subtle motor characteristics are atypical features frequently present in the parkinsonian presentations of GRN and C9orf72 mutations.

Depression and anxiety are prevalent mental health disorders that frequently co-occur, with anxiety significantly influencing both the manifestation and treatment of depression. An accurate assessment of anxiety levels in individuals with depression is crucial to develop effective and personalized treatment plans. This study proposes a new noninvasive method for quantifying anxiety severity by analyzing head movements (specifically speed, acceleration, and angular displacement) during video-recorded interviews with patients suffering from severe depression. Using data from the new CALYPSO Depression Dataset, we extracted head motion characteristics and applied regression analysis to predict clinically evaluated anxiety levels. Our results demonstrate high accuracy, with a mean absolute error (MAE) of 0.35 in predicting the severity of psychological anxiety from head movement patterns. This indicates that our approach can enhance the understanding of anxiety's role in depression and assist psychiatrists in refining treatment strategies for individuals.
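The sketch below shows one way to turn per-frame head pose into the three kinematic features named in the abstract and to score predictions with MAE. It assumes head coordinates and a yaw angle have already been extracted from the video (for example by a face tracker), uses finite differences at a known frame rate, and leaves the regression model unspecified; the feature definitions and names are hypothetical, not the study's pipeline.

```python
import numpy as np

def head_motion_features(positions, yaw_deg, fps):
    """positions: (T, d) head coordinates per frame; yaw_deg: (T,) head yaw in degrees."""
    dt = 1.0 / fps
    velocity = np.gradient(positions, dt, axis=0)      # finite-difference velocity
    acceleration = np.gradient(velocity, dt, axis=0)   # finite-difference acceleration
    speed = np.linalg.norm(velocity, axis=1)
    accel_mag = np.linalg.norm(acceleration, axis=1)
    # Total absolute change in yaw; assumes unwrapped angles (no jump at +/-180 degrees).
    angular_disp = np.abs(np.diff(yaw_deg)).sum()
    return {"mean_speed": float(speed.mean()),
            "mean_acceleration": float(accel_mag.mean()),
            "angular_displacement": float(angular_disp)}

def mean_absolute_error(y_true, y_pred):
    return float(np.mean(np.abs(np.asarray(y_true, float) - np.asarray(y_pred, float))))

# Usage on a synthetic clip: 100 frames at 25 fps.
fps = 25.0
t = np.arange(100) / fps
positions = np.column_stack([3.0 * t, np.zeros_like(t)])  # constant speed of 3 units/s
yaw = np.linspace(0.0, 10.0, t.size)                       # slow 10-degree turn
print(head_motion_features(positions, yaw, fps))
print(mean_absolute_error([1, 2, 3], [1.5, 2, 2]))  # 0.5
```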

22 Jun 2025

Perceptual multistability, observed across species and sensory modalities, offers valuable insights into numerous cognitive functions and dysfunctions. For instance, differences in temporal dynamics and information integration during percept formation often distinguish clinical from non-clinical populations. Computational psychiatry can elucidate these variations through two primary approaches: (i) Bayesian modeling, which treats perception as an unconscious inference, and (ii) an active, information-seeking perspective (e.g., reinforcement learning) framing perceptual switches as internal actions. Our synthesis aims to leverage multistability to bridge these computational psychiatry subfields, linking human and animal studies as well as connecting behavior to underlying neural mechanisms. Perceptual multistability emerges as a promising non-invasive tool for clinical applications, facilitating translational research and enhancing our mechanistic understanding of cognitive processes and their impairments.

08 Mar 2021

We have developed two scan statistics for detecting clusters of functional data indexed in space. The first method is based on an adaptation of a functional analysis of variance, and the second one is based on a distribution-free spatial scan statistic for univariate data. In a simulation study, the distribution-free method always performed better than a nonparametric functional scan statistic, and the adaptation of the ANOVA also performed better for data with a normal or quasi-normal distribution. Our methods can detect smaller spatial clusters than the nonparametric method. Lastly, we used our scan statistics for functional data to search for spatial clusters of abnormal unemployment rates in France over the period 1998-2013 (divided into quarters).
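The univariate building block of the distribution-free method can be sketched under stated assumptions: each site carries one scalar value (for functional data this could be, hypothetically, a per-site summary of its curve); candidate clusters are circular windows made of a site and its nearest neighbours, up to half of all sites; each window is scored by the standardised Wilcoxon rank-sum statistic of inside versus outside values; and significance comes from Monte Carlo permutations of the values across sites. Ties are ignored for simplicity, and the function names are hypothetical.

```python
import numpy as np

def wilcoxon_z(ranks, inside):
    """Standardised Wilcoxon rank-sum statistic of the 'inside' group (no-ties variance)."""
    n = ranks.size
    n_in = int(inside.sum())
    n_out = n - n_in
    mean = n_in * (n + 1) / 2.0
    var = n_in * n_out * (n + 1) / 12.0
    return (ranks[inside].sum() - mean) / np.sqrt(var)

def candidate_clusters(coords, max_frac=0.5):
    """Circular windows: each site plus its nearest neighbours, up to max_frac of sites."""
    n = len(coords)
    d = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=2)
    for i in range(n):
        order = np.argsort(d[i])
        for size in range(1, max(1, int(max_frac * n)) + 1):
            mask = np.zeros(n, dtype=bool)
            mask[order[:size]] = True
            yield mask

def scan_statistic(values, coords, max_frac=0.5):
    """Most anomalous window (largest |z|) and its statistic."""
    ranks = values.argsort().argsort() + 1.0  # ranks 1..n, assuming no ties
    best, best_mask = -np.inf, None
    for mask in candidate_clusters(coords, max_frac):
        z = abs(wilcoxon_z(ranks, mask))
        if z > best:
            best, best_mask = z, mask
    return best, best_mask

def permutation_pvalue(values, coords, n_perm=99, seed=0):
    """Monte Carlo p-value: rerun the scan on randomly permuted values."""
    rng = np.random.default_rng(seed)
    observed, mask = scan_statistic(values, coords)
    null = [scan_statistic(rng.permutation(values), coords)[0] for _ in range(n_perm)]
    p = (1 + sum(s >= observed for s in null)) / (n_perm + 1)
    return observed, mask, p

# Usage: a 6 x 6 grid of sites with a shifted 3 x 3 corner.
rng = np.random.default_rng(3)
coords = np.array([(i, j) for i in range(6) for j in range(6)], dtype=float)
block = (coords[:, 0] < 3) & (coords[:, 1] < 3)
values = rng.normal(size=36) + 3.0 * block
stat, mask, p = permutation_pvalue(values, coords)
print(stat, np.flatnonzero(mask), p)
```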

26 Mar 2021

This paper introduces new scan statistics for multivariate functional data indexed in space. The new methods are derived from a MANOVA test statistic for functional data, an adaptation of the Hotelling T² test statistic, and a multivariate extension of the Wilcoxon rank-sum test statistic. In a simulation study, the latter two methods perform very well, and the adaptation of the functional MANOVA also performs well for a normal distribution. Our methods detect spatial clusters more accurately than an existing nonparametric functional scan statistic. Lastly, we applied the methods to multivariate functional data to search for spatial clusters of abnormal daily concentrations of air pollutants in the north of France in May and June 2020.
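The multivariate ingredient can be illustrated with the classical two-sample Hotelling statistic, $T^2 = \frac{n_1 n_2}{n_1+n_2}(\bar x_1-\bar x_2)^\top S_p^{-1}(\bar x_1-\bar x_2)$ with pooled covariance $S_p$, maximised over circular nearest-neighbour windows. The sketch assumes each site is summarised by a p-dimensional vector (for instance, hypothetically, per-pollutant averages) rather than by full curves, so it shows the scan logic rather than the paper's functional adaptation; significance would again come from permutations, omitted here.

```python
import numpy as np

def hotelling_t2(a, b):
    """Two-sample Hotelling T^2 with pooled covariance; a: (n1, p), b: (n2, p)."""
    n1, n2 = len(a), len(b)
    diff = a.mean(axis=0) - b.mean(axis=0)
    pooled = ((n1 - 1) * np.atleast_2d(np.cov(a, rowvar=False))
              + (n2 - 1) * np.atleast_2d(np.cov(b, rowvar=False))) / (n1 + n2 - 2)
    return float(n1 * n2 / (n1 + n2) * diff @ np.linalg.solve(pooled, diff))

def hotelling_scan(features, coords, max_frac=0.5):
    """Max of T^2 (inside vs outside) over circular nearest-neighbour windows."""
    n = len(coords)
    d = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=2)
    best, best_mask = -np.inf, None
    for i in range(n):
        order = np.argsort(d[i])
        for size in range(2, int(max_frac * n) + 1):  # >= 2 sites so each group has a covariance
            mask = np.zeros(n, dtype=bool)
            mask[order[:size]] = True
            t2 = hotelling_t2(features[mask], features[~mask])
            if t2 > best:
                best, best_mask = t2, mask
    return best, best_mask

# Usage: 6 x 6 grid, two variables per site, shifted 3 x 3 corner.
rng = np.random.default_rng(5)
coords = np.array([(i, j) for i in range(6) for j in range(6)], dtype=float)
block = (coords[:, 0] < 3) & (coords[:, 1] < 3)
features = rng.normal(size=(36, 2)) + 3.0 * block[:, None]
best, mask = hotelling_scan(features, coords)
print(best, np.flatnonzero(mask))
```

With p = 1 the statistic reduces to the square of the pooled two-sample t statistic, which is a quick sanity check.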
