DarwinAI

While significant research advances have been made in the field of deep

reinforcement learning, there have been no concrete adversarial attack

strategies in literature tailored for studying the vulnerability of deep

reinforcement learning algorithms to membership inference attacks. In such

attacking systems, the adversary targets the set of collected input data on

which the deep reinforcement learning algorithm has been trained. To address

this gap, we propose an adversarial attack framework designed for testing the

vulnerability of a state-of-the-art deep reinforcement learning algorithm to a

membership inference attack. In particular, we design a series of experiments

to investigate the impact of temporal correlation, which naturally exists in

reinforcement learning training data, on the probability of information

leakage. Moreover, we compare the performance of \emph{collective} and

\emph{individual} membership attacks against the deep reinforcement learning

algorithm. Experimental results show that the proposed adversarial attack

framework is surprisingly effective at inferring data with an accuracy

exceeding 84% in individual and 97% in collective modes in three

different continuous control Mujoco tasks, which raises serious privacy

concerns in this regard. Finally, we show that the learning state of the

reinforcement learning algorithm influences the level of privacy breaches

significantly.

Traffic sign recognition is a very important computer vision task for a number of real-world applications such as intelligent transportation surveillance and analysis. While deep neural networks have been demonstrated in recent years to provide state-of-the-art performance traffic sign recognition, a key challenge for enabling the widespread deployment of deep neural networks for embedded traffic sign recognition is the high computational and memory requirements of such networks. As a consequence, there are significant benefits in investigating compact deep neural network architectures for traffic sign recognition that are better suited for embedded devices. In this paper, we introduce MicronNet, a highly compact deep convolutional neural network for real-time embedded traffic sign recognition designed based on macroarchitecture design principles (e.g., spectral macroarchitecture augmentation, parameter precision optimization, etc.) as well as numerical microarchitecture optimization strategies. The resulting overall architecture of MicronNet is thus designed with as few parameters and computations as possible while maintaining recognition performance, leading to optimized information density of the proposed network. The resulting MicronNet possesses a model size of just ~1MB and ~510,000 parameters (~27x fewer parameters than state-of-the-art) while still achieving a human performance level top-1 accuracy of 98.9% on the German traffic sign recognition benchmark. Furthermore, MicronNet requires just ~10 million multiply-accumulate operations to perform inference, and has a time-to-compute of just 32.19 ms on a Cortex-A53 high efficiency processor. These experimental results show that highly compact, optimized deep neural network architectures can be designed for real-time traffic sign recognition that are well-suited for embedded scenarios.

There can be numerous electronic components on a given PCB, making the task of visual inspection to detect defects very time-consuming and prone to error, especially at scale. There has thus been significant interest in automatic PCB component detection, particularly leveraging deep learning. However, deep neural networks typically require high computational resources, possibly limiting their feasibility in real-world use cases in manufacturing, which often involve high-volume and high-throughput detection with constrained edge computing resource availability. As a result of an exploration of efficient deep neural network architectures for this use case, we introduce PCBDet, an attention condenser network design that provides state-of-the-art inference throughput while achieving superior PCB component detection performance compared to other state-of-the-art efficient architecture designs. Experimental results show that PCBDet can achieve up to 2× inference speed-up on an ARM Cortex A72 processor when compared to an EfficientNet-based design while achieving ∼2-4\% higher mAP on the FICS-PCB benchmark dataset.

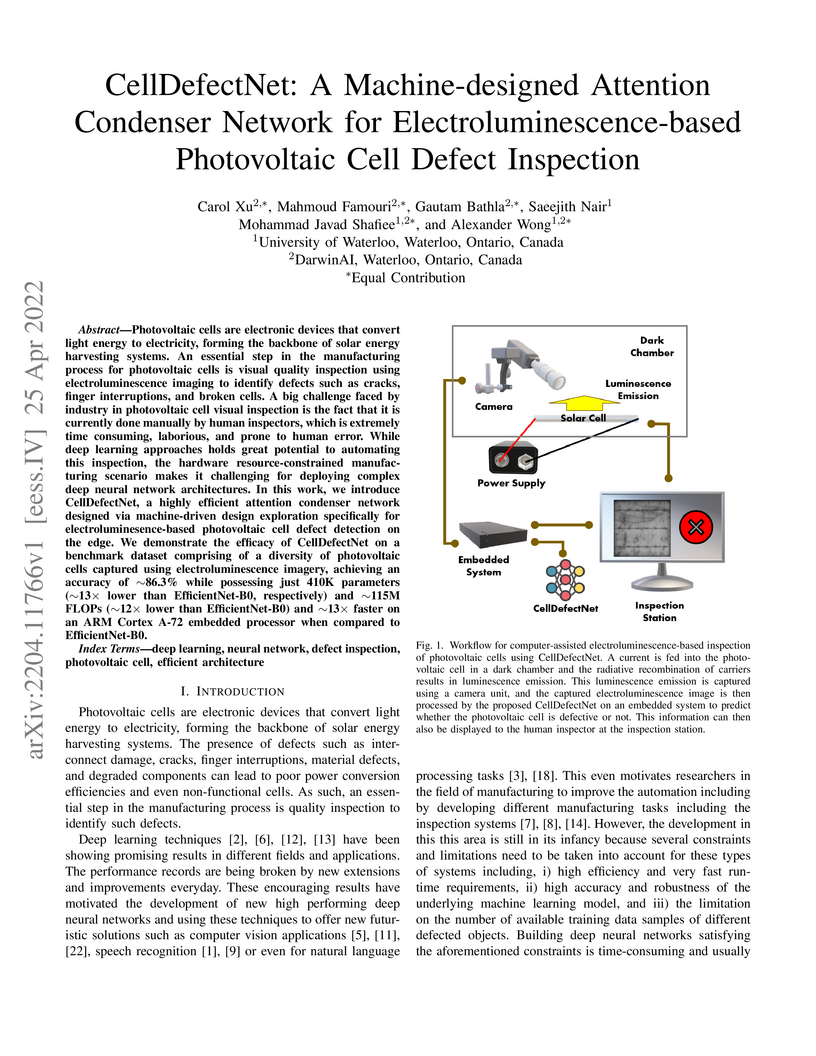

Photovoltaic cells are electronic devices that convert light energy to

electricity, forming the backbone of solar energy harvesting systems. An

essential step in the manufacturing process for photovoltaic cells is visual

quality inspection using electroluminescence imaging to identify defects such

as cracks, finger interruptions, and broken cells. A big challenge faced by

industry in photovoltaic cell visual inspection is the fact that it is

currently done manually by human inspectors, which is extremely time consuming,

laborious, and prone to human error. While deep learning approaches holds great

potential to automating this inspection, the hardware resource-constrained

manufacturing scenario makes it challenging for deploying complex deep neural

network architectures. In this work, we introduce CellDefectNet, a highly

efficient attention condenser network designed via machine-driven design

exploration specifically for electroluminesence-based photovoltaic cell defect

detection on the edge. We demonstrate the efficacy of CellDefectNet on a

benchmark dataset comprising of a diversity of photovoltaic cells captured

using electroluminescence imagery, achieving an accuracy of ~86.3% while

possessing just 410K parameters (~13× lower than EfficientNet-B0,

respectively) and ~115M FLOPs (~12× lower than EfficientNet-B0) and

~13× faster on an ARM Cortex A-72 embedded processor when compared to

EfficientNet-B0.

Neural Architecture Search (NAS) has enabled automatic discovery of more

efficient neural network architectures, especially for mobile and embedded

vision applications. Although recent research has proposed ways of quickly

estimating latency on unseen hardware devices with just a few samples, little

focus has been given to the challenges of estimating latency on runtimes using

optimized graphs, such as TensorRT and specifically for edge devices. In this

work, we propose MAPLE-Edge, an edge device-oriented extension of MAPLE, the

state-of-the-art latency predictor for general purpose hardware, where we train

a regression network on architecture-latency pairs in conjunction with a

hardware-runtime descriptor to effectively estimate latency on a diverse pool

of edge devices. Compared to MAPLE, MAPLE-Edge can describe the runtime and

target device platform using a much smaller set of CPU performance counters

that are widely available on all Linux kernels, while still achieving up to

+49.6% accuracy gains against previous state-of-the-art baseline methods on

optimized edge device runtimes, using just 10 measurements from an unseen

target device. We also demonstrate that unlike MAPLE which performs best when

trained on a pool of devices sharing a common runtime, MAPLE-Edge can

effectively generalize across runtimes by applying a trick of normalizing

performance counters by the operator latency, in the measured hardware-runtime

descriptor. Lastly, we show that for runtimes exhibiting lower than desired

accuracy, performance can be boosted by collecting additional samples from the

target device, with an extra 90 samples translating to gains of nearly +40%.

We explore calibration properties at various precisions for three

architectures: ShuffleNetv2, GhostNet-VGG, and MobileOne; and two datasets:

CIFAR-100 and PathMNIST. The quality of calibration is observed to track the

quantization quality; it is well-documented that performance worsens with lower

precision, and we observe a similar correlation with poorer calibration. This

becomes especially egregious at 4-bit activation regime. GhostNet-VGG is shown

to be the most robust to overall performance drop at lower precision. We find

that temperature scaling can improve calibration error for quantized networks,

with some caveats. We hope that these preliminary insights can lead to more

opportunities for explainable and reliable EdgeML.

A promising paradigm for achieving highly efficient deep neural networks is

the idea of evolutionary deep intelligence, which mimics biological evolution

processes to progressively synthesize more efficient networks. A crucial design

factor in evolutionary deep intelligence is the genetic encoding scheme used to

simulate heredity and determine the architectures of offspring networks. In

this study, we take a deeper look at the notion of synaptic cluster-driven

evolution of deep neural networks which guides the evolution process towards

the formation of a highly sparse set of synaptic clusters in offspring

networks. Utilizing a synaptic cluster-driven genetic encoding, the

probabilistic encoding of synaptic traits considers not only individual

synaptic properties but also inter-synaptic relationships within a deep neural

network. This process results in highly sparse offspring networks which are

particularly tailored for parallel computational devices such as GPUs and deep

neural network accelerator chips. Comprehensive experimental results using four

well-known deep neural network architectures (LeNet-5, AlexNet, ResNet-56, and

DetectNet) on two different tasks (object categorization and object detection)

demonstrate the efficiency of the proposed method. Cluster-driven genetic

encoding scheme synthesizes networks that can achieve state-of-the-art

performance with significantly smaller number of synapses than that of the

original ancestor network. (∼125-fold decrease in synapses for MNIST).

Furthermore, the improved cluster efficiency in the generated offspring

networks (∼9.71-fold decrease in clusters for MNIST and a ∼8.16-fold

decrease in clusters for KITTI) is particularly useful for accelerated

performance on parallel computing hardware architectures such as those in GPUs

and deep neural network accelerator chips.

Light guide plates are essential optical components widely used in a diverse

range of applications ranging from medical lighting fixtures to back-lit TV

displays. In this work, we introduce a fully-integrated, high-throughput,

high-performance deep learning-driven workflow for light guide plate surface

visual quality inspection (VQI) tailored for real-world manufacturing

environments. To enable automated VQI on the edge computing within the

fully-integrated VQI system, a highly compact deep anti-aliased attention

condenser neural network (which we name LightDefectNet) tailored specifically

for light guide plate surface defect detection in resource-constrained

scenarios was created via machine-driven design exploration with computational

and "best-practices" constraints as well as L_1 paired classification

discrepancy loss. Experiments show that LightDetectNet achieves a detection

accuracy of ~98.2% on the LGPSDD benchmark while having just 770K parameters

(~33X and ~6.9X lower than ResNet-50 and EfficientNet-B0, respectively) and

~93M FLOPs (~88X and ~8.4X lower than ResNet-50 and EfficientNet-B0,

respectively) and ~8.8X faster inference speed than EfficientNet-B0 on an

embedded ARM processor. As such, the proposed deep learning-driven workflow,

integrated with the aforementioned LightDefectNet neural network, is highly

suited for high-throughput, high-performance light plate surface VQI within

real-world manufacturing environments.

The fine-grained relationship between form and function with respect to deep

neural network architecture design and hardware-specific acceleration is one

area that is not well studied in the research literature, with form often

dictated by accuracy as opposed to hardware function. In this study, a

comprehensive empirical exploration is conducted to investigate the impact of

deep neural network architecture design on the degree of inference speedup that

can be achieved via hardware-specific acceleration. More specifically, we

empirically study the impact of a variety of commonly used macro-architecture

design patterns across different architectural depths through the lens of

OpenVINO microprocessor-specific and GPU-specific acceleration. Experimental

results showed that while leveraging hardware-specific acceleration achieved an

average inference speed-up of 380%, the degree of inference speed-up varied

drastically depending on the macro-architecture design pattern, with the

greatest speedup achieved on the depthwise bottleneck convolution design

pattern at 550%. Furthermore, we conduct an in-depth exploration of the

correlation between FLOPs requirement, level 3 cache efficacy, and network

latency with increasing architectural depth and width. Finally, we analyze the

inference time reductions using hardware-specific acceleration when compared to

native deep learning frameworks across a wide variety of hand-crafted deep

convolutional neural network architecture designs as well as ones found via

neural architecture search strategies. We found that the DARTS-derived

architecture to benefit from the greatest improvement from hardware-specific

software acceleration (1200%) while the depthwise bottleneck convolution-based

MobileNet-V2 to have the lowest overall inference time of around 2.4 ms.

Vision transformers have shown unprecedented levels of performance in tackling various visual perception tasks in recent years. However, the architectural and computational complexity of such network architectures have made them challenging to deploy in real-world applications with high-throughput, low-memory requirements. As such, there has been significant research recently on the design of efficient vision transformer architectures. In this study, we explore the generation of fast vision transformer architecture designs via generative architecture search (GAS) to achieve a strong balance between accuracy and architectural and computational efficiency. Through this generative architecture search process, we create TurboViT, a highly efficient hierarchical vision transformer architecture design that is generated around mask unit attention and Q-pooling design patterns. The resulting TurboViT architecture design achieves significantly lower architectural computational complexity (>2.47× smaller than FasterViT-0 while achieving same accuracy) and computational complexity (>3.4× fewer FLOPs and 0.9% higher accuracy than MobileViT2-2.0) when compared to 10 other state-of-the-art efficient vision transformer network architecture designs within a similar range of accuracy on the ImageNet-1K dataset. Furthermore, TurboViT demonstrated strong inference latency and throughput in both low-latency and batch processing scenarios (>3.21× lower latency and >3.18× higher throughput compared to FasterViT-0 for low-latency scenario). These promising results demonstrate the efficacy of leveraging generative architecture search for generating efficient transformer architecture designs for high-throughput scenarios.

SquishedNets: Squishing SqueezeNet further for edge device scenarios via deep evolutionary synthesis

SquishedNets: Squishing SqueezeNet further for edge device scenarios via deep evolutionary synthesis

While deep neural networks have been shown in recent years to outperform other machine learning methods in a wide range of applications, one of the biggest challenges with enabling deep neural networks for widespread deployment on edge devices such as mobile and other consumer devices is high computational and memory requirements. Recently, there has been greater exploration into small deep neural network architectures that are more suitable for edge devices, with one of the most popular architectures being SqueezeNet, with an incredibly small model size of 4.8MB. Taking further advantage of the notion that many applications of machine learning on edge devices are often characterized by a low number of target classes, this study explores the utility of combining architectural modifications and an evolutionary synthesis strategy for synthesizing even smaller deep neural architectures based on the more recent SqueezeNet v1.1 macroarchitecture for applications with fewer target classes. In particular, architectural modifications are first made to SqueezeNet v1.1 to accommodate for a 10-class ImageNet-10 dataset, and then an evolutionary synthesis strategy is leveraged to synthesize more efficient deep neural networks based on this modified macroarchitecture. The resulting SquishedNets possess model sizes ranging from 2.4MB to 0.95MB (~5.17X smaller than SqueezeNet v1.1, or 253X smaller than AlexNet). Furthermore, the SquishedNets are still able to achieve accuracies ranging from 81.2% to 77%, and able to process at speeds of 156 images/sec to as much as 256 images/sec on a Nvidia Jetson TX1 embedded chip. These preliminary results show that a combination of architectural modifications and an evolutionary synthesis strategy can be a useful tool for producing very small deep neural network architectures that are well-suited for edge device scenarios.

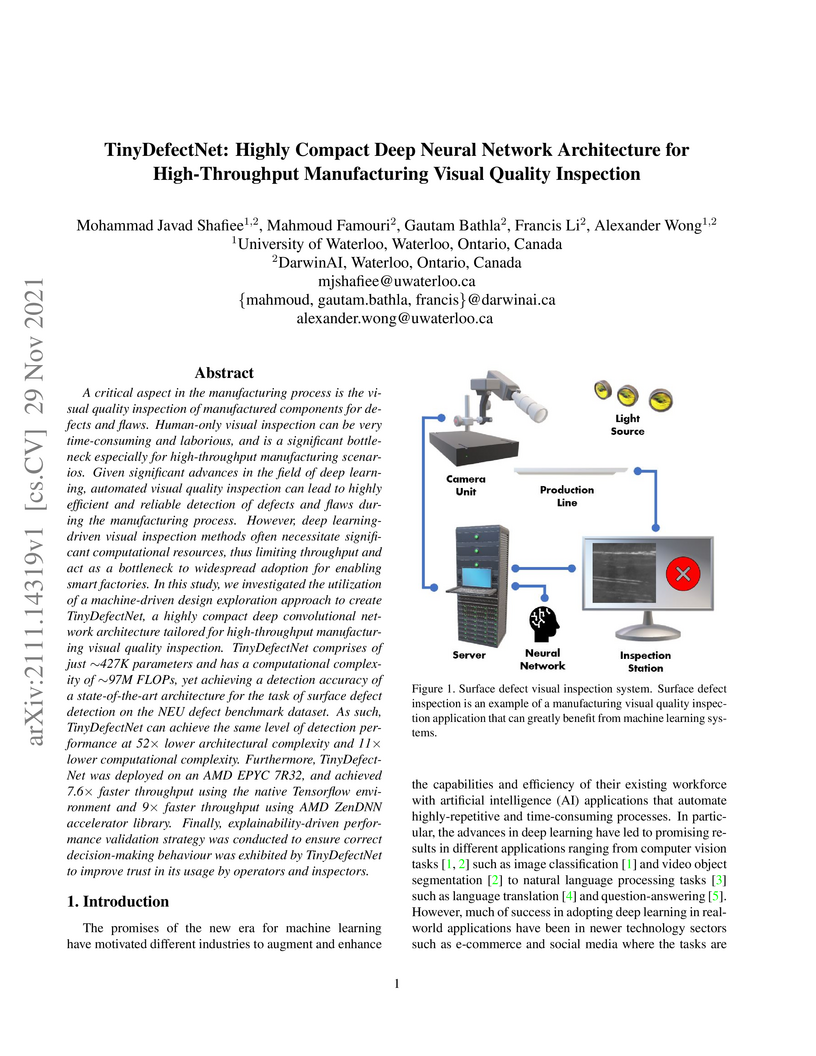

A critical aspect in the manufacturing process is the visual quality inspection of manufactured components for defects and flaws. Human-only visual inspection can be very time-consuming and laborious, and is a significant bottleneck especially for high-throughput manufacturing scenarios. Given significant advances in the field of deep learning, automated visual quality inspection can lead to highly efficient and reliable detection of defects and flaws during the manufacturing process. However, deep learning-driven visual inspection methods often necessitate significant computational resources, thus limiting throughput and act as a bottleneck to widespread adoption for enabling smart factories. In this study, we investigated the utilization of a machine-driven design exploration approach to create TinyDefectNet, a highly compact deep convolutional network architecture tailored for high-throughput manufacturing visual quality inspection. TinyDefectNet comprises of just ~427K parameters and has a computational complexity of ~97M FLOPs, yet achieving a detection accuracy of a state-of-the-art architecture for the task of surface defect detection on the NEU defect benchmark dataset. As such, TinyDefectNet can achieve the same level of detection performance at 52× lower architectural complexity and 11x lower computational complexity. Furthermore, TinyDefectNet was deployed on an AMD EPYC 7R32, and achieved 7.6x faster throughput using the native Tensorflow environment and 9x faster throughput using AMD ZenDNN accelerator library. Finally, explainability-driven performance validation strategy was conducted to ensure correct decision-making behaviour was exhibited by TinyDefectNet to improve trust in its usage by operators and inspectors.

Much of the focus in the design of deep neural networks has been on improving

accuracy, leading to more powerful yet highly complex network architectures

that are difficult to deploy in practical scenarios, particularly on edge

devices such as mobile and other consumer devices given their high

computational and memory requirements. As a result, there has been a recent

interest in the design of quantitative metrics for evaluating deep neural

networks that accounts for more than just model accuracy as the sole indicator

of network performance. In this study, we continue the conversation towards

universal metrics for evaluating the performance of deep neural networks for

practical on-device edge usage. In particular, we propose a new balanced metric

called NetScore, which is designed specifically to provide a quantitative

assessment of the balance between accuracy, computational complexity, and

network architecture complexity of a deep neural network, which is important

for on-device edge operation. In what is one of the largest comparative

analysis between deep neural networks in literature, the NetScore metric, the

top-1 accuracy metric, and the popular information density metric were compared

across a diverse set of 60 different deep convolutional neural networks for

image classification on the ImageNet Large Scale Visual Recognition Challenge

(ILSVRC 2012) dataset. The evaluation results across these three metrics for

this diverse set of networks are presented in this study to act as a reference

guide for practitioners in the field. The proposed NetScore metric, along with

the other tested metrics, are by no means perfect, but the hope is to push the

conversation towards better universal metrics for evaluating deep neural

networks for use in practical on-device edge scenarios to help guide

practitioners in model design for such scenarios.

There are no more papers matching your filters at the moment.