DeepLab

Building robust online content recommendation systems requires learning complex interactions between user preferences and content features. The field has evolved rapidly in recent years from traditional multi-arm bandit and collaborative filtering techniques, with new methods employing Deep Learning models to capture non-linearities. Despite progress, the dynamic nature of online recommendations still poses great challenges, such as finding the delicate balance between exploration and exploitation. In this paper we show how uncertainty estimations can be incorporated by employing them in an optimistic exploitation/exploration strategy for more efficient exploration of new recommendations. We provide a novel hybrid deep neural network model, Deep Density Networks (DDN), which integrates content-based deep learning models with a collaborative scheme that is able to robustly model and estimate uncertainty. Finally, we present online and offline results after incorporating DNN into a real world content recommendation system that serves billions of recommendations per day, and show the benefit of using DDN in practice.

Visual relations are complex, multimodal concepts that play an important role in the way humans perceive the world. As a result of their complexity, high-quality, diverse and large scale datasets for visual relations are still absent. In an attempt to overcome this data barrier, we choose to focus on the problem of few-shot Visual Relationship Detection (VRD), a setting that has been so far neglected by the community. In this work we present the first pretraining method for few-shot predicate classification that does not require any annotated relations. We achieve this by introducing a generative model that is able to capture the variation of semantic, visual and spatial information of relations inside a latent space and later exploiting its representations in order to achieve efficient few-shot classification. We construct few-shot training splits and show quantitative experiments on VG200 and VRD datasets where our model outperforms the baselines. Lastly we attempt to interpret the decisions of the model by conducting various qualitative experiments.

An increasing number of emerging applications in data science and engineering

are based on multidimensional and structurally rich data. The irregularities,

however, of high-dimensional data often compromise the effectiveness of

standard machine learning algorithms. We hereby propose the Rank-R Feedforward

Neural Network (FNN), a tensor-based nonlinear learning model that imposes

Canonical/Polyadic decomposition on its parameters, thereby offering two core

advantages compared to typical machine learning methods. First, it handles

inputs as multilinear arrays, bypassing the need for vectorization, and can

thus fully exploit the structural information along every data dimension.

Moreover, the number of the model's trainable parameters is substantially

reduced, making it very efficient for small sample setting problems. We

establish the universal approximation and learnability properties of Rank-R

FNN, and we validate its performance on real-world hyperspectral datasets.

Experimental evaluations show that Rank-R FNN is a computationally inexpensive

alternative of ordinary FNN that achieves state-of-the-art performance on

higher-order tensor data.

In this work we introduce an incremental learning framework for

Click-Through-Rate (CTR) prediction and demonstrate its effectiveness for

Taboola's massive-scale recommendation service. Our approach enables rapid

capture of emerging trends through warm-starting from previously deployed

models and fine tuning on "fresh" data only. Past knowledge is maintained via a

teacher-student paradigm, where the teacher acts as a distillation technique,

mitigating the catastrophic forgetting phenomenon. Our incremental learning

framework enables significantly faster training and deployment cycles (x12

speedup). We demonstrate a consistent Revenue Per Mille (RPM) lift over

multiple traffic segments and a significant CTR increase on newly introduced

items.

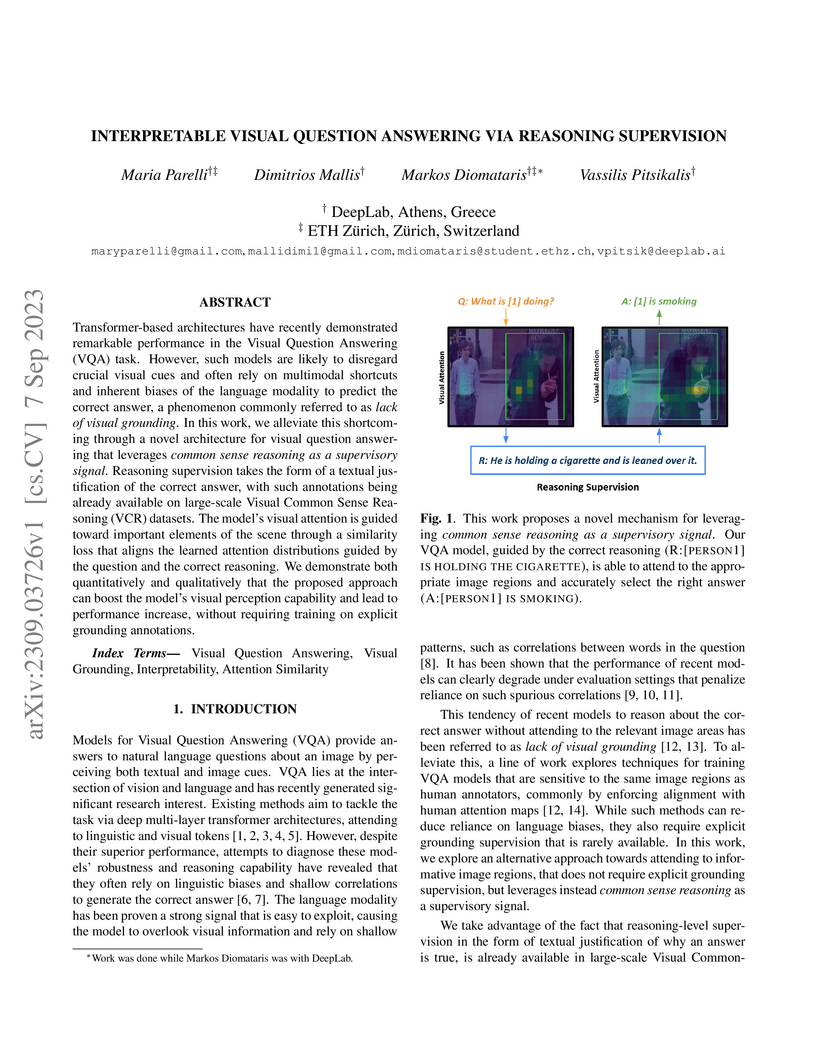

Transformer-based architectures have recently demonstrated remarkable

performance in the Visual Question Answering (VQA) task. However, such models

are likely to disregard crucial visual cues and often rely on multimodal

shortcuts and inherent biases of the language modality to predict the correct

answer, a phenomenon commonly referred to as lack of visual grounding. In this

work, we alleviate this shortcoming through a novel architecture for visual

question answering that leverages common sense reasoning as a supervisory

signal. Reasoning supervision takes the form of a textual justification of the

correct answer, with such annotations being already available on large-scale

Visual Common Sense Reasoning (VCR) datasets. The model's visual attention is

guided toward important elements of the scene through a similarity loss that

aligns the learned attention distributions guided by the question and the

correct reasoning. We demonstrate both quantitatively and qualitatively that

the proposed approach can boost the model's visual perception capability and

lead to performance increase, without requiring training on explicit grounding

annotations.

There are no more papers matching your filters at the moment.