Goldman Sachs

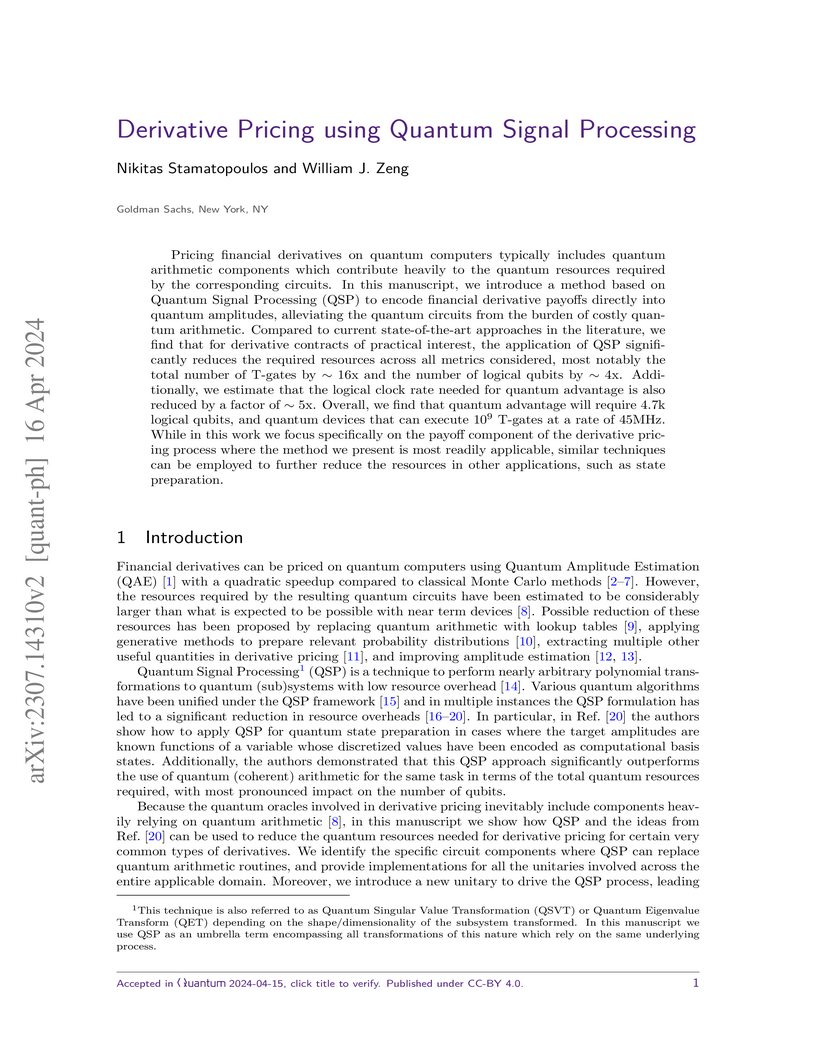

Researchers at Goldman Sachs significantly reduced the quantum computational resources required for pricing complex financial derivatives by leveraging Quantum Signal Processing (QSP). This method achieved a 16x reduction in T-gates and a 4.1x reduction in logical qubits for an autocallable contract, bringing the estimated logical clock rate for quantum advantage down to 45 MHz.

23 May 2024

We study quantum interior point methods (QIPMs) for second-order cone programming (SOCP), guided by the example use case of portfolio optimization (PO). We provide a complete quantum circuit-level description of the algorithm from problem input to problem output, making several improvements to the implementation of the QIPM. We report the number of logical qubits and the quantity/depth of non-Clifford T-gates needed to run the algorithm, including constant factors. The resource counts we find depend on instance-specific parameters, such as the condition number of certain linear systems within the problem. To determine the size of these parameters, we perform numerical simulations of small PO instances, which lead to concrete resource estimates for the PO use case. Our numerical results do not probe large enough instance sizes to make conclusive statements about the asymptotic scaling of the algorithm. However, already at small instance sizes, our analysis suggests that, due primarily to large constant pre-factors, poorly conditioned linear systems, and a fundamental reliance on costly quantum state tomography, fundamental improvements to the QIPM are required for it to lead to practical quantum advantage.

06 Jun 2023

Relation extraction models trained on a source domain cannot be applied to a different target domain due to the mismatch between relation sets. In the current literature, there is no extensive open-source relation extraction dataset specific to the finance domain. In this paper, we release FinRED, a relation extraction dataset curated from financial news and earnings call transcripts containing relations from the finance domain. FinRED has been created by mapping Wikidata triplets using the distant supervision method. We manually annotate the test data to ensure proper evaluation. We also experiment with various state-of-the-art relation extraction models on this dataset to create the benchmark. We see a significant drop in their performance on FinRED compared to the general relation extraction datasets, which indicates that we need better models for financial relation extraction.

Abstractive summarization is the task of compressing a long document into a coherent short document while retaining salient information. Modern abstractive summarization methods are based on deep neural networks which often require large training datasets. Since collecting summarization datasets is an expensive and time-consuming task, practical industrial settings are usually low-resource. In this paper, we study a challenging low-resource setting of summarizing long legal briefs with an average source document length of 4268 words and only 120 available (document, summary) pairs. To account for data scarcity, we used a modern pretrained abstractive summarizer BART (Lewis et al., 2020), which only achieves 17.9 ROUGE-L as it struggles with long documents. We thus attempt to compress these long documents by identifying salient sentences in the source which best ground the summary, using a novel algorithm based on GPT-2 (Radford et al., 2019) language model perplexity scores, that operates within the low resource regime. On feeding the compressed documents to BART, we observe a 6.0 ROUGE-L improvement. Our method also beats several competitive salience detection baselines. Furthermore, the identified salient sentences tend to agree with an independent human labeling by domain experts.

We introduce two quantum algorithms to compute the Value at Risk (VaR) and Conditional Value at Risk (CVaR) of financial derivatives using quantum computers: the first by applying existing ideas from quantum risk analysis to derivative pricing, and the second based on a novel approach using Quantum Signal Processing (QSP). Previous work in the literature has shown that quantum advantage is possible in the context of individual derivative pricing and that advantage can be leveraged in a straightforward manner in the estimation of the VaR and CVaR. The algorithms we introduce in this work aim to provide an additional advantage by encoding the derivative price over multiple market scenarios in superposition and computing the desired values by applying appropriate transformations to the quantum system. We perform complexity and error analysis of both algorithms, and show that while the two algorithms have the same asymptotic scaling, the QSP-based approach requires significantly fewer quantum resources for the same target accuracy. Additionally, by numerically simulating both quantum and classical VaR algorithms, we demonstrate that the quantum algorithm can extract additional advantage from a quantum computer compared to individual derivative pricing. Specifically, we show that under certain conditions VaR estimation can lower the latest published estimates of the logical clock rate required for quantum advantage in derivative pricing by up to ∼30x. In light of these results, we are encouraged that our formulation of derivative pricing in the QSP framework may be further leveraged for quantum advantage in other relevant financial applications, and that quantum computers could be harnessed more efficiently by considering problems in the financial sector at a higher level.
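For readers unfamiliar with the two risk measures named above, the following is a minimal classical sketch of how VaR and CVaR can be estimated from sampled losses. It is not the quantum algorithm from the abstract; the function name `var_cvar`, the 95% confidence level, and the standard-normal loss scenarios are illustrative assumptions.

```python
import numpy as np

def var_cvar(losses, alpha=0.95):
    """Empirical VaR and CVaR at confidence level alpha from sampled losses."""
    losses = np.asarray(losses, dtype=float)
    # VaR: the alpha-quantile of the loss distribution.
    var = np.quantile(losses, alpha)
    # CVaR: the average loss in the tail at or beyond the VaR.
    cvar = losses[losses >= var].mean()
    return var, cvar

# Hypothetical market scenarios: losses drawn from a standard normal (assumption).
rng = np.random.default_rng(0)
losses = rng.standard_normal(100_000)
var, cvar = var_cvar(losses)
print(f"VaR(95%) ~ {var:.3f}, CVaR(95%) ~ {cvar:.3f}")
```

By construction the CVaR is never smaller than the VaR, since it averages only the losses at or beyond the VaR threshold.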

Pretrained Transformer models have emerged as state-of-the-art approaches that learn contextual information from text to improve the performance of several NLP tasks. These models, albeit powerful, still require specialized knowledge in specific scenarios. In this paper, we argue that context derived from a knowledge graph (in our case: Wikidata) provides enough signals to inform pretrained transformer models and improve their performance for named entity disambiguation (NED) on Wikidata KG. We further hypothesize that our proposed KG context can be standardized for Wikipedia, and we evaluate the impact of KG context on a state-of-the-art NED model for the Wikipedia knowledge base. Our empirical results validate that the proposed KG context can be generalized (for Wikipedia), and providing KG context in transformer architectures considerably outperforms the existing baselines, including the vanilla transformer models.

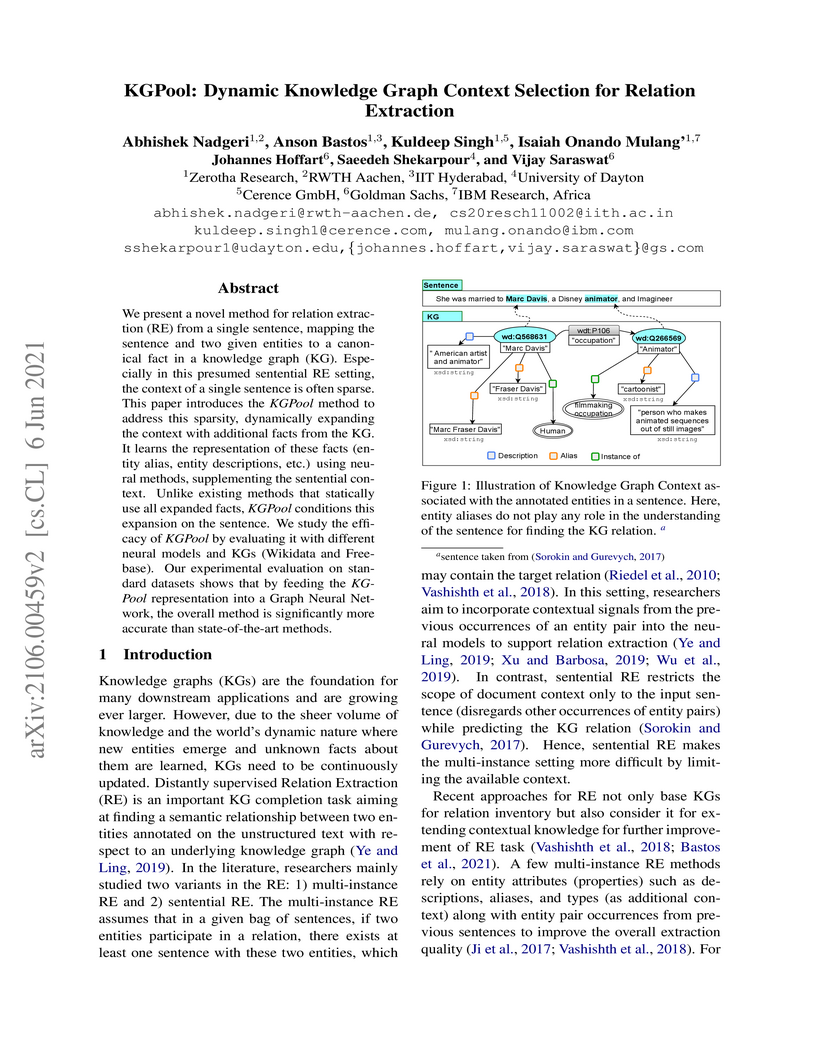

We present a novel method for relation extraction (RE) from a single sentence, mapping the sentence and two given entities to a canonical fact in a knowledge graph (KG). Especially in this presumed sentential RE setting, the context of a single sentence is often sparse. This paper introduces the KGPool method to address this sparsity, dynamically expanding the context with additional facts from the KG. It learns the representation of these facts (entity alias, entity descriptions, etc.) using neural methods, supplementing the sentential context. Unlike existing methods that statically use all expanded facts, KGPool conditions this expansion on the sentence. We study the efficacy of KGPool by evaluating it with different neural models and KGs (Wikidata and NYT Freebase). Our experimental evaluation on standard datasets shows that by feeding the KGPool representation into a Graph Neural Network, the overall method is significantly more accurate than state-of-the-art methods.

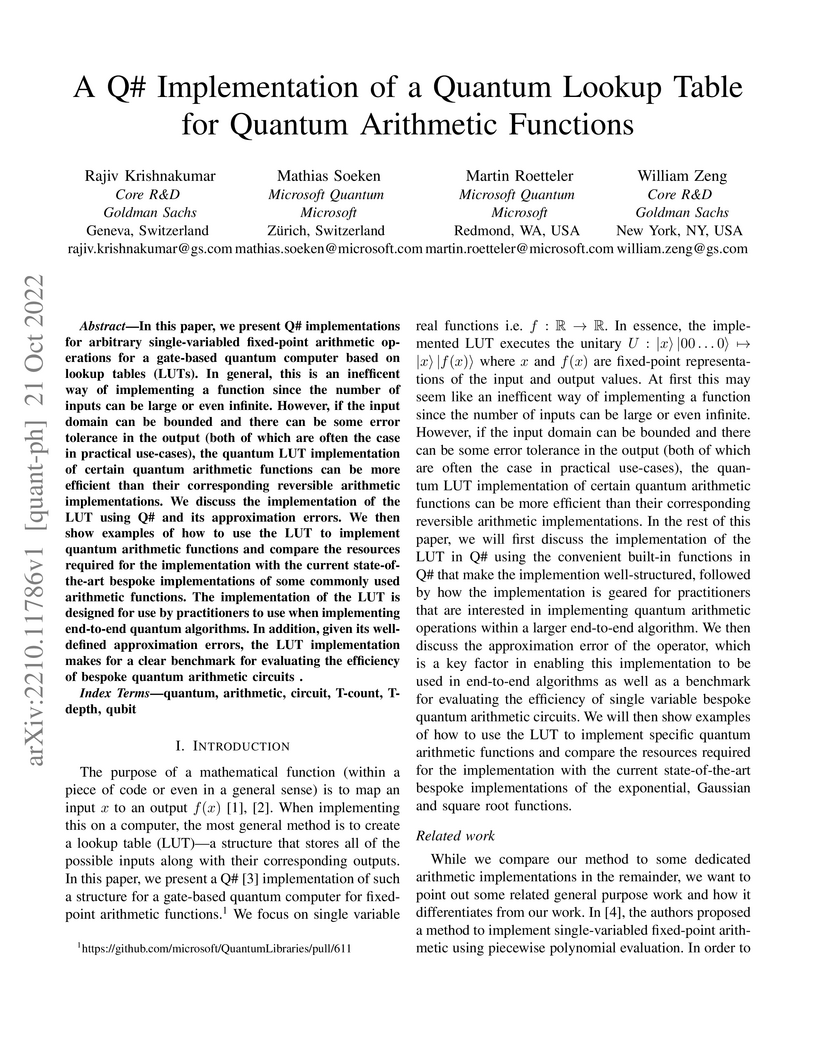

In this paper, we present Q# implementations for arbitrary single-variable fixed-point arithmetic operations for a gate-based quantum computer based on lookup tables (LUTs). In general, this is an inefficient way of implementing a function since the number of inputs can be large or even infinite. However, if the input domain can be bounded and there can be some error tolerance in the output (both of which are often the case in practical use-cases), the quantum LUT implementation of certain quantum arithmetic functions can be more efficient than their corresponding reversible arithmetic implementations. We discuss the implementation of the LUT using Q# and its approximation errors. We then show examples of how to use the LUT to implement quantum arithmetic functions and compare the resources required for the implementation with the current state-of-the-art bespoke implementations of some commonly used arithmetic functions. The implementation of the LUT is designed for practitioners to use when implementing end-to-end quantum algorithms. In addition, given its well-defined approximation errors, the LUT implementation makes for a clear benchmark for evaluating the efficiency of bespoke quantum arithmetic circuits.

The U.S. Securities and Exchange Commission (SEC) mandates all public companies to file periodic financial statements that should contain numerals annotated with a particular label from a taxonomy. In this paper, we formulate the task of automating the assignment of a label to a particular numeral span in a sentence from an extremely large label set. Towards this task, we release a dataset, Financial Numeric Extreme Labelling (FNXL), annotated with 2,794 labels. We benchmark the performance of the FNXL dataset by formulating the task as (a) a sequence labelling problem and (b) a pipeline with span extraction followed by Extreme Classification. Although the two approaches perform comparably, the pipeline solution provides a slight edge for the least frequent labels.

09 May 2025

We demonstrate that the problem of amplitude estimation, a core subroutine used in many quantum algorithms, can be mapped directly to a problem in signal processing called direction of arrival (DOA) estimation. The DOA task is to determine the direction of arrival of an incoming wave with the fewest possible measurements. The connection between amplitude estimation and DOA allows us to make use of the vast number of signal processing algorithms to post-process the measurements of the Grover iterator at predefined depths. Using an off-the-shelf DOA algorithm called ESPRIT together with a compressed-sensing based sampling approach, we create a phase-estimation free, parallel quantum amplitude estimation (QAE) algorithm with a worst-case sequential query complexity of ∼4.3/ε and a parallel query complexity of ∼0.26/ε at 95% confidence. This performance is statistically equivalent to, and a 16× improvement over, Rall and Fuller [Quantum 7, 937 (2023)], for sequential and parallel query complexity, respectively, which to our knowledge is the best published result for amplitude estimation. The approach presented here provides a simple, robust, parallel method for performing QAE, with many possible avenues for improvement borrowing ideas from the wealth of literature in classical signal processing.
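To give a feel for the classical post-processing step mentioned above, here is a minimal sketch of a textbook ESPRIT frequency estimator applied to a synthetic signal. It is not the algorithm from the abstract: the complex exponential exp(2iθk) is only a stand-in for depth-dependent Grover measurement data, and the function name `esprit_frequencies`, the window size, and the noise level are assumptions made for illustration.

```python
import numpy as np

def esprit_frequencies(x, num_sources, window):
    """Estimate angular frequencies of complex exponentials in x via ESPRIT."""
    x = np.asarray(x, dtype=complex)
    n = len(x)
    # Hankel matrix H[i, j] = x[i + j]; its column space is the signal subspace.
    H = np.array([x[i:i + n - window + 1] for i in range(window)])
    U, _, _ = np.linalg.svd(H, full_matrices=False)
    Us = U[:, :num_sources]
    # Shift invariance: Us[1:] ~ Us[:-1] @ Phi, and eig(Phi) = exp(i * omega).
    Phi = np.linalg.pinv(Us[:-1]) @ Us[1:]
    return np.sort(np.angle(np.linalg.eigvals(Phi)))

# Synthetic example (assumption): amplitude a = sin^2(theta), signal exp(2i*theta*k).
theta = 0.3
k = np.arange(16)
rng = np.random.default_rng(1)
noise = 0.01 * (rng.normal(size=k.size) + 1j * rng.normal(size=k.size))
signal = np.exp(2j * theta * k) + noise

omega_hat = esprit_frequencies(signal, num_sources=1, window=8)[0]
theta_hat = omega_hat / 2
a_hat = np.sin(theta_hat) ** 2
print(f"theta ~ {theta_hat:.4f}, amplitude ~ {a_hat:.4f}")
```

The estimator relies only on the shift-invariance of the signal subspace, so no phase estimation circuit is involved in this classical step.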

In this paper, we propose CHOLAN, a modular approach to target end-to-end entity linking (EL) over knowledge bases. CHOLAN consists of a pipeline of two transformer-based models integrated sequentially to accomplish the EL task. The first transformer model identifies surface forms (entity mentions) in a given text. For each mention, a second transformer model is employed to classify the target entity among a predefined candidate list. The latter transformer is fed an enriched context captured from the sentence (i.e., local context) and entity descriptions obtained from Wikipedia. Such external contexts have not been used in state-of-the-art EL approaches. Our empirical study was conducted on two well-known knowledge bases (i.e., Wikidata and Wikipedia). The empirical results suggest that CHOLAN outperforms state-of-the-art approaches on standard datasets such as CoNLL-AIDA, MSNBC, AQUAINT, ACE2004, and T-REx.

In this paper, we present a novel method named RECON that automatically identifies relations in a sentence (sentential relation extraction) and aligns them to a knowledge graph (KG). RECON uses a graph neural network to learn representations of both the sentence and the facts stored in a KG, improving the overall extraction quality. These facts, including entity attributes (label, alias, description, instance-of) and factual triples, have not been collectively used in state-of-the-art methods. We evaluate the effect of various forms of representing the KG context on the performance of RECON. The empirical evaluation on two standard relation extraction datasets shows that RECON significantly outperforms all state-of-the-art methods on the NYT Freebase and Wikidata datasets. RECON reports an F1 score of 87.23 (vs. an 82.29 baseline) on the Wikidata dataset, whereas on NYT Freebase the reported values are 87.5 (P@10) and 74.1 (P@30), compared to the previous baseline scores of 81.3 (P@10) and 63.1 (P@30).

Recently, several Knowledge Graph Embedding (KGE) approaches have been devised to represent entities and relations in dense vector space and employed in downstream tasks such as link prediction. A few KGE techniques address interpretability, i.e., mapping the connectivity patterns of the relations (i.e., symmetric/asymmetric, inverse, and composition) to a geometric interpretation such as rotations. Other approaches model the representations in higher dimensional space such as four-dimensional space (4D) to enhance the ability to infer the connectivity patterns (i.e., expressiveness). However, modeling relation and entity in a 4D space often comes at the cost of interpretability. This paper proposes HopfE, a novel KGE approach aiming to achieve the interpretability of inferred relations in the four-dimensional space. We first model the structural embeddings in 3D Euclidean space and view the relation operator as an SO(3) rotation. Next, we map the entity embedding vector from a 3D space to a 4D hypersphere using the inverse Hopf Fibration, in which we embed the semantic information from the KG ontology. Thus, HopfE considers the structural and semantic properties of the entities without losing expressivity and interpretability. Our empirical results on four well-known benchmarks achieve state-of-the-art performance for the KG completion task.

12 Nov 2021

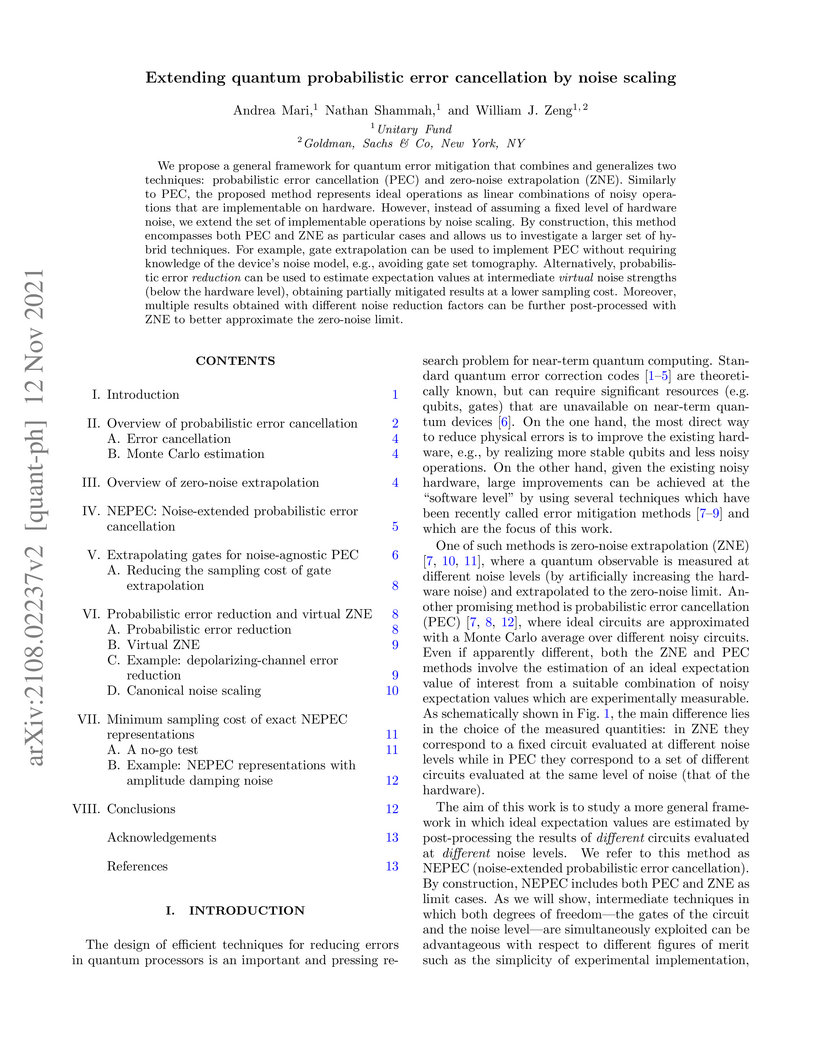

We propose a general framework for quantum error mitigation that combines and generalizes two techniques: probabilistic error cancellation (PEC) and zero-noise extrapolation (ZNE). Similarly to PEC, the proposed method represents ideal operations as linear combinations of noisy operations that are implementable on hardware. However, instead of assuming a fixed level of hardware noise, we extend the set of implementable operations by noise scaling. By construction, this method encompasses both PEC and ZNE as particular cases and allows us to investigate a larger set of hybrid techniques. For example, gate extrapolation can be used to implement PEC without requiring knowledge of the device's noise model, e.g., avoiding gate set tomography. Alternatively, probabilistic error reduction can be used to estimate expectation values at intermediate virtual noise strengths (below the hardware level), obtaining partially mitigated results at a lower sampling cost. Moreover, multiple results obtained with different noise reduction factors can be further post-processed with ZNE to better approximate the zero-noise limit.
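As a concrete illustration of the ZNE ingredient mentioned above, the sketch below fits a polynomial to expectation values measured at several noise scale factors and evaluates it at zero noise. It covers only plain ZNE, not the combined PEC/ZNE framework of the abstract; the function name `zne_extrapolate` and the exponential-decay noise model are assumptions used to generate toy data.

```python
import numpy as np

def zne_extrapolate(scale_factors, expectation_values, degree=None):
    """Polynomial (Richardson-style) extrapolation to the zero-noise limit."""
    scale_factors = np.asarray(scale_factors, dtype=float)
    expectation_values = np.asarray(expectation_values, dtype=float)
    if degree is None:
        # Interpolating polynomial through all points (Richardson extrapolation).
        degree = len(scale_factors) - 1
    coeffs = np.polyfit(scale_factors, expectation_values, degree)
    # Evaluate the fitted polynomial at noise scale 0.
    return np.polyval(coeffs, 0.0)

# Toy noise model (assumption): ideal value 1.0 damped as exp(-0.1 * scale).
scales = [1.0, 2.0, 3.0]
noisy = [np.exp(-0.1 * s) for s in scales]
estimate = zne_extrapolate(scales, noisy)
print(f"unmitigated: {noisy[0]:.4f}, extrapolated: {estimate:.4f}")
```

In this toy setting the extrapolated value lies much closer to the ideal value than the unmitigated result at scale factor 1.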

Sampling is an established technique to scale graph neural networks to large graphs. Current approaches, however, assume the graphs to be homogeneous in terms of relations and ignore relation types, which are critically important in biomedical graphs. Multi-relational graphs contain various types of relations that usually come with variable frequency and have different importance for the problem at hand. We propose an approach to modeling the importance of relation types for neighborhood sampling in graph neural networks and show that we can learn the right balance: relation-type probabilities that reflect both frequency and importance. Our experiments on drug-drug interaction prediction show that state-of-the-art graph neural networks profit from relation-dependent sampling in terms of both accuracy and efficiency.

We study the problem of automatically annotating relevant numerals (GAAP metrics) occurring in financial documents with their corresponding XBRL tags. Different from prior works, we investigate the feasibility of solving this extreme classification problem using a generative paradigm through instruction tuning of Large Language Models (LLMs). To this end, we leverage metric metadata information to frame our target outputs while proposing a parameter-efficient solution for the task using LoRA. We perform experiments on two recently released financial numeric labeling datasets. Our proposed model, FLAN-FinXC, achieves new state-of-the-art performance on both datasets, outperforming several strong baselines. We explain the better scores of our proposed model by demonstrating its capability on zero-shot as well as the least frequently occurring tags. Also, even when we fail to predict the XBRL tags correctly, our generated output has substantial overlap with the ground truth in the majority of cases.

03 Dec 2017

This work develops a distributed optimization strategy with guaranteed exact convergence for a broad class of left-stochastic combination policies. The resulting exact diffusion strategy is shown in Part II to have a wider stability range and superior convergence performance than the EXTRA strategy. The exact diffusion solution is applicable to non-symmetric left-stochastic combination matrices, while many earlier developments on exact consensus implementations are limited to doubly-stochastic matrices; these latter matrices impose stringent constraints on the network topology. The derivation of the exact diffusion strategy in this work relies on reformulating the aggregate optimization problem as a penalized problem and resorting to a diagonally-weighted incremental construction. Detailed stability and convergence analyses are pursued in Part II and are facilitated by examining the evolution of the error dynamics in a transformed domain. Numerical simulations illustrate the theoretical conclusions.

08 Oct 2024

We explore the important task of applying a phase exp(i f(x)) to a computational basis state |x⟩. The closely related task of rotating a target qubit by an angle depending on f(x) is also studied. Such operations are key in many quantum subroutines, and often the function f can be well-approximated by a piecewise linear composition. Examples range from the application of diagonal Hamiltonian terms (such as the Coulomb interaction) in grid-based many-body simulation, to derivative pricing algorithms. Here we exploit a parallelisation of the piecewise approach so that all constituent elementary rotations are performed simultaneously, that is, we achieve a total rotation depth of one. Moreover, we explore the use of recursive catalyst 'towers' to implement these elementary rotations efficiently. Depending on the choice of implementation strategy, we find a depth as low as O(log n + log S) for a register of n qubits and a piecewise approximation of S sections. In the limit of multiple repetitions of the oracle, we find that catalyst tower approaches have an O(S⋅n) T-count, whereas linear interpolation with QROM has an O(n^{log₂ 3}) T-count.
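The piecewise linear approximation underlying this approach can be illustrated classically. The sketch below builds slopes and intercepts for S equal-width sections over an n-bit register and measures the resulting error in f and in the phase exp(i f(x)). It does not model any quantum circuit; the helper names, the choice f(x) = sin(2πx/2ⁿ), and the values n = 6, S = 16 are assumptions for illustration.

```python
import numpy as np

def piecewise_linear_table(f, n_qubits, sections):
    """Slopes/intercepts of a linear interpolant of f on 0..2**n_qubits - 1."""
    size = 2 ** n_qubits
    edges = np.linspace(0, size, sections + 1)  # equal-width section boundaries
    slopes = (f(edges[1:]) - f(edges[:-1])) / (edges[1:] - edges[:-1])
    intercepts = f(edges[:-1]) - slopes * edges[:-1]
    return edges, slopes, intercepts

def evaluate(x, edges, slopes, intercepts):
    """Evaluate the piecewise linear approximation at integer inputs x."""
    # Index of the section containing each x.
    idx = np.clip(np.searchsorted(edges, x, side="right") - 1, 0, len(slopes) - 1)
    return slopes[idx] * x + intercepts[idx]

n, S = 6, 16                                  # hypothetical register and section count
f = lambda x: np.sin(2 * np.pi * x / 2 ** n)  # hypothetical smooth target function
x = np.arange(2 ** n)
edges, slopes, intercepts = piecewise_linear_table(f, n, S)
approx = evaluate(x, edges, slopes, intercepts)

max_err = np.max(np.abs(approx - f(x)))
phase_err = np.max(np.abs(np.exp(1j * approx) - np.exp(1j * f(x))))
print(f"max |f - approx| = {max_err:.4f}, max phase error = {phase_err:.4f}")
```

Increasing S shrinks each section and, for a smooth f, reduces the approximation error quadratically in the section width.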

30 Oct 2007

Hypoelliptic diffusion processes can be used to model a variety of phenomena in applications ranging from molecular dynamics to audio signal analysis. We study parameter estimation for such processes in situations where we observe some components of the solution at discrete times. Since exact likelihoods for the transition densities are typically not known, approximations are used that are expected to work well in the limit of small inter-sample times Δt and large total observation times NΔt. Hypoellipticity together with partial observation leads to ill-conditioning requiring a judicious combination of approximate likelihoods for the various parameters to be estimated. We combine these in a deterministic scan Gibbs sampler alternating between missing data in the unobserved solution components, and parameters. Numerical experiments illustrate asymptotic consistency of the method when applied to simulated data. The paper concludes with application of the Gibbs sampler to molecular dynamics data.
