programming-languages

We demonstrate that the checkable/synthesisable split in bidirectional typechecking coincides with existing dualities in polarised System L, also known as polarised μμ~-calculus. Specifically, positive terms and negative coterms are checkable, and negative terms and positive coterms are synthesisable. This combines a standard formulation of bidirectional typechecking with Zeilberger's `cocontextual' variant. We extend this to ordinary `cartesian' System L using Mc Bride's co-de Bruijn formulation of scopes, and show that both can be combined in a linear-nonlinear style, where linear types are positive and cartesian types are negative. This yields a remarkable 3-way coincidence between the shifts of polarised System L, LNL calculi, and bidirectional calculi.

Modern extensible compiler frameworks-such as MLIR-enable rapid creation of domain-specific language dialects. This flexibility, however, makes correctness harder to ensure as the same extensibility that accelerates development also complicates maintaining the testing infrastructure. Extensible languages require automated test generation that is both dialect-agnostic (works across dialects without manual adaptation) and dialect-effective (targets dialect-specific features to find bugs). Existing approaches typically sacrifice one of these goals by either requiring manually constructed seed corpora for each dialect, or by failing to be effective. We present a dialect-agnostic and dialect-effective grammar-based and coverage-guided fuzzing approach for extensible compilers that combines two key insights from existing work: (i) the grammars of dialects, which already encode the structural and type constraints, can often be extracted automatically from the dialect specification; and (ii) these grammars can be used in combination with pre-trained large language models to automatically generate representative and diverse seed inputs from the full dialect space without requiring any manual input or training data. These seeds can then be used to bootstrap coverage-guided fuzzers. We built this approach into a tool, Germinator. When evaluated on six MLIR projects spanning 91 dialects, Germinator generated seeds improve line coverage by 10-120% over grammar-based baselines. We compare against grammar-based baselines because they are the only class of existing automatic seed generators that can be applied uniformly across MLIR's heterogeneous dialect ecosystem. Germinator discovers 88 previously unknown bugs (40 confirmed), including 23 in dialects with no prior automated test generators, demonstrating effective and controllable testing of low-resource dialects at scale.

Tensor accelerators now represent a growing share of compute resources in modern CPUs and GPUs. However, they are hard to program, leading developers to use vendor-provided kernel libraries that support tensor accelerators. As a result, the usage of tensor accelerators is limited to the provided interface, mainly designed for traditional ML and scientific computing workloads.

In this paper, we show that tensor accelerators can improve the performance of applications beyond simple variants of MatMul. For example, many image processing pipelines are linear transformations over matrices in disguise and can therefore utilize such specialized hardware. This is nonetheless hindered by the difficulties in programming tensor accelerators. We tackle this problem with compiler-based techniques. We use the Halide user-schedulable language and express operations as Halide algorithms succinctly. To this end, we implement a flexible tensor instruction selector based on equality saturation. The tensor instruction selector supports both CPU- and GPU-attached tensor accelerators and works with existing scheduling operations (e.g., producer-consumer fusion). Together, this enables developers to write diverse accelerator-leveraging applications in a few dozen lines.

Using our system, we demonstrate the potential of tensor accelerators beyond their traditional domains. We implement several image processing pipelines (e.g., filtering, resampling, and denoising) in our system and evaluate them against non-accelerator-leveraging baselines. We show that these pipelines can achieve significant speedups. For example, a downsampling routine is sped up by 6.1× by utilizing Tensor Cores on an Nvidia RTX 4070 GPU.

We introduce a new approach to agent programming, the development of LLM-based agents. Current approaches to agent programming often entangle two aspects of agent design: the core workflow logic and the inference-time strategy (e.g., tree search). We introduce "probabilistic angelic nondeterminism" ("PAN"), a programming model that disentangles these two concerns, allowing the programmer to describe the agent workflow and independently experiment with different inference-time strategies by simply changing a few inputs. We provide an implementation of PAN in Python as the EnCompass framework, which uses a Python decorator to compile agent workflow programs into a search space. We present three case studies that demonstrate how the framework lets the programmer quickly improve the reliability of an agent and easily switch between different inference-time strategies, all with little additional coding.

29 Nov 2025

Large language models (LLMs) have shown remarkable capabilities in code translation, yet their performance deteriorates in low-resource programming domains such as Fortran and emerging frameworks like CUDA, where high-quality parallel data are scarce. We present an automated dataset generation pipeline featuring a dual-LLM Questioner-Solver design that incorporates external knowledge from compilers and runtime feedback. Beyond traditional source-target code pair datasets, our approach additionally generates (1) verified translations with unit tests for assessing functional consistency, and (2) multi-turn dialogues that capture the reasoning process behind translation refinement. Applied to Fortran -> C++ and C++ -> CUDA, the pipeline yields 3.64k and 3.93k dialogues, respectively. Fine-tuning on this data yields dramatic improvements in functional correctness, boosting unit test success rates by over 56% on the challenging C++-to-CUDA task. We show this data enables a 7B open-weight model to significantly outperform larger proprietary systems on key metrics like compilation success.

Bounding volume hierarchies are ubiquitous acceleration structures in graphics, scientific computing, and data analytics. Their performance depends critically on data layout choices that affect cache utilization, memory bandwidth, and vectorization -- increasingly dominant factors in modern computing. Yet, in most programming systems, these layout choices are hopelessly entangled with the traversal logic. This entanglement prevents developers from independently optimizing data layouts and algorithms across different contexts, perpetuating a false dichotomy between performance and portability. We introduce Scion, a domain-specific language and compiler for specifying the data layouts of bounding volume hierarchies independent of tree traversal algorithms. We show that Scion can express a broad spectrum of layout optimizations used in high performance computing while remaining architecture-agnostic. We demonstrate empirically that Pareto-optimal layouts (along performance and memory footprint axes) vary across algorithms, architectures, and workload characteristics. Through systematic design exploration, we also identify a novel ray tracing layout that combines optimization techniques from prior work, achieving Pareto-optimality across diverse architectures and scenes.

Trees can accelerate queries that search or aggregate values over large collections. They achieve this by storing metadata that enables quick pruning (or inclusion) of subtrees when predicates on that metadata can prove that none (or all) of the data in a subtree affect the query result. Existing systems implement this pruning logic manually for each query predicate and data structure. We generalize and mechanize this class of optimization. Our method derives conditions for when subtrees can be pruned (or included wholesale), expressed in terms of the metadata available at each node. We efficiently generate these conditions using symbolic interval analysis, extended with new rules to handle geometric predicates (e.g., intersection, containment). Additionally, our compiler fuses compound queries (e.g., reductions on filters) into a single tree traversal. These techniques enable the automatic derivation of generalized single-index and dual-index tree joins that support a wide class of join predicates beyond standard equality and range predicates. The generated traversals match the behavior of expert-written code that implements query-specific traversals, and can asymptotically outperform the linear scans and nested-loop joins that existing systems fall back to when hand-written cases do not apply.

Analysis of entire programs as a single unit, or whole-program analysis, involves propagation of large amounts of information through the control flow of the program. This is especially true for pointer analysis, where, unless significant compromises are made in the precision of the analysis, there is a combinatorial blowup of information. One of the key problems we observed in our own efforts to this end is that a lot of duplicate data was being propagated, and many low-level data structure operations were repeated a large number of times.

We present what we consider to be a novel and generic data structure, LatticeHashForest (LHF), to store and operate on such data in a manner that eliminates a majority of redundant computations and duplicate data in scenarios similar to those encountered in compilers and program optimization. LHF differs from similar work in this vein, such as hash-consing, ZDDs, and BDDs, by not only providing a way to efficiently operate on large, aggregate structures, but also modifying the elements of such structures in a manner that they can be deduplicated immediately. LHF also provides a way to perform a nested construction of elements such that they can be deduplicated at multiple levels, cutting down the need for additional, nested computations.

We provide a detailed structural description, along with an abstract model of this data structure. An entire C++ implementation of LHF is provided as an artifact along with evaluations of LHF using examples and benchmark programs. We also supply API documentation and a user manual for users to make independent applications of LHF. Our main use case in the realm of pointer analysis shows memory usage reduction to an almost negligible fraction, and speedups beyond 4x for input sizes approaching 10 million when compared to other implementations.

Autodiff refers to the core of the automatic differentiation systems developed in projects like JAX and Dex. Autodiff has recently been formalised in a linear typed calculus by Radul et al in arXiv:2204.10923. Although this formalisation suffices to express the main program transformations of Autodiff, the calculus is very specific to this task, and it is not clear whether the type system yields a substructural logic that has interest on its own.

We propose an encoding of Autodiff into a linear λ-calculus that enjoys a Curry-Howard correspondence with Girard's linear logic. We prove that the encoding is sound both qualitatively (the encoded terms are extensionally equivalent to the original ones) and quantitatively (the encoding preserves the original work cost as described in arXiv:2204.10923). As a byproduct, we show that unzipping, one of the transformations used to implement backpropagation in Autodiff, is, in fact, optional.

ProofOptimizer introduces a system for training language models to simplify formal Lean proofs through self-supervision, eliminating the need for human demonstrations. It achieves substantial length reductions, such as 87% on miniF2F and 49% on complex Seed-Prover IMO proofs, which in turn improves the quality of training data for future provers and significantly reduces verification times.

Pedro Domingos's "Tensor Logic" introduces a foundational programming language that unifies neural, symbolic, and statistical AI through a single "tensor equation" construct, demonstrating its ability to implement diverse AI paradigms and enable reliable, hallucination-free reasoning in embedding spaces via a controllable temperature parameter.

Tensor compilers play a key role in enabling high-performance implementations of deep learning workloads. These compilers rely on existing CPU and GPU code generation backends to generate device-specific code. Recently, many tensor accelerators (neural processing units) have been proposed to further accelerate these workloads. Compared to commodity hardware, however, most of the proposed tensor accelerators do not have compiler backends with code generation support. Moreover, the accelerator designs are subject to fast iteration cycles, making it difficult to manually develop compiler backends similar to commodity hardware platforms. Therefore, to increase adoption and enable faster software development cycles for novel tensor accelerator designs, we need to make the compiler backend construction process more agile.

To address this gap, we introduce ACT, a compiler backend generator that automatically generates compiler backends for tensor accelerators, given just the instruction set architecture (ISA) descriptions. We first formally specify the compiler backend generation problem that introduces a novel specification for describing tensor accelerator ISAs. Next, we design ACT such that it supports user-programmable memories and complex parameterized instructions that are prevalent in tensor accelerators. ACT uses a novel parameterized equality saturation-based instruction selection phase and a constraint programming-based memory allocation phase. We prove that compiler backends generated by ACT are sound and complete. Finally, we generate compiler backends for three accelerator platforms from industry and academia, and show that they match or outperform code written using hand-optimized kernel libraries while maintaining low compilation overheads.

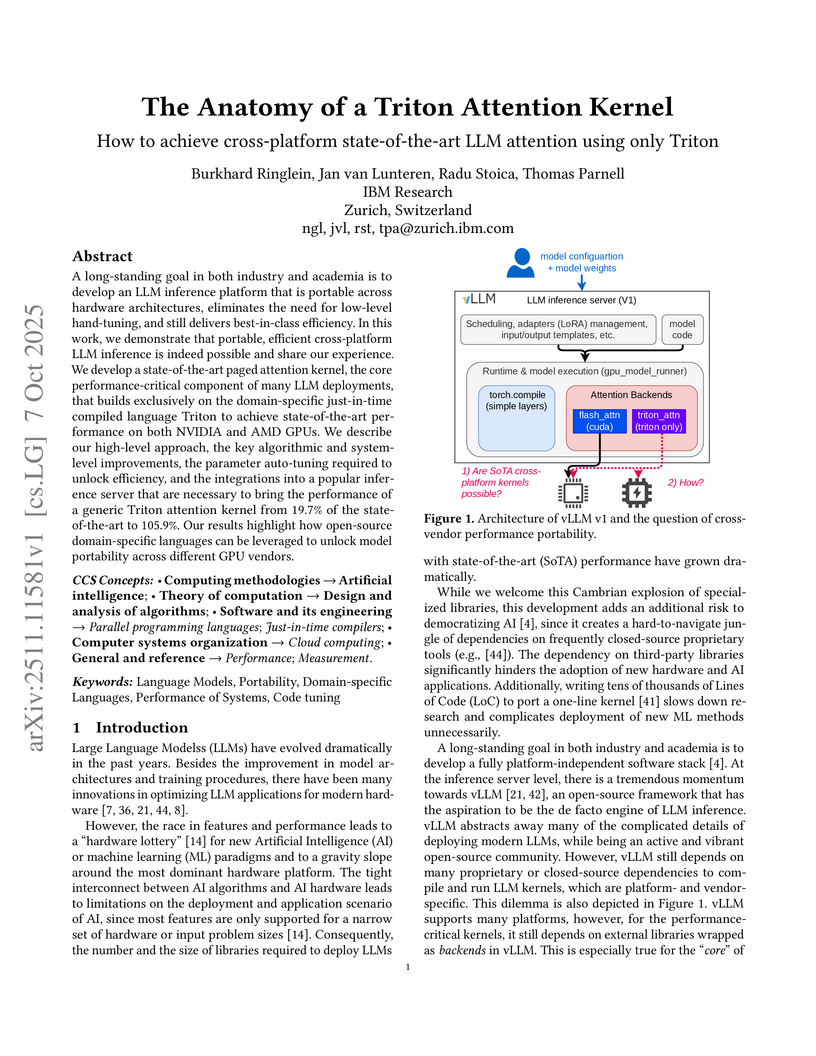

IBM Research developed a Triton-based attention kernel that achieves state-of-the-art performance for large language model inference across NVIDIA and AMD GPUs. This cross-platform solution, integrated into vLLM, eliminates reliance on proprietary hardware-specific libraries for efficient serving.

26 Sep 2025

This research introduces "vericoding," the LLM-driven generation of formally verified code from formal specifications. It establishes the largest benchmark to date, comprising 12,504 tasks across Dafny, Verus, and Lean, and demonstrates that LLMs can achieve up to an 82.2% success rate in generating provably correct code for Dafny tasks, alongside a rapid increase in pure verification capabilities from 68% to 96% within a year on existing benchmarks.

Researchers introduce SWE-QA, a benchmark and agentic framework for evaluating large language models' understanding of entire software repositories, moving beyond isolated code snippets. This work demonstrates that an iterative agentic approach significantly enhances LLM performance in complex, real-world software engineering tasks like cross-file reasoning and architectural comprehension.

Dynamic energy systems and controls require advanced modeling frameworks to design and test supervisory and fault tolerant strategies. Modelica is a widely used equation based language, but developing control modules is labor intensive and requires specialized expertise. This paper examines the use of large language models (LLMs) to automate the generation of Control Description Language modules in the Building Modelica Library as a case study. We developed a structured workflow that combines standardized prompt scaffolds, library aware grounding, automated compilation with OpenModelica, and human in the loop evaluation. Experiments were carried out on four basic logic tasks (And, Or, Not, and Switch) and five control modules (chiller enable/disable, bypass valve control, cooling tower fan speed, plant requests, and relief damper control). The results showed that GPT 4o failed to produce executable Modelica code in zero shot mode, while Claude Sonnet 4 achieved up to full success for basic logic blocks with carefully engineered prompts. For control modules, success rates reached 83 percent, and failed outputs required medium level human repair (estimated one to eight hours). Retrieval augmented generation often produced mismatches in module selection (for example, And retrieved as Or), while a deterministic hard rule search strategy avoided these errors. Human evaluation also outperformed AI evaluation, since current LLMs cannot assess simulation results or validate behavioral correctness. Despite these limitations, the LLM assisted workflow reduced the average development time from 10 to 20 hours down to 4 to 6 hours per module, corresponding to 40 to 60 percent time savings. These results highlight both the potential and current limitations of LLM assisted Modelica generation, and point to future research in pre simulation validation, stronger grounding, and closed loop evaluation.

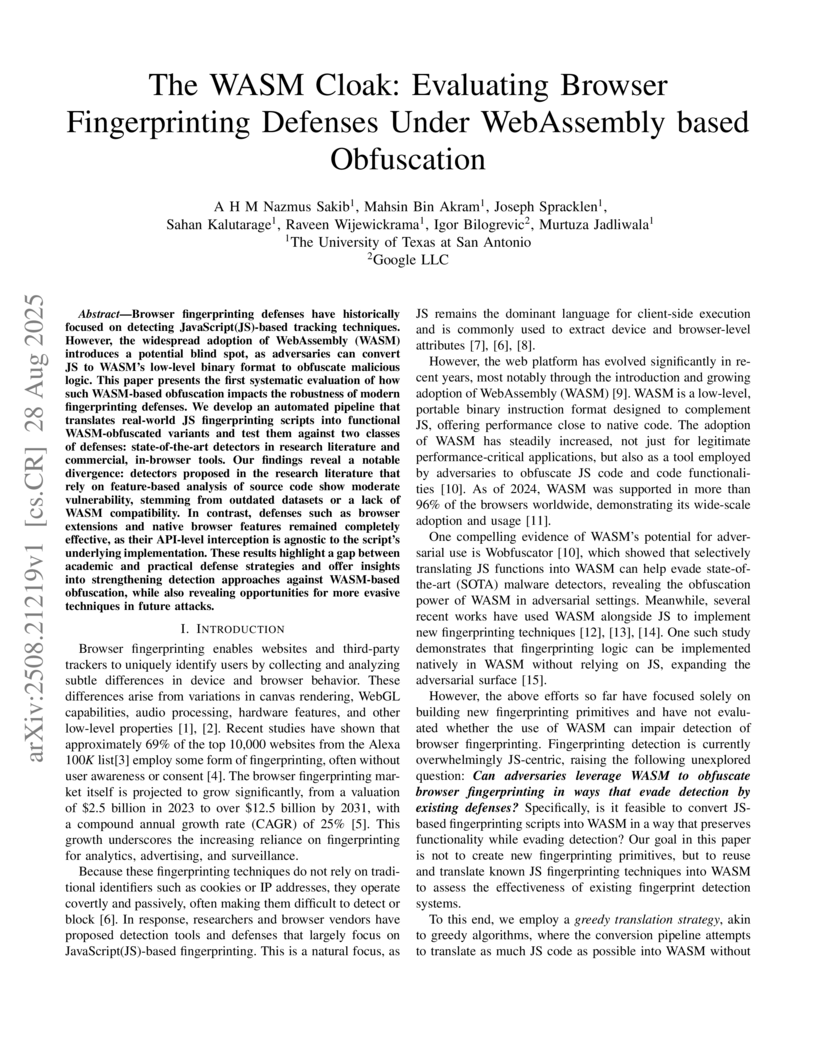

Browser fingerprinting defenses have historically focused on detecting JavaScript(JS)-based tracking techniques. However, the widespread adoption of WebAssembly (WASM) introduces a potential blind spot, as adversaries can convert JS to WASM's low-level binary format to obfuscate malicious logic. This paper presents the first systematic evaluation of how such WASM-based obfuscation impacts the robustness of modern fingerprinting defenses. We develop an automated pipeline that translates real-world JS fingerprinting scripts into functional WASM-obfuscated variants and test them against two classes of defenses: state-of-the-art detectors in research literature and commercial, in-browser tools. Our findings reveal a notable divergence: detectors proposed in the research literature that rely on feature-based analysis of source code show moderate vulnerability, stemming from outdated datasets or a lack of WASM compatibility. In contrast, defenses such as browser extensions and native browser features remained completely effective, as their API-level interception is agnostic to the script's underlying implementation. These results highlight a gap between academic and practical defense strategies and offer insights into strengthening detection approaches against WASM-based obfuscation, while also revealing opportunities for more evasive techniques in future attacks.

Probabilistic extensions of logic programming languages, such as ProbLog, integrate logical reasoning with probabilistic inference to evaluate probabilities of output relations; however, prior work does not account for potential statistical correlations among input facts. This paper introduces Praline, a new extension to Datalog designed for precise probabilistic inference in the presence of (partially known) input correlations. We formulate the inference task as a constrained optimization problem, where the solution yields sound and precise probability bounds for output facts. However, due to the complexity of the resulting optimization problem, this approach alone often does not scale to large programs. To address scalability, we propose a more efficient δ-exact inference algorithm that leverages constraint solving, static analysis, and iterative refinement. Our empirical evaluation on challenging real-world benchmarks, including side-channel analysis, demonstrates that our method not only scales effectively but also delivers tight probability bounds.

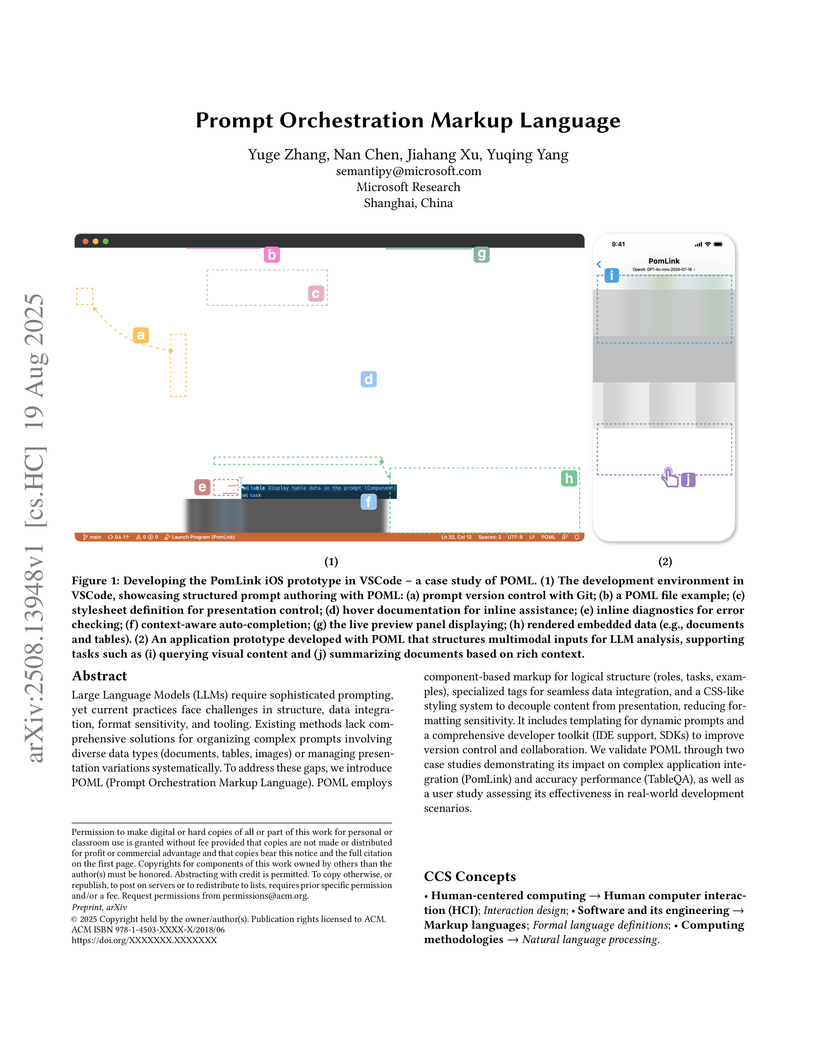

Microsoft Research introduces Prompt Orchestration Markup Language (POML), a structured framework that combines HTML-like syntax, CSS-like styling, and data components to streamline prompt engineering for Large Language Models. This approach enabled the systematic evaluation of 73,926 prompt style configurations in one study, revealing up to 929% accuracy variance for GPT-3.5-Turbo, and significantly reducing development time for LLM applications.

This work introduces the first efficient compiler and an extended language for Qunity, a quantum programming language, transforming it from a theoretical concept to a practical tool. The new compiler significantly reduces qubit and gate counts by orders of magnitude for various benchmarks compared to prior methods, often achieving performance comparable to handcrafted circuits.

There are no more papers matching your filters at the moment.