Greenwaves Technologies

14 Oct 2022

This paper presents an optimized methodology to design and deploy Speech Enhancement (SE) algorithms based on Recurrent Neural Networks (RNNs) on a state-of-the-art MicroController Unit (MCU) with 1+8 general-purpose RISC-V cores. To achieve low-latency execution, we propose an optimized software pipeline interleaving parallel computation of LSTM or GRU recurrent blocks, featuring vectorized 8-bit integer (INT8) and 16-bit floating-point (FP16) compute units, with manually-managed memory transfers of model parameters. To ensure minimal accuracy degradation with respect to the full-precision models, we propose a novel FP16-INT8 Mixed-Precision Post-Training Quantization (PTQ) scheme that compresses the recurrent layers to 8-bit while the bit precision of the remaining layers is kept at FP16. Experiments are conducted on multiple LSTM- and GRU-based SE models trained on the Valentini dataset, featuring up to 1.24M parameters. Thanks to the proposed approaches, we speed up the computation by up to 4x with respect to the lossless FP16 baselines. Unlike a uniform 8-bit quantization, which degrades the PESQ score by 0.3 on average, the Mixed-Precision PTQ scheme leads to a low degradation of only 0.06 while achieving a 1.4-1.7x memory saving. Thanks to this compression, we cut the power cost of the external memory by fitting the large models on the limited on-chip non-volatile memory, and we gain an MCU power saving of up to 2.5x by reducing the supply voltage from 0.8V to 0.65V while still meeting the real-time constraints. Our design is 10x more energy efficient than state-of-the-art SE solutions deployed on single-core MCUs that make use of smaller models and quantization-aware training.
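As an illustration of the mixed-precision idea described in this abstract, the following is a minimal sketch, not the authors' PTQ scheme. It assumes a plain symmetric per-tensor INT8 quantizer for the recurrent weights and a direct FP16 cast for everything else; the function names, the parameter names (`lstm.weight`, `fc.weight`), and the tensor shapes are hypothetical.

```python
import numpy as np

def quantize_int8(w):
    """Symmetric per-tensor quantization: w ~= scale * q, with q in [-127, 127]."""
    max_abs = float(np.max(np.abs(w)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Map INT8 codes back to floating point."""
    return q.astype(np.float32) * scale

def mixed_precision(params, recurrent_names):
    """Store recurrent tensors as (int8 codes, scale); cast the rest to FP16."""
    packed = {}
    for name, w in params.items():
        if name in recurrent_names:
            packed[name] = quantize_int8(w)
        else:
            packed[name] = w.astype(np.float16)
    return packed

# Hypothetical toy model: one recurrent weight matrix and one dense layer.
rng = np.random.default_rng(0)
params = {
    "lstm.weight": rng.standard_normal((64, 32)).astype(np.float32),
    "fc.weight": rng.standard_normal((32, 16)).astype(np.float32),
}
packed = mixed_precision(params, {"lstm.weight"})

q, scale = packed["lstm.weight"]
# Worst-case reconstruction error of the INT8 tensor (bounded by scale / 2).
max_err = float(np.max(np.abs(dequantize(q, scale) - params["lstm.weight"])))
print(q.dtype, packed["fc.weight"].dtype, max_err <= scale / 2 + 1e-5)
```

In this sketch the recurrent tensor shrinks from 4 bytes to 1 byte per weight and the remaining tensor to 2 bytes per weight; the actual memory saving reported in the abstract depends on the share of parameters held by the recurrent layers.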

Wearable biosignal processing applications are driving significant progress toward miniaturized, energy-efficient Internet-of-Things solutions for both clinical and consumer applications. However, scaling toward high-density multi-channel front-ends is only feasible by performing data processing and machine learning (ML) near-sensor through energy-efficient edge processing. To tackle these challenges, we introduce BioGAP, a novel, compact, modular, and lightweight (6g) medical-grade biosignal acquisition and processing platform powered by GAP9, a ten-core ultra-low-power SoC designed for efficient multi-precision (from FP to aggressively quantized integer) processing, as required for advanced ML and DSP. BioGAP's form factor is 16x21x14 mm³ and comprises two stacked PCBs: a baseboard integrating the GAP9 SoC, a wireless Bluetooth Low Energy (BLE) capable SoC, a power management circuit, and an accelerometer; and a shield including an analog front-end (AFE) for ExG acquisition. Finally, the system also includes a flexibly placeable photoplethysmogram (PPG) PCB with a size of 9x7x3 mm³ and a rechargeable battery (ϕ 12x5 mm²). We demonstrate BioGAP on a Steady State Visually Evoked Potential (SSVEP)-based Brain-Computer Interface (BCI) application. We achieve 3.6 µJ/sample in streaming and 2.2 µJ/sample in onboard processing mode, thanks to an efficiency on the FFT computation task of 16.7 Mflops/s/mW with wireless bandwidth reduction of 97%, within a power budget of just 18.2 mW allowing for an operation time of 15 h.

20 Jan 2022

The last few years have seen the emergence of IoT processors: ultra-low power systems-on-chips (SoCs) combining lightweight and flexible micro-controller units (MCUs), often based on open-ISA RISC-V cores, with application-specific accelerators to maximize performance and energy efficiency. Overall, this heterogeneity level requires complex hardware and a full-fledged software stack to orchestrate the execution and exploit platform features. For this reason, enabling agile design space exploration becomes a crucial asset for this new class of low-power SoCs. In this scenario, high-level simulators play an essential role in breaking the speed and design effort bottlenecks of cycle-accurate simulators and FPGA prototypes, respectively, while preserving functional and timing accuracy. We present GVSoC, a highly configurable and timing-accurate event-driven simulator that combines the efficiency of C++ models with the flexibility of Python configuration scripts. GVSoC is fully open-sourced, with the intent to drive future research in the area of highly parallel and heterogeneous RISC-V based IoT processors, leveraging three foundational features: Python-based modular configuration of the hardware description, easy calibration of platform parameters for accurate performance estimation, and high-speed simulation. Experimental results show that GVSoC enables practical functional and performance analysis and design exploration at the full-platform level (processors, memory, peripherals and IOs) with a speed-up of 2500x with respect to cycle-accurate simulation with errors typically below 10% for performance analysis.

07 Mar 2025

This paper proposes a self-learning method to incrementally train (fine-tune) a personalized Keyword Spotting (KWS) model after the deployment on ultra-low power smart audio sensors. We address the fundamental problem of the absence of labeled training data by assigning pseudo-labels to the newly recorded audio frames based on a similarity score with respect to a few user recordings. By experimenting with multiple KWS models with up to 0.5M parameters on two public datasets, we show an accuracy improvement of up to +19.2% and +16.0% vs. the initial models pretrained on a large set of generic keywords. The labeling task is demonstrated on a sensor system composed of a low-power microphone and an energy-efficient Microcontroller (MCU). By efficiently exploiting the heterogeneous processing engines of the MCU, the always-on labeling task runs in real-time with an average power cost of up to 8.2 mW. On the same platform, we estimate an energy cost for on-device training 10x lower than the labeling energy if sampling a new utterance every 6.1 s or 18.8 s with a DS-CNN-S or a DS-CNN-M model. Our empirical result paves the way to self-adaptive personalized KWS sensors at the extreme edge.
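The pseudo-labeling step described in this abstract can be sketched as follows. This is only an illustration under stated assumptions, not the paper's procedure: it assumes cosine similarity between embedding vectors, a prototype formed as the mean of a few enrollment embeddings, and two hypothetical thresholds (`hi`, `lo`) that separate positive, negative, and discarded frames. All names and values are made up for the example.

```python
import numpy as np

def cosine(a, b):
    """Cosine similarity between two 1-D vectors."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

def pseudo_label(embedding, prototype, hi=0.8, lo=0.3):
    """Return 1 (keyword), 0 (non-keyword), or None (too ambiguous to label).

    `hi` and `lo` are hypothetical thresholds chosen for illustration.
    """
    score = cosine(embedding, prototype)
    if score >= hi:
        return 1
    if score <= lo:
        return 0
    return None

# Hypothetical 2-D embeddings of a few user recordings of the keyword.
enrollment = np.array([[1.0, 0.0], [0.8, 0.2]])
prototype = enrollment.mean(axis=0)

# Newly recorded frames: one close to the prototype, one far, one in between.
labels = [
    pseudo_label(np.array([1.0, 0.0]), prototype),
    pseudo_label(np.array([0.0, 1.0]), prototype),
    pseudo_label(np.array([1.0, 1.0]), prototype),
]
print(labels)
```

Frames that receive `None` would simply be left out of the fine-tuning set in this sketch, so that only confidently labeled samples are used for incremental training.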

30 Aug 2016

Endpoint devices for Internet-of-Things not only need to work under an extremely tight power envelope of a few milliwatts, but also need to be flexible in their computing capabilities, from a few kOPS to GOPS. Near-threshold (NT) operation can achieve higher energy efficiency, and the performance scalability can be gained through parallelism. In this paper we describe the design of an open-source RISC-V processor core specifically designed for NT operation in tightly coupled multi-core clusters. We introduce instruction extensions and microarchitectural optimizations to increase the computational density and to minimize the pressure towards the shared memory hierarchy. For typical data-intensive sensor processing workloads the proposed core is on average 3.5x faster and 3.2x more energy-efficient, thanks to a smart L0 buffer to reduce cache access contentions and support for compressed instructions. SIMD extensions, such as dot-products, and a built-in L0 storage further reduce the shared memory accesses by 8x, reducing contentions by 3.2x. With four NT-optimized cores, the cluster is operational from 0.6V to 1.2V, achieving a peak efficiency of 67 MOPS/mW in a low-cost 65nm bulk CMOS technology. In a low-power 28nm FDSOI process a peak efficiency of 193 MOPS/mW (40 MHz, 1 mW) can be achieved.

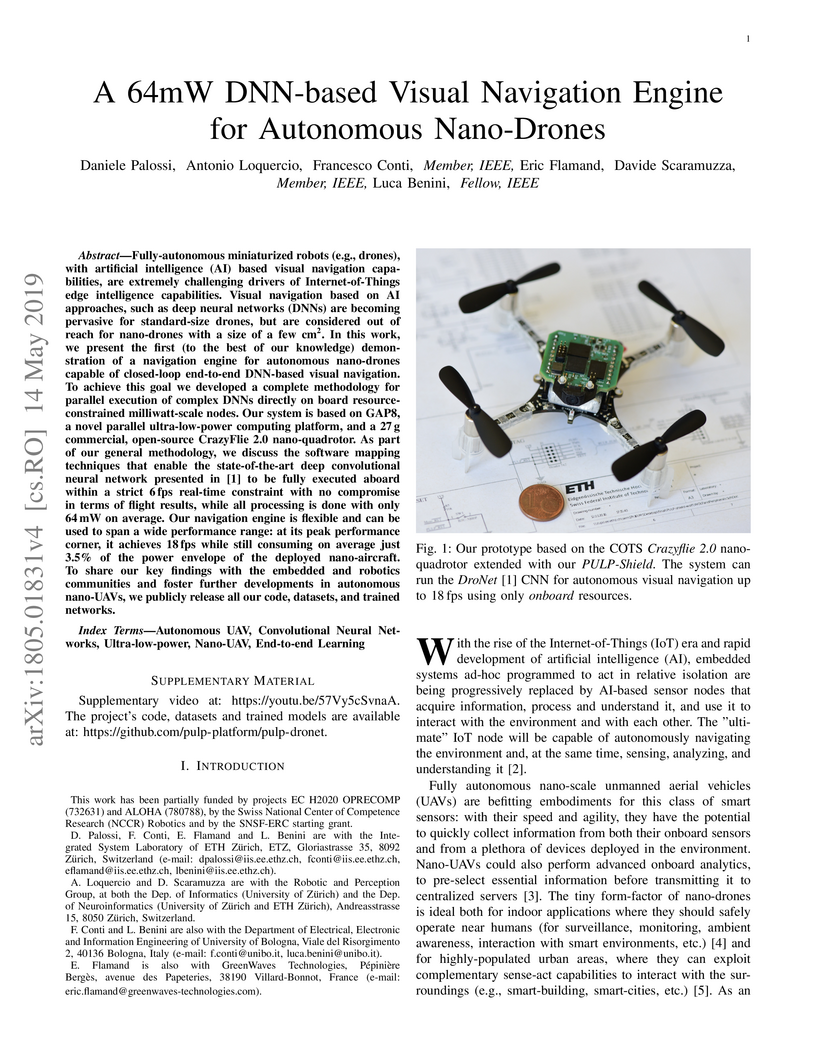

Fully-autonomous miniaturized robots (e.g., drones) with artificial intelligence (AI) based visual navigation capabilities are extremely challenging drivers of Internet-of-Things edge intelligence capabilities. Visual navigation based on AI approaches, such as deep neural networks (DNNs), is becoming pervasive for standard-size drones, but is considered out of reach for nano-drones with a size of a few cm². In this work, we present the first (to the best of our knowledge) demonstration of a navigation engine for autonomous nano-drones capable of closed-loop end-to-end DNN-based visual navigation. To achieve this goal we developed a complete methodology for parallel execution of complex DNNs directly on-board resource-constrained milliwatt-scale nodes. Our system is based on GAP8, a novel parallel ultra-low-power computing platform, and a 27 g commercial, open-source CrazyFlie 2.0 nano-quadrotor. As part of our general methodology we discuss the software mapping techniques that enable the state-of-the-art deep convolutional neural network presented in [1] to be fully executed on-board within a strict 6 fps real-time constraint with no compromise in terms of flight results, while all processing is done with only 64 mW on average. Our navigation engine is flexible and can be used to span a wide performance range: at its peak performance corner it achieves 18 fps while still consuming on average just 3.5% of the power envelope of the deployed nano-aircraft.

05 Dec 2020

A key challenge in scaling shared-L1 multi-core clusters towards many-core (more than 16 cores) configurations is to ensure low-latency and efficient access to the L1 memory. In this work we demonstrate that it is possible to scale up the shared-L1 architecture: We present MemPool, a 32-bit many-core system with 256 fast RV32IMA "Snitch" cores featuring application-tunable execution units, running at 700 MHz in typical conditions (TT/0.80 V/25 °C). MemPool is easy to program, with all the cores sharing a global view of a large L1 scratchpad memory pool, accessible within at most 5 cycles. In MemPool's physical-aware design, we emphasized the exploration, design, and optimization of the low-latency processor-to-L1-memory interconnect. We compare three candidate topologies, analyzing them in terms of latency, throughput, and back-end feasibility. The chosen topology keeps the average latency at fewer than 6 cycles, even for a heavy injected load of 0.33 request/core/cycle. We also propose a lightweight addressing scheme that maps each core's private data to a memory bank accessible within one cycle, which leads to performance gains of up to 20% in real-world signal processing benchmarks. The addressing scheme is also highly efficient in terms of energy consumption since requests to local banks consume only half of the energy required to access remote banks. Our design achieves competitive performance with respect to an ideal, non-implementable full-crossbar baseline.

The Internet-of-Things requires end-nodes with ultra-low-power always-on capability for a long battery lifetime, as well as high performance, energy efficiency, and extreme flexibility to deal with complex and fast-evolving near-sensor analytics algorithms (NSAAs). We present Vega, an IoT end-node SoC capable of scaling from a 1.7 μW fully retentive cognitive sleep mode up to 32.2 GOPS (@ 49.4 mW) peak performance on NSAAs, including mobile DNN inference, exploiting 1.6 MB of state-retentive SRAM, and 4 MB of non-volatile MRAM. To meet the performance and flexibility requirements of NSAAs, the SoC features 10 RISC-V cores: one core for SoC and IO management and a 9-core cluster supporting multi-precision SIMD integer and floating-point computation. Vega achieves SoA-leading efficiency of 615 GOPS/W on 8-bit INT computation (boosted to 1.3 TOPS/W for 8-bit DNN inference with hardware acceleration). On floating-point (FP) computation, it achieves SoA-leading efficiency of 79 and 129 GFLOPS/W on 32- and 16-bit FP, respectively. Two programmable machine-learning (ML) accelerators boost energy efficiency in cognitive sleep and active states, respectively.
