Hitotsubashi University

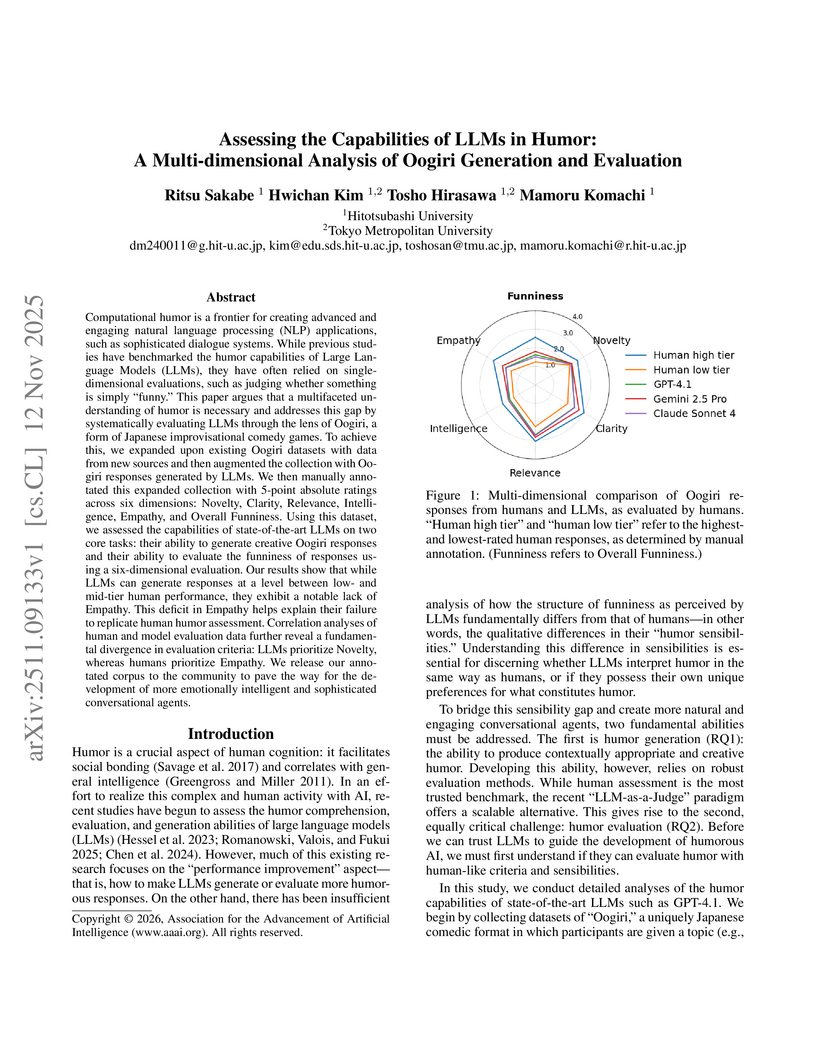

Researchers from Hitotsubashi University and Tokyo Metropolitan University conducted a multi-dimensional analysis of Large Language Models' (LLMs) capabilities in generating and evaluating Oogiri humor. The study revealed that LLMs perform at a level between low- and mid-tier human humor generation and exhibit a 'sensibility gap' with humans, prioritizing novelty over empathy in their assessments.

Researchers at Hitotsubashi University and Chuo University developed an intrinsic signal model that objectively identifies signal variables in high-dimensional, small-sample-size datasets by analyzing their persistence in the theoretical limit of zero sample size. The framework, rooted in dynamical systems theory, was validated by correctly extracting known signals from chaotic systems and identifying biologically relevant features in genomic data.

23 Sep 2025

We develop a finite-sample optimal estimator for regression discontinuity designs when the outcomes are bounded, including binary outcomes as the leading case. Our finite-sample optimal estimator achieves the exact minimax mean squared error among linear shrinkage estimators with nonnegative weights when the regression function of a bounded outcome lies in a Lipschitz class. Although the original minimax problem involves an iterating (n+1)-dimensional non-convex optimization problem where n is the sample size, we show that our estimator is obtained by solving a convex optimization problem. A key advantage of our estimator is that the Lipschitz constant is the only tuning parameter. We also propose a uniformly valid inference procedure without a large-sample approximation. In a simulation exercise for small samples, our estimator exhibits smaller mean squared errors and shorter confidence intervals than conventional large-sample techniques which may be unreliable when the effective sample size is small. We apply our method to an empirical multi-cutoff design where the sample size for each cutoff is small. In the application, our method yields informative confidence intervals, in contrast to the leading large-sample approach.

02 Oct 2025

Dependence among multiple lifetimes is a key factor for pricing and evaluating the risk of joint life insurance products. The dependence structure can be exposed to model uncertainty when available data and information are limited. We address robust pricing and risk evaluation of joint life insurance products against dependence uncertainty among lifetimes. We first show that, for some class of standard contracts, the risk evaluation based on distortion risk measure is monotone with respect to the concordance order of the underlying copula. Based on this monotonicity, we then study the most conservative and anti-conservative risk evaluations for this class of contracts. We prove that the bounds for the mean, Value-at-Risk and Expected shortfall are computed by a combination of linear programs when the uncertainty set is defined by some norm-ball centered around a reference copula. Our numerical analysis reveals that the sensitivity of the risk evaluation against the choice of the copula differs depending on the risk measure and the type of the contract, and our proposed bounds can improve the existing bounds based on the available information.

18 Sep 2025

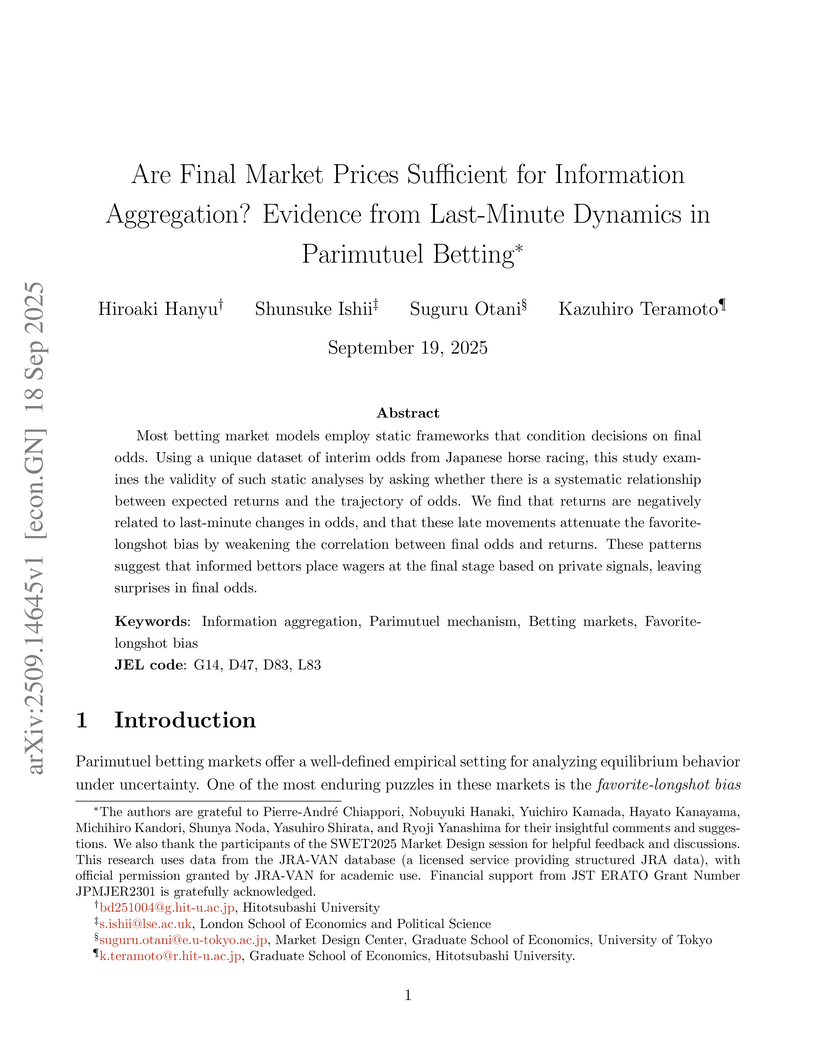

Researchers at Hitotsubashi University, LSE, and the University of Tokyo empirically demonstrate that final odds in parimutuel betting markets are insufficient for complete information aggregation, finding that last-minute odds movements contain significant predictive information that contributes to the Favorite-Longshot Bias.

17 Sep 2024

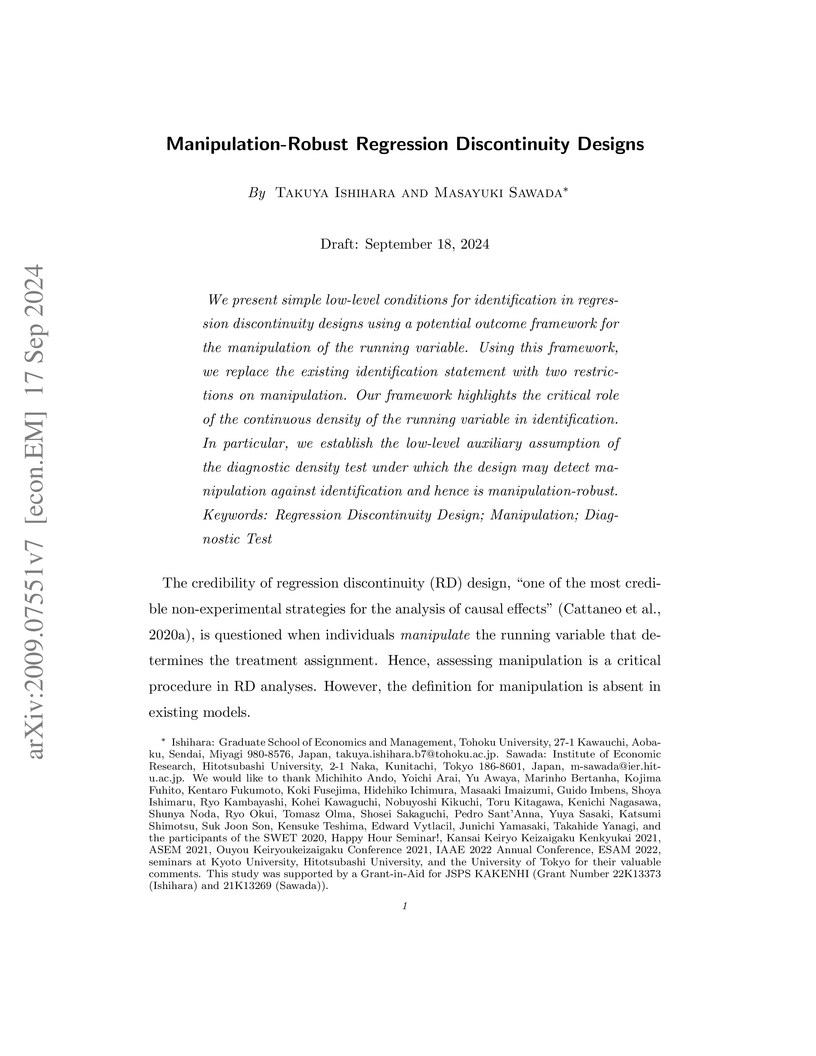

We present simple low-level conditions for identification in regression

discontinuity designs using a potential outcome framework for the manipulation

of the running variable. Using this framework, we replace the existing

identification statement with two restrictions on manipulation. Our framework

highlights the critical role of the continuous density of the running variable

in identification. In particular, we establish the low-level auxiliary

assumption of the diagnostic density test under which the design may detect

manipulation against identification and hence is manipulation-robust.

19 Nov 2025

We propose a conditional independence (CI) test based on a new measure, the \emph{spectral generalized covariance measure} (SGCM). The SGCM is constructed by approximating the basis expansion of the squared norm of the conditional cross-covariance operator, using data-dependent bases obtained via spectral decompositions of empirical covariance operators. This construction avoids direct estimation of conditional mean embeddings and reduces the problem to scalar-valued regressions, resulting in robust finite-sample size control. Theoretically, we derive the limiting distribution of the SGCM statistic, establish the validity of a wild bootstrap for inference, and obtain uniform asymptotic size control under doubly robust conditions. As an additional contribution, we show that exponential kernels induced by continuous semimetrics of negative type are characteristic on general Polish spaces -- with extensions to finite tensor products -- thereby providing a foundation for applying our test and other kernel methods to complex objects such as distribution-valued data and curves on metric spaces. Extensive simulations indicate that the SGCM-based CI test attains near-nominal size and exhibits power competitive with or superior to state-of-the-art alternatives across a range of challenging scenarios.

23 May 2025

In recent years, the application of machine learning approaches to

time-series forecasting of climate dynamical phenomena has become increasingly

active. It is known that applying a band-pass filter to a time-series data is a

key to obtaining a high-quality data-driven model. Here, to obtain longer-term

predictability of machine learning models, we introduce a new type of band-pass

filter. It can be applied to realtime operational prediction workflows since it

relies solely on past time series. We combine the filter with reservoir

computing, which is a machine-learning technique that employs a data-driven

dynamical system. As an application, we predict the multi-year dynamics of the

El Ni\~{n}o-Southern Oscillation with the prediction horizon of 24 months using

only past time series.

01 Mar 2025

We consider estimating nonparametric time-varying parameters in linear models using kernel regression. Our contributions are threefold. First, we consider a broad class of time-varying parameters including deterministic smooth functions, the rescaled random walk, structural breaks, the threshold model and their mixtures. We show that those time-varying parameters can be consistently estimated by kernel regression. Our analysis exploits the smoothness of the time-varying parameter quantified by a single parameter. The second contribution is to reveal that the bandwidth used in kernel regression determines a trade-off between the rate of convergence and the size of the class of time-varying parameters that can be estimated. We demonstrate that an improper choice of the bandwidth yields biased estimation, and argue that the bandwidth should be selected according to the smoothness of the time-varying parameter. Our third contribution is to propose a data-driven procedure for bandwidth selection that is adaptive to the smoothness of the time-varying parameter.

05 Nov 2025

What happens if selective colleges change their admission policies? We study this question by analyzing the world's first implementation of nationally centralized meritocratic admissions in the early twentieth century. We find a persistent meritocracy-equity tradeoff. Compared to the decentralized system, the centralized system admitted more high-achievers and produced more occupational elites (such as top income earners) decades later in the labor market. This gain came at a distributional cost, however. Meritocratic centralization also increased the number of urban-born elites relative to rural-born ones, undermining equal access to higher education and career advancement.

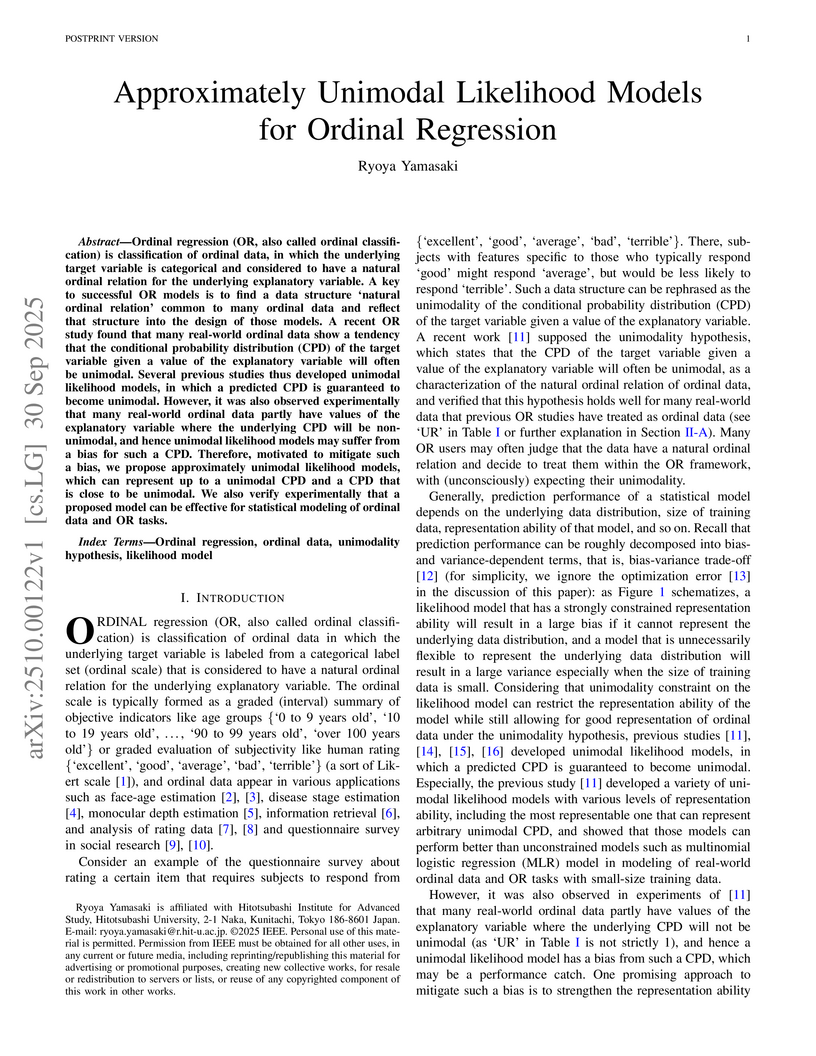

Ordinal regression (OR, also called ordinal classification) is classification of ordinal data, in which the underlying target variable is categorical and considered to have a natural ordinal relation for the underlying explanatory variable. A key to successful OR models is to find a data structure `natural ordinal relation' common to many ordinal data and reflect that structure into the design of those models. A recent OR study found that many real-world ordinal data show a tendency that the conditional probability distribution (CPD) of the target variable given a value of the explanatory variable will often be unimodal. Several previous studies thus developed unimodal likelihood models, in which a predicted CPD is guaranteed to become unimodal. However, it was also observed experimentally that many real-world ordinal data partly have values of the explanatory variable where the underlying CPD will be non-unimodal, and hence unimodal likelihood models may suffer from a bias for such a CPD. Therefore, motivated to mitigate such a bias, we propose approximately unimodal likelihood models, which can represent up to a unimodal CPD and a CPD that is close to be unimodal. We also verify experimentally that a proposed model can be effective for statistical modeling of ordinal data and OR tasks.

Researchers from The University of Tokyo and Hitotsubashi University developed a self-supervised Graph Convolutional Network (GCN) framework for direct mesh inpainting, leveraging a self-prior from a single incomplete mesh. This method effectively fills holes in 3D triangular meshes without requiring large training datasets or converting mesh formats, demonstrating superior performance over traditional methods and robustness to out-of-distribution shapes.

The meanings and relationships of words shift over time. This phenomenon is referred to as semantic shift. Research focused on understanding how semantic shifts occur over multiple time periods is essential for gaining a detailed understanding of semantic shifts. However, detecting change points only between adjacent time periods is insufficient for analyzing detailed semantic shifts, and using BERT-based methods to examine word sense proportions incurs a high computational cost. To address those issues, we propose a simple yet intuitive framework for how semantic shifts occur over multiple time periods by leveraging a similarity matrix between the embeddings of the same word through time. We compute a diachronic word similarity matrix using fast and lightweight word embeddings across arbitrary time periods, making it deeper to analyze continuous semantic shifts. Additionally, by clustering the similarity matrices for different words, we can categorize words that exhibit similar behavior of semantic shift in an unsupervised manner.

16 Sep 2025

The theory of embedding and generalized synchronization in reservoir computing has recently been developed. Under ideal conditions, reservoir computing exhibits generalized synchronization during the learning process. These insights form a rigorous basis for understanding reservoir computing ability to reconstruct and predict complex dynamics. In this study, we clarified the dynamical system structures of generalized synchronization and embedding by comparing the Lyapunov exponents of a high dimensional neural network within the reservoir computing model with those in actual systems. Furthermore, we numerically calculated the Lyapunov exponents restricted to the tangent space of the inertial manifold in a high dimensional neural network. Our results demonstrate that all Lyapunov exponents of the actual dynamics, including negative ones, are successfully identified.

07 Jun 2022

We consider enstrophy dissipation in two-dimensional (2D) Navier-Stokes flows and focus on how this quantity behaves in thelimit of vanishing viscosity. After recalling a number of a priori estimates providing lower and upper bounds on this quantity, we state an optimization problem aimed at probing the sharpness of these estimates as functions of viscosity. More precisely, solutions of this problem are the initial conditions with fixed palinstrophy and possessing the property that the resulting 2D Navier-Stokes flows locally maximize the enstrophy dissipation over a given time window. This problem is solved numerically with an adjoint-based gradient ascent method and solutions obtained for a broad range of viscosities and lengths of the time window reveal the presence of multiple branches of local maximizers, each associated with a distinct mechanism for the amplification of palinstrophy. The dependence of the maximum enstrophy dissipation on viscosity is shown to be in quantitative agreement with the estimate due to Ciampa, Crippa & Spirito (2021), demonstrating the sharpness of this bound.

23 Sep 2014

Ultra-high dimensional longitudinal data are increasingly common and the analysis is challenging both theoretically and methodologically. We offer a new automatic procedure for finding a sparse semivarying coefficient model, which is widely accepted for longitudinal data analysis. Our proposed method first reduces the number of covariates to a moderate order by employing a screening procedure, and then identifies both the varying and constant coefficients using a group SCAD estimator, which is subsequently refined by accounting for the within-subject correlation. The screening procedure is based on working independence and B-spline marginal models. Under weaker conditions than those in the literature, we show that with high probability only irrelevant variables will be screened out, and the number of selected variables can be bounded by a moderate order. This allows the desirable sparsity and oracle properties of the subsequent structure identification step. Note that existing methods require some kind of iterative screening in order to achieve this, thus they demand heavy computational effort and consistency is not guaranteed. The refined semivarying coefficient model employs profile least squares, local linear smoothing and nonparametric covariance estimation, and is semiparametric efficient. We also suggest ways to implement the proposed methods, and to select the tuning parameters. An extensive simulation study is summarized to demonstrate its finite sample performance and the yeast cell cycle data is analyzed.

23 Dec 2024

This paper presents a novel generic asymptotic expansion formula of expectations of multidimensional Wiener functionals through a Malliavin calculus technique. The uniform estimate of the asymptotic expansion is shown under a weaker condition on the Malliavin covariance matrix of the target Wiener functional. In particular, the method provides a tractable expansion for the expectation of an irregular functional of the solution to a multidimensional rough differential equation driven by fractional Brownian motion with Hurst index H<1/2, without using complicated fractional integral calculus for the singular kernel. In a numerical experiment, our expansion shows a much better approximation for a probability distribution function than its normal approximation, which demonstrates the validity of the proposed method.

25 Oct 2024

Forecasting volatility and quantiles of financial returns is essential for

accurately measuring financial tail risks, such as value-at-risk and expected

shortfall. The critical elements in these forecasts involve understanding the

distribution of financial returns and accurately estimating volatility. This

paper introduces an advancement to the traditional stochastic volatility model,

termed the realized stochastic volatility model, which integrates realized

volatility as a precise estimator of volatility. To capture the well-known

characteristics of return distribution, namely skewness and heavy tails, we

incorporate three types of skew-t distributions. Among these, two distributions

include the skew-normal feature, offering enhanced flexibility in modeling the

return distribution. We employ a Bayesian estimation approach using the Markov

chain Monte Carlo method and apply it to major stock indices. Our empirical

analysis, utilizing data from US and Japanese stock indices, indicates that the

inclusion of both skewness and heavy tails in daily returns significantly

improves the accuracy of volatility and quantile forecasts.

23 May 2023

Human flow data are rich behavioral data relevant to people's decision-making regarding where to live, work, go shopping, etc., and provide vital information for identifying city centers. However, it is not as easy to understand massive relational data, and datasets have often been reduced merely to the statistics of trip counts at destinations, discarding relational information from origin to destination. In this study, we propose an alternative center identification method based on human mobility data. This method extracts the scalar potential field of human trips based on combinatorial Hodge theory. It detects not only statistically significant attractive locations as the sinks of human trips but also significant origins as the sources of trips. As a case study, we identify the sinks and sources of commuting and shopping trips in the Tokyo metropolitan area. This aim-specific analysis leads to a combinatorial classification of city centers based on the distinct aspects of human mobility. The proposed method can be applied to other mobility datasets with relevant properties and helps us examine the complex spatial structures in contemporary metropolitan areas from the multiple perspectives of human mobility.

07 Oct 2022

Chaotic dynamics can be quite heterogeneous in the sense that in some regions the dynamics are unstable in more directions than in other regions. When trajectories wander between these regions, the dynamics is complicated. We say a chaotic invariant set is heterogeneous when arbitrarily close to each point of the set there are different periodic points with different numbers of unstable dimensions. We call such dynamics heterogeneous chaos (or hetero-chaos), While we believe it is common for physical systems to be hetero-chaotic, few explicit examples have been proved to be hetero-chaotic. Here we present two more explicit dynamical systems that are particularly simple and tractable with computer. It will give more intuition as to how complex even simple systems can be. Our maps have one dense set of periodic points whose orbits are 1D unstable and another dense set of periodic points whose orbits are 2D unstable. Moreover, they are ergodic relative to the Lebesgue measure.

There are no more papers matching your filters at the moment.