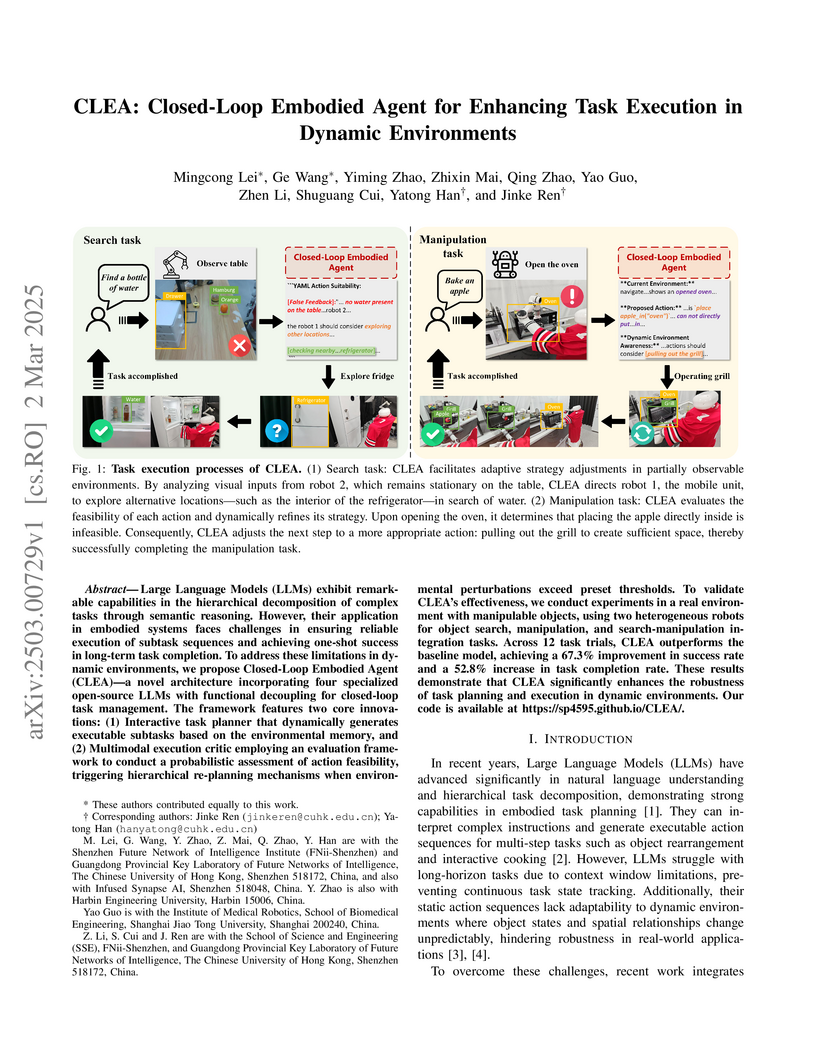

Researchers from CUHK-Shenzhen and Shanghai Jiao Tong University introduce CLEA, a closed-loop framework for robust LLM-based robotic task planning in which four specialized language models work in concert. It achieves a 67.3% improvement in success rate over baseline systems and demonstrates practical deployment in dynamic real-world environments.

The STMA framework enhances LLM-based agents for long-horizon embodied task planning by integrating a dynamic spatio-temporal memory with a self-correcting planner-critic mechanism. This approach achieves a 31.25% improvement in task success rate and a 24.7% increase in average score over baselines in TextWorld, particularly when leveraging open-source LLMs.

SAAP, a structured pruning framework for large language models, employs adaptive importance assessment and group-wise fine-tuning to significantly reduce computational and memory costs. It achieves up to 65% faster token generation and substantially lowers memory footprint on various LLMs like LLaMA and Vicuna, while maintaining or improving performance across diverse benchmarks.

Shanghai Jiao Tong University

Nanyang Technological University
