International Institute of Physics, Federal University of Rio Grande do Norte

13 Nov 2019

Steady technological advances are paving the way for the implementation of the quantum internet, a network of locations interconnected by quantum channels. Here we propose a model to simulate a quantum internet based on optical fibers and employ network-theory techniques to characterize the statistical properties of the photonic networks it generates. Our model predicts a continuous phase transition between a disconnected and a highly connected phase, which we characterize by computing the critical exponents. Moreover, we show that, although the networks do not present the small-world property, the average distance between nodes is typically small.
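The transition between a disconnected and a highly connected phase can be illustrated with a toy simulation. The sketch below is not the model proposed in the abstract: it assumes nodes placed uniformly in a disk and a link probability that decays exponentially with fiber length, and the names `largest_component_fraction`, `radius`, and `attenuation_length` are hypothetical. It reports the fraction of nodes in the largest connected component of one sampled network.

```python
import math
import random


def largest_component_fraction(n_nodes, radius, attenuation_length, seed=0):
    """Fraction of nodes in the largest connected component of one sampled network."""
    rng = random.Random(seed)
    # Place nodes uniformly at random inside a disk of the given radius.
    nodes = []
    for _ in range(n_nodes):
        r = radius * math.sqrt(rng.random())
        theta = 2.0 * math.pi * rng.random()
        nodes.append((r * math.cos(theta), r * math.sin(theta)))

    parent = list(range(n_nodes))  # union-find forest over node indices

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(n_nodes):
        for j in range(i + 1, n_nodes):
            d = math.dist(nodes[i], nodes[j])
            # ASSUMPTION: link probability decays exponentially with fiber length.
            if rng.random() < math.exp(-d / attenuation_length):
                parent[find(i)] = find(j)

    sizes = {}
    for i in range(n_nodes):
        root = find(i)
        sizes[root] = sizes.get(root, 0) + 1
    return max(sizes.values()) / n_nodes


if __name__ == "__main__":
    # Sweep the node count at fixed area to watch the giant component emerge.
    for n in (10, 50, 200):
        print(n, largest_component_fraction(n, radius=10.0, attenuation_length=1.0))
```

Sweeping the node density (or the attenuation length) and averaging over many seeds would give the order-parameter curve from which critical behavior is usually read off.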

Training SER models in natural, spontaneous speech is especially challenging due to the subtle expression of emotions and the unpredictable nature of real-world audio. In this paper, we present a robust system for the INTERSPEECH 2025 Speech Emotion Recognition in Naturalistic Conditions Challenge, focusing on categorical emotion recognition. Our method combines state-of-the-art audio models with text features enriched by prosodic and spectral cues. In particular, we investigate the effectiveness of Fundamental Frequency (F0) quantization and the use of a pretrained audio tagging model. We also employ an ensemble model to improve robustness. On the official test set, our system achieved a Macro F1-score of 39.79% (42.20% on validation). Our results underscore the potential of these methods, and analysis of fusion techniques confirmed the effectiveness of Graph Attention Networks. Our source code is publicly available.
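As a rough illustration of what F0 quantization can look like, the sketch below maps a frame-level pitch contour to integer tokens. The abstract does not describe the authors' scheme, so everything here is an assumption: the function name `quantize_f0`, the number of bins, the 50 to 500 Hz range, log-spaced bins, and the convention that token 0 marks unvoiced frames.

```python
import math
from bisect import bisect_right


def quantize_f0(f0_hz, n_bins=32, f_min=50.0, f_max=500.0):
    """Map an F0 contour in Hz to integer tokens; 0 is reserved for unvoiced frames."""
    lo, hi = math.log(f_min), math.log(f_max)
    step = (hi - lo) / n_bins
    # ASSUMPTION: log-spaced bins, since pitch perception is roughly logarithmic.
    interior_edges = [lo + k * step for k in range(1, n_bins)]
    tokens = []
    for f in f0_hz:
        if f <= 0:  # unvoiced frame (pitch trackers commonly report 0 here)
            tokens.append(0)
            continue
        x = math.log(min(max(f, f_min), f_max))  # clip to the supported range
        tokens.append(bisect_right(interior_edges, x) + 1)  # tokens 1..n_bins
    return tokens


if __name__ == "__main__":
    contour = [0.0, 110.0, 112.5, 180.0, 0.0, 240.0]
    print(quantize_f0(contour))
```

Tokens of this kind could then be embedded or appended to text features as a prosodic cue; how the challenge system actually consumes them is not stated in the abstract.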

12 Aug 2025

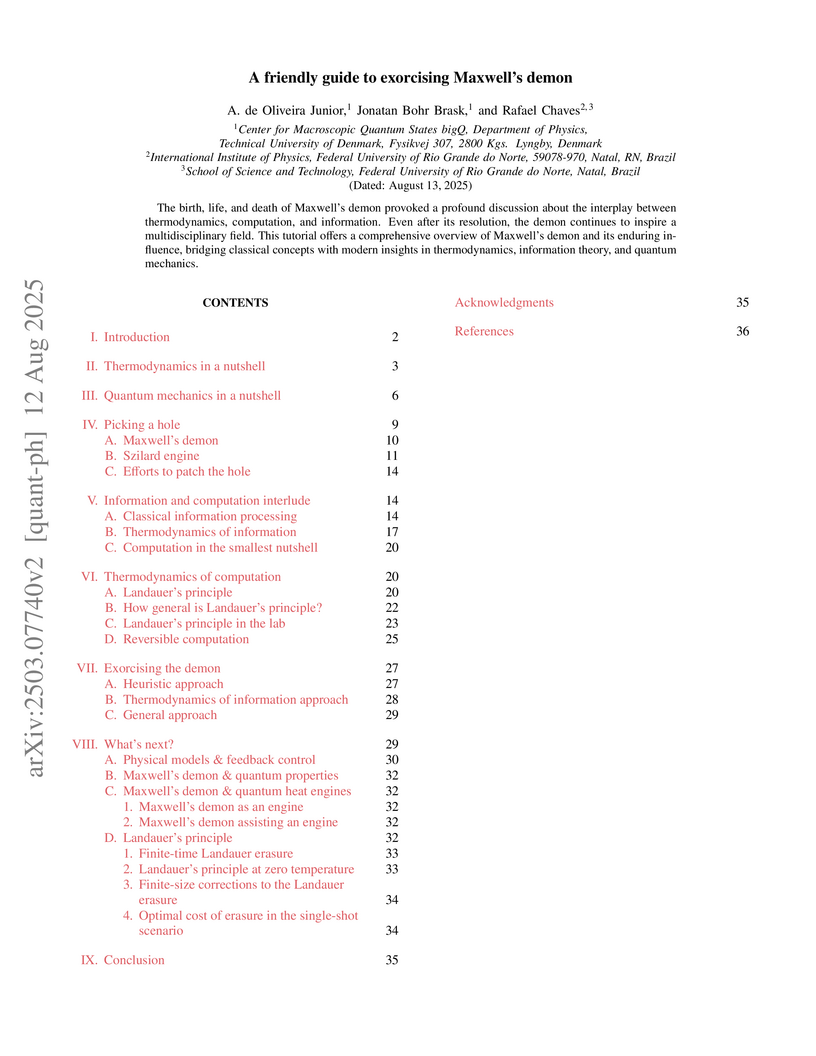

The birth, life, and death of Maxwell's demon provoked a profound discussion about the interplay between thermodynamics, computation, and information. Even after its exorcism, the demon continues to inspire a multidisciplinary field. This tutorial offers a comprehensive overview of Maxwell's demon and its enduring influence, bridging classical concepts with modern insights in thermodynamics, information theory, and quantum mechanics.

02 Sep 2021

The presence of non-local and long-range interactions in quantum systems induces several peculiar features in their equilibrium and out-of-equilibrium behavior. In current experimental platforms control parameters such as interaction range, temperature, density and dimension can be changed. The existence of universal scaling regimes, where diverse physical systems and observables display quantitative agreement, generates a common framework, where the efforts of different research communities can be -- in some cases rigorously -- connected. Still, the application of this general framework to particular experimental realisations requires the identification of the regimes where the universality phenomenon is expected to appear. In the present review we summarise the recent investigations of many-body quantum systems with long-range interactions, which are currently realised in Rydberg atom arrays, dipolar systems, trapped ion setups and cold atoms in cavity experiments. Our main aim is to present and identify the common and (mostly) universal features induced by long-range interactions in the behaviour of quantum many-body systems. We will discuss both the case of very strong non-local couplings, i.e. the non-additive regime, and the one in which energy is extensive, but nevertheless low-energy, long wavelength properties are altered with respect to the short-range limit. Cases of competition with other local effects in the above mentioned setups are also reviewed.

This work explores an alternative perspective on quantum mechanics by representing quantum states as topological entities and quantum processes as topological transformations. It provides a visual and intuitive framework for understanding concepts such as quantum entanglement and quantum algorithms by drawing connections between quantum theory, knot theory, and topological quantum field theory.

11 Dec 2022

Bell nonlocality is one of the most intriguing and counter-intuitive phenomena displayed by quantum systems. Interestingly, such stronger-than-classical quantum correlations are somehow constrained, and one important question to the foundations of quantum theory is whether there is a physical, operational principle responsible for those constraints. One candidate is the information causality principle, which, in some particular cases, is proven to hold for quantum systems and to be violated by stronger-than-quantum correlations. In multipartite scenarios, though, it is known that the original formulation of the information causality principle fails to detect even extremal stronger-than-quantum correlations, thus suggesting that a genuinely multipartite formulation of the principle is necessary. In this work, we advance towards this goal, reporting a new formulation of the information causality principle in multipartite scenarios. By proposing a change of perspective, we obtain multipartite informational inequalities that work as necessary criteria for the principle to hold. We prove that such inequalities hold for all quantum resources, and forbid some stronger-than-quantum ones. Finally, we show that our approach can be strengthened if multiple copies of the resource are available, or, counter-intuitively, if noisy communication channels are employed.

17 Sep 2025

CNRS, National University of Singapore, Xiamen University, Lanzhou University, Università degli Studi dell’Insubria, Istituto Nazionale di Fisica Nucleare, Centre for Quantum Technologies, International Institute of Physics, MajuLab, Federal University of Rio Grande do Norte, Lanzhou Center for Theoretical Physics, Center for Nonlinear and Complex Systems, Fujian Provincial Key Laboratory of Low Dimensional Condensed Matter Physics

This work aims to understand how quantum mechanics affects heat transport at low temperatures. In the classical setting, by considering a simple paradigmatic model, our simulations reveal the emergence of Negative Differential Thermal Resistance (NDTR): paradoxically, increasing the temperature bias by lowering the cold bath temperature reduces the steady-state heat current. In sharp contrast, the quantum version of the model, treated via a Lindblad master equation, exhibits no NDTR: the heat current increases monotonically with thermal bias. This marked divergence highlights the fundamental role of quantum effects in low-temperature thermal transport and underscores the need to reconsider classical predictions when designing and optimizing nanoscale thermal devices.

30 Jul 2025

Advances in quantum computing over the last two decades have required sophisticated mathematical frameworks to deepen the understanding of quantum algorithms. In this review, we introduce the theory of Lie groups and their algebras to analyze two fundamental problems in quantum computing, following some recent works. First, we describe the geometric formulation of quantum computational complexity, given by the length of the shortest path on the $SU(2^n)$ manifold with respect to a right-invariant Finsler metric. Second, we deal with the barren plateau phenomenon in Variational Quantum Algorithms (VQAs), where we use the Dynamical Lie Algebra (DLA) to identify algebraic sources of untrainability.
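To make the Dynamical Lie Algebra concrete, the following sketch computes the dimension of the real Lie algebra generated by a set of anti-Hermitian matrices by repeatedly taking commutators with the generators and keeping only linearly independent results. This is a generic Lie-closure routine written for illustration, not code from the review; the function name `lie_closure_dim` and the tolerance are assumptions, and NumPy is required.

```python
import numpy as np


def lie_closure_dim(generators, tol=1e-10, max_iter=100):
    """Dimension of the real Lie algebra generated by anti-Hermitian matrices."""

    def as_real_vec(m):
        # Real and imaginary parts stacked, so rank is taken over the reals.
        return np.concatenate([m.real.ravel(), m.imag.ravel()])

    basis = []  # linearly independent matrices found so far
    vecs = []   # their real vectorizations, used for rank checks

    def try_add(m):
        norm = np.linalg.norm(m)
        if norm < tol:
            return None
        m = m / norm  # normalize to keep the rank test well conditioned
        stacked = np.array(vecs + [as_real_vec(m)])
        if np.linalg.matrix_rank(stacked, tol=tol) > len(vecs):
            basis.append(m)
            vecs.append(as_real_vec(m))
            return m
        return None

    for g in generators:
        try_add(g)

    frontier = list(basis)
    for _ in range(max_iter):
        new = []
        for a in frontier:
            for g in generators:
                added = try_add(a @ g - g @ a)  # commutator [a, g]
                if added is not None:
                    new.append(added)
        if not new:
            break
        frontier = new
    return len(basis)


if __name__ == "__main__":
    X = np.array([[0, 1], [1, 0]], dtype=complex)
    Z = np.array([[1, 0], [0, -1]], dtype=complex)
    # Generators iX and iZ close into su(2).
    print(lie_closure_dim([1j * X, 1j * Z]))
```

For a variational circuit, the generators would be $i$ times the Hermitian operators exponentiated by the parameterized gates; the cost of this brute-force closure grows quickly with qubit number, so it is only practical for small systems.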

27 Sep 2025

Global analysis of neutrino oscillation data slightly favors normal mass ordering. In this work, we investigate an extended scalar sector that naturally gives rise to a type I + II seesaw mechanism after spontaneous symmetry breaking and explore the interplay between collider physics and lepton flavor violation, adopting normal ordering. In particular, we focus on the rare muon decays $\mu \to e\gamma$ and $\mu \to 3e$ and the same-sign dilepton searches at the LHC, a canonical signature of a doubly charged scalar. We conclude that neither the precise value of the sum of the neutrino masses, taken from DESI data that favor $\sum m_\nu = 0.07$ eV, nor alternative cosmological fits that prefer a more relaxed limit $\sum m_\nu = 0.1$ eV, significantly changes the theoretical prediction for these rare decays. However, we observe an interesting interplay between collider physics and lepton flavor violation depending on the choice of the vacuum expectation value of the triplet scalar. In particular, we find that $\mu \to 3e$ is more constraining than $\mu \to e\gamma$, and the $\mu \to 3e$ decay can yield a lower mass limit of 3 TeV on the doubly charged scalar, surpassing the current LHC constraint.

05 Jun 2012

The coherent states for a quantum particle on a Möbius strip are constructed and their relation with the natural phase space for fermionic fields is shown. The explicit comparison of the obtained states with previous works where the cylinder quantization was used and the spin 1/2 was introduced by hand is given, and the relation between the geometrical phase space, constraints and projection operators is analyzed and discussed.

24 May 2018

Experimental interest and developments in quantum spin-1/2 chains have increased uninterruptedly over the last decade. In many instances, the target quantum simulation belongs to the broader class of non-interacting fermionic models, constituting an important benchmark. In spite of this class being analytically efficiently tractable, no direct certification tool has yet been reported for it. In fact, in experiments, certification has almost exclusively relied on notions of quantum state tomography scaling very unfavorably with the system size. Here, we develop experimentally-friendly fidelity witnesses for all pure fermionic Gaussian target states. Their expectation value yields a tight lower bound to the fidelity and can be measured efficiently. We derive witnesses in full generality in the Majorana-fermion representation and apply them to experimentally relevant spin-1/2 chains. Among others, we show how to efficiently certify strongly out-of-equilibrium dynamics in critical Ising chains. At the heart of the measurement scheme is a variant of importance sampling specially tailored to overlaps between covariance matrices. The method is shown to be robust against finite experimental-state infidelities.

We investigate the sensitivity of the projected TeV muon collider to the gauged $L_\mu - L_\tau$ model. Two processes are considered: $Z'$-mediated two-body scatterings $\mu^+ \mu^- \to \ell^+ \ell^-$ with $\ell = \mu$ or $\tau$, and scattering with initial state photon emission, $\mu^+ \mu^- \to \gamma Z',~Z' \to \ell \overline{\ell}$, where $\ell$ can be $\mu$, $\tau$ or $\nu_{\mu/\tau}$. We quantitatively study the sensitivities of these two processes by taking into account possible signals and relevant backgrounds in a muon collider experiment with a center-of-mass energy $\sqrt{s} = 3~{\rm TeV}$ and a luminosity $L = 1~{\rm ab}^{-1}$. For two-body scattering one can exclude $Z'$ masses $M_{Z'} \lesssim 100~{\rm TeV}$ with $\mathcal{O}(1)$ gauge couplings. When $M_{Z'} \lesssim 1~{\rm TeV} < \sqrt{s}$, one can exclude $g' \gtrsim 2\times 10^{-2}$. The process with photon emission is more powerful than the two-body scattering if $M_{Z'} < \sqrt{s}$. For instance, a sensitivity of $g' \simeq 4 \times 10^{-3}$ can be achieved at $M_{Z'} = 1~{\rm TeV}$. The parameter spaces favored by the $(g-2)_{\mu}$ and $B$ anomalies with $M_{Z'} > 100~{\rm GeV}$ are entirely covered by a muon collider.

31 Dec 2024

In this work, we use publicly available data from the ATLAS collaboration, collected during LHC Run 2 at a center-of-mass energy of $\sqrt{s} = 13$ TeV with an integrated luminosity of 139 fb$^{-1}$, to derive lower mass limits on the $Z'$ gauge boson associated with the B-L gauge symmetry. Using dilepton data we find that $M_{Z'} > 4$ TeV (6 TeV) for $g_{BL} = 0.1$ ($g_{BL} = 0.5$) in the absence of invisible decays. Once invisible decays are turned on, these limits are substantially relaxed. Assuming an invisible branching ratio of ${\rm BR}_{\rm inv} = 0.9$, the LHC bound is loosened to $M_{Z'} > 4.8$ TeV for $g_{BL} = 0.5$. This analysis confirms that the LHC now imposes stricter constraints than the longstanding bounds established by LEP. We also estimate the projected bounds for the HL-LHC, which will operate at $\sqrt{s} = 14$ TeV with a planned integrated luminosity of $L = 3$ ab$^{-1}$ and will probe $Z'$ masses up to 7.5 TeV.

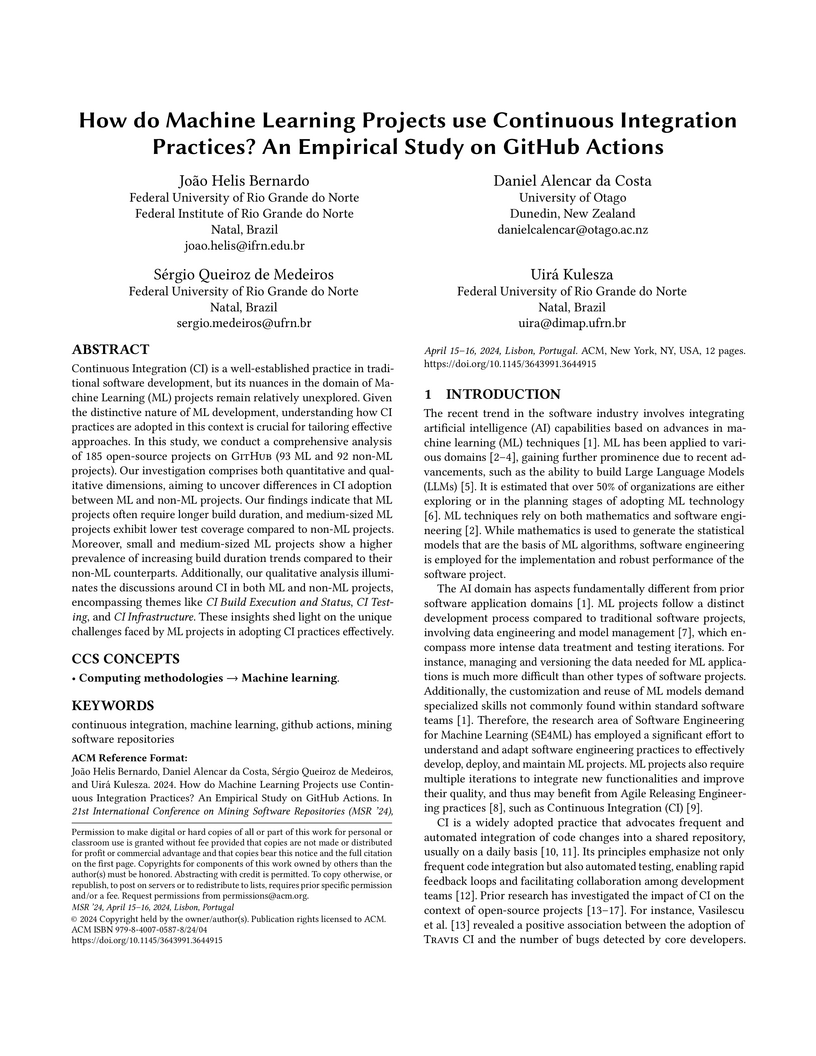

Continuous Integration (CI) is a well-established practice in traditional software development, but its nuances in the domain of Machine Learning (ML) projects remain relatively unexplored. Given the distinctive nature of ML development, understanding how CI practices are adopted in this context is crucial for tailoring effective approaches. In this study, we conduct a comprehensive analysis of 185 open-source projects on GitHub (93 ML and 92 non-ML projects). Our investigation comprises both quantitative and qualitative dimensions, aiming to uncover differences in CI adoption between ML and non-ML projects. Our findings indicate that ML projects often require longer build durations, and medium-sized ML projects exhibit lower test coverage compared to non-ML projects. Moreover, small and medium-sized ML projects show a higher prevalence of increasing build duration trends compared to their non-ML counterparts. Additionally, our qualitative analysis illuminates the discussions around CI in both ML and non-ML projects, encompassing themes like CI Build Execution and Status, CI Testing, and CI Infrastructure. These insights shed light on the unique challenges faced by ML projects in adopting CI practices effectively.

29 Dec 2023

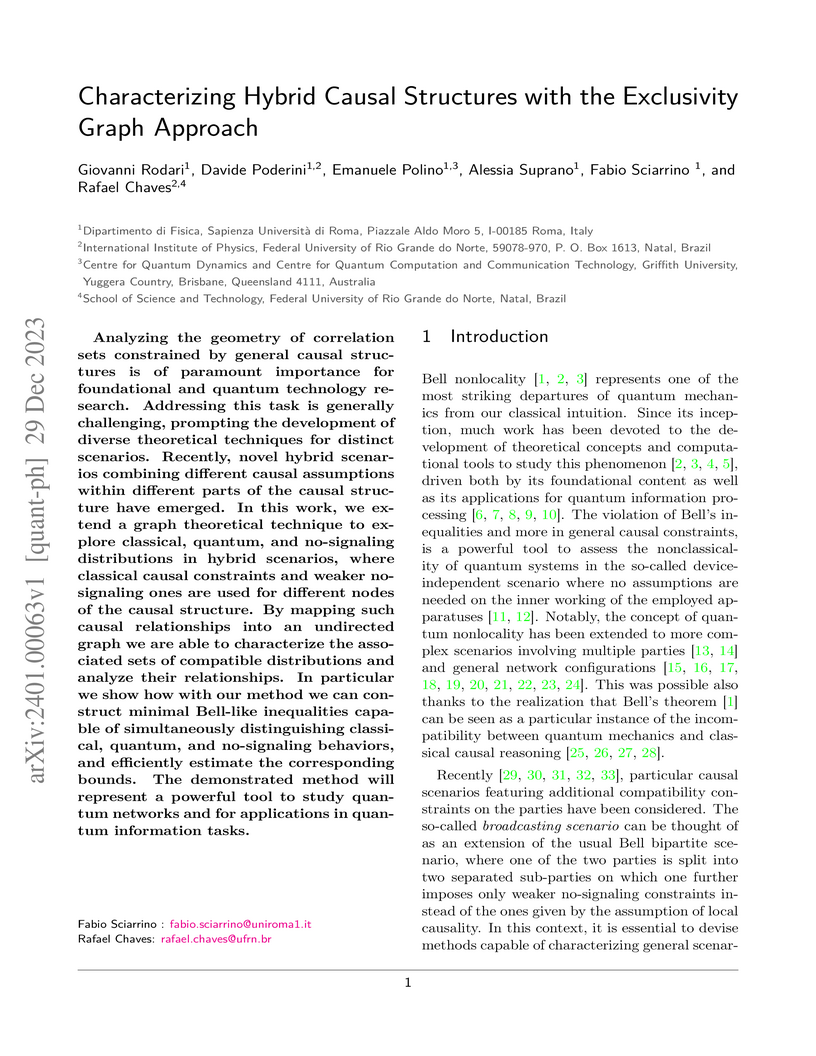

Analyzing the geometry of correlation sets constrained by general causal structures is of paramount importance for foundational and quantum technology research. Addressing this task is generally challenging, prompting the development of diverse theoretical techniques for distinct scenarios. Recently, novel hybrid scenarios combining different causal assumptions within different parts of the causal structure have emerged. In this work, we extend a graph theoretical technique to explore classical, quantum, and no-signaling distributions in hybrid scenarios, where classical causal constraints and weaker no-signaling ones are used for different nodes of the causal structure. By mapping such causal relationships into an undirected graph we are able to characterize the associated sets of compatible distributions and analyze their relationships. In particular we show how with our method we can construct minimal Bell-like inequalities capable of simultaneously distinguishing classical, quantum, and no-signaling behaviors, and efficiently estimate the corresponding bounds. The demonstrated method will represent a powerful tool to study quantum networks and for applications in quantum information tasks.

11 Aug 2014

A proposal to describe gravity duals of conformal theories with boundaries (AdS/BCFT correspondence) was put forward by Takayanagi a few years ago. However, interesting solutions describing field theories at finite temperature and charge density are still lacking. In this paper we describe a class of theories with boundary, which admit black hole type gravity solutions. The theories are specified by stress-energy tensors that reside on the extensions of the boundary to the bulk. From this perspective AdS/BCFT appears analogous to the fluid/gravity correspondence. Among the class of the boundary extensions there is a special (integrable) one, for which the stress-energy tensor is fluid-like. We discuss features of that special solution as well as its thermodynamic properties.

We consider the quality factor Q, which quantifies the trade-off between power, efficiency, and fluctuations in steady-state heat engines modeled by dynamical systems. We show that the nonlinear scattering theory, both in classical and quantum mechanics, sets the bound Q=3/8 when approaching the Carnot efficiency. On the other hand, interacting, nonintegrable and momentum-conserving systems can achieve the value Q=1/2, which is the universal upper bound in linear response. This result shows that interactions are necessary to achieve the optimal performance of a steady-state heat engine.

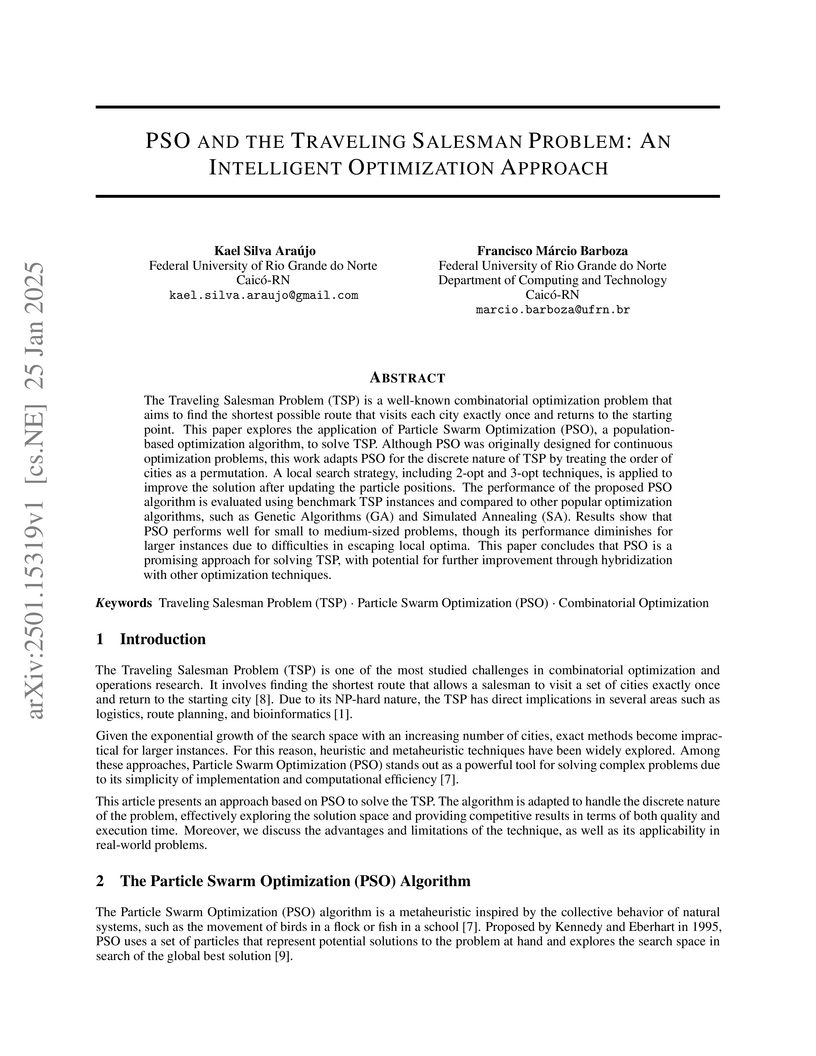

The Traveling Salesman Problem (TSP) is a well-known combinatorial optimization problem that aims to find the shortest possible route that visits each city exactly once and returns to the starting point. This paper explores the application of Particle Swarm Optimization (PSO), a population-based optimization algorithm, to solve TSP. Although PSO was originally designed for continuous optimization problems, this work adapts PSO for the discrete nature of TSP by treating the order of cities as a permutation. A local search strategy, including 2-opt and 3-opt techniques, is applied to improve the solution after updating the particle positions. The performance of the proposed PSO algorithm is evaluated using benchmark TSP instances and compared to other popular optimization algorithms, such as Genetic Algorithms (GA) and Simulated Annealing (SA). Results show that PSO performs well for small to medium-sized problems, though its performance diminishes for larger instances due to difficulties in escaping local optima. This paper concludes that PSO is a promising approach for solving TSP, with potential for further improvement through hybridization with other optimization techniques.
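The local-search refinement mentioned above can be sketched in isolation. The code below implements a plain 2-opt pass on a tour stored as a permutation of city indices; it is an illustrative sketch, not the paper's implementation, and it omits the PSO position update and the 3-opt move entirely. The function names `tour_length` and `two_opt` and the example coordinates are assumptions.

```python
import math
import random


def tour_length(tour, coords):
    """Total length of the closed tour (returns to the starting city)."""
    n = len(tour)
    return sum(
        math.dist(coords[tour[i]], coords[tour[(i + 1) % n]]) for i in range(n)
    )


def two_opt(tour, coords):
    """Reverse tour segments for as long as a reversal shortens the tour."""
    best = list(tour)
    best_len = tour_length(best, coords)
    improved = True
    while improved:
        improved = False
        for i in range(1, len(best) - 1):
            for j in range(i + 1, len(best)):
                # Reversing best[i..j] replaces two edges of the tour.
                candidate = best[:i] + best[i:j + 1][::-1] + best[j + 1:]
                cand_len = tour_length(candidate, coords)
                if cand_len < best_len - 1e-12:
                    best, best_len = candidate, cand_len
                    improved = True
    return best, best_len


if __name__ == "__main__":
    rng = random.Random(0)
    coords = [(rng.random(), rng.random()) for _ in range(20)]
    start = list(range(20))
    rng.shuffle(start)
    tour, length = two_opt(start, coords)
    print(tour_length(start, coords), "->", length)
```

In a PSO hybrid, a routine like this would typically be applied to each particle's permutation after its position update. Recomputing the full tour length for every candidate keeps the sketch short but costs O(n) per move; an incremental edge-difference evaluation is the usual optimization.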

25 Jul 2016

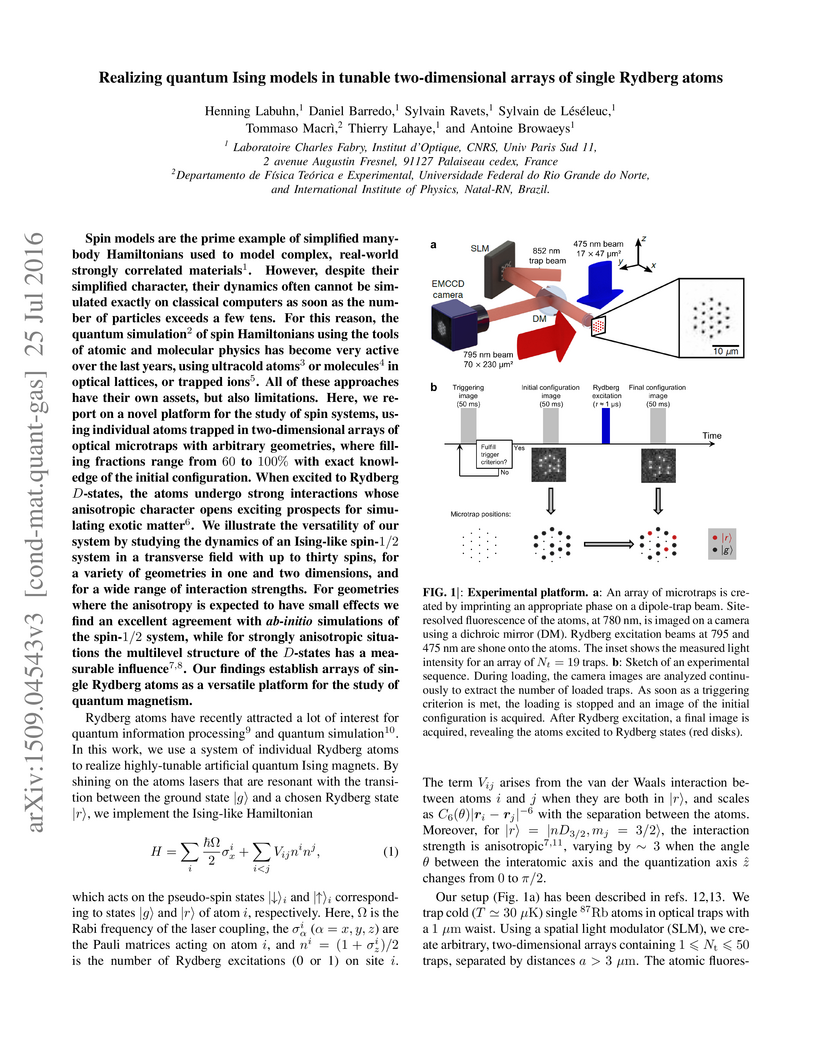

Spin models are the prime example of simplified many-body Hamiltonians used to model complex, real-world strongly correlated materials. However, despite their simplified character, their dynamics often cannot be simulated exactly on classical computers as soon as the number of particles exceeds a few tens. For this reason, the quantum simulation of spin Hamiltonians using the tools of atomic and molecular physics has become very active in recent years, using ultracold atoms or molecules in optical lattices, or trapped ions. All of these approaches have their own assets, but also limitations. Here, we report on a novel platform for the study of spin systems, using individual atoms trapped in two-dimensional arrays of optical microtraps with arbitrary geometries, where filling fractions range from 60 to 100% with exact knowledge of the initial configuration. When excited to Rydberg D-states, the atoms undergo strong interactions whose anisotropic character opens exciting prospects for simulating exotic matter. We illustrate the versatility of our system by studying the dynamics of an Ising-like spin-1/2 system in a transverse field with up to thirty spins, for a variety of geometries in one and two dimensions, and for a wide range of interaction strengths. For geometries where the anisotropy is expected to have small effects we find excellent agreement with ab-initio simulations of the spin-1/2 system, while for strongly anisotropic situations the multilevel structure of the D-states has a measurable influence. Our findings establish arrays of single Rydberg atoms as a versatile platform for the study of quantum magnetism.

30 Jun 2023

We compute the integrands of five-, six-, and seven-point correlation functions of twenty-prime operators with general polarizations at the two-loop order in $\mathcal{N}=4$ super Yang-Mills theory. In addition, we compute the integrand of the five-point function at three-loop order. Using the operator product expansion (OPE), we extract the two-loop four-point function of one Konishi operator and three twenty-prime operators. Two methods were used for computing the integrands. The first method is based on constructing an ansatz, and then numerically fitting for the coefficients using the twistor-space reformulation of $\mathcal{N}=4$ super Yang-Mills theory. The second method is based on the OPE decomposition. Only very few correlator integrands for more than four points were known before. Our results can be used to test conjectures, and to make progress on the integrability-based hexagonalization approach for correlation functions.
