JetBrains Research

JetBrains Research introduces ENVBENCH, a comprehensive benchmark for evaluating automated environment setup approaches, incorporating diverse Python, Java, and Kotlin repositories while providing standardized evaluation metrics and baseline comparisons that reveal current limitations in automated configuration capabilities.

Large Language Models are transforming software engineering, yet prompt management in practice remains ad hoc, hindering reliability, reuse, and integration into industrial workflows. We present Prompt-with-Me, a practical solution for structured prompt management embedded directly in the development environment. The system automatically classifies prompts using a four-dimensional taxonomy encompassing intent, author role, software development lifecycle stage, and prompt type. To enhance prompt reuse and quality, Prompt-with-Me suggests language refinements, masks sensitive information, and extracts reusable templates from a developer's prompt library. Our taxonomy study of 1108 real-world prompts demonstrates that modern LLMs can accurately classify software engineering prompts. Furthermore, our user study with 11 participants shows strong developer acceptance, with high usability (Mean SUS=73), low cognitive load (Mean NASA-TLX=21), and reported gains in prompt quality and efficiency through reduced repetitive effort. Lastly, we offer actionable insights for building the next generation of prompt management and maintenance tools for software engineering workflows.

Long Code Arena is a new benchmark suite designed to evaluate long-context code models on six distinct, real-world software engineering tasks that require project-wide understanding. The benchmarks show that while current LLMs can approach these complex tasks, there is significant room for improvement, particularly for open-source models, validating the need for advanced techniques in handling extensive code contexts.

27 Oct 2025

Large Language Model (LLM)-based agents solve complex tasks through iterative reasoning, exploration, and tool-use, a process that can result in long, expensive context histories. While state-of-the-art Software Engineering (SE) agents like OpenHands or Cursor use LLM-based summarization to tackle this issue, it is unclear whether the increased complexity offers tangible performance benefits compared to simply omitting older observations. We present a systematic comparison of these approaches within SWE-agent on SWE-bench Verified across five diverse model configurations. Moreover, we show initial evidence of our findings generalizing to the OpenHands agent scaffold. We find that a simple environment observation masking strategy halves cost relative to the raw agent while matching, and sometimes slightly exceeding, the solve rate of LLM summarization. Additionally, we introduce a novel hybrid approach that further reduces costs by 7% and 11% compared to just observation masking or LLM summarization, respectively. Our findings raise concerns regarding the trend towards pure LLM summarization and provide initial evidence of untapped cost reductions by pushing the efficiency-effectiveness frontier. We release code and data for reproducibility.

JetBrains Research introduces PandasPlotBench, a new benchmark of 175 human-curated tasks with synthetically generated data to evaluate Large Language Models (LLMs) in generating Python plotting code from natural language instructions. The work demonstrates that top LLMs achieve up to 89 task-based scores for Matplotlib and Seaborn but struggle considerably with Plotly (22% incorrect code rate), and surprisingly maintain high performance even with concise user prompts when provided with rich data descriptions.

JetBrains Research demonstrated that the OpenCoder 1.5B model can achieve project-level code completion performance comparable to models trained on hundreds of billions of tokens by utilizing only 72 million tokens, primarily through adapting Rotary Positional Embedding (RoPE) for extended context windows. This approach shows that efficient architectural adjustments can yield strong in-context learning capabilities with substantially fewer training resources.

27 Oct 2025

The Best of N Worlds: Aligning Reinforcement Learning with Best-of-N Sampling via max@k Optimisation

The Best of N Worlds: Aligning Reinforcement Learning with Best-of-N Sampling via max@k Optimisation

JetBrains Research introduced an off-policy reinforcement learning framework that optimizes Large Language Models for Best-of-N sampling in code generation tasks. The framework derives an unbiased gradient estimate for the `max@k` metric using continuous rewards, demonstrating superior performance on coding benchmarks by preventing diversity degradation.

28 May 2025

Benchmarks for Software Engineering (SE) AI agents, most notably SWE-bench,

have catalyzed progress in programming capabilities of AI agents. However, they

overlook critical developer workflows such as Version Control System (VCS)

operations. To address this issue, we present GitGoodBench, a novel benchmark

for evaluating AI agent performance on VCS tasks. GitGoodBench covers three

core Git scenarios extracted from permissive open-source Python, Java, and

Kotlin repositories. Our benchmark provides three datasets: a comprehensive

evaluation suite (900 samples), a rapid prototyping version (120 samples), and

a training corpus (17,469 samples). We establish baseline performance on the

prototyping version of our benchmark using GPT-4o equipped with custom tools,

achieving a 21.11% solve rate overall. We expect GitGoodBench to serve as a

crucial stepping stone toward truly comprehensive SE agents that go beyond mere

programming.

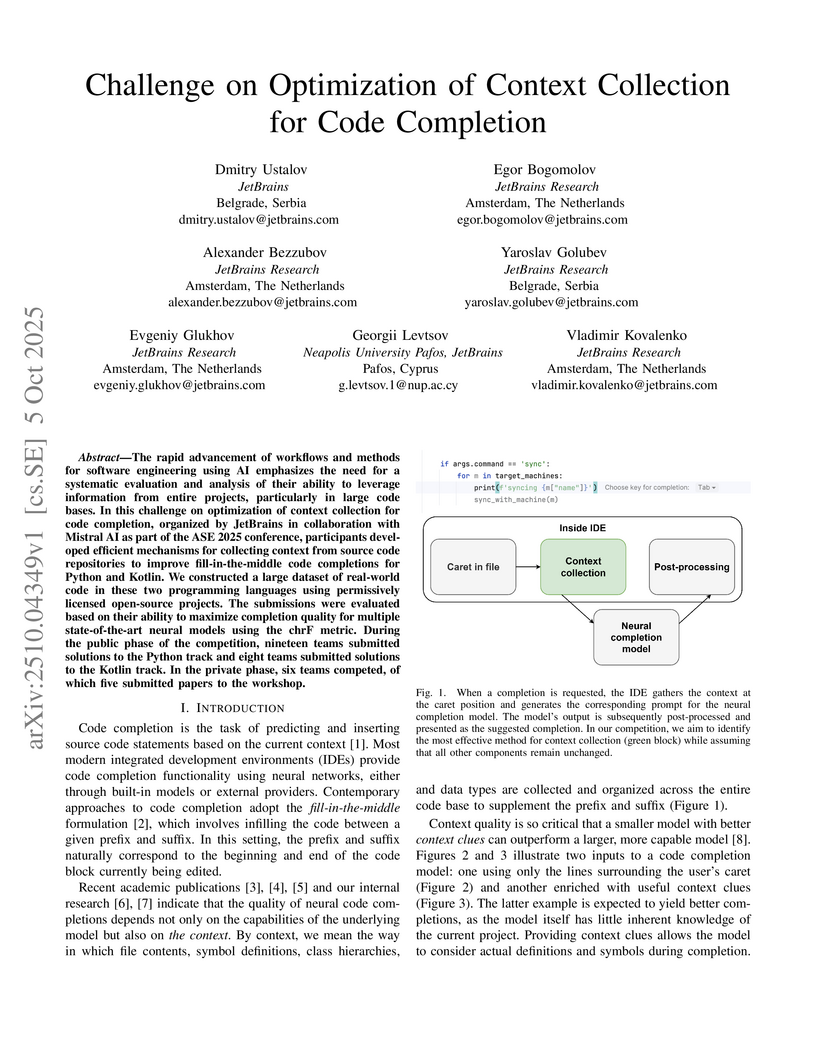

The rapid advancement of workflows and methods for software engineering using AI emphasizes the need for a systematic evaluation and analysis of their ability to leverage information from entire projects, particularly in large code bases. In this challenge on optimization of context collection for code completion, organized by JetBrains in collaboration with Mistral AI as part of the ASE 2025 conference, participants developed efficient mechanisms for collecting context from source code repositories to improve fill-in-the-middle code completions for Python and Kotlin. We constructed a large dataset of real-world code in these two programming languages using permissively licensed open-source projects. The submissions were evaluated based on their ability to maximize completion quality for multiple state-of-the-art neural models using the chrF metric. During the public phase of the competition, nineteen teams submitted solutions to the Python track and eight teams submitted solutions to the Kotlin track. In the private phase, six teams competed, of which five submitted papers to the workshop.

Researchers from JetBrains and Delft University of Technology conducted the first systematic literature review specifically on Human-AI Experience in Integrated Development Environments (in-IDE HAX), synthesizing 90 studies to map the research landscape and identify critical gaps, existing findings, and future directions across AI impact, design, and code quality.

10 Oct 2025

Machine learning (ML) libraries such as PyTorch and TensorFlow are essential for a wide range of modern applications. Ensuring the correctness of ML libraries through testing is crucial. However, ML APIs often impose strict input constraints involving complex data structures such as tensors. Automated test generation tools such as Pynguin are not aware of these constraints and often create non-compliant inputs. This leads to early test failures and limited code coverage. Prior work has investigated extracting constraints from official API documentation. In this paper, we present PynguinML, an approach that improves the Pynguin test generator to leverage these constraints to generate compliant inputs for ML APIs, enabling more thorough testing and higher code coverage. Our evaluation is based on 165 modules from PyTorch and TensorFlow, comparing PynguinML against Pynguin. The results show that PynguinML significantly improves test effectiveness, achieving up to 63.9 % higher code coverage.

This work introduces a framework that integrates Foundation Inference Models (FIMs) with an autoencoder architecture to identify coarse-grained latent variables and their governing dynamics from high-dimensional data. The approach rapidly and robustly recovers the drift and diffusion functions for a stochastic double-well system, demonstrating enhanced stability and computational efficiency.

30 Mar 2025

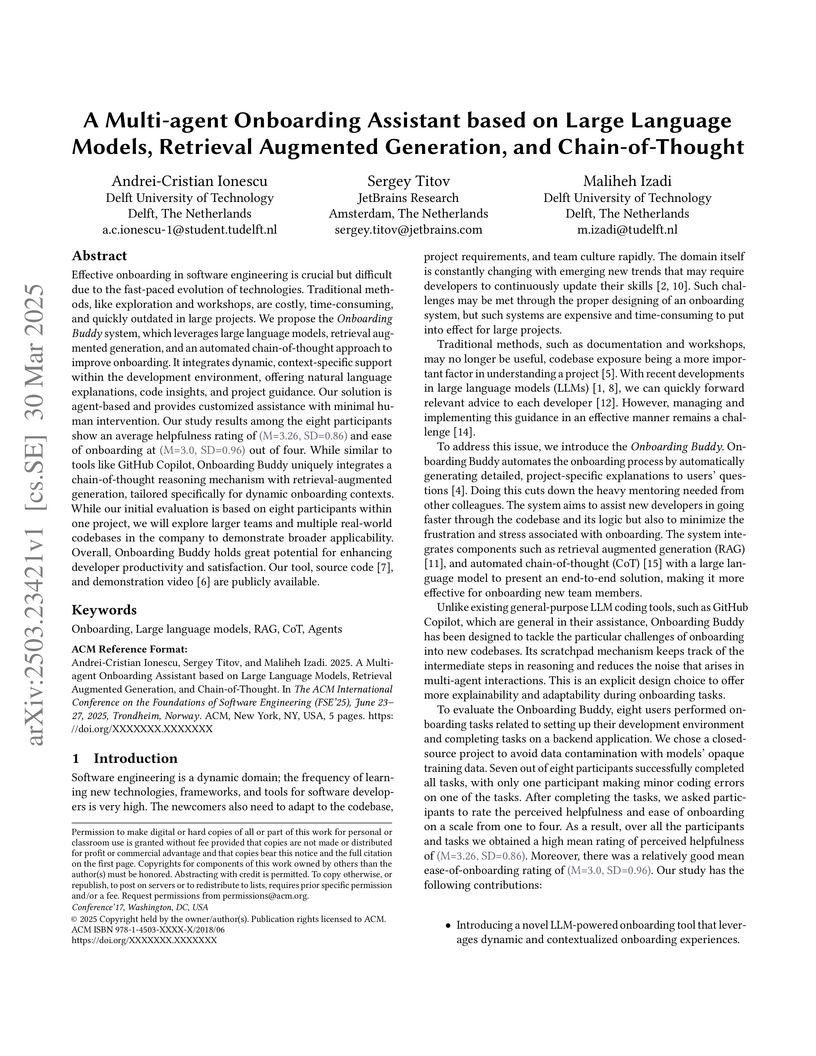

A multi-agent onboarding assistant, Onboarding Buddy, leverages Large Language Models, Retrieval Augmented Generation, and Chain-of-Thought reasoning to help new developers understand unfamiliar codebases and project requirements. An empirical study showed 7 out of 8 participants successfully completed onboarding tasks with a mean helpfulness rating of 3.26 out of 4.

09 Dec 2020

The process of writing code and use of features in an integrated development

environment (IDE) is a fruitful source of data in computing education research.

Existing studies use records of students' actions in the IDE, consecutive code

snapshots, compilation events, and others, to gain deep insight into the

process of student programming. In this paper, we present a set of tools for

collecting and processing data of student activity during problem-solving. The

first tool is a plugin for IntelliJ-based IDEs (PyCharm, IntelliJ IDEA, CLion).

By capturing snapshots of code and IDE interaction data, it allows to analyze

the process of writing code in different languages -- Python, Java, Kotlin, and

C++. The second tool is designed for the post-processing of data collected by

the plugin and is capable of basic analysis and visualization. To validate and

showcase the toolkit, we present a dataset collected by our tools. It consists

of records of activity and IDE interaction events during solution of

programming tasks by 148 participants of different ages and levels of

programming experience. We propose several directions for further exploration

of the dataset.

29 Oct 2025

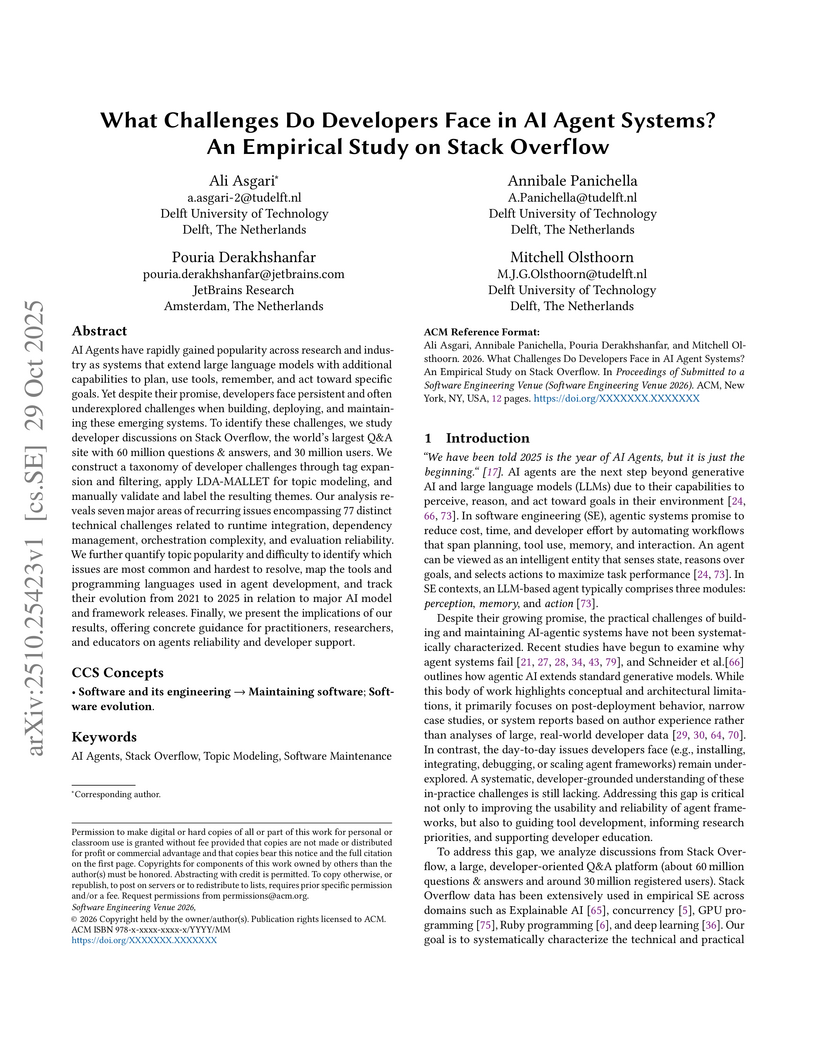

AI agents have rapidly gained popularity across research and industry as systems that extend large language models with additional capabilities to plan, use tools, remember, and act toward specific goals. Yet despite their promise, developers face persistent and often underexplored challenges when building, deploying, and maintaining these emerging systems. To identify these challenges, we study developer discussions on Stack Overflow, the world's largest developer-focused Q and A platform with about 60 million questions and answers and 30 million users. We construct a taxonomy of developer challenges through tag expansion and filtering, apply LDA-MALLET for topic modeling, and manually validate and label the resulting themes. Our analysis reveals seven major areas of recurring issues encompassing 77 distinct technical challenges related to runtime integration, dependency management, orchestration complexity, and evaluation reliability. We further quantify topic popularity and difficulty to identify which issues are most common and hardest to resolve, map the tools and programming languages used in agent development, and track their evolution from 2021 to 2025 in relation to major AI model and framework releases. Finally, we present the implications of our results, offering concrete guidance for practitioners, researchers, and educators on agent reliability and developer support.

Despite the increasing presence of AI assistants in Integrated Development Environments, it remains unclear what developers actually need from these tools and which features are likely to be implemented in practice. To investigate this gap, we conducted a two-phase study. First, we interviewed 35 professional developers from three user groups (Adopters, Churners, and Non-Users) to uncover unmet needs and expectations. Our analysis revealed five key areas: Technology Improvement, Interaction, and Alignment, as well as Simplifying Skill Building, and Programming Tasks. We then examined the feasibility of addressing selected needs through an internal prediction market involving 102 practitioners. The results demonstrate a strong alignment between the developers' needs and the practitioners' judgment for features focused on implementation and context awareness. However, features related to proactivity and maintenance remain both underestimated and technically unaddressed. Our findings reveal gaps in current AI support and provide practical directions for developing more effective and sustainable in-IDE AI systems.

Recent Multi-Agent Reinforcement Learning (MARL) literature has been largely focused on Centralized Training with Decentralized Execution (CTDE) paradigm. CTDE has been a dominant approach for both cooperative and mixed environments due to its capability to efficiently train decentralized policies. While in mixed environments full autonomy of the agents can be a desirable outcome, cooperative environments allow agents to share information to facilitate coordination. Approaches that leverage this technique are usually referred as communication methods, as full autonomy of agents is compromised for better performance. Although communication approaches have shown impressive results, they do not fully leverage this additional information during training phase. In this paper, we propose a new method called MAMBA which utilizes Model-Based Reinforcement Learning (MBRL) to further leverage centralized training in cooperative environments. We argue that communication between agents is enough to sustain a world model for each agent during execution phase while imaginary rollouts can be used for training, removing the necessity to interact with the environment. These properties yield sample efficient algorithm that can scale gracefully with the number of agents. We empirically confirm that MAMBA achieves good performance while reducing the number of interactions with the environment up to an orders of magnitude compared to Model-Free state-of-the-art approaches in challenging domains of SMAC and Flatland.

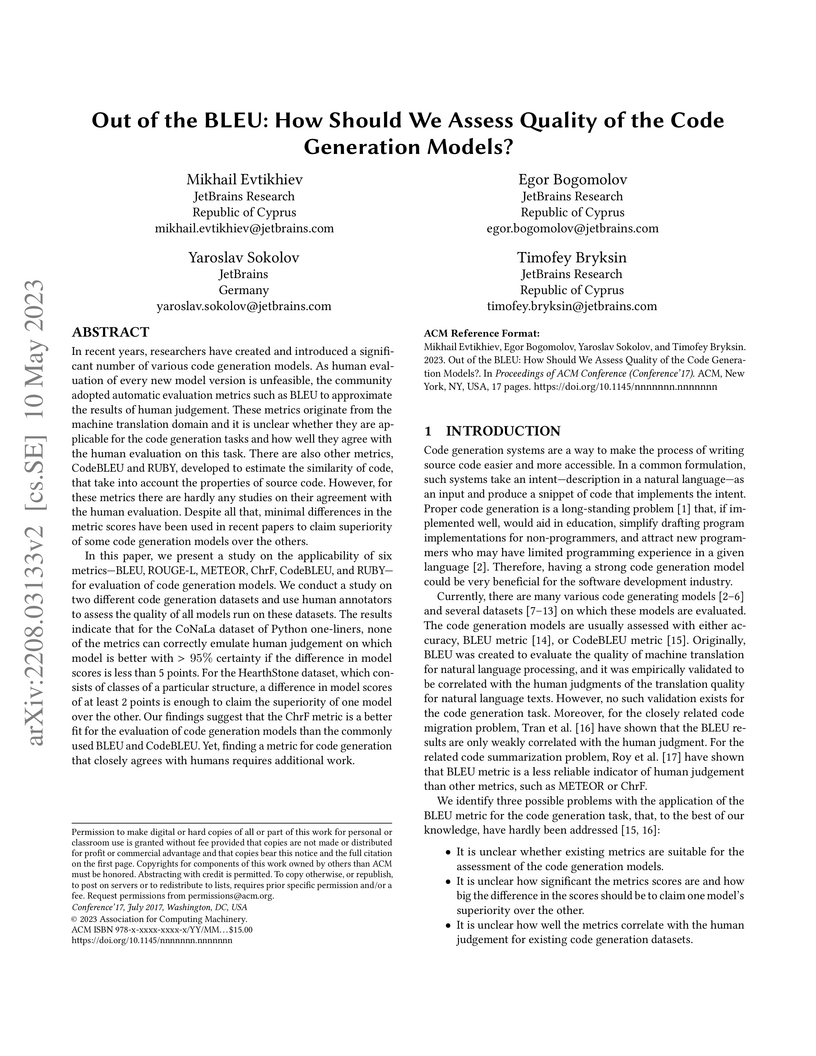

In recent years, researchers have created and introduced a significant number of various code generation models. As human evaluation of every new model version is unfeasible, the community adopted automatic evaluation metrics such as BLEU to approximate the results of human judgement. These metrics originate from the machine translation domain and it is unclear whether they are applicable for the code generation tasks and how well they agree with the human evaluation on this task. There are also other metrics, CodeBLEU and RUBY, developed to estimate the similarity of code, that take into account the properties of source code. However, for these metrics there are hardly any studies on their agreement with the human evaluation. Despite all that, minimal differences in the metric scores have been used in recent papers to claim superiority of some code generation models over the others.

In this paper, we present a study on the applicability of six metrics -- BLEU, ROUGE-L, METEOR, ChrF, CodeBLEU, and RUBY -- for evaluation of code generation models. We conduct a study on two different code generation datasets and use human annotators to assess the quality of all models run on these datasets. The results indicate that for the CoNaLa dataset of Python one-liners, none of the metrics can correctly emulate human judgement on which model is better with >95% certainty if the difference in model scores is less than 5 points. For the HearthStone dataset, which consists of classes of a particular structure, a difference in model scores of at least 2 points is enough to claim the superiority of one model over the other. Our findings suggest that the ChrF metric is a better fit for the evaluation of code generation models than the commonly used BLEU and CodeBLEU. Yet, finding a metric for code generation that closely agrees with humans requires additional work.

A large-scale survey of 481 professional programmers details the practical usage patterns, perceptions, and challenges of AI-based coding assistants across the full software development lifecycle. The study finds 84.2% adoption, highlights varying trust in AI-generated code quality (especially for security), and identifies specific development activities where AI is utilized or desired for delegation, alongside key barriers like inaccuracy and lack of context.

Students often struggle with solving programming problems when learning to code, especially when they have to do it online, with one of the most common disadvantages of working online being the lack of personalized help. This help can be provided as next-step hint generation, i.e., showing a student what specific small step they need to do next to get to the correct solution. There are many ways to generate such hints, with large language models (LLMs) being among the most actively studied right now.

While LLMs constitute a promising technology for providing personalized help, combining them with other techniques, such as static analysis, can significantly improve the output quality. In this work, we utilize this idea and propose a novel system to provide both textual and code hints for programming tasks. The pipeline of the proposed approach uses a chain-of-thought prompting technique and consists of three distinct steps: (1) generating subgoals - a list of actions to proceed with the task from the current student's solution, (2) generating the code to achieve the next subgoal, and (3) generating the text to describe this needed action. During the second step, we apply static analysis to the generated code to control its size and quality. The tool is implemented as a modification to the open-source JetBrains Academy plugin, supporting students in their in-IDE courses.

To evaluate our approach, we propose a list of criteria for all steps in our pipeline and conduct two rounds of expert validation. Finally, we evaluate the next-step hints in a classroom with 14 students from two universities. Our results show that both forms of the hints - textual and code - were helpful for the students, and the proposed system helped them to proceed with the coding tasks.

There are no more papers matching your filters at the moment.