Ask or search anything...

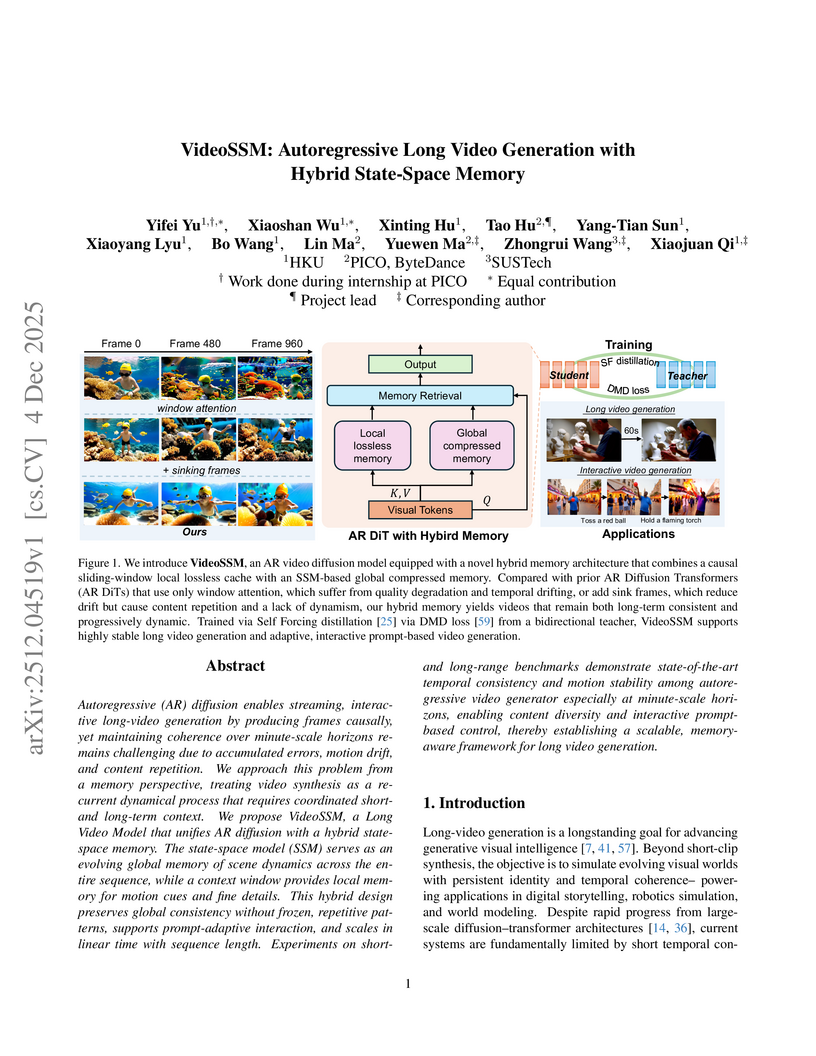

VideoSSM, developed by researchers at The University of Hong Kong and PICO, ByteDance, introduces a hybrid state-space memory architecture to enable autoregressive long video generation. The model maintains temporal consistency and dynamism over minute-scale durations, achieving superior quality and preventing motion drift or content repetition while operating with linear computational complexity.

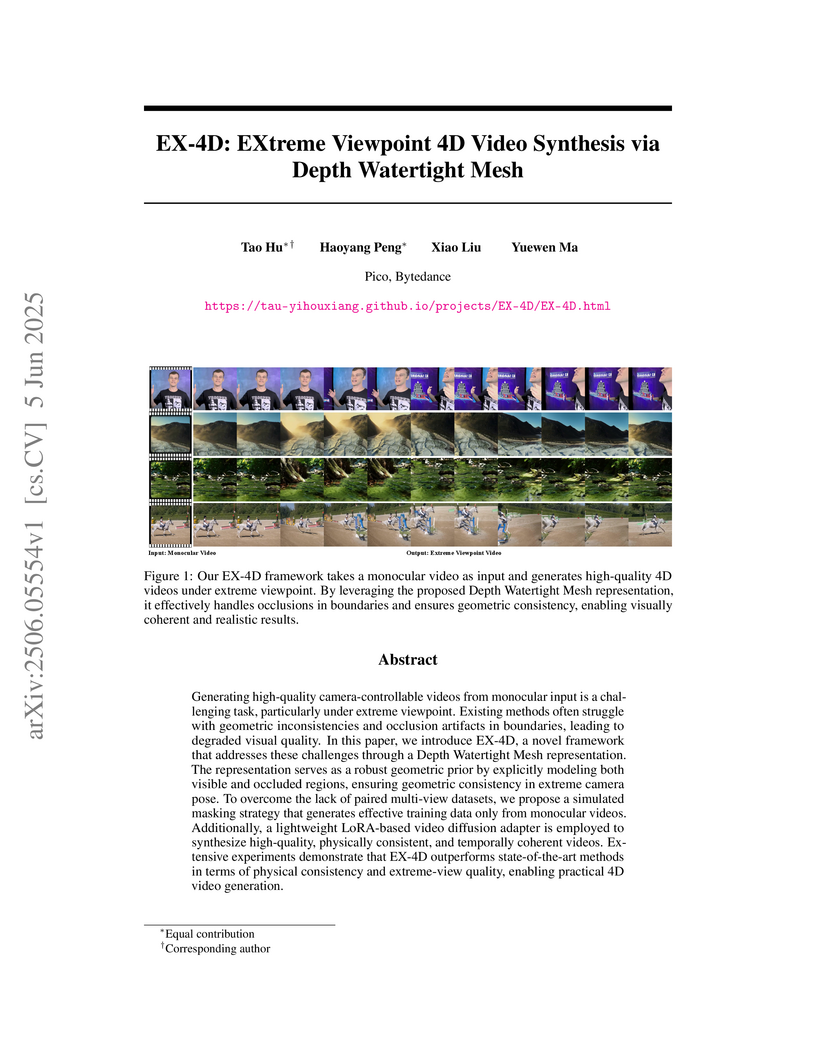

View blogEX-4D, developed by Pico, ByteDance, introduces a method for synthesizing high-quality, camera-controllable (4D) videos from monocular input, particularly excelling under extreme viewpoints. The framework leverages a novel Depth Watertight Mesh and a simulated masking strategy, consistently outperforming state-of-the-art baselines in FID and FVD metrics and achieving a 70.70% user preference for physical consistency.

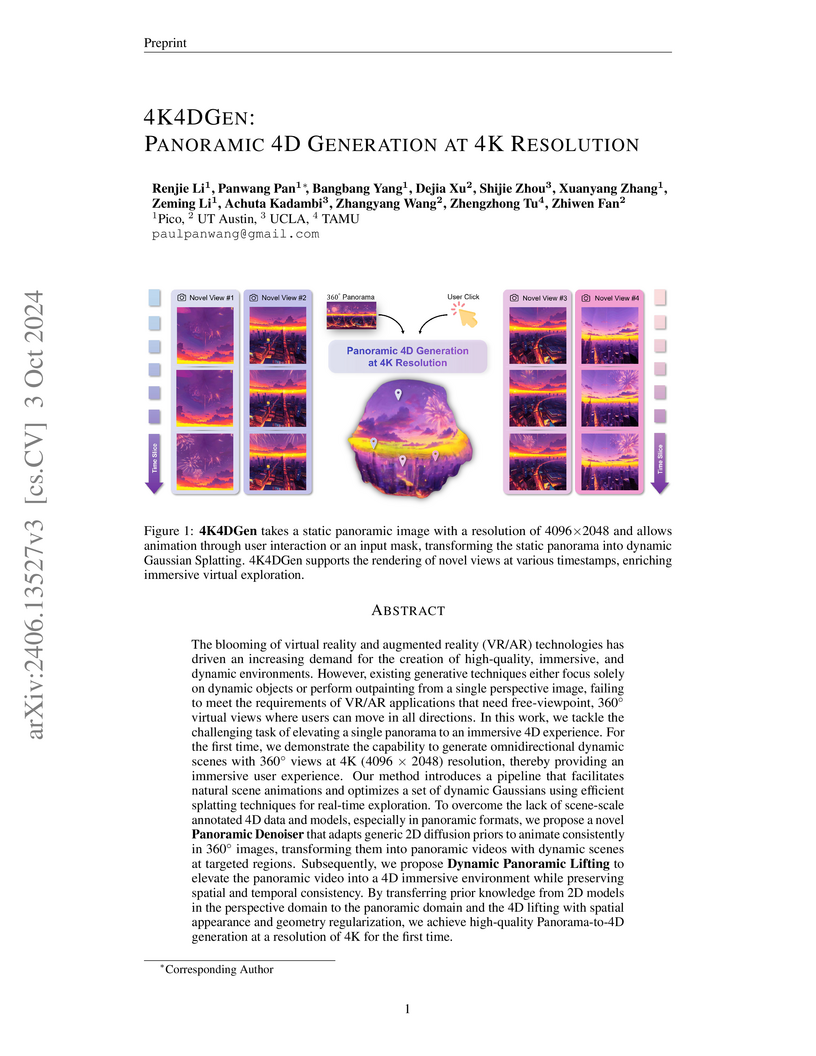

View blogA framework named 4K4DGen enables the creation of dynamic, immersive 4D panoramic scenes at 4K resolution (4096x2048) from a single static input image. It achieves superior visual and video quality compared to existing methods, with user studies showing 81% preference and quantitative metrics like FID at 16.59 versus a competitor's high 50s.

View blog ByteDance

ByteDance

Georgia Institute of Technology

Georgia Institute of Technology

UCLA

UCLA

ETH Zurich

ETH Zurich

Tsinghua University

Tsinghua University

Beihang University

Beihang University