Universidad de Leon

A systematic evaluation of embedding compression techniques for RAG systems demonstrates that combining PCA with float8 quantization achieves 8x storage reduction while maintaining retrieval performance, outperforming traditional int8 quantization approaches across multiple embedding models and dimensions.

19 Aug 2025
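The 8x figure in the summary above can be read as two compounding factors. Going from 32-bit to 8-bit floats gives 4x, so PCA would contribute the remaining 2x, for example by keeping half of the dimensions. This decomposition is an assumption: the summary states neither the input precision nor the retained dimensionality. It also does not say which float8 variant is used, so E4M3 (4 exponent bits, bias 7, 3 mantissa bits, max 448) is assumed here.

The sketch below covers only the storage arithmetic and float8 rounding. It leaves PCA itself out, and the function names are illustrative.

```python
import math


def storage_ratio(dim_in: int, bits_in: int, dim_out: int, bits_out: int) -> float:
    """Bytes-per-vector compression factor (ignores storing the PCA projection matrix)."""
    return (dim_in * bits_in) / (dim_out * bits_out)


def round_to_e4m3(x: float) -> float:
    """Round x to the nearest FP8 E4M3 value (assumed format: bias 7, 3 mantissa bits).

    Magnitudes at or beyond the largest finite E4M3 value (448) saturate to +/-448.
    """
    if x == 0.0 or math.isnan(x):
        return x
    sign = -1.0 if x < 0 else 1.0
    a = abs(x)
    if a >= 448.0:
        return sign * 448.0
    _, e = math.frexp(a)          # a = m * 2**e with 0.5 <= m < 1, so floor(log2 a) = e - 1
    exponent = max(e - 1, -6)     # below 2**-6 values are subnormal with fixed spacing 2**-9
    step = 2.0 ** (exponent - 3)  # 3 mantissa bits -> 8 representable values per power of two
    # a / step is exact (step is a power of two); round() ties to even, matching IEEE RNE
    return sign * round(a / step) * step


# Hypothetical example: 1024-dim float32 embeddings -> 512 PCA components stored as float8
print(storage_ratio(1024, 32, 512, 8))  # 8.0
print(round_to_e4m3(1.07))              # 1.125
```

Each stored component keeps only 3 mantissa bits. This is why a float8 format trades precision for range differently than int8, which needs a per-vector or per-dimension scale to cover the same range.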

Eulerian nonlinear uncertainty propagation methods often suffer from finite domain limitations and computational inefficiency. A recent approach in this class of algorithms, Grid-based Bayesian Estimation Exploiting Sparsity, addresses the first challenge by dynamically allocating a discretized grid in regions of phase space where probability is non-negligible. However, the design of the original algorithm causes the second challenge to persist in high-dimensional systems. This paper presents an architectural optimization of the algorithm for CPU implementation, followed by its adaptation to the CUDA framework for single-GPU execution. The algorithm is validated for accuracy and convergence, and its performance is evaluated on several different GPUs. Tests include propagating a three-dimensional probability distribution subject to the Lorenz '63 model and a six-dimensional probability distribution subject to the Lorenz '96 model. The results indicate that these improvements yield a speedup of over 1000 times compared with the original implementation.
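The abstract describes allocating grid cells only where probability is non-negligible. A minimal sketch of that sparse-grid idea stores the grid as a dictionary from integer cell indices to probability mass. It rests on several assumptions:

- a hypothetical pruning threshold,
- renormalization after pruning,
- growth by adding the face neighbors of occupied cells.

The names `prune` and `grow` are illustrative, not taken from the paper. The sketch also omits the advection step that actually moves probability under the Lorenz dynamics.

```python
# Hypothetical sparse grid: integer cell index (one int per dimension) -> probability mass.
Cell = tuple[int, ...]


def prune(grid: dict[Cell, float], threshold: float) -> dict[Cell, float]:
    """Drop cells whose mass is below `threshold`, then renormalize the rest to sum to 1."""
    kept = {cell: p for cell, p in grid.items() if p >= threshold}
    total = sum(kept.values())
    if total == 0.0:
        raise ValueError("threshold removed all probability mass")
    return {cell: p / total for cell, p in kept.items()}


def grow(grid: dict[Cell, float]) -> dict[Cell, float]:
    """Allocate every missing face neighbor of an occupied cell with zero mass."""
    out = dict(grid)
    for cell in grid:
        for axis in range(len(cell)):
            for step in (-1, 1):
                neighbor = cell[:axis] + (cell[axis] + step,) + cell[axis + 1:]
                out.setdefault(neighbor, 0.0)  # existing cells keep their mass
    return out


# Usage: a 3-D grid with one negligible far-away cell
grid = {(0, 0, 0): 0.7, (1, 0, 0): 0.3, (9, 9, 9): 1e-12}
sparse = prune(grid, threshold=1e-8)   # keeps the two significant cells
print(len(grow(sparse)))               # 12 cells: 2 occupied + 10 new neighbors
```

Storing only allocated cells keeps memory proportional to the number of non-negligible cells rather than to the full d-dimensional bounding box. This matters more as the dimension grows.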

Cybersecurity in robotics stands out as a key aspect within Regulation (EU) 2024/1689, also known as the Artificial Intelligence Act, which establishes specific guidelines for intelligent and automated systems. A fundamental distinction in this regulatory framework is the difference between robots with Artificial Intelligence (AI) and those that operate through automation systems without AI, since the former are subject to stricter security requirements due to their learning and autonomy capabilities. This work analyzes cybersecurity tools applicable to advanced robotic systems, with special emphasis on the protection of knowledge bases in cognitive architectures. Furthermore, a list of basic tools is proposed to guarantee the security, integrity, and resilience of these systems, and a practical case is presented, focused on the analysis of robot knowledge management, where ten evaluation criteria are defined to ensure compliance with the regulation and reduce risks in human-robot interaction (HRI) environments.

Facial age estimation has achieved considerable success under controlled conditions. However, in unconstrained real-world scenarios, often referred to as 'in the wild', age estimation remains challenging, especially when faces are partially occluded. To address this limitation, we propose a new approach integrating generative adversarial networks (GANs) and transformer architectures to enable robust age estimation from occluded faces. We employ an SN-Patch GAN to effectively remove occlusions, while an Attentive Residual Convolution Module (ARCM), paired with a Swin Transformer, enhances feature representation. Additionally, we introduce a Multi-Task Age Head (MTAH) that combines regression and distribution learning, further improving age estimation under occlusion. Experimental results on the FG-NET, UTKFace, and MORPH datasets demonstrate that our proposed approach surpasses existing state-of-the-art techniques for occluded facial age estimation, achieving an MAE of 3.00, 4.54, and 2.53 years, respectively.
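The abstract does not specify how the Multi-Task Age Head combines regression and distribution learning. One common pattern is sketched below as an assumption, not as the authors' MTAH:

- The network outputs one logit per age bin.
- A distribution term compares the softmax against a Gaussian "soft label" centered on the true age, using KL divergence.
- A regression term penalizes the absolute error of the expected age.

The bandwidth `sigma` and weight `lam` are hypothetical, as are all function names. MAE, the metric reported above, is included for completeness.

```python
import math


def gaussian_label_distribution(age: float, ages: list[int], sigma: float = 2.0) -> list[float]:
    """Soft label: a discretized Gaussian over the age bins, centered on the true age."""
    weights = [math.exp(-((a - age) ** 2) / (2.0 * sigma**2)) for a in ages]
    total = sum(weights)
    return [w / total for w in weights]


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def multi_task_age_loss(logits, true_age, ages, sigma=2.0, lam=1.0):
    """Return (loss, predicted_age), loss = KL(soft label || prediction) + lam * |E[age] - true_age|."""
    pred = softmax(logits)
    target = gaussian_label_distribution(true_age, ages, sigma)
    # distribution-learning term; clamp p to avoid log of zero
    kl = sum(q * math.log(q / max(p, 1e-12)) for q, p in zip(target, pred) if q > 0.0)
    # regression term on the expected age under the predicted distribution
    predicted_age = sum(a * p for a, p in zip(ages, pred))
    return kl + lam * abs(predicted_age - true_age), predicted_age


def mean_absolute_error(predicted: list[float], actual: list[float]) -> float:
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


# Usage: 101 age bins (0..100) and uninformative (all-zero) logits
ages = list(range(101))
loss, pred_age = multi_task_age_loss([0.0] * len(ages), true_age=30, ages=ages)
print(round(pred_age, 6))  # 50.0: a uniform prediction has expected age 50
```

The distribution term encourages nearby ages to share probability, while the regression term directly targets the quantity MAE measures.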

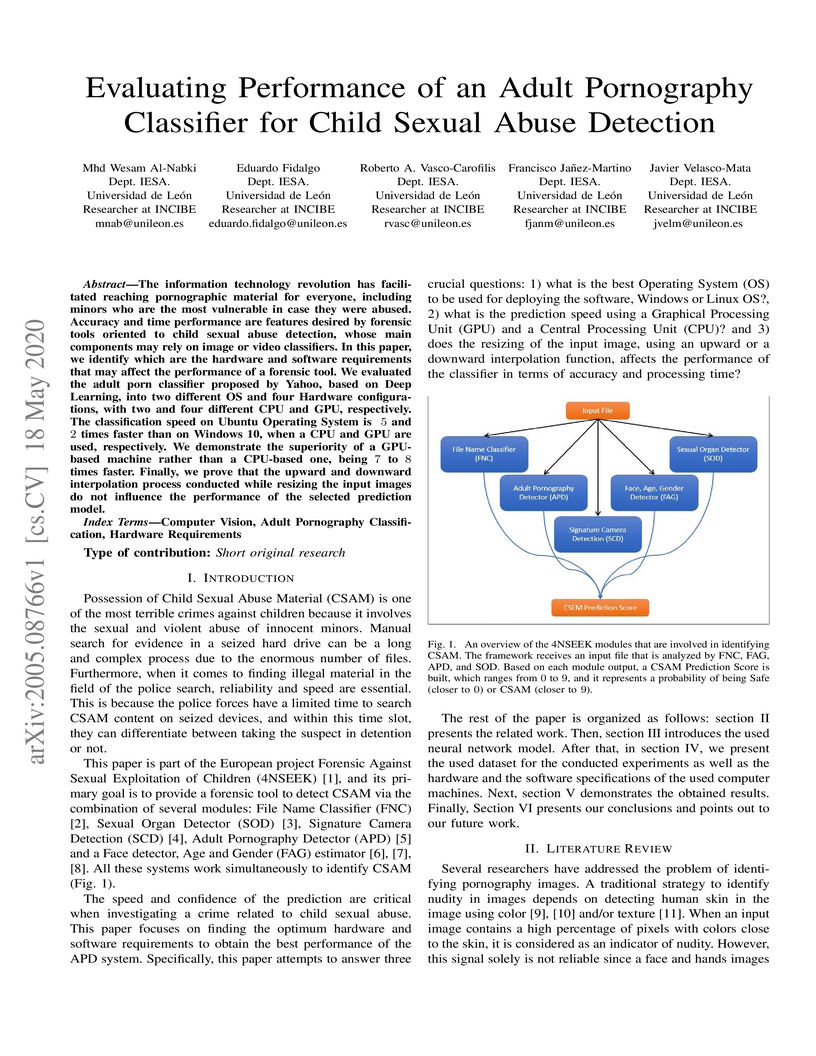

The information technology revolution has made pornographic material accessible to everyone, including minors, who are the most vulnerable to abuse. Accuracy and speed are desirable features of forensic tools aimed at detecting child sexual abuse, whose main components may rely on image or video classifiers. In this paper, we identify the hardware and software requirements that may affect the performance of such a forensic tool. We evaluated the deep-learning-based adult pornography classifier proposed by Yahoo on two operating systems and four hardware configurations, covering two different CPUs and four different GPUs. Classification on Ubuntu is 5 times faster than on Windows 10 when a CPU is used, and 2 times faster when a GPU is used. A GPU-based machine is 7 to 8 times faster than a CPU-based one. Finally, we show that the upward and downward interpolation applied when resizing the input images does not affect the performance of the selected prediction model.
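Speed comparisons such as "5 times faster on Ubuntu" or "7 to 8 times faster on a GPU" are ratios of measured throughput. The sketch below shows one way to measure throughput and compute such a ratio. It is not the authors' harness, and its assumptions are:

- `classify` is a hypothetical stand-in for the classifier's per-image inference call.
- A single warm-up call absorbs one-off setup costs.
- The median over repeated runs is a reasonable summary.

```python
import statistics
import time
from typing import Callable, Sequence


def images_per_second(classify: Callable[[object], object], images: Sequence[object], repeats: int = 5) -> float:
    """Median throughput (images/s) of `classify` over `images` across several timed runs."""
    if not images:
        raise ValueError("need at least one image")
    classify(images[0])  # warm-up: first call often pays one-off costs (model load, GPU init)
    rates = []
    for _ in range(repeats):
        start = time.perf_counter()  # monotonic, high-resolution clock
        for image in images:
            classify(image)
        rates.append(len(images) / (time.perf_counter() - start))
    return statistics.median(rates)


def speedup(rate_a: float, rate_b: float) -> float:
    """How many times faster configuration A is than configuration B."""
    return rate_a / rate_b


# Usage with a dummy workload standing in for real inference
def dummy_classify(image: object) -> int:
    return sum(range(2000))


rate = images_per_second(dummy_classify, list(range(50)))
print(speedup(800.0, 100.0))  # 8.0
```

Running the same harness unchanged on each OS and hardware configuration keeps the ratios comparable. The median limits the influence of occasional slow runs.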
