deep-reinforcement-learning

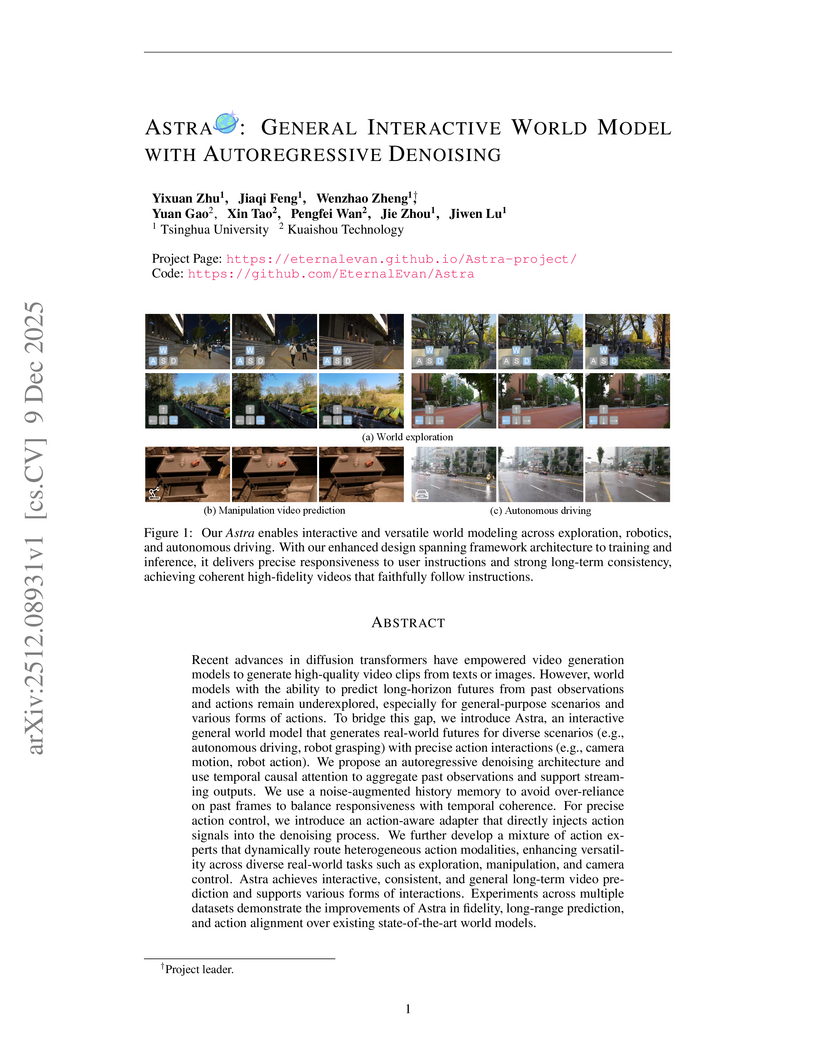

Astra, a collaborative effort from Tsinghua University and Kuaishou Technology, introduces an interactive general world model using an autoregressive denoising framework to generate real-world futures with precise action interactions. The model achieves superior performance in instruction following and visual fidelity across diverse simulation scenarios while efficiently extending a pre-trained video diffusion backbone.

The Astribot Team developed Lumo-1, a Vision-Language-Action (VLA) model that explicitly integrates structured reasoning with physical actions to achieve purposeful robotic control on their Astribot S1 bimanual mobile manipulator. This system exhibits superior generalization to novel objects and instructions, improves reasoning-action consistency through reinforcement learning, and outperforms state-of-the-art baselines in complex, long-horizon, and dexterous tasks.

TreeGRPO introduces a reinforcement learning framework that reinterprets diffusion model denoising as a sparse search tree, enabling both sample efficiency and precise credit assignment for post-training. This method achieves 2.4 times faster training convergence and enhances alignment quality with human preferences compared to prior approaches.

Researchers from Columbia University and NYU introduced Online World Modeling (OWM) and Adversarial World Modeling (AWM) to mitigate the train-test gap in world models for gradient-based planning (GBP). These methods enabled GBP to achieve performance comparable to or better than search-based planning algorithms like CEM, while simultaneously reducing computation time by an order of magnitude across various robotic tasks.
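As a rough illustration of what gradient-based planning means here, the sketch below optimizes an action sequence by descending the gradient of a planning cost through a dynamics model. It is not the OWM or AWM method: the "world model" is a hand-written toy (`s_{t+1} = s_t + a_t`), the gradient is derived analytically rather than by automatic differentiation, and every name (`rollout`, `plan_cost`, `gradient_plan`, `lam`) is hypothetical.

```python
def rollout(s0, actions):
    """Toy stand-in for a learned world model: s_{t+1} = s_t + a_t."""
    s = s0
    for a in actions:
        s = s + a
    return s


def plan_cost(s0, actions, goal, lam=0.01):
    """Squared distance to the goal plus a small action penalty."""
    final = rollout(s0, actions)
    return (final - goal) ** 2 + lam * sum(a * a for a in actions)


def gradient_plan(s0, goal, horizon=5, steps=200, lr=0.05, lam=0.01):
    """Optimize the action sequence by plain gradient descent on plan_cost."""
    actions = [0.0] * horizon
    for _ in range(steps):
        err = rollout(s0, actions) - goal
        # For this toy model, d(cost)/d(a_t) = 2 * err + 2 * lam * a_t.
        grads = [2.0 * err + 2.0 * lam * a for a in actions]
        actions = [a - lr * g for a, g in zip(actions, grads)]
    return actions


if __name__ == "__main__":
    plan = gradient_plan(s0=0.0, goal=1.0)
    print(plan, rollout(0.0, plan))
```

A sampling-based planner such as CEM would instead draw many candidate action sequences and keep the best ones. The gradient-based variant needs only one rollout per step, but it relies on the model's gradients being trustworthy, which is the gap the summary above says OWM and AWM target.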

Researchers at Southern Methodist University systematically compared various memory encoding and injection methods for transformer-based world models, finding that State-Space Models (SSMs) combined with attention-based injection offer a scalable approach for enhancing long-term recall. This hybrid strategy significantly improved consistency over extended imagination horizons compared to a vanilla Vision Transformer, effectively mitigating perceptual drift.

This document introduces foundational concepts and algorithms in Deep Reinforcement Learning (DRL) and Deep Imitation Learning (DIL) for embodied agents, providing a self-contained pedagogical resource from ISCTE – University Institute of Lisbon. It offers clear, in-depth explanations of core methods, including necessary mathematical prerequisites, to build a strong understanding for learners.

The Khalasi framework implements an end-to-end reinforcement learning pipeline, enabling autonomous surface vehicles (ASVs) to navigate energy-efficiently in complex, vortical flow fields using only local sensor data. This approach achieves a 43.37% energy saving over baselines and demonstrates robust generalization to unseen synthetic and real-world ocean currents.

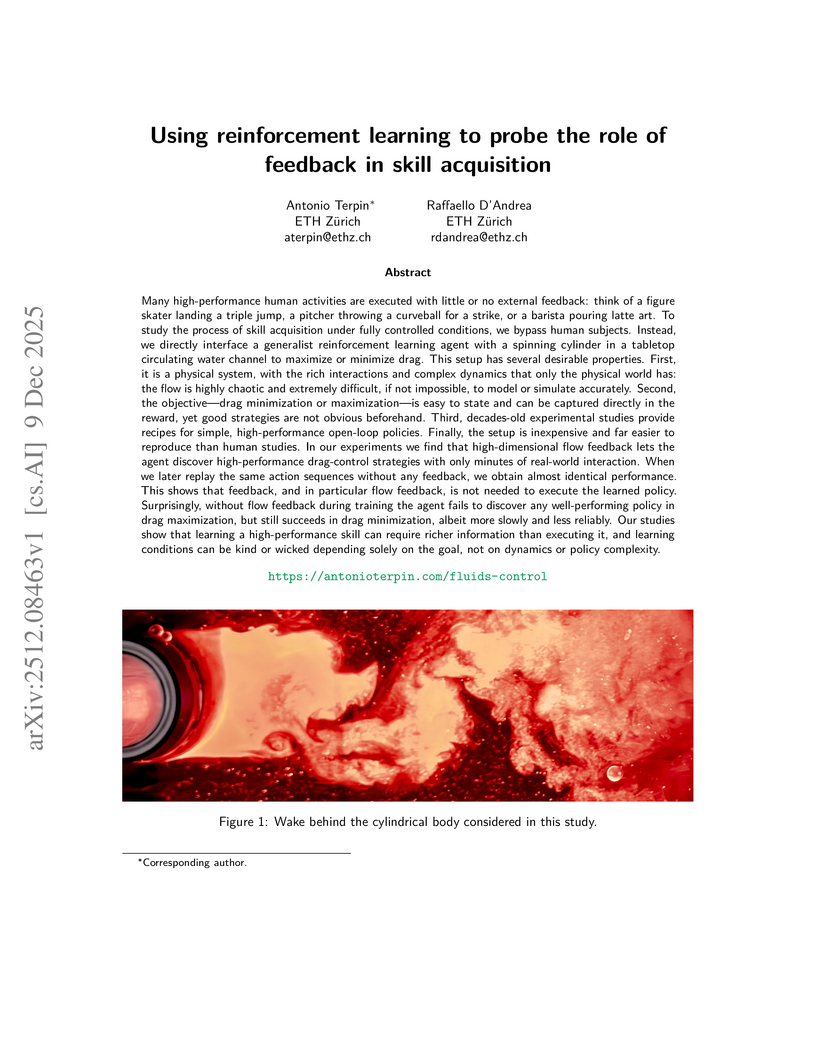

Researchers at ETH Zürich used a reinforcement learning agent to investigate how feedback influences skill acquisition in a complex physical fluid system. Their work demonstrated that learning high-performance skills, particularly those involving non-minimum phase dynamics, can require substantially richer sensory information during training than is necessary for their execution.

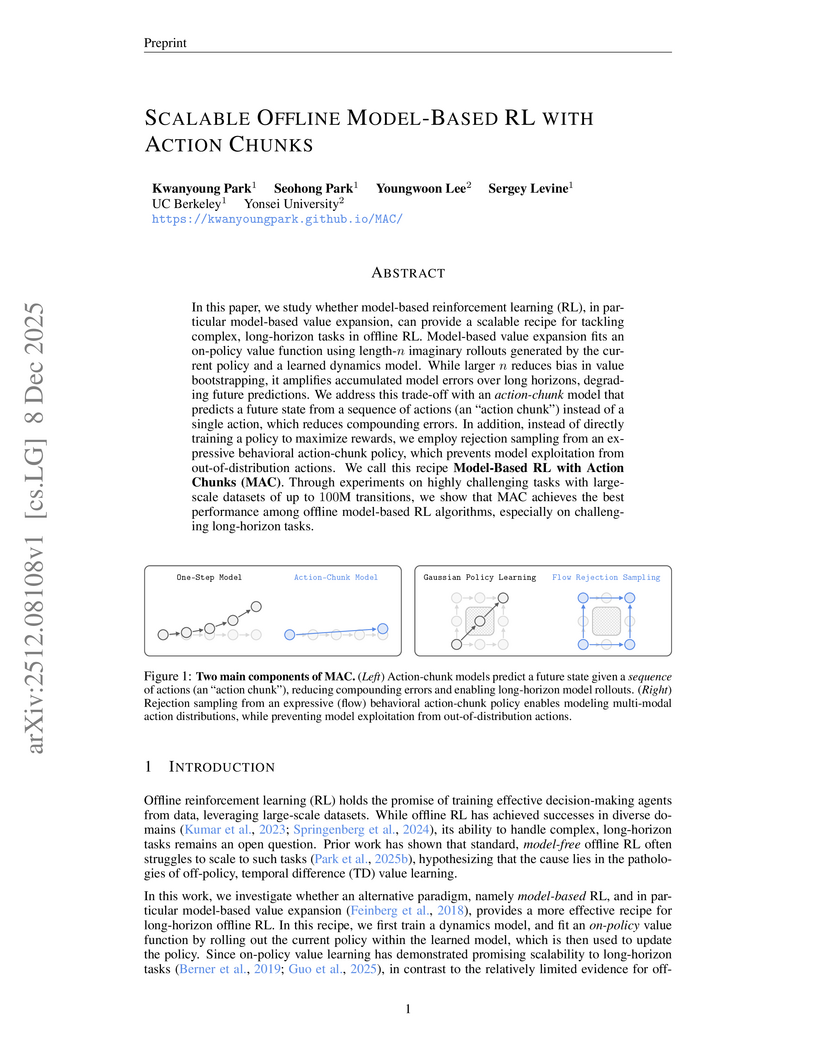

MAC introduces a model-based offline reinforcement learning approach that uses action chunks and flow-based generative policies to tackle complex, long-horizon tasks. It achieves state-of-the-art performance on challenging manipulation benchmarks, outperforming prior model-based methods and many model-free algorithms.

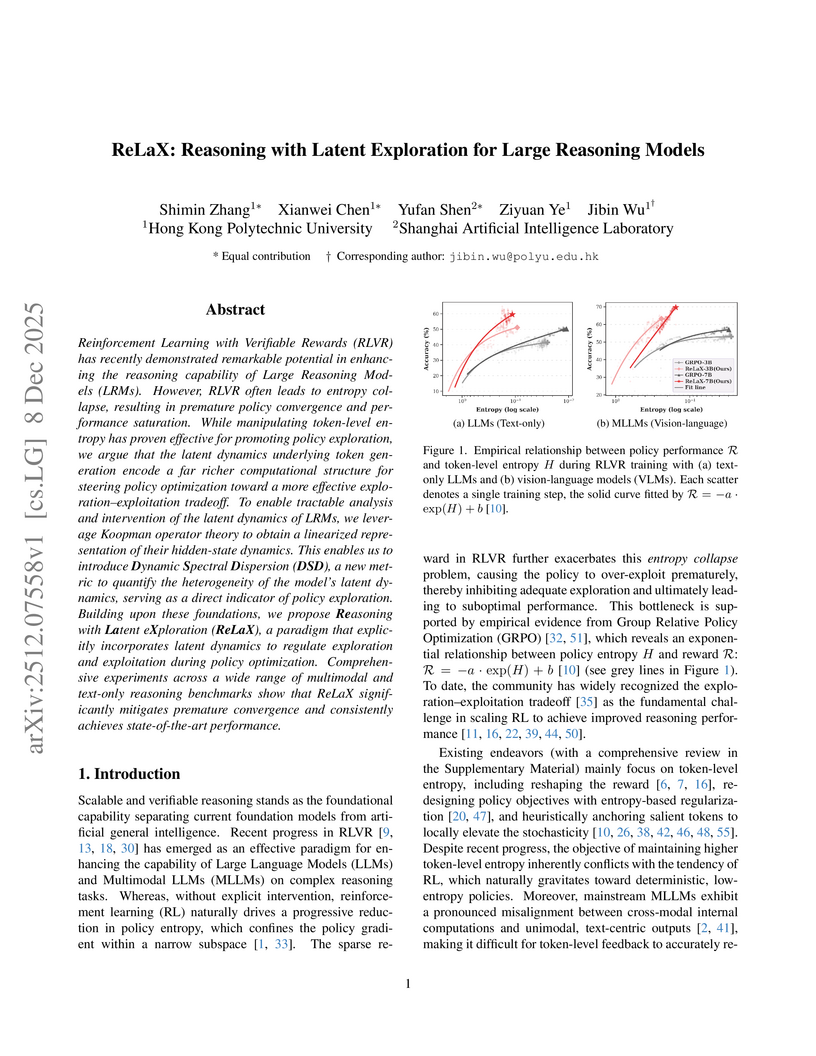

The ReLaX framework improves reasoning capabilities in Large Reasoning Models by addressing premature policy convergence through latent exploration, employing Koopman operator theory and Dynamic Spectral Dispersion. This approach enables sustained performance gains, achieving state-of-the-art results in both multimodal and mathematical reasoning benchmarks.

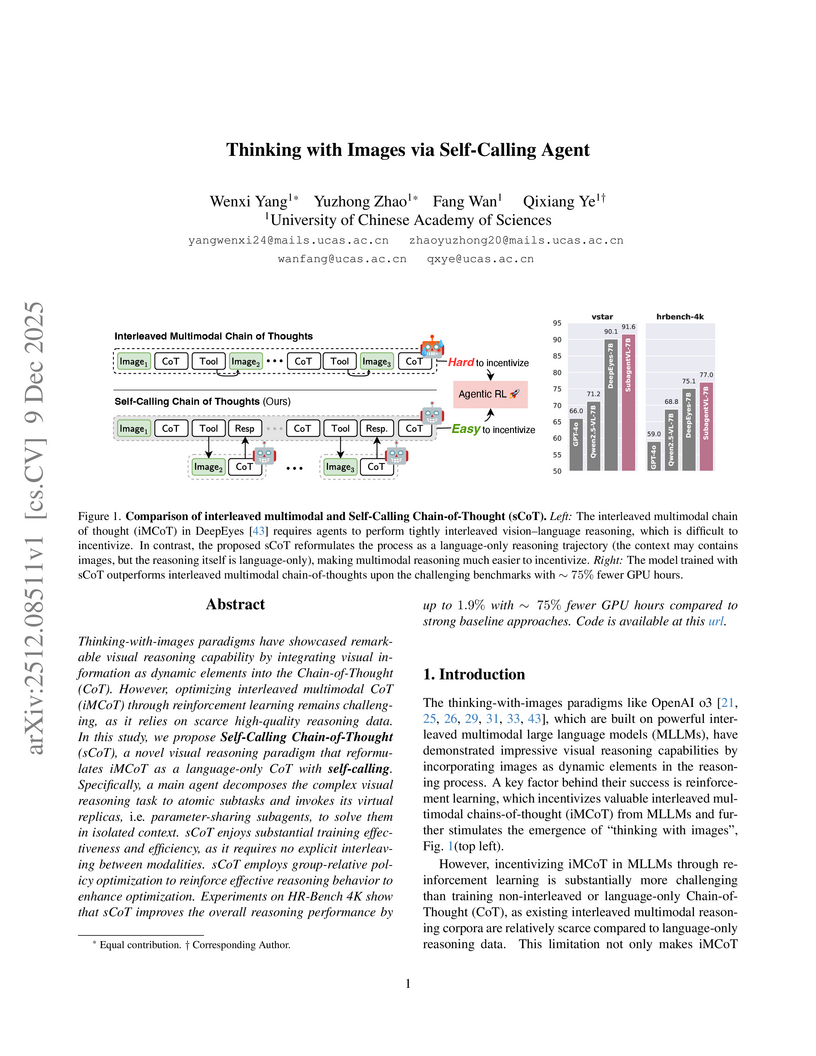

Thinking-with-images paradigms have showcased remarkable visual reasoning capability by integrating visual information as dynamic elements into the Chain-of-Thought (CoT). However, optimizing interleaved multimodal CoT (iMCoT) through reinforcement learning remains challenging, as it relies on scarce high-quality reasoning data. In this study, we propose Self-Calling Chain-of-Thought (sCoT), a novel visual reasoning paradigm that reformulates iMCoT as a language-only CoT with self-calling. Specifically, a main agent decomposes the complex visual reasoning task into atomic subtasks and invokes its virtual replicas, i.e., parameter-sharing subagents, to solve them in isolated contexts. sCoT enjoys substantial training effectiveness and efficiency, as it requires no explicit interleaving between modalities. sCoT employs group-relative policy optimization to reinforce effective reasoning behavior. Experiments on HR-Bench 4K show that sCoT improves the overall reasoning performance by up to 1.9% with ∼75% fewer GPU hours compared to strong baseline approaches. Code is available at this https URL.
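The group-relative part of group-relative policy optimization can be sketched in a few lines: several responses are sampled for the same prompt, and each response's reward is normalized against the group's mean and standard deviation to form its advantage. The snippet below is a minimal illustration of that normalization only, not sCoT's training code. The function name, the `eps` stabilizer, and the use of the population standard deviation are assumptions.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against its own sampling group.

    rewards: scalar rewards for responses sampled from the same prompt.
    eps: small constant (an assumption) to avoid division by zero when
         every response in the group receives the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


if __name__ == "__main__":
    # Four sampled responses, two judged correct (reward 1.0).
    print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

Because the baseline comes from the group itself, no separate value network is required. A group whose responses all receive the same reward yields zero advantages and therefore contributes no gradient.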

MMDUET2, a Video MLLM developed by Peking University and Meituan, improves proactive interaction by using multi-turn reinforcement learning and a text-to-text chat template to autonomously determine when and what to respond during streaming video. The model achieves state-of-the-art performance on proactive interaction benchmarks, with a PAUC of 53.3 on the WEB dataset and significantly reduced reply duplication, while maintaining general video understanding capabilities.

Since the 1990s, considerable empirical work has been carried out to train statistical models, such as neural networks (NNs), as learned heuristics for combinatorial optimization (CO) problems. When successful, such an approach eliminates the need for experts to design heuristics per problem type. Due to their structure, many hard CO problems are amenable to treatment through reinforcement learning (RL). Indeed, we find a wealth of literature training NNs using value-based, policy gradient, or actor-critic approaches, with promising results, both in terms of empirical optimality gaps and inference runtimes. Nevertheless, there has been a paucity of theoretical work undergirding the use of RL for CO problems. To this end, we introduce a unified framework to model CO problems through Markov decision processes (MDPs) and solve them using RL techniques. We provide easy-to-test assumptions under which CO problems can be formulated as equivalent undiscounted MDPs that provide optimal solutions to the original CO problems. Moreover, we establish conditions under which value-based RL techniques converge to approximate solutions of the CO problem with a guarantee on the associated optimality gap. Our convergence analysis provides: (1) a sufficient rate of increase in batch size and projected gradient descent steps at each RL iteration; (2) the resulting optimality gap in terms of problem parameters and targeted RL accuracy; and (3) the importance of a choice of state-space embedding. Together, our analysis illuminates the success (and limitations) of the celebrated deep Q-learning algorithm in this problem context.
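To make the idea of casting a CO problem as an undiscounted MDP concrete, here is a small sketch using 0/1 knapsack. It is an illustrative formulation, not the paper's framework: the state is assumed to be (next item index, remaining capacity), the actions are take or skip, the reward is the item's value, and the action values are computed exactly by backward induction rather than learned with deep Q-learning. All names are hypothetical.

```python
from functools import lru_cache


def solve_knapsack_mdp(values, weights, capacity):
    """Solve 0/1 knapsack as an episodic, undiscounted MDP."""
    n = len(values)

    @lru_cache(maxsize=None)
    def q(i, cap, take):
        """Undiscounted action value of taking or skipping item i."""
        if take:
            if weights[i] > cap:
                return float("-inf")  # infeasible action
            return values[i] + v(i + 1, cap - weights[i])
        return v(i + 1, cap)

    @lru_cache(maxsize=None)
    def v(i, cap):
        """Optimal state value; the episode ends once all items are seen."""
        if i == n:
            return 0.0
        return max(q(i, cap, True), q(i, cap, False))

    # Roll out the greedy policy with respect to the exact action values.
    chosen, cap = [], capacity
    for i in range(n):
        if q(i, cap, True) >= q(i, cap, False):
            chosen.append(i)
            cap -= weights[i]
    return v(0, capacity), chosen


if __name__ == "__main__":
    print(solve_knapsack_mdp([60, 100, 120], [10, 20, 30], 50))
```

With exact action values, the greedy policy recovers an optimal solution of the original problem. When a neural network only approximates those values, the resulting solution can be suboptimal, and bounding that gap is what the abstract's convergence analysis addresses.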

Role-playing agents (RPAs) must simultaneously master many conflicting skills -- following multi-turn instructions, exhibiting domain knowledge, and adopting a consistent linguistic style. Existing work either relies on supervised fine-tuning (SFT) that over-fits surface cues and yields low diversity, or applies reinforcement learning (RL) that fails to learn multiple dimensions for comprehensive RPA optimization. We present MOA (Multi-Objective Alignment), a reinforcement-learning framework that enables multi-dimensional, fine-grained rubric optimization for general RPAs. MOA introduces a novel multi-objective optimization strategy that trains simultaneously on multiple fine-grained rubrics to boost optimization performance. In addition, to address the issues of model output diversity and quality, we employ thought-augmented rollout with off-policy guidance. Extensive experiments on challenging benchmarks such as PersonaGym and RoleMRC show that MOA enables an 8B model to match or even outperform strong baselines such as GPT-4o and Claude across numerous dimensions. This demonstrates the great potential of MOA in building RPAs that can simultaneously meet the demands of role knowledge, persona style, diverse scenarios, and complex multi-turn conversations.

Researchers from UC Berkeley and ByteDance Seed developed Natural Language Actor-Critic (NLAC), an off-policy reinforcement learning algorithm that trains LLM agents using a generative natural language critic to provide rich, explanatory feedback. NLAC demonstrated superior performance and enhanced sample efficiency on multi-turn dialogue and tool-use tasks compared to existing RL methods and strong prompting baselines.

We present Masked Generative Policy (MGP), a novel framework for visuomotor imitation learning. We represent actions as discrete tokens, and train a conditional masked transformer that generates tokens in parallel and then rapidly refines only low-confidence tokens. We further propose two new sampling paradigms: MGP-Short, which performs parallel masked generation with score-based refinement for Markovian tasks, and MGP-Long, which predicts full trajectories in a single pass and dynamically refines low-confidence action tokens based on new observations. With globally coherent prediction and robust adaptive execution capabilities, MGP-Long enables reliable control on complex and non-Markovian tasks that prior methods struggle with. Extensive evaluations on 150 robotic manipulation tasks spanning the Meta-World and LIBERO benchmarks show that MGP achieves both rapid inference and superior success rates compared to state-of-the-art diffusion and autoregressive policies. Specifically, MGP increases the average success rate by 9% across 150 tasks while cutting per-sequence inference time by up to 35x. It further improves the average success rate by 60% in dynamic and missing-observation environments, and solves two non-Markovian scenarios where other state-of-the-art methods fail.
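The "refine only low-confidence tokens" step can be pictured as a re-masking loop: positions whose confidence falls below a cutoff are masked and predicted again, while confident tokens are left alone. The sketch below illustrates that loop only and is not MGP itself. The fixed `threshold`, the `MASK` sentinel, the `predict_fn` interface, and the toy predictor are all assumptions made for the example.

```python
MASK = -1  # hypothetical sentinel for a masked action token


def refine_low_confidence(tokens, confidences, predict_fn,
                          threshold=0.5, max_rounds=3):
    """Re-mask and re-predict only the tokens below the threshold."""
    tokens, confidences = list(tokens), list(confidences)
    for _ in range(max_rounds):
        low = {i for i, c in enumerate(confidences) if c < threshold}
        if not low:
            break
        masked = [MASK if i in low else t for i, t in enumerate(tokens)]
        new_tokens, new_conf = predict_fn(masked)
        for i in low:
            tokens[i] = new_tokens[i]
            confidences[i] = new_conf[i]
    return tokens, confidences


def toy_predictor(masked_tokens):
    """Stand-in for a masked transformer: fills a masked slot with
    (previous token + 1) and reports a fixed confidence of 0.9."""
    out, conf = [], []
    for i, t in enumerate(masked_tokens):
        if t == MASK:
            out.append(out[i - 1] + 1 if i > 0 else 0)
            conf.append(0.9)
        else:
            out.append(t)
            conf.append(1.0)
    return out, conf


if __name__ == "__main__":
    print(refine_low_confidence([3, 9, 5], [0.9, 0.2, 0.8], toy_predictor))
```

In a real policy, the predictor would condition on the latest observation as well as the unmasked tokens, which is how new observations could change only the uncertain parts of a trajectory.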

MPDiffuser is a model-based diffusion framework that addresses dynamic infeasibility in offline decision-making by employing an alternating sampling scheme between a planner and a forward dynamics model. This approach generates dynamically feasible, task-aligned, and constraint-compliant trajectories, demonstrating improved performance across D4RL and DSRL benchmarks and successful real-world robot deployment.

PRISM-WM introduces a world model that leverages a Mixture-of-Experts architecture with latent orthogonalization to accurately model hybrid dynamics in robotic systems. This approach significantly extends reliable planning horizons and achieves superior performance in high-dimensional continuous control tasks.

Large Language Models and multi-agent systems have shown promise in decomposing complex tasks, yet they struggle with long-horizon reasoning tasks and escalating computational cost. This work introduces a hierarchical multi-agent architecture that distributes reasoning across a 64×64 grid of lightweight agents, supported by a selective oracle. A spatial curriculum progressively expands the operational region of the grid, ensuring that agents master easier central tasks before tackling harder peripheral ones. To improve reliability, the system integrates Negative Log-Likelihood (NLL) as a measure of confidence, allowing the curriculum to prioritize regions where agents are both accurate and well calibrated. A Thompson Sampling curriculum manager adaptively chooses training zones based on competence and NLL-driven reward signals. We evaluate the approach on a spatially grounded Tower of Hanoi benchmark, which mirrors the long-horizon structure of many robotic manipulation and planning tasks. Results demonstrate improved stability, reduced oracle usage, and stronger long-range reasoning from distributed agent cooperation.
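A Thompson Sampling curriculum manager of the kind described above can be sketched with one Beta posterior per training zone: sample a plausible success rate for each zone, train on the zone with the highest sample, then update that zone's posterior with the outcome. The sketch assumes a binary success signal as a stand-in for the paper's competence and NLL-driven rewards, and the class and zone names are hypothetical.

```python
import random


class ThompsonCurriculum:
    """Beta-Bernoulli Thompson Sampling over named training zones."""

    def __init__(self, zones, seed=None):
        self.rng = random.Random(seed)
        # Beta(1, 1) prior per zone, stored as [alpha, beta].
        self.posterior = {z: [1.0, 1.0] for z in zones}

    def choose_zone(self):
        """Sample a success rate per zone and pick the largest."""
        samples = {
            z: self.rng.betavariate(a, b)
            for z, (a, b) in self.posterior.items()
        }
        return max(samples, key=samples.get)

    def update(self, zone, success):
        """Record one binary outcome for the chosen zone."""
        if success:
            self.posterior[zone][0] += 1.0
        else:
            self.posterior[zone][1] += 1.0


if __name__ == "__main__":
    manager = ThompsonCurriculum(["center", "ring", "edge"], seed=0)
    zone = manager.choose_zone()
    manager.update(zone, success=True)
    print(zone, manager.posterior)
```

Sampling from the posterior, rather than always picking the zone with the best mean, keeps some exploration of zones with few observations. Note that this version favors zones where agents already succeed; a curriculum that targets a different notion of learning progress would need a different reward signal.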

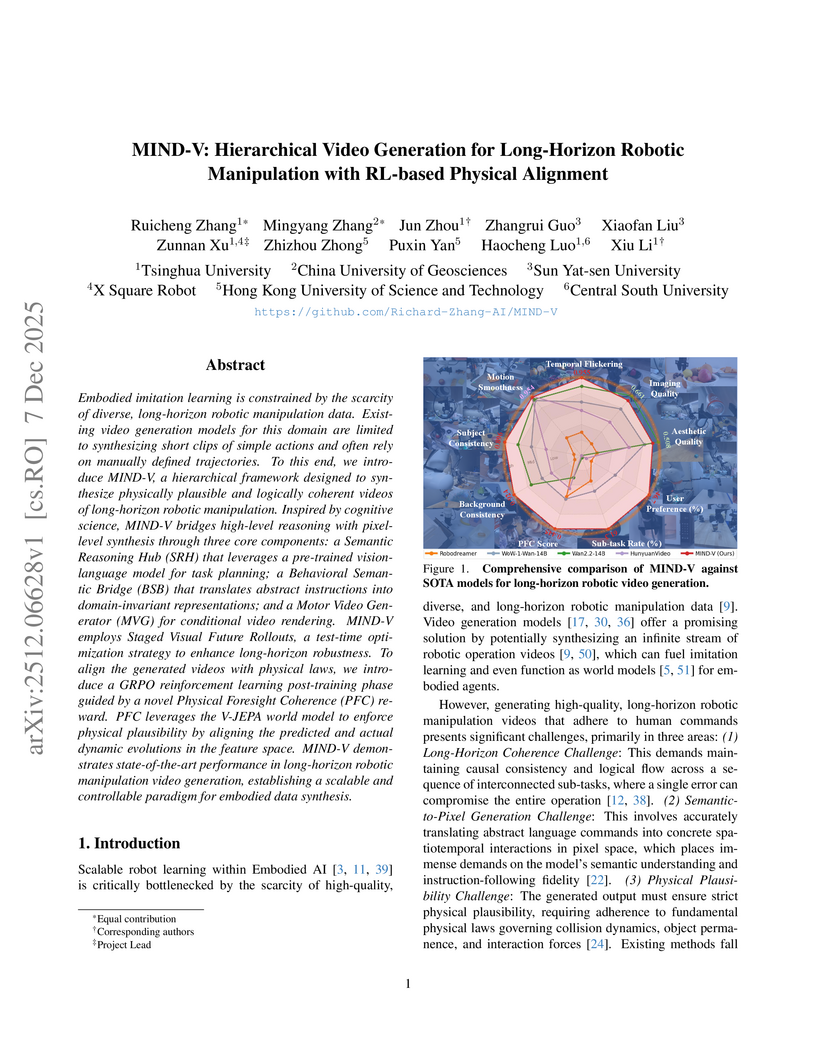

MIND-V introduces a hierarchical video generation framework for long-horizon robotic manipulation, autonomously synthesizing physically plausible and logically coherent operation videos. It employs a multi-stage architecture with reinforcement learning for physical alignment, providing a scalable method for generating robot training data.
