Ask or search anything...

FG-CLIP, developed by 360 AI Research, enhances fine-grained vision-language understanding by addressing limitations in token length and regional alignment of previous models like CLIP. It establishes new state-of-the-art performance on fine-grained benchmarks, achieving 48.4% on the "hard" subset of FG-OVD, and also improves open-vocabulary object detection and image-text retrieval.

View blogFG-CLIP 2 presents a bilingual vision-language model that achieves fine-grained alignment for both English and Chinese, setting new state-of-the-art results across 29 datasets and 8 tasks. The model, from 360 AI Research, notably outperforms larger previous multilingual models and introduces comprehensive Chinese fine-grained benchmarks.

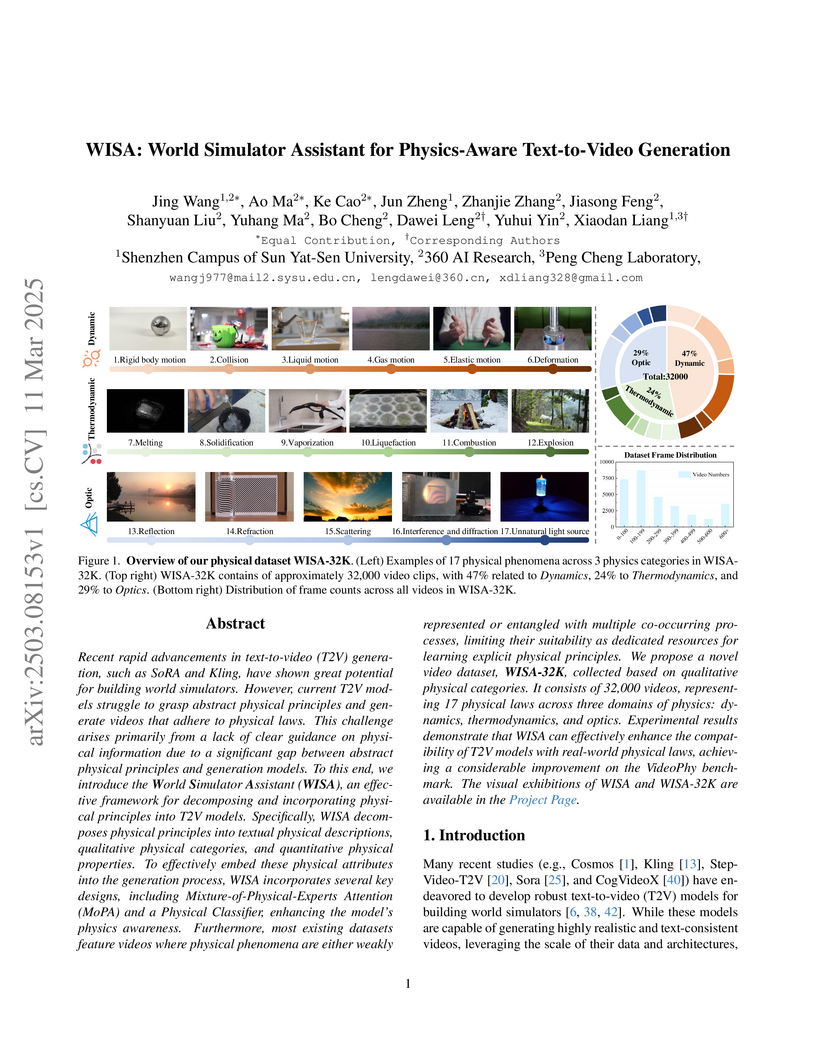

View blogThe WISA framework and WISA-32K dataset enhance text-to-video generation models by enabling them to inherently adhere to physical laws in generated content. This approach improves physical law consistency by 0.05 and semantic coherence by 0.07 on the VideoPhy benchmark, outperforming prior methods with significantly greater efficiency and minimal model overhead.

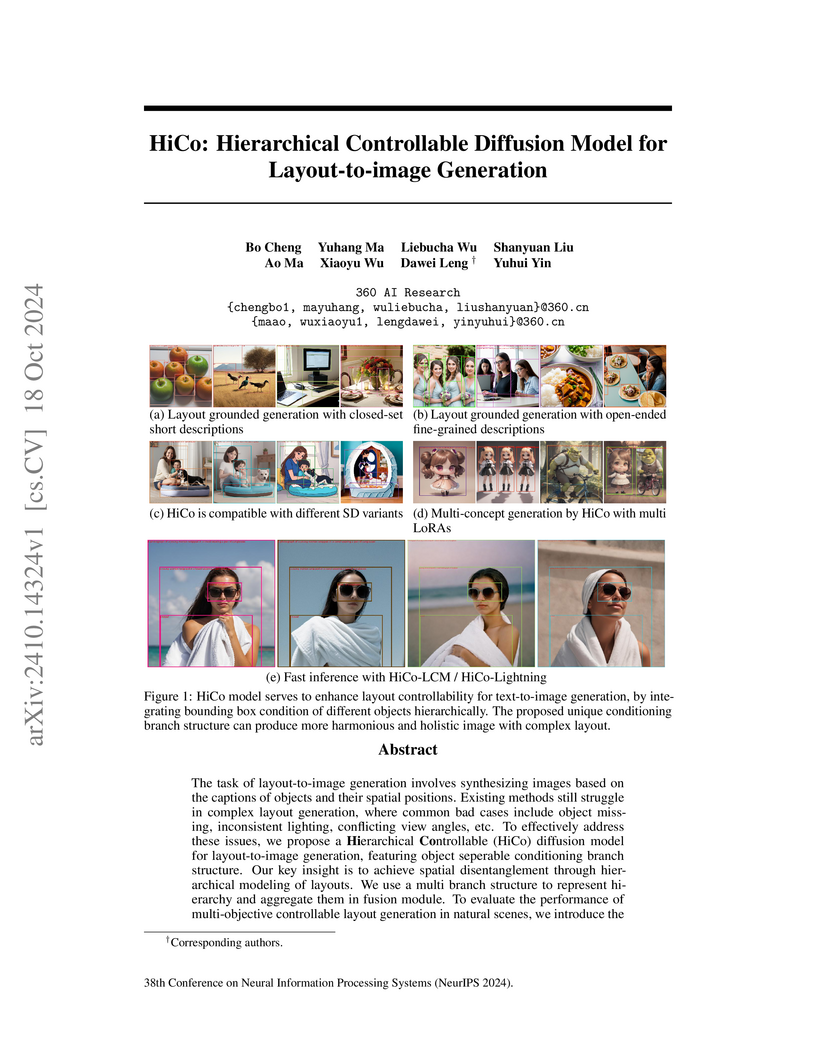

View blogResearchers from 360 AI Research developed HiCo, a hierarchical controllable diffusion model that generates high-quality images from complex spatial layouts by disentangling background and foreground object conditions. The model achieves state-of-the-art perceptual quality and precise spatial control, outperforming existing methods on both established datasets and a newly introduced HiCo-7K benchmark.

View blog

the University of Tokyo

the University of Tokyo HKUST

HKUST

Zhejiang University

Zhejiang University

Sun Yat-Sen University

Sun Yat-Sen University

Beihang University

Beihang University

Chinese Academy of Sciences

Chinese Academy of Sciences

Tsinghua University

Tsinghua University Communication University of China

Communication University of China