Centrale Marseille

26 Jul 2023

Deep operator networks (DeepONets) have demonstrated their capability of

approximating nonlinear operators for initial- and boundary-value problems. One

attractive feature of DeepONets is their versatility since they do not rely on

prior knowledge about the solution structure of a problem and can thus be

directly applied to a large class of problems. However, convergence in

identifying the parameters of the networks may sometimes be slow. In order to

improve on DeepONets for approximating the wave equation, we introduce the

Green operator networks (GreenONets), which use the representation of the exact

solution to the homogeneous wave equation in terms of the Green's function. The

performance of GreenONets and DeepONets is compared on a series of numerical

experiments for homogeneous and heterogeneous media in one and two dimensions.

03 Mar 2023

We define a metric on the space of finite positive measures that extends the 2-Wasserstein metric, i.e. its restriction to the set of probability measures is the 2-Wasserstein metric. We prove a dual and a dynamic formulation and extend the gradient flow machinery of the Wasserstein space. In addition, we relate the barycenter in this space to the barycenter in the Wasserstein space of the normalized measures.
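For orientation, the sketch below computes the classical 2-Wasserstein distance that the abstract's metric is said to reduce to on probability measures. It covers only the simplest case, two empirical measures on the real line with the same number of equally weighted points, and it is not the extended metric itself. The function name `wasserstein2_1d` is illustrative.

```python
import math

def wasserstein2_1d(xs, ys):
    """2-Wasserstein distance between two empirical probability measures on
    the real line, each putting mass 1/n on its n sample points."""
    if len(xs) != len(ys) or not xs:
        raise ValueError("need two non-empty samples of equal size")
    # In one dimension the optimal coupling pairs the sorted samples.
    pairs = zip(sorted(xs), sorted(ys))
    return math.sqrt(sum((x - y) ** 2 for x, y in pairs) / len(xs))

# A measure and its translate by 3 are at distance 3.
shifted = wasserstein2_1d([0.0, 1.0, 2.0], [3.0, 4.0, 5.0])
```

The sorted pairing is specific to one dimension; in higher dimensions the optimal coupling has to be found by solving a transport problem.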

Methods based on class activation maps (CAM) provide a simple mechanism to interpret predictions of convolutional neural networks by using linear combinations of feature maps as saliency maps. By contrast, masking-based methods optimize a saliency map directly in the image space or learn it by training another network on additional data.

In this work we introduce Opti-CAM, combining ideas from CAM-based and masking-based approaches. Our saliency map is a linear combination of feature maps, where weights are optimized per image such that the logit of the masked image for a given class is maximized. We also fix a fundamental flaw in two of the most common evaluation metrics of attribution methods. On several datasets, Opti-CAM largely outperforms other CAM-based approaches according to the most relevant classification metrics. We provide empirical evidence supporting that localization and classifier interpretability are not necessarily aligned.

04 Jun 2024

This is the third in a series of three papers in which we study a lattice gas subject to Kawasaki dynamics at inverse temperature $\beta>0$ in a large finite box $\Lambda_\beta \subset \mathbb{Z}^2$ whose volume depends on $\beta$. Each pair of neighbouring particles has a negative binding energy $-U<0$, while each particle has a positive activation energy $\Delta>0$. The initial configuration is drawn from the grand-canonical ensemble restricted to the set of configurations where all the droplets are subcritical. Our goal is to describe, in the metastable regime $\Delta \in (U,2U)$ and in the limit as $\beta\to\infty$, how and when the system nucleates, i.e., creates a critical droplet somewhere in $\Lambda_\beta$ that subsequently grows by absorbing particles from the surrounding gas.

In the first paper we showed that subcritical droplets behave as quasi-random walks. In the second paper we used the results in the first paper to analyse how subcritical droplets form and dissolve on multiple space-time scales when the volume is moderately large, namely, $|\Lambda_\beta| = \mathrm{e}^{\theta\beta}$ with $\Delta < \theta < 2\Delta-U$. In the present paper we consider the setting where the volume is very large, namely, $|\Lambda_\beta| = \mathrm{e}^{\Theta\beta}$ with $\Delta < \Theta < \Gamma-(2\Delta-U)$, where $\Gamma$ is the energy of the critical droplet in the local model with fixed volume, and use the results in the first two papers to identify the nucleation time and the tube of typical trajectories towards nucleation. We will see that in a very large volume critical droplets appear more or less independently in boxes of moderate volume, a phenomenon referred to as homogeneous nucleation. One of the key ingredients in the proof is an estimate showing that no information can travel between these boxes on relevant time scales.

Learning from an imbalanced distribution presents a major challenge in predictive modeling, as it generally leads to a reduction in the performance of standard algorithms. Various approaches exist to address this issue, but many of them concern classification problems, with a limited focus on regression. In this paper, we introduce a novel method aimed at enhancing learning on tabular data in the Imbalanced Regression (IR) framework, which remains a significant problem. We propose to use variational autoencoders (VAE) which are known as a powerful tool for synthetic data generation, offering an interesting approach to modeling and capturing latent representations of complex distributions. However, VAEs can be inefficient when dealing with IR. Therefore, we develop a novel approach for generating data, combining VAE with a smoothed bootstrap, specifically designed to address the challenges of IR. We numerically investigate the scope of this method by comparing it against its competitors on simulations and datasets known for IR.
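As a point of reference, here is a minimal sketch of a plain smoothed bootstrap on tabular rows: resample a row with replacement, then perturb it with kernel noise. The abstract does not say how the bootstrap is coupled with the VAE, so that part is not shown. The function name `smoothed_bootstrap`, the isotropic Gaussian kernel and the single `bandwidth` parameter are all assumptions made for illustration.

```python
import random

def smoothed_bootstrap(rows, n_new, bandwidth, seed=None):
    """Draw n_new synthetic rows: resample a row with replacement, then add
    independent Gaussian noise of standard deviation `bandwidth` to each
    feature (assumed kernel; other kernels are possible)."""
    if not rows:
        raise ValueError("rows must be non-empty")
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(rows)  # plain bootstrap step
        # Smoothing step: jitter every feature of the resampled row.
        synthetic.append([value + rng.gauss(0.0, bandwidth) for value in base])
    return synthetic

# Toy data: two common rows and one rare row.
rows = [[0.1, 1.0], [0.2, 1.1], [5.0, 9.0]]
new_rows = smoothed_bootstrap(rows, n_new=4, bandwidth=0.05, seed=0)
```

With `bandwidth=0.0` the procedure reduces to the ordinary bootstrap. In an imbalanced setting one would typically resample rare rows with higher probability, which this sketch does not attempt.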

We present 4D topological textures in (quasi)monochromatic nonparaxial

optical lattices that contain all possible polarization ellipses with every

combination of ellipticity and orientation in 3D space. These fields span the

nonparaxial polarization space (a complex projective plane) and a 4-sphere

within specific spatiotemporal regions, forming 4D skyrmionic structures.

Constructed from five plane waves with adiabatically varying relative

amplitudes, they are experimentally realizable in free space by focusing a

temporally variant beam with a high numerical aperture lens.

23 Nov 2016

We demonstrate pixelation-free real-time widefield endoscopic imaging through an aperiodic multicore fiber (MCF) without any distal opto-mechanical elements or proximal scanners. Exploiting the memory effect in MCFs, we obtain the images in our system directly, without any post-processing, using a static wavefront correction obtained from a single calibration procedure. Our approach allows for video-rate 3D widefield imaging of incoherently illuminated objects with imaging speed not limited by the wavefront shaping device refresh rate.

CNRS, Michigan State University, University of Goettingen, Argonne National Laboratory, Delft University of Technology, University of Maryland, Technical University of Munich, Utrecht University, Ludwig-Maximilians-Universität München, Technische Universität Berlin, Ecole Polytechnique Fédérale de Lausanne, Paul Scherrer Institute, Centrale Marseille, Centrum Wiskunde & Informatica, Institut Fresnel, Université de Lorraine, Aix-Marseille Université, Koc University

Phase retrieval is an inverse problem that, on one hand, is crucial in many

applications across imaging and physics, and, on the other hand, leads to deep

research questions in theoretical signal processing and applied harmonic

analysis. This survey paper is an outcome of the recent workshop Phase

Retrieval in Mathematics and Applications (PRiMA) (held on August 5-9, 2024, at

the Lorentz Center in Leiden, The Netherlands) that brought together experts

working on theoretical and practical aspects of the phase retrieval problem

with the purpose to formulate and explore essential open problems in the field.

22 Dec 2023

We describe a phase-retrieval-based imaging method to directly spatially resolve the vector lattice distortions in an extended crystalline sample by explicit coupling of independent Bragg ptychography data sets into the reconstruction process. Our method addresses this multi-peak Bragg ptychography (MPBP) inverse problem by explicit gradient descent optimization of an objective function based on modeling of the probe-lattice interaction, along with corrective steps to address spurious reconstruction artifacts. Robust convergence of the optimization process is ensured by computing exact gradients with the automatic differentiation capabilities of high-performance computing software packages. We demonstrate MPBP reconstruction with simulated ptychography data mimicking diffraction from a single crystal membrane containing heterogeneities that manifest as phase discontinuities in the diffracted wave. We show the superior ability of such an optimization-based approach in removing reconstruction artifacts compared to existing phase retrieval and lattice distortion reconstruction approaches.

06 Jul 2017

The logarithmic derivative of a point process plays a key role in the general approach, due to the third author, to constructing diffusions preserving a given point process. In this paper we explicitly compute the logarithmic derivative for determinantal processes on $\mathbb{R}$ with integrable kernels, a large class that includes all the classical processes of random matrix theory as well as processes associated with de Branges spaces. The argument uses the quasi-invariance of our processes established by the first author.

25 Jun 2015

Objective. The main goal of this work is to develop a model for multi-sensor

signals such as MEG or EEG signals, that accounts for the inter-trial

variability, suitable for corresponding binary classification problems. An

important constraint is that the model be simple enough to handle small size

and unbalanced datasets, as often encountered in BCI type experiments.

Approach. The method involves a linear mixed-effects statistical model, a wavelet

transform and spatial filtering, and aims at the characterization of localized

discriminant features in multi-sensor signals. After discrete wavelet transform

and spatial filtering, a projection onto the relevant wavelet and spatial

channels subspaces is used for dimension reduction. The projected signals are

then decomposed as the sum of a signal of interest (i.e. discriminant) and

background noise, using a very simple Gaussian linear mixed model. Main

results. Thanks to the simplicity of the model, the corresponding parameter

estimation problem is simplified. Robust estimates of class-covariance matrices

are obtained from small sample sizes and an effective Bayes plug-in classifier

is derived. The approach is applied to the detection of error potentials in

multichannel EEG data, in a very unbalanced situation (detection of rare

events). Classification results prove the relevance of the proposed approach in

such a context. Significance. The combination of a linear mixed model, wavelet

transform and spatial filtering for EEG classification is, to the best of our

knowledge, an original approach, which is proven to be effective. This paper

improves on earlier results on similar problems, and the three main ingredients

all play an important role.

Explanations obtained from transformer-based architectures in the form of raw attention can be seen as a class-agnostic saliency map. Additionally, attention-based pooling serves as a form of masking in the feature space. Motivated by this observation, we design an attention-based pooling mechanism intended to replace Global Average Pooling (GAP) at inference. This mechanism, called Cross-Attention Stream (CA-Stream), comprises a stream of cross-attention blocks interacting with features at different network depths. CA-Stream enhances interpretability in models, while preserving recognition performance.

20 Jun 2013

The classical Transfer-Matrix Method (TMM) is often used to calculate the input impedance of woodwind instruments. However, the TMM ignores the possible influence of the radiated sound from toneholes on other open holes. In this paper a method is proposed to account for external tonehole interactions. We describe the Transfer-Matrix Method with external Interaction (TMMI) and then compare results using this approach with the Finite Element Method (FEM) and TMM, as well as with experimental data. It is found that the external tonehole interactions increase the amount of radiated energy, reduce slightly the lower resonance frequencies, and modify significantly the response near and above the tonehole lattice cutoff frequency. In an appendix, a simple perturbation of the TMM to account for external interactions is investigated, though it is found to be inadequate at low frequencies and for holes spaced far apart.
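To make the classical TMM concrete, the sketch below chains lossless cylindrical segments and reads off the input impedance. It shows only the textbook plane-wave building block: toneholes, viscothermal losses, radiation impedance and the external interactions introduced by the TMMI are all left out. The air constants and the function names are assumptions chosen for illustration.

```python
import math

RHO = 1.2   # air density in kg/m^3 (assumed value)
C = 343.0   # speed of sound in m/s (assumed value)

def cylinder_matrix(length, radius, freq):
    """Transfer matrix of a lossless cylinder, relating (pressure, volume
    flow) at its input to (pressure, volume flow) at its output."""
    k = 2.0 * math.pi * freq / C             # wavenumber
    zc = RHO * C / (math.pi * radius ** 2)   # characteristic impedance
    kl = k * length
    return ((math.cos(kl), 1j * zc * math.sin(kl)),
            (1j * math.sin(kl) / zc, math.cos(kl)))

def matmul2(a, b):
    """Product of two 2x2 matrices stored as nested tuples."""
    return tuple(
        tuple(sum(a[i][n] * b[n][j] for n in range(2)) for j in range(2))
        for i in range(2)
    )

def input_impedance(segments, freq, z_load=0.0):
    """Input impedance of a chain of (length, radius) cylinders, listed from
    the input end, terminated by z_load (0 models an ideal open end)."""
    m = ((1.0, 0.0), (0.0, 1.0))
    for length, radius in segments:
        m = matmul2(m, cylinder_matrix(length, radius, freq))
    (a, b), (c, d) = m
    return (a * z_load + b) / (c * z_load + d)

# A 30 cm pipe of 7.5 mm radius, open at the far end, evaluated at 440 Hz.
z_in = input_impedance([(0.3, 0.0075)], 440.0)
```

For a single open-ended cylinder this reduces to the familiar expression j Zc tan(kL). A tonehole would enter the same chain as an extra 2x2 matrix between two cylinder segments.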

Infotaxis is a popular search algorithm designed to track a source of odor in a turbulent environment using information provided by odor detections. To exemplify its capabilities, the source-tracking task was framed as a partially observable Markov decision process consisting in finding, as fast as possible, a stationary target hidden in a 2D grid using stochastic partial observations of the target location. Here we provide an extended review of infotaxis, together with a toolkit for devising better strategies. We first characterize the performance of infotaxis in domains from 1D to 4D. Our results show that, while being suboptimal, infotaxis is reliable (the probability of not reaching the source approaches zero), efficient (the mean search time scales as expected for the optimal strategy), and safe (the tail of the distribution of search times decays faster than any power law, though subexponentially). We then present three possible ways of beating infotaxis, all inspired by methods used in artificial intelligence: tree search, heuristic approximation of the value function, and deep reinforcement learning. The latter is able to find, without any prior human knowledge, the (near) optimal strategy. Altogether, our results provide evidence that the margin of improvement of infotaxis toward the optimal strategy gets smaller as the dimensionality increases.

Given its unrivaled potential of integration and scalability, silicon is

likely to become a key platform for large-scale quantum technologies.

Individual electron-encoded artificial atoms either formed by impurities or

quantum dots have emerged as a promising solution for silicon-based integrated

quantum circuits. However, single qubits featuring an optical interface needed

for large-distance exchange of information have not yet been isolated in such a

prevailing semiconductor. Here we show the isolation of single optically-active

point defects in a commercial silicon-on-insulator wafer implanted with carbon

atoms. These artificial atoms exhibit a bright, linearly polarized

single-photon emission at telecom wavelengths suitable for long-distance

propagation in optical fibers. Our results demonstrate that despite its small

bandgap (~ 1.1 eV) a priori unfavorable towards such observation, silicon can

accommodate point defects optically isolable at single scale, like in

wide-bandgap semiconductors. This work opens numerous perspectives for

silicon-based quantum technologies, from integrated quantum photonics to

quantum communications and metrology.

11 Apr 2015

Error estimates for a numerical approximation to the compressible barotropic Navier-Stokes equations

We present a general method, based on the relative energy of the system, that provides an unconditional error estimate for the approximate solution of the barotropic Navier-Stokes equations obtained by time and space discretization. We use this methodology to derive an error estimate for a specific DG/finite element scheme whose convergence was proved in [27]. This is an extended version of the paper submitted to IMAJNA.

This paper from researchers at Sorbonne Paris Cité and Institut Fresnel introduces a wavefront sensing scheme that uses a thin diffuser placed in front of a camera. The method leverages the diffuser's 'memory effect' to translate wavefront gradients into measurable speckle pattern displacements, enabling quantitative phase imaging with a sensitivity of λ/300 and exhibiting broadband compatibility under white-light illumination.

12 Jul 2018

The study of quantum quasi-particles at low temperatures, including their

statistics, is a frontier area in modern physics. In a seminal paper F.D.

Haldane proposed a definition based on a generalization of the Pauli exclusion

principle for fractional quantum statistics. The present paper is a study of

quantum quasi-particles obeying Haldane statistics in a fully non-linear

kinetic Boltzmann equation model with large initial data on a torus. Strong $L^1$

solutions are obtained for the Cauchy problem. The main results concern

existence, uniqueness and stability. Depending on the space dimension and the

collision kernel, the results obtained are local or global in time.

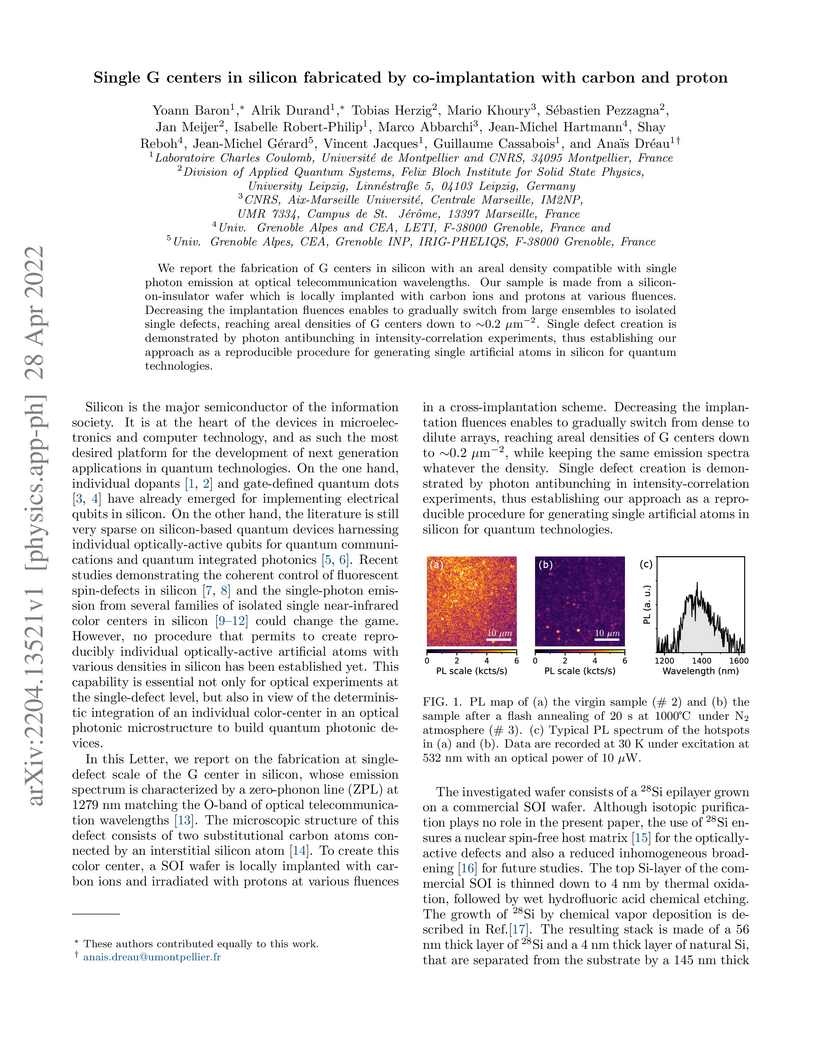

28 Apr 2022

We report the fabrication of G centers in silicon with an areal density

compatible with single photon emission at optical telecommunication

wavelengths. Our sample is made from a silicon-on-insulator wafer which is

locally implanted with carbon ions and protons at various fluences. Decreasing

the implantation fluences makes it possible to switch gradually from large ensembles to

isolated single defects, reaching areal densities of G centers down to

$\sim 0.2\,\mu\mathrm{m}^{-2}$. Single-defect creation is demonstrated by photon

antibunching in intensity-correlation experiments, thus establishing our

approach as a reproducible procedure for generating single artificial atoms in

silicon for quantum technologies.
