China Mobile Research

Researchers from Nanjing University and China Mobile Research decompose large language model reasoning into knowledge retrieval and knowledge-free reasoning components, finding that knowledge-free reasoning achieves near-perfect cross-lingual transfer, while knowledge retrieval significantly hinders it. Their interpretability analysis points to shared neural mechanisms for knowledge-free reasoning across different languages.

17 Apr 2025

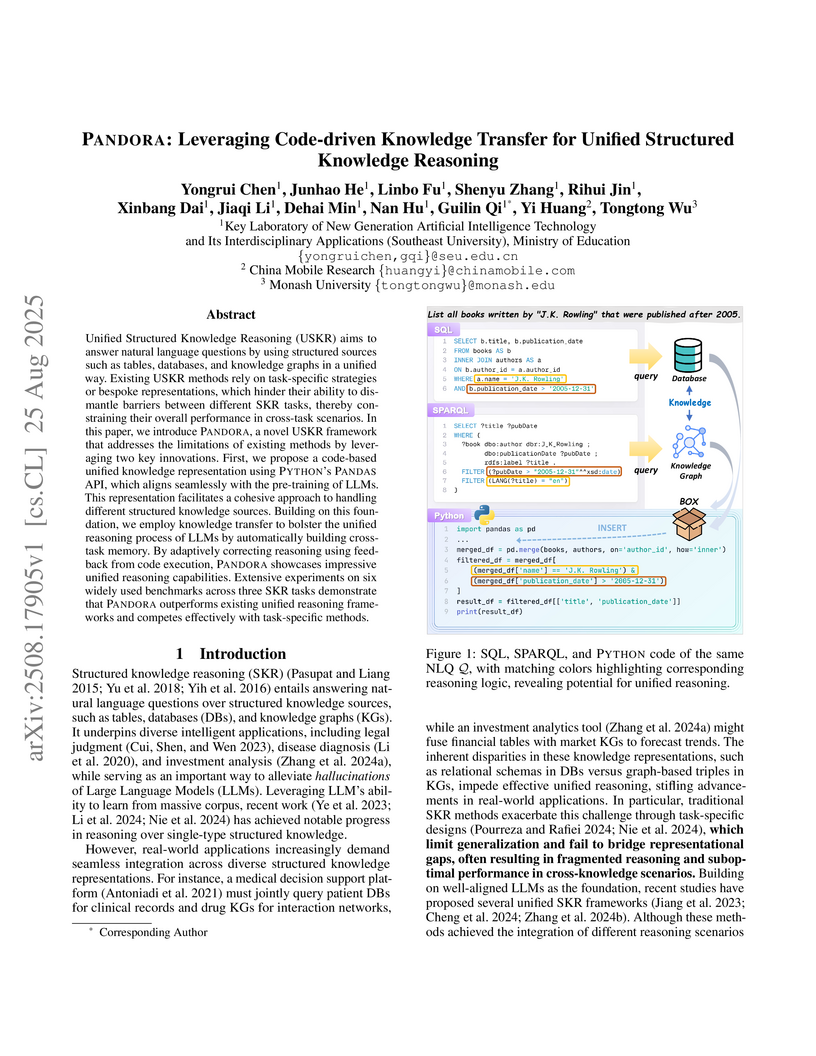

A code-driven framework called PANDORA enables unified reasoning across diverse structured knowledge sources (tables, databases, knowledge graphs) by representing all data using Python's Pandas API, achieving performance comparable to task-specific methods while facilitating knowledge transfer through shared demonstrations and execution guidance.

Unified Structured Knowledge Reasoning (USKR) aims to answer natural language questions by using structured sources such as tables, databases, and knowledge graphs in a unified way. Existing USKR methods rely on task-specific strategies or bespoke representations, which hinder their ability to dismantle barriers between different SKR tasks, thereby constraining their overall performance in cross-task scenarios. In this paper, we introduce \textsc{Pandora}, a novel USKR framework that addresses the limitations of existing methods by leveraging two key innovations. First, we propose a code-based unified knowledge representation using \textsc{Python}'s \textsc{Pandas} API, which aligns seamlessly with the pre-training of LLMs. This representation facilitates a cohesive approach to handling different structured knowledge sources. Building on this foundation, we employ knowledge transfer to bolster the unified reasoning process of LLMs by automatically building cross-task memory. By adaptively correcting reasoning using feedback from code execution, \textsc{Pandora} showcases impressive unified reasoning capabilities. Extensive experiments on six widely used benchmarks across three SKR tasks demonstrate that \textsc{Pandora} outperforms existing unified reasoning frameworks and competes effectively with task-specific methods.

Cascading multiple pre-trained models is an effective way to compose an end-to-end system. However, fine-tuning the full cascaded model is parameter and memory inefficient and our observations reveal that only applying adapter modules on cascaded model can not achieve considerable performance as fine-tuning. We propose an automatic and effective adaptive learning method to optimize end-to-end cascaded multi-task models based on Neural Architecture Search (NAS) framework. The candidate adaptive operations on each specific module consist of frozen, inserting an adapter and fine-tuning. We further add a penalty item on the loss to limit the learned structure which takes the amount of trainable parameters into account. The penalty item successfully restrict the searched architecture and the proposed approach is able to search similar tuning scheme with hand-craft, compressing the optimizing parameters to 8.7% corresponding to full fine-tuning on SLURP with an even better performance.

Pre-trained speech language models such as HuBERT and WavLM leverage unlabeled speech data for self-supervised learning and offer powerful representations for numerous downstream tasks. Despite the success of these models, their high requirements for memory and computing resource hinder their application on resource restricted devices. Therefore, this paper introduces GenDistiller, a novel knowledge distillation framework which generates the hidden representations of the pre-trained teacher model directly by a much smaller student network. The proposed method takes the previous hidden layer as history and implements a layer-by-layer prediction of the teacher model autoregressively. Experiments on SUPERB reveal the advantage of GenDistiller over the baseline distilling method without an autoregressive framework, with 33% fewer parameters, similar time consumption and better performance on most of the SUPERB tasks. Ultimately, the proposed GenDistiller reduces the size of WavLM by 82%.

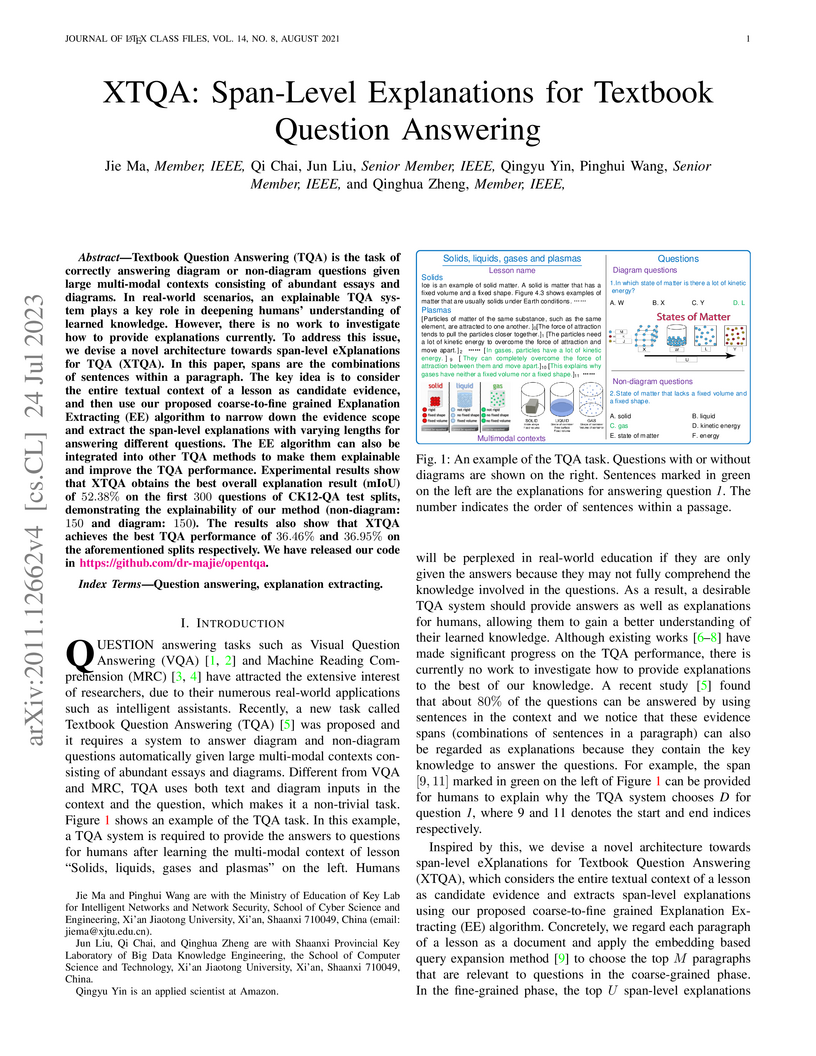

Textbook Question Answering (TQA) is a task that one should answer a

diagram/non-diagram question given a large multi-modal context consisting of

abundant essays and diagrams. We argue that the explainability of this task

should place students as a key aspect to be considered. To address this issue,

we devise a novel architecture towards span-level eXplanations of the TQA

(XTQA) based on our proposed coarse-to-fine grained algorithm, which can

provide not only the answers but also the span-level evidences to choose them

for students. This algorithm first coarsely chooses top M paragraphs relevant

to questions using the TF-IDF method, and then chooses top K evidence spans

finely from all candidate spans within these paragraphs by computing the

information gain of each span to questions. Experimental results shows that

XTQA significantly improves the state-of-the-art performance compared with

baselines. The source code is available at

this https URL

25 Apr 2022

Multi-action dialog policy (MADP), which generates multiple atomic dialog actions per turn, has been widely applied in task-oriented dialog systems to provide expressive and efficient system responses. Existing MADP models usually imitate action combinations from the labeled multi-action dialog samples. Due to data limitations, they generalize poorly toward unseen dialog flows. While interactive learning and reinforcement learning algorithms can be applied to incorporate external data sources of real users and user simulators, they take significant manual effort to build and suffer from instability. To address these issues, we propose Planning Enhanced Dialog Policy (PEDP), a novel multi-task learning framework that learns single-action dialog dynamics to enhance multi-action prediction. Our PEDP method employs model-based planning for conceiving what to express before deciding the current response through simulating single-action dialogs. Experimental results on the MultiWOZ dataset demonstrate that our fully supervised learning-based method achieves a solid task success rate of 90.6%, improving 3% compared to the state-of-the-art methods.

Multi-action dialog policy, which generates multiple atomic dialog actions

per turn, has been widely applied in task-oriented dialog systems to provide

expressive and efficient system responses. Existing policy models usually

imitate action combinations from the labeled multi-action dialog examples. Due

to data limitations, they generalize poorly toward unseen dialog flows. While

reinforcement learning-based methods are proposed to incorporate the service

ratings from real users and user simulators as external supervision signals,

they suffer from sparse and less credible dialog-level rewards. To cope with

this problem, we explore to improve multi-action dialog policy learning with

explicit and implicit turn-level user feedback received for historical

predictions (i.e., logged user feedback) that are cost-efficient to collect and

faithful to real-world scenarios. The task is challenging since the logged user

feedback provides only partial label feedback limited to the particular

historical dialog actions predicted by the agent. To fully exploit such

feedback information, we propose BanditMatch, which addresses the task from a

feedback-enhanced semi-supervised learning perspective with a hybrid objective

of semi-supervised learning and bandit learning. BanditMatch integrates

pseudo-labeling methods to better explore the action space through constructing

full label feedback. Extensive experiments show that our BanditMatch

outperforms the state-of-the-art methods by generating more concise and

informative responses. The source code and the appendix of this paper can be

obtained from this https URL

Previous contrastive learning methods for sentence representations often focus on insensitive transformations to produce positive pairs, but neglect the role of sensitive transformations that are harmful to semantic representations. Therefore, we propose an Equivariant Self-Contrastive Learning (ESCL) method to make full use of sensitive transformations, which encourages the learned representations to be sensitive to certain types of transformations with an additional equivariant learning task. Meanwhile, in order to improve practicability and generality, ESCL simplifies the implementations of traditional equivariant contrastive methods to share model parameters from the perspective of multi-task learning. We evaluate our ESCL on semantic textual similarity tasks. The proposed method achieves better results while using fewer learning parameters compared to previous methods.

Recent years have seen increasing concerns about the unsafe response

generation of large-scale dialogue systems, where agents will learn offensive

or biased behaviors from the real-world corpus. Some methods are proposed to

address the above issue by detecting and replacing unsafe training examples in

a pipeline style. Though effective, they suffer from a high annotation cost and

adapt poorly to unseen scenarios as well as adversarial attacks. Besides, the

neglect of providing safe responses (e.g. simply replacing with templates) will

cause the information-missing problem of dialogues. To address these issues, we

propose an unsupervised pseudo-label sampling method, TEMP, that can

automatically assign potential safe responses. Specifically, our TEMP method

groups responses into several clusters and samples multiple labels with an

adaptively sharpened sampling strategy, inspired by the observation that unsafe

samples in the clusters are usually few and distribute in the tail. Extensive

experiments in chitchat and task-oriented dialogues show that our TEMP

outperforms state-of-the-art models with weak supervision signals and obtains

comparable results under unsupervised learning settings.

There are no more papers matching your filters at the moment.