mechanistic-interpretability

AI systems can be trained to conceal their true capabilities during evaluations, a phenomenon termed "sandbagging," which poses a risk to safety assessments. Research by UK AISI, FAR.AI, and Anthropic demonstrated that black-box detection methods failed against such strategically underperforming models, although one-shot fine-tuning proved effective for eliciting hidden capabilities.

A study by NYU Abu Dhabi and UC Davis demonstrated that Large Language Models internalize a signal of code correctness, which can be extracted to rank code candidates more effectively than traditional confidence measures. Their Representation Engineering method improved code selection accuracy by up to 29 percentage points and often surpassed execution-feedback based rankers.

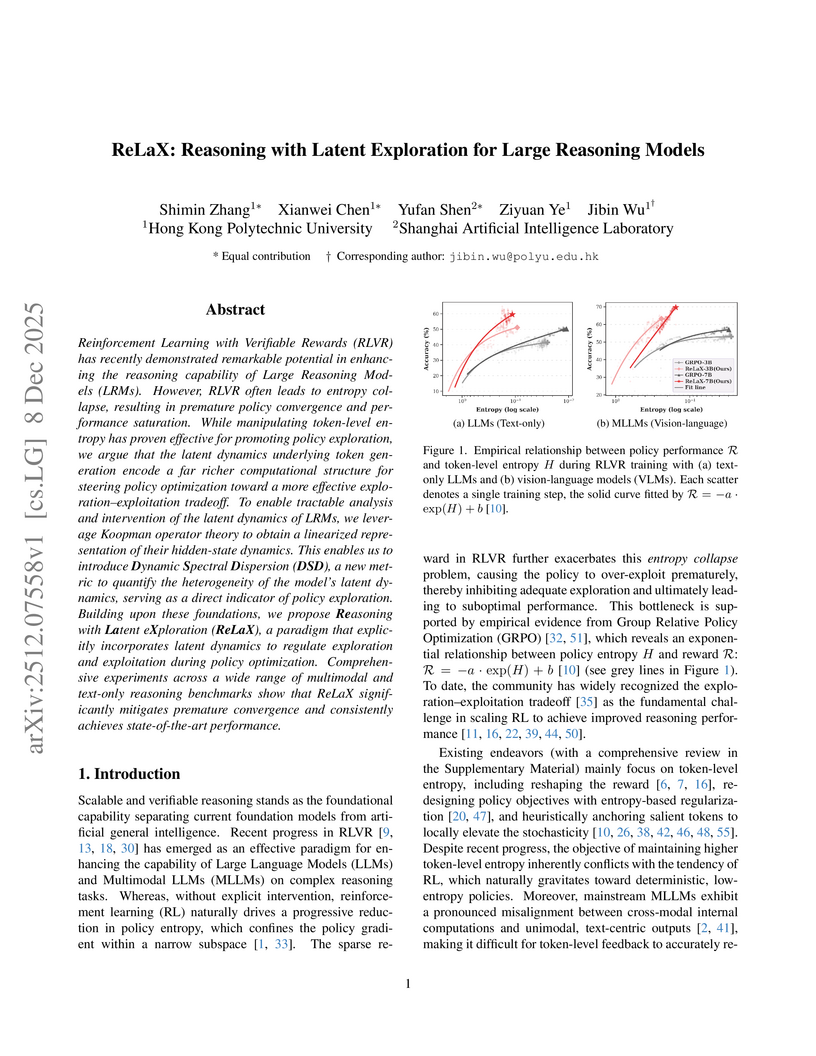

The ReLaX framework improves reasoning capabilities in Large Reasoning Models by addressing premature policy convergence through latent exploration, employing Koopman operator theory and Dynamic Spectral Dispersion. This approach enables sustained performance gains, achieving state-of-the-art results in both multimodal and mathematical reasoning benchmarks.

A lightweight framework, RAGLens, accurately identifies and explains faithfulness issues in Retrieval-Augmented Generation outputs by leveraging Sparse Autoencoders on LLM internal states. This approach achieves over 80% AUC on RAG benchmarks and provides interpretable, token-level feedback for effective hallucination mitigation.

We present SDialog, an MIT-licensed open-source Python toolkit that unifies dialog generation, evaluation and mechanistic interpretability into a single end-to-end framework for building and analyzing LLM-based conversational agents. Built around a standardized \texttt{Dialog} representation, SDialog provides: (1) persona-driven multi-agent simulation with composable orchestration for controlled, synthetic dialog generation, (2) comprehensive evaluation combining linguistic metrics, LLM-as-a-judge and functional correctness validators, (3) mechanistic interpretability tools for activation inspection and steering via feature ablation and induction, and (4) audio generation with full acoustic simulation including 3D room modeling and microphone effects. The toolkit integrates with all major LLM backends, enabling mixed-backend experiments under a unified API. By coupling generation, evaluation, and interpretability in a dialog-centric architecture, SDialog enables researchers to build, benchmark and understand conversational systems more systematically.

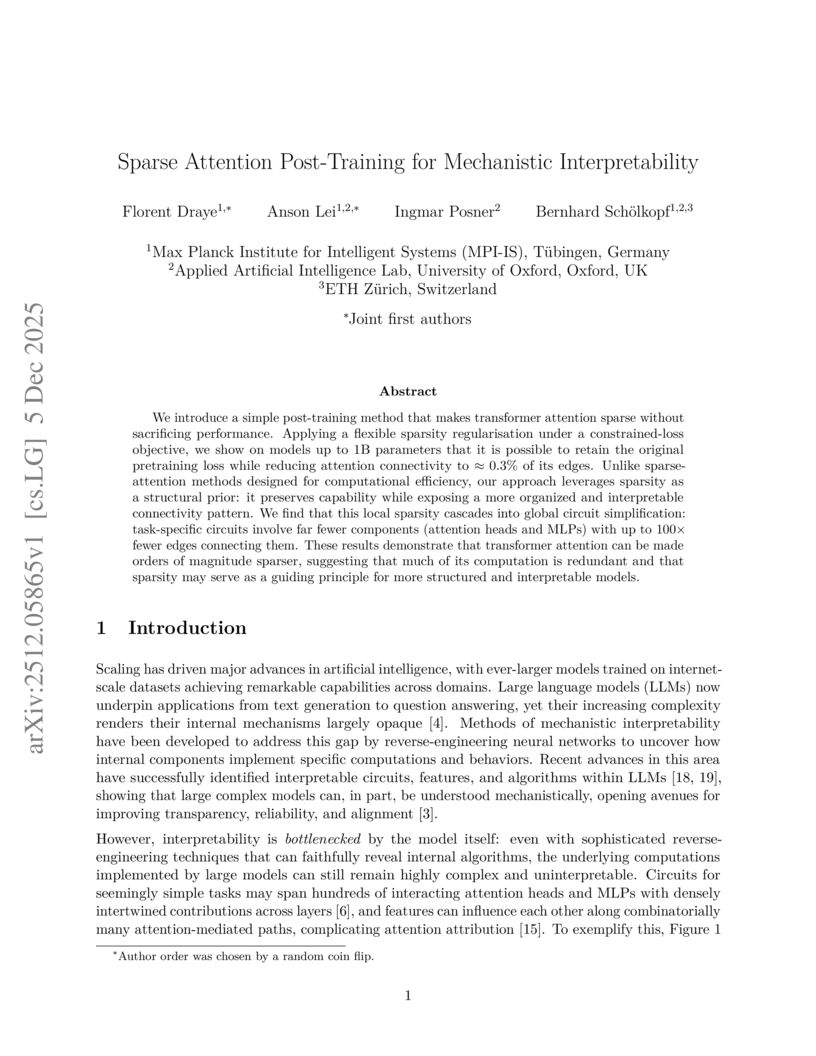

Researchers at MPI-IS, University of Oxford, and ETH Zürich developed a post-training method that makes Transformer attention layers sparse by retaining only 0.2-0.3% of active attention edges. This approach maintains the original model's performance while drastically simplifying its internal computational graphs for enhanced mechanistic interpretability.

Diffusion models have achieved remarkable performance in generative modeling, yet their theoretical foundations are often intricate, and the gap between mathematical formulations in papers and practical open-source implementations can be difficult to bridge. Existing tutorials primarily focus on deriving equations, offering limited guidance on how diffusion models actually operate in code. To address this, we present a concise implementation of approximately 300 lines that explains diffusion models from a code-execution perspective. Our minimal example preserves the essential components -- including forward diffusion, reverse sampling, the noise-prediction network, and the training loop -- while removing unnecessary engineering details. This technical report aims to provide researchers with a clear, implementation-first understanding of how diffusion models work in practice and how code and theory correspond. Our code and pre-trained models are available at: this https URL.

Transformers and more specifically decoder-only transformers dominate modern LLM architectures. While they have shown to work exceptionally well, they are not without issues, resulting in surprising failure modes and predictably asymmetric performance degradation. This article is a study of many of these observed failure modes of transformers through the lens of graph neural network (GNN) theory. We first make the case that much of deep learning, including transformers, is about learnable information mixing and propagation. This makes the study of model failure modes a study of bottlenecks in information propagation. This naturally leads to GNN theory, where there is already a rich literature on information propagation bottlenecks and theoretical failure modes of models. We then make the case that many issues faced by GNNs are also experienced by transformers. In addition, we analyze how the causal nature of decoder-only transformers create interesting geometric properties in information propagation, resulting in predictable and potentially devastating failure modes. Finally, we observe that existing solutions in transformer research tend to be ad-hoc and driven by intuition rather than grounded theoretical motivation. As such, we unify many such solutions under a more theoretical perspective, providing insight into why they work, what problem they are actually solving, and how they can be further improved to target specific failure modes of transformers. Overall, this article is an attempt to bridge the gap between observed failure modes in transformers and a general lack of theoretical understanding of them in this space.

This research investigates how RL post-training enhances large language models' reasoning by disentangling length and compositional generalization, establishing that compositional structure is a primary determinant of problem difficulty. The work demonstrates that models can synthesize novel reasoning patterns from learned substructures without direct training on those specific compositions.

A research collaboration led by Hong Kong Baptist University, RIKEN AIP, and The University of Tokyo introduced a gradient alignment framework to mechanistically analyze the optimization dynamics of preference optimization (PO) methods. The study found that Direct Preference Optimization (DPO) functions as stable supervised learning where negative learning regularizes overfitting, while Proximal Policy Optimization (PPO) operates as stable reinforcement learning through balanced exploration, leading to improved LLM performance across Pythia-2.8B, Qwen3-1.7B, and Llama3-8B models.

Researchers identified a remarkably sparse subset of "H-Neurons" in large language models whose activation reliably predicts hallucinatory outputs and is causally linked to over-compliance behaviors. These hallucination-associated neural circuits are found to emerge primarily during the pre-training phase and remain largely stable through post-training alignment.

Training vision language models (VLMs) aims to align visual representations from a vision encoder with the textual representations of a pretrained large language model (LLM). However, many VLMs exhibit reduced factual recall performance compared to their LLM backbones, raising the question of how effective multimodal fine-tuning is at extending existing mechanisms within the LLM to visual inputs. We argue that factual recall based on visual inputs requires VLMs to solve a two-hop problem: (1) forming entity representations from visual inputs, and (2) recalling associated factual knowledge based on these entity representations. By benchmarking 14 VLMs with various architectures (LLaVA, Native, Cross-Attention), sizes (7B-124B parameters), and training setups on factual recall tasks against their original LLM backbone models, we find that 11 of 14 models exhibit factual recall degradation. We select three models with high and two models with low performance degradation, and use attribution patching, activation patching, and probing to show that degraded VLMs struggle to use the existing factual recall circuit of their LLM backbone, because they resolve the first hop too late in the computation. In contrast, high-performing VLMs resolve entity representations early enough to reuse the existing factual recall mechanism. Finally, we demonstrate two methods to recover performance: patching entity representations from the LLM backbone into the VLM, and prompting with chain-of-thought reasoning. Our results highlight that the speed of early entity resolution critically determines how effective VLMs are in using preexisting LLM mechanisms. More broadly, our work illustrates how mechanistic analysis can explain and unveil systematic failures in multimodal alignment.

This work presents a comprehensive framework for AI deception, defining it functionally and outlining its emergence from interacting factors and its adaptive nature as an iterative "Deception Cycle." It categorizes deceptive behaviors and associated risks, while also surveying current detection and mitigation strategies, identifying limitations, and highlighting grand challenges for future research and governance.

Researchers from the National University of Singapore and Indian Institute of Technology established the first unified theoretical framework for Sparse Dictionary Learning (SDL) methods in mechanistic interpretability. This work formalizes how SDL recovers interpretable features and offers the first theoretical explanations for observed phenomena like feature absorption and dead neurons in neural network representations.

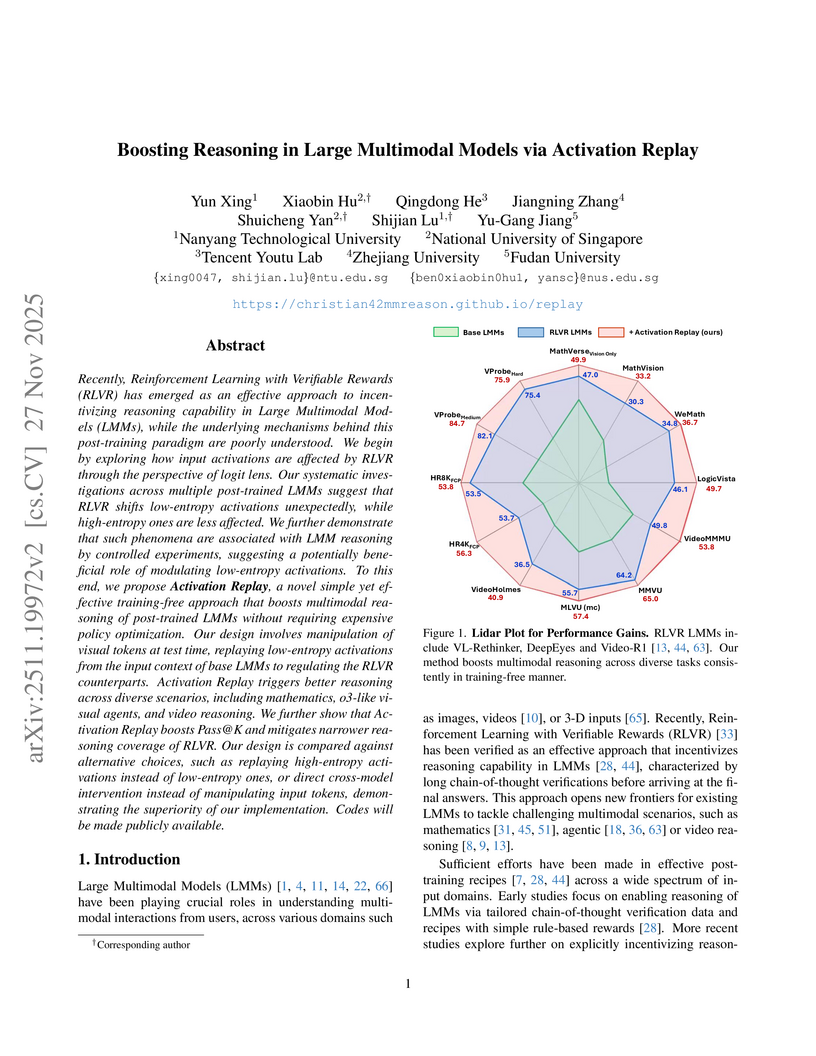

An investigation into Reinforcement Learning with Verifiable Rewards (RLVR) in Large Multimodal Models (LMMs) uncovers that RLVR unexpectedly alters low-entropy input activations. Leveraging this insight, a training-free method called "Activation Replay" is introduced, which enhances multimodal reasoning across various tasks and improves Pass@K metrics with negligible additional computational overhead during inference.

Large language models (LLMs) can reliably distinguish grammatical from ungrammatical sentences, but how grammatical knowledge is represented within the models remains an open question. We investigate whether different syntactic phenomena recruit shared or distinct components in LLMs. Using a functional localization approach inspired by cognitive neuroscience, we identify the LLM units most responsive to 67 English syntactic phenomena in seven open-weight models. These units are consistently recruited across sentences containing the phenomena and causally support the models' syntactic performance. Critically, different types of syntactic agreement (e.g., subject-verb, anaphor, determiner-noun) recruit overlapping sets of units, suggesting that agreement constitutes a meaningful functional category for LLMs. This pattern holds in English, Russian, and Chinese; and further, in a cross-lingual analysis of 57 diverse languages, structurally more similar languages share more units for subject-verb agreement. Taken together, these findings reveal that syntactic agreement-a critical marker of syntactic dependencies-constitutes a meaningful category within LLMs' representational spaces.

Researchers at MIT rigorously analyze the mean-field dynamics of Transformer attention, framing it as an interacting particle system that leads to eventual representation collapse but also exhibits long-lived metastable multi-cluster states. The work quantitatively explains the benefits of Pre-Layer Normalization in delaying collapse and identifies a phase transition in long-context attention with logarithmic scaling.

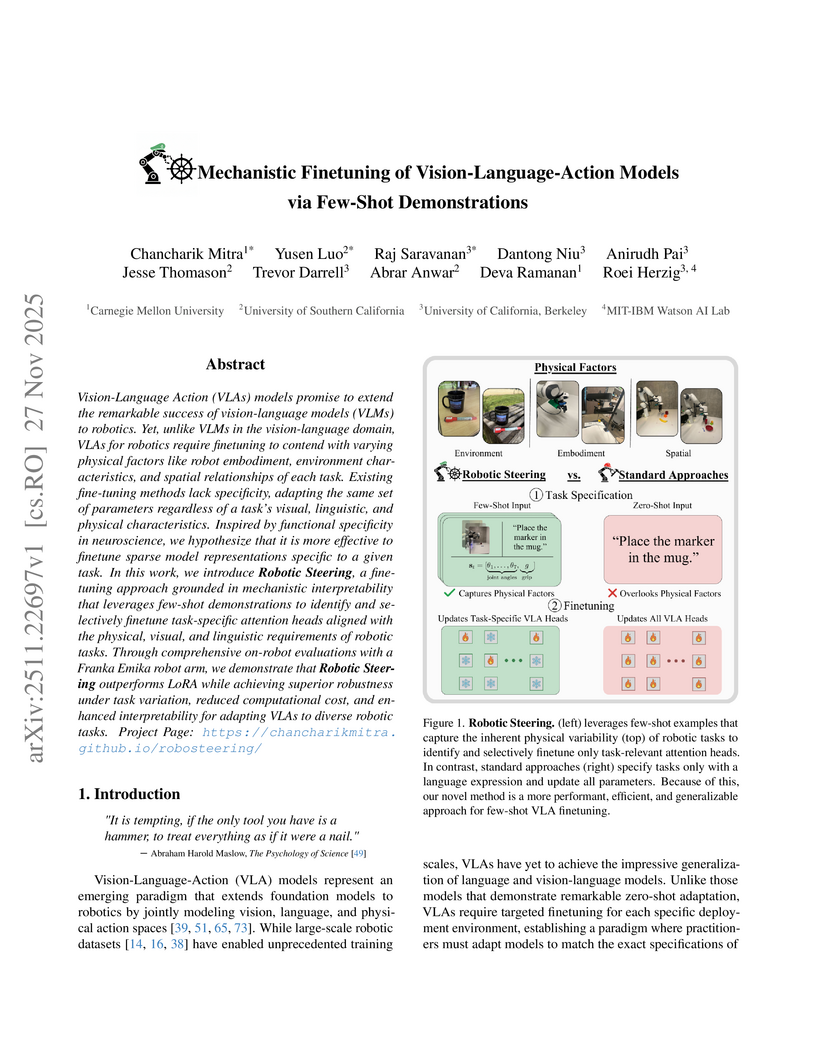

Researchers from Carnegie Mellon, USC, UC Berkeley, and MIT-IBM Watson AI Lab developed "Robotic Steering," a method for efficiently adapting Vision-Language-Action (VLA) models by mechanistically identifying and finetuning only task-specific attention heads using few-shot demonstrations. This approach achieves comparable or superior task performance, reducing trainable parameters by 96% and finetuning time by 21% while enhancing robustness and generalization compared to general finetuning techniques like LoRA.

A collaborative effort from Stanford, University of Wisconsin–Madison, and University at Buffalo researchers introduces a constructive framework for building Multi-Layer Perceptrons that efficiently store factual knowledge as key-value mappings for Transformer architectures. The proposed method achieves asymptotically optimal parameter efficiency and facilitates a modular approach to fact editing, demonstrably outperforming current state-of-the-art techniques.

Research from the University of Michigan, Princeton, and Harvard investigated how explicitly training Transformer models for a "world model" influences their internal representations and downstream performance. The study demonstrated that models pre-trained with world-modeling objectives develop more linearly decodable and causally steerable state representations, which subsequently leads to greater accuracy gains when combined with reinforcement learning post-training.

There are no more papers matching your filters at the moment.