Dark Matter AI Inc.

Recent advances in multi-agent reinforcement learning have been largely limited in training one model from scratch for every new task. The limitation is due to the restricted model architecture related to fixed input and output dimensions. This hinders the experience accumulation and transfer of the learned agent over tasks with diverse levels of difficulty (e.g. 3 vs 3 or 5 vs 6 multi-agent games). In this paper, we make the first attempt to explore a universal multi-agent reinforcement learning pipeline, designing one single architecture to fit tasks with the requirement of different observation and action configurations. Unlike previous RNN-based models, we utilize a transformer-based model to generate a flexible policy by decoupling the policy distribution from the intertwined input observation with an importance weight measured by the merits of the self-attention mechanism. Compared to a standard transformer block, the proposed model, named as Universal Policy Decoupling Transformer (UPDeT), further relaxes the action restriction and makes the multi-agent task's decision process more explainable. UPDeT is general enough to be plugged into any multi-agent reinforcement learning pipeline and equip them with strong generalization abilities that enables the handling of multiple tasks at a time. Extensive experiments on large-scale SMAC multi-agent competitive games demonstrate that the proposed UPDeT-based multi-agent reinforcement learning achieves significant results relative to state-of-the-art approaches, demonstrating advantageous transfer capability in terms of both performance and training speed (10 times faster).

Researchers from Sun Yat-sen University, Hong Kong University of Science and Technology, Hong Kong Polytechnic University, and Tencent Jarvis Lab introduce MedDG, a large-scale, entity-centric medical consultation dataset. Experiments using MedDG demonstrate that explicitly incorporating medical entity information significantly improves the quality and medical accuracy of generated dialogue responses.

Previous math word problem solvers following the encoder-decoder paradigm fail to explicitly incorporate essential math symbolic constraints, leading to unexplainable and unreasonable predictions. Herein, we propose Neural-Symbolic Solver (NS-Solver) to explicitly and seamlessly incorporate different levels of symbolic constraints by auxiliary tasks. Our NS-Solver consists of a problem reader to encode problems, a programmer to generate symbolic equations, and a symbolic executor to obtain answers. Along with target expression supervision, our solver is also optimized via 4 new auxiliary objectives to enforce different symbolic reasoning: a) self-supervised number prediction task predicting both number quantity and number locations; b) commonsense constant prediction task predicting what prior knowledge (e.g. how many legs a chicken has) is required; c) program consistency checker computing the semantic loss between predicted equation and target equation to ensure reasonable equation mapping; d) duality exploiting task exploiting the quasi duality between symbolic equation generation and problem's part-of-speech generation to enhance the understanding ability of a solver. Besides, to provide a more realistic and challenging benchmark for developing a universal and scalable solver, we also construct a new large-scale MWP benchmark CM17K consisting of 4 kinds of MWPs (arithmetic, one-unknown linear, one-unknown non-linear, equation set) with more than 17K samples. Extensive experiments on Math23K and our CM17k demonstrate the superiority of our NS-Solver compared to state-of-the-art methods.

Facial action unit (AU) recognition is a crucial task for facial expressions analysis and has attracted extensive attention in the field of artificial intelligence and computer vision. Existing works have either focused on designing or learning complex regional feature representations, or delved into various types of AU relationship modeling. Albeit with varying degrees of progress, it is still arduous for existing methods to handle complex situations. In this paper, we investigate how to integrate the semantic relationship propagation between AUs in a deep neural network framework to enhance the feature representation of facial regions, and propose an AU semantic relationship embedded representation learning (SRERL) framework. Specifically, by analyzing the symbiosis and mutual exclusion of AUs in various facial expressions, we organize the facial AUs in the form of structured knowledge-graph and integrate a Gated Graph Neural Network (GGNN) in a multi-scale CNN framework to propagate node information through the graph for generating enhanced AU representation. As the learned feature involves both the appearance characteristics and the AU relationship reasoning, the proposed model is more robust and can cope with more challenging cases, e.g., illumination change and partial occlusion. Extensive experiments on the two public benchmarks demonstrate that our method outperforms the previous work and achieves state of the art performance.

15 Mar 2020

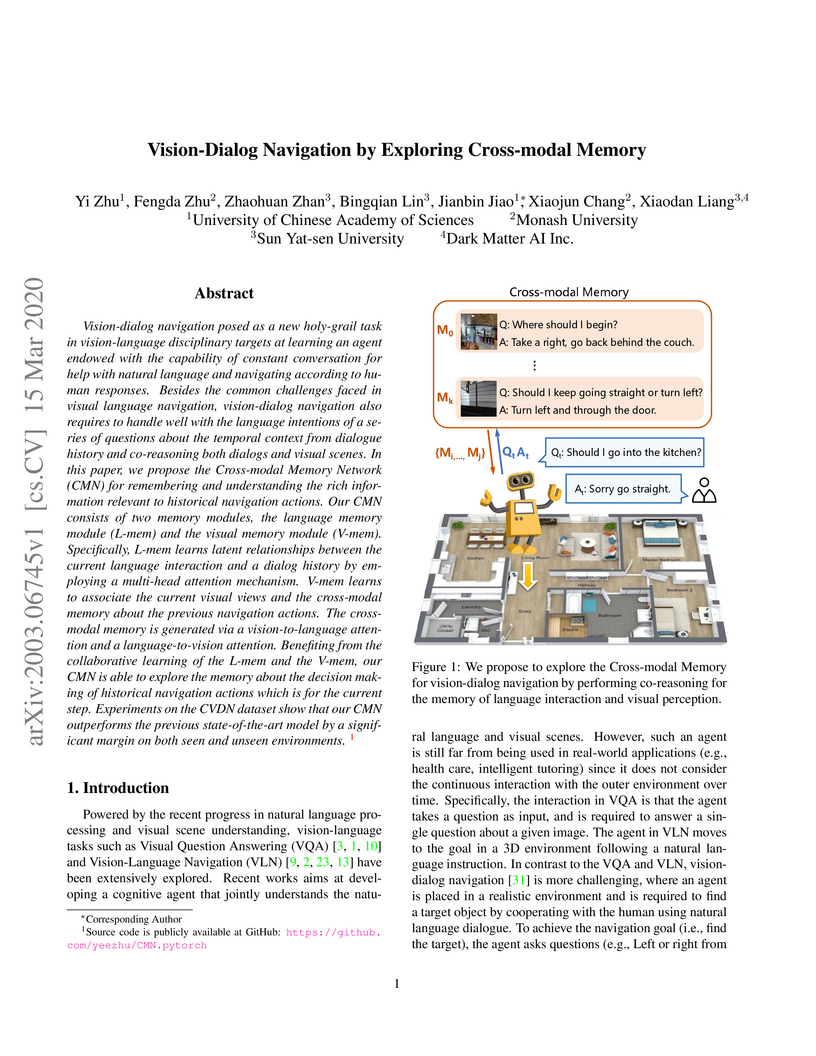

Vision-dialog navigation posed as a new holy-grail task in vision-language

disciplinary targets at learning an agent endowed with the capability of

constant conversation for help with natural language and navigating according

to human responses. Besides the common challenges faced in visual language

navigation, vision-dialog navigation also requires to handle well with the

language intentions of a series of questions about the temporal context from

dialogue history and co-reasoning both dialogs and visual scenes. In this

paper, we propose the Cross-modal Memory Network (CMN) for remembering and

understanding the rich information relevant to historical navigation actions.

Our CMN consists of two memory modules, the language memory module (L-mem) and

the visual memory module (V-mem). Specifically, L-mem learns latent

relationships between the current language interaction and a dialog history by

employing a multi-head attention mechanism. V-mem learns to associate the

current visual views and the cross-modal memory about the previous navigation

actions. The cross-modal memory is generated via a vision-to-language attention

and a language-to-vision attention. Benefiting from the collaborative learning

of the L-mem and the V-mem, our CMN is able to explore the memory about the

decision making of historical navigation actions which is for the current step.

Experiments on the CVDN dataset show that our CMN outperforms the previous

state-of-the-art model by a significant margin on both seen and unseen

environments.

Target-guided open-domain conversation aims to proactively and naturally

guide a dialogue agent or human to achieve specific goals, topics or keywords

during open-ended conversations. Existing methods mainly rely on single-turn

datadriven learning and simple target-guided strategy without considering

semantic or factual knowledge relations among candidate topics/keywords. This

results in poor transition smoothness and low success rate. In this work, we

adopt a structured approach that controls the intended content of system

responses by introducing coarse-grained keywords, attains smooth conversation

transition through turn-level supervised learning and knowledge relations

between candidate keywords, and drives an conversation towards an specified

target with discourse-level guiding strategy. Specially, we propose a novel

dynamic knowledge routing network (DKRN) which considers semantic knowledge

relations among candidate keywords for accurate next topic prediction of next

discourse. With the help of more accurate keyword prediction, our

keyword-augmented response retrieval module can achieve better retrieval

performance and more meaningful conversations. Besides, we also propose a novel

dual discourse-level target-guided strategy to guide conversations to reach

their goals smoothly with higher success rate. Furthermore, to push the

research boundary of target-guided open-domain conversation to match real-world

scenarios better, we introduce a new large-scale Chinese target-guided

open-domain conversation dataset (more than 900K conversations) crawled from

Sina Weibo. Quantitative and human evaluations show our method can produce

meaningful and effective target-guided conversations, significantly improving

over other state-of-the-art methods by more than 20% in success rate and more

than 0.6 in average smoothness score.

Automatic dialogue coherence evaluation has attracted increasing attention

and is crucial for developing promising dialogue systems. However, existing

metrics have two major limitations: (a) they are mostly trained in a simplified

two-level setting (coherent vs. incoherent), while humans give Likert-type

multi-level coherence scores, dubbed as "quantifiable"; (b) their predicted

coherence scores cannot align with the actual human rating standards due to the

absence of human guidance during training. To address these limitations, we

propose Quantifiable Dialogue Coherence Evaluation (QuantiDCE), a novel

framework aiming to train a quantifiable dialogue coherence metric that can

reflect the actual human rating standards. Specifically, QuantiDCE includes two

training stages, Multi-Level Ranking (MLR) pre-training and Knowledge

Distillation (KD) fine-tuning. During MLR pre-training, a new MLR loss is

proposed for enabling the model to learn the coarse judgement of coherence

degrees. Then, during KD fine-tuning, the pretrained model is further finetuned

to learn the actual human rating standards with only very few human-annotated

data. To advocate the generalizability even with limited fine-tuning data, a

novel KD regularization is introduced to retain the knowledge learned at the

pre-training stage. Experimental results show that the model trained by

QuantiDCE presents stronger correlations with human judgements than the other

state-of-the-art metrics.

In this paper, we revisit the solving bias when evaluating models on current Math Word Problem (MWP) benchmarks. However, current solvers exist solving bias which consists of data bias and learning bias due to biased dataset and improper training strategy. Our experiments verify MWP solvers are easy to be biased by the biased training datasets which do not cover diverse questions for each problem narrative of all MWPs, thus a solver can only learn shallow heuristics rather than deep semantics for understanding problems. Besides, an MWP can be naturally solved by multiple equivalent equations while current datasets take only one of the equivalent equations as ground truth, forcing the model to match the labeled ground truth and ignoring other equivalent equations. Here, we first introduce a novel MWP dataset named UnbiasedMWP which is constructed by varying the grounded expressions in our collected data and annotating them with corresponding multiple new questions manually. Then, to further mitigate learning bias, we propose a Dynamic Target Selection (DTS) Strategy to dynamically select more suitable target expressions according to the longest prefix match between the current model output and candidate equivalent equations which are obtained by applying commutative law during training. The results show that our UnbiasedMWP has significantly fewer biases than its original data and other datasets, posing a promising benchmark for fairly evaluating the solvers' reasoning skills rather than matching nearest neighbors. And the solvers trained with our DTS achieve higher accuracies on multiple MWP benchmarks. The source code is available at this https URL.

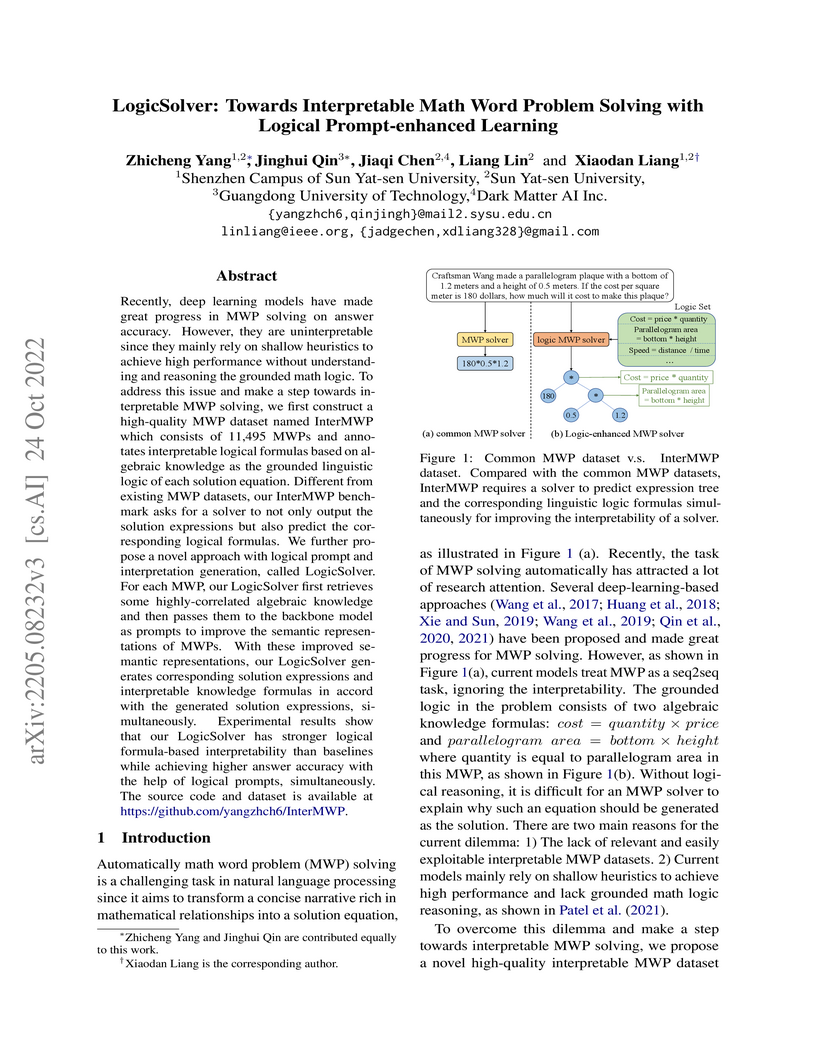

Recently, deep learning models have made great progress in MWP solving on answer accuracy. However, they are uninterpretable since they mainly rely on shallow heuristics to achieve high performance without understanding and reasoning the grounded math logic. To address this issue and make a step towards interpretable MWP solving, we first construct a high-quality MWP dataset named InterMWP which consists of 11,495 MWPs and annotates interpretable logical formulas based on algebraic knowledge as the grounded linguistic logic of each solution equation. Different from existing MWP datasets, our InterMWP benchmark asks for a solver to not only output the solution expressions but also predict the corresponding logical formulas. We further propose a novel approach with logical prompt and interpretation generation, called LogicSolver. For each MWP, our LogicSolver first retrieves some highly-correlated algebraic knowledge and then passes them to the backbone model as prompts to improve the semantic representations of MWPs. With these improved semantic representations, our LogicSolver generates corresponding solution expressions and interpretable knowledge formulas in accord with the generated solution expressions, simultaneously. Experimental results show that our LogicSolver has stronger logical formula-based interpretability than baselines while achieving higher answer accuracy with the help of logical prompts, simultaneously. The source code and dataset is available at this https URL.

FRAME (Filters, Random fields, And Maximum Entropy) is an energy-based descriptive model that synthesizes visual realism by capturing mutual patterns from structural input signals. The maximum likelihood estimation (MLE) is applied by default, yet conventionally causes the unstable training energy that wrecks the generated structures, which remains unexplained. In this paper, we provide a new theoretical insight to analyze FRAME, from a perspective of particle physics ascribing the weird phenomenon to KL-vanishing issue. In order to stabilize the energy dissipation, we propose an alternative Wasserstein distance in discrete time based on the conclusion that the Jordan-Kinderlehrer-Otto (JKO) discrete flow approximates KL discrete flow when the time step size tends to 0. Besides, this metric can still maintain the model's statistical consistency. Quantitative and qualitative experiments have been respectively conducted on several widely used datasets. The empirical studies have evidenced the effectiveness and superiority of our method.

There are no more papers matching your filters at the moment.