Fraunhofer Institute for Computer Graphics Research IGD

Recent advances in generative modeling have enabled the generation of high-quality synthetic data that is applicable in a variety of domains, including face recognition. Here, state-of-the-art generative models typically rely on conditioning and fine-tuning of powerful pretrained diffusion models to facilitate the synthesis of realistic images of a desired identity. Yet, these models often do not consider the identity of subjects during training, leading to poor consistency between generated and intended identities. In contrast, methods that employ identity-based training objectives tend to overfit on various aspects of the identity, and in turn, lower the diversity of images that can be generated. To address these issues, we present in this paper a novel generative diffusion-based framework, called ID-Booth. ID-Booth consists of a denoising network responsible for data generation, a variational auto-encoder for mapping images to and from a lower-dimensional latent space and a text encoder that allows for prompt-based control over the generation procedure. The framework utilizes a novel triplet identity training objective and enables identity-consistent image generation while retaining the synthesis capabilities of pretrained diffusion models. Experiments with a state-of-the-art latent diffusion model and diverse prompts reveal that our method facilitates better intra-identity consistency and inter-identity separability than competing methods, while achieving higher image diversity. In turn, the produced data allows for effective augmentation of small-scale datasets and training of better-performing recognition models in a privacy-preserving manner. The source code for the ID-Booth framework is publicly available at this https URL.

In this paper, we present a set of extremely efficient and high throughput

models for accurate face verification, MixFaceNets which are inspired by Mixed

Depthwise Convolutional Kernels. Extensive experiment evaluations on Label Face

in the Wild (LFW), Age-DB, MegaFace, and IARPA Janus Benchmarks IJB-B and IJB-C

datasets have shown the effectiveness of our MixFaceNets for applications

requiring extremely low computational complexity. Under the same level of

computation complexity (< 500M FLOPs), our MixFaceNets outperform

MobileFaceNets on all the evaluated datasets, achieving 99.60% accuracy on LFW,

97.05% accuracy on AgeDB-30, 93.60 TAR (at FAR1e-6) on MegaFace, 90.94 TAR (at

FAR1e-4) on IJB-B and 93.08 TAR (at FAR1e-4) on IJB-C. With computational

complexity between 500M and 1G FLOPs, our MixFaceNets achieved results

comparable to the top-ranked models, while using significantly fewer FLOPs and

less computation overhead, which proves the practical value of our proposed

MixFaceNets. All training codes, pre-trained models, and training logs have

been made available this https URL

Idiap Research InstituteUniversity of LjubljanaTU DarmstadtHochschule DarmstadtNorwegian University of Science and Technology (NTNU)Universidad Andres BelloAmadeusFraunhofer Institute for Computer Graphics Research IGDInstitute of Systems and RoboticsFacephi companyID VisionCenterIncode Technologies Inc.Universidad de Santiago (USACH)

This work summarises and reports the results of the second Presentation Attack Detection competition on ID cards. This new version includes new elements compared to the previous one. (1) An automatic evaluation platform was enabled for automatic benchmarking; (2) Two tracks were proposed in order to evaluate algorithms and datasets, respectively; and (3) A new ID card dataset was shared with Track 1 teams to serve as the baseline dataset for the training and optimisation. The Hochschule Darmstadt, Fraunhofer-IGD, and Facephi company jointly organised this challenge. 20 teams were registered, and 74 submitted models were evaluated. For Track 1, the "Dragons" team reached first place with an Average Ranking and Equal Error rate (EER) of AV-Rank of 40.48% and 11.44% EER, respectively. For the more challenging approach in Track 2, the "Incode" team reached the best results with an AV-Rank of 14.76% and 6.36% EER, improving on the results of the first edition of 74.30% and 21.87% EER, respectively. These results suggest that PAD on ID cards is improving, but it is still a challenging problem related to the number of images, especially of bona fide images.

Face Image Quality Assessment (FIQA) aims to predict the utility of a face image for face recognition (FR) systems. State-of-the-art FIQA methods mainly rely on convolutional neural networks (CNNs), leaving the potential of Vision Transformer (ViT) architectures underexplored. This work proposes ViT-FIQA, a novel approach that extends standard ViT backbones, originally optimized for FR, through a learnable quality token designed to predict a scalar utility score for any given face image. The learnable quality token is concatenated with the standard image patch tokens, and the whole sequence is processed via global self-attention by the ViT encoders to aggregate contextual information across all patches. At the output of the backbone, ViT-FIQA branches into two heads: (1) the patch tokens are passed through a fully connected layer to learn discriminative face representations via a margin-penalty softmax loss, and (2) the quality token is fed into a regression head to learn to predict the face sample's utility. Extensive experiments on challenging benchmarks and several FR models, including both CNN- and ViT-based architectures, demonstrate that ViT-FIQA consistently achieves top-tier performance. These results underscore the effectiveness of transformer-based architectures in modeling face image utility and highlight the potential of ViTs as a scalable foundation for future FIQA research this https URL.

Knowledge distillation (KD) aims at improving the performance of a compact student model by distilling the knowledge from a high-performing teacher model. In this paper, we present an adaptive KD approach, namely AdaDistill, for deep face recognition. The proposed AdaDistill embeds the KD concept into the softmax loss by training the student using a margin penalty softmax loss with distilled class centers from the teacher. Being aware of the relatively low capacity of the compact student model, we propose to distill less complex knowledge at an early stage of training and more complex one at a later stage of training. This relative adjustment of the distilled knowledge is controlled by the progression of the learning capability of the student over the training iterations without the need to tune any hyper-parameters. Extensive experiments and ablation studies show that AdaDistill can enhance the discriminative learning capability of the student and demonstrate superiority over various state-of-the-art competitors on several challenging benchmarks, such as IJB-B, IJB-C, and ICCV2021-MFR

Foundation models such as CLIP have demonstrated exceptional zero- and few-shot transfer capabilities across diverse vision tasks. However, when fine-tuned for highly specialized biometric tasks, face recognition (FR), morphing attack detection (MAD), and presentation attack detection (PAD), these models may suffer from over-specialization. Thus, they may lose one of their foundational strengths, cross-domain generalization. In this work, we systematically quantify these trade-offs by evaluating three instances of CLIP fine-tuned for FR, MAD, and PAD. We evaluate each adapted model as well as the original CLIP baseline on 14 general vision datasets under zero-shot and linear-probe protocols, alongside common FR, MAD, and PAD benchmarks. Our results indicate that fine-tuned models suffer from over-specialization, especially when fine-tuned for complex tasks of FR. Also, our results pointed out that task complexity and classification head design, multi-class (FR) vs. binary (MAD and PAD), correlate with the degree of catastrophic forgetting. The FRoundation model with the ViT-L backbone outperforms other approaches on the large-scale FR benchmark IJB-C, achieving an improvement of up to 58.52%. However, it experiences a substantial performance drop on ImageNetV2, reaching only 51.63% compared to 69.84% achieved by the baseline CLIP model. Moreover, the larger CLIP architecture consistently preserves more of the model's original generalization ability than the smaller variant, indicating that increased model capacity may help mitigate over-specialization.

Investigating new methods of creating face morphing attacks is essential to

foresee novel attacks and help mitigate them. Creating morphing attacks is

commonly either performed on the image-level or on the representation-level.

The representation-level morphing has been performed so far based on generative

adversarial networks (GAN) where the encoded images are interpolated in the

latent space to produce a morphed image based on the interpolated vector. Such

a process was constrained by the limited reconstruction fidelity of GAN

architectures. Recent advances in the diffusion autoencoder models have

overcome the GAN limitations, leading to high reconstruction fidelity. This

theoretically makes them a perfect candidate to perform representation-level

face morphing. This work investigates using diffusion autoencoders to create

face morphing attacks by comparing them to a wide range of image-level and

representation-level morphs. Our vulnerability analyses on four

state-of-the-art face recognition models have shown that such models are highly

vulnerable to the created attacks, the MorDIFF, especially when compared to

existing representation-level morphs. Detailed detectability analyses are also

performed on the MorDIFF, showing that they are as challenging to detect as

other morphing attacks created on the image- or representation-level. Data and

morphing script are made public: this https URL

The inaugural PAD-IDCard competition at IJCB 2024 established a benchmark for Presentation Attack Detection (PAD) on ID cards, evaluating algorithms in a cross-dataset scenario. The organizers' private-data-trained baseline achieved an AV_rank of 9.10%, substantially outperforming the best competitor's AV_rank of 74.30%, highlighting the critical role of data access and diversity.

Face recognition (FR) systems have a growing effect on critical

decision-making processes. Recent works have shown that FR solutions show

strong performance differences based on the user's demographics. However, to

enable a trustworthy FR technology, it is essential to know the influence of an

extended range of facial attributes on FR beyond demographics. Therefore, in

this work, we analyse FR bias over a wide range of attributes. We investigate

the influence of 47 attributes on the verification performance of two popular

FR models. The experiments were performed on the publicly available MAADFace

attribute database with over 120M high-quality attribute annotations. To

prevent misleading statements about biased performances, we introduced control

group based validity values to decide if unbalanced test data causes the

performance differences. The results demonstrate that also many non-demographic

attributes strongly affect the recognition performance, such as accessories,

hair-styles and colors, face shapes, or facial anomalies. The observations of

this work show the strong need for further advances in making FR system more

robust, explainable, and fair. Moreover, our findings might help to a better

understanding of how FR networks work, to enhance the robustness of these

networks, and to develop more generalized bias-mitigating face recognition

solutions.

The main question this work aims at answering is: "can morphing attack

detection (MAD) solutions be successfully developed based on synthetic data?".

Towards that, this work introduces the first synthetic-based MAD development

dataset, namely the Synthetic Morphing Attack Detection Development dataset

(SMDD). This dataset is utilized successfully to train three MAD backbones

where it proved to lead to high MAD performance, even on completely unknown

attack types. Additionally, an essential aspect of this work is the detailed

legal analyses of the challenges of using and sharing real biometric data,

rendering our proposed SMDD dataset extremely essential. The SMDD dataset,

consisting of 30,000 attack and 50,000 bona fide samples, is publicly available

for research purposes.

Over the past years, the main research innovations in face recognition focused on training deep neural networks on large-scale identity-labeled datasets using variations of multi-class classification losses. However, many of these datasets are retreated by their creators due to increased privacy and ethical concerns. Very recently, privacy-friendly synthetic data has been proposed as an alternative to privacy-sensitive authentic data to comply with privacy regulations and to ensure the continuity of face recognition research. In this paper, we propose an unsupervised face recognition model based on unlabeled synthetic data (USynthFace). Our proposed USynthFace learns to maximize the similarity between two augmented images of the same synthetic instance. We enable this by a large set of geometric and color transformations in addition to GAN-based augmentation that contributes to the USynthFace model training. We also conduct numerous empirical studies on different components of our USynthFace. With the proposed set of augmentation operations, we proved the effectiveness of our USynthFace in achieving relatively high recognition accuracies using unlabeled synthetic data.

This paper presents a summary of the Competition on Face Morphing Attack

Detection Based on Privacy-aware Synthetic Training Data (SYN-MAD) held at the

2022 International Joint Conference on Biometrics (IJCB 2022). The competition

attracted a total of 12 participating teams, both from academia and industry

and present in 11 different countries. In the end, seven valid submissions were

submitted by the participating teams and evaluated by the organizers. The

competition was held to present and attract solutions that deal with detecting

face morphing attacks while protecting people's privacy for ethical and legal

reasons. To ensure this, the training data was limited to synthetic data

provided by the organizers. The submitted solutions presented innovations that

led to outperforming the considered baseline in many experimental settings. The

evaluation benchmark is now available at:

this https URL

14 Aug 2025

We explore the potential application of quantum annealing to address the protein structure problem. To this end, we compare several proposed ab initio protein folding models for quantum computers and analyze their scaling and performance for classical and quantum heuristics. Furthermore, we introduce a novel encoding of coordinate based models on the tetrahedral lattice, based on interleaved grids. Our findings reveal significant variations in model performance, with one model yielding unphysical configurations within the feasible solution space. Furthermore, we conclude that current quantum annealing hardware is not yet suited for tackling problems beyond a proof-of-concept size, primarily due to challenges in the embedding. Nonetheless, we observe a scaling advantage over our in-house simulated annealing implementation, which, however, is only noticeable when comparing performance on the embedded problems.

ElasticFace introduces a novel elastic margin loss for deep face recognition that samples margin values from a Gaussian distribution, moving beyond fixed margins. This approach achieves state-of-the-art performance on seven out of nine mainstream benchmarks by better accommodating inconsistent intra- and inter-class variations in face data.

Recent deep face recognition models proposed in the literature utilized large-scale public datasets such as MS-Celeb-1M and VGGFace2 for training very deep neural networks, achieving state-of-the-art performance on mainstream benchmarks. Recently, many of these datasets, e.g., MS-Celeb-1M and VGGFace2, are retracted due to credible privacy and ethical concerns. This motivates this work to propose and investigate the feasibility of using a privacy-friendly synthetically generated face dataset to train face recognition models. Towards this end, we utilize a class-conditional generative adversarial network to generate class-labeled synthetic face images, namely SFace. To address the privacy aspect of using such data to train a face recognition model, we provide extensive evaluation experiments on the identity relation between the synthetic dataset and the original authentic dataset used to train the generative model. Our reported evaluation proved that associating an identity of the authentic dataset to one with the same class label in the synthetic dataset is hardly possible. We also propose to train face recognition on our privacy-friendly dataset, SFace, using three different learning strategies, multi-class classification, label-free knowledge transfer, and combined learning of multi-class classification and knowledge transfer. The reported evaluation results on five authentic face benchmarks demonstrated that the privacy-friendly synthetic dataset has high potential to be used for training face recognition models, achieving, for example, a verification accuracy of 91.87\% on LFW using multi-class classification and 99.13\% using the combined learning strategy.

Morphing attacks keep threatening biometric systems, especially face

recognition systems. Over time they have become simpler to perform and more

realistic, as such, the usage of deep learning systems to detect these attacks

has grown. At the same time, there is a constant concern regarding the lack of

interpretability of deep learning models. Balancing performance and

interpretability has been a difficult task for scientists. However, by

leveraging domain information and proving some constraints, we have been able

to develop IDistill, an interpretable method with state-of-the-art performance

that provides information on both the identity separation on morph samples and

their contribution to the final prediction. The domain information is learnt by

an autoencoder and distilled to a classifier system in order to teach it to

separate identity information. When compared to other methods in the literature

it outperforms them in three out of five databases and is competitive in the

remaining.

Iris Presentation Attack Detection (PAD) is essential to secure iris recognition systems. Recent iris PAD solutions achieved good performance by leveraging deep learning techniques. However, most results were reported under intra-database scenarios and it is unclear if such solutions can generalize well across databases and capture spectra. These PAD methods run the risk of overfitting because of the binary label supervision during the network training, which serves global information learning but weakens the capture of local discriminative features. This chapter presents a novel attention-based deep pixel-wise binary supervision (A-PBS) method. A-PBS utilizes pixel-wise supervision to capture the fine-grained pixel/patch-level cues and attention mechanism to guide the network to automatically find regions where most contribute to an accurate PAD decision. Extensive experiments are performed on six NIR and one visible-light iris databases to show the effectiveness and robustness of proposed A-PBS methods. We additionally conduct extensive experiments under intra-/cross-database and intra-/cross-spectrum for detailed analysis. The results of our experiments indicates the generalizability of the A-PBS iris PAD approach.

The availability of large-scale authentic face databases has been crucial to the significant advances made in face recognition research over the past decade. However, legal and ethical concerns led to the recent retraction of many of these databases by their creators, raising questions about the continuity of future face recognition research without one of its key resources. Synthetic datasets have emerged as a promising alternative to privacy-sensitive authentic data for face recognition development. However, recent synthetic datasets that are used to train face recognition models suffer either from limitations in intra-class diversity or cross-class (identity) discrimination, leading to less optimal accuracies, far away from the accuracies achieved by models trained on authentic data. This paper targets this issue by proposing IDiff-Face, a novel approach based on conditional latent diffusion models for synthetic identity generation with realistic identity variations for face recognition training. Through extensive evaluations, our proposed synthetic-based face recognition approach pushed the limits of state-of-the-art performances, achieving, for example, 98.00% accuracy on the Labeled Faces in the Wild (LFW) benchmark, far ahead from the recent synthetic-based face recognition solutions with 95.40% and bridging the gap to authentic-based face recognition with 99.82% accuracy.

Researchers from Fraunhofer IGD and TU Darmstadt developed SynthASpoof, the first large-scale, privacy-friendly synthetic dataset for face presentation attack detection (PAD). Models trained using SynthASpoof, particularly when combined with domain generalization techniques, achieved generalizability and performance competitive with or better than models trained on authentic datasets.

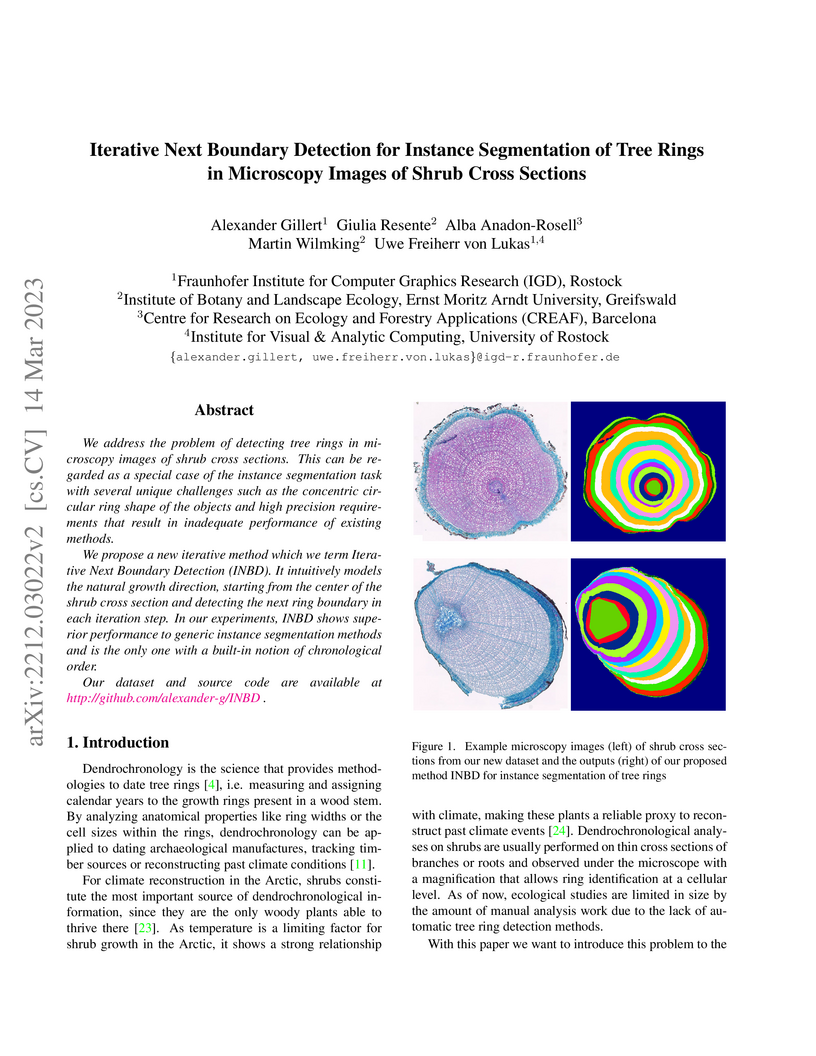

Fraunhofer Institute for Computer Graphics Research IGDFraunhofer Institute for Computer Graphics ResearchInstitute of Botany and Landscape Ecology, Ernst Moritz Arndt University, GreifswaldCentre for Research on Ecology and Forestry Applications (CREAF), BarcelonaInstitute for Visual & Analytic Computing, University of Rostock

We address the problem of detecting tree rings in microscopy images of shrub cross sections. This can be regarded as a special case of the instance segmentation task with several unique challenges such as the concentric circular ring shape of the objects and high precision requirements that result in inadequate performance of existing methods. We propose a new iterative method which we term Iterative Next Boundary Detection (INBD). It intuitively models the natural growth direction, starting from the center of the shrub cross section and detecting the next ring boundary in each iteration step. In our experiments, INBD shows superior performance to generic instance segmentation methods and is the only one with a built-in notion of chronological order. Our dataset and source code are available at this http URL.

There are no more papers matching your filters at the moment.