Friedrich-Schiller-University Jena

CNRS; Freie Universität Berlin; University of Oxford; TU Dortmund University; German Research Center for Artificial Intelligence (DFKI); University of Innsbruck; Collège de France; Max Planck Institute for the Science of Light; Friedrich-Alexander-Universität Erlangen-Nürnberg; Institut Polytechnique de Paris; University of Latvia; University of Turku; Saarland University; Fondazione Bruno Kessler; TU Wien; Chalmers University of Technology; Forschungszentrum Jülich; University of Regensburg; University of Florence; University of Augsburg; University of Gothenburg; Leiden Institute of Physics; Donostia International Physics Center; Johannes Kepler University Linz; Fraunhofer Heinrich-Hertz-Institute; SAP SE; Friedrich-Schiller-University Jena; European Centre for Theoretical Studies in Nuclear Physics and Related Areas (ECT*); EPITA Research Lab; Leiden Institute of Advanced Computer Science; ÖAW; Vienna Center for Quantum Science and Technology; Atominstitut; University of Applied Sciences Zittau/Görlitz; IQOQI Vienna; Fraunhofer IOSB-AST; Université PSL; Inria Paris–Saclay; Université Paris Diderot; École Polytechnique; University of Naples “Federico II”; INFN Sezione di Firenze

A collaborative white paper coordinated by the Quantum Community Network comprehensively analyzes the current status and future perspectives of Quantum Artificial Intelligence, categorizing its potential into "Quantum for AI" and "AI for Quantum" applications. It proposes a strategic research and development agenda to bolster Europe's competitive position in this rapidly converging technological domain.

A study introduces "ChemBench," a comprehensive framework designed to systematically evaluate Large Language Models' (LLMs) chemical knowledge and reasoning against human expert performance. The top-performing LLMs ('o1', Claude-3.5 Sonnet, GPT-4o) statistically outperformed the best human chemist on average within this benchmark, yet they critically struggled with chemical intuition, complex structural reasoning, and accurate self-assessment of their confidence.

Researchers developed a semi-automatic pipeline for ontology and knowledge graph construction, utilizing open-source Large Language Models to reduce human effort and time. The pipeline successfully generated the DLProv Ontology with 45 classes and 41 relationships, demonstrating its utility in documenting deep learning provenance in biodiversity research.

Researchers at Friedrich Schiller University Jena introduce CausalRivers, a large-scale benchmark dataset of real-world river discharge time-series data with known causal relationships, enabling comprehensive evaluation of causal discovery algorithms across 1,160 measurement stations while incorporating complex temporal dynamics and environmental factors.

Recent advancements in artificial intelligence have sparked interest in scientific assistants that could support researchers across the full spectrum of scientific workflows, from literature review to experimental design and data analysis. A key capability for such systems is the ability to process and reason about scientific information in both visual and textual forms, from interpreting spectroscopic data to understanding laboratory setups. Here, we introduce MaCBench, a comprehensive benchmark for evaluating how vision-language models handle real-world chemistry and materials science tasks across three core aspects: data extraction, experimental understanding, and results interpretation. Through a systematic evaluation of leading models, we find that while these systems show promising capabilities in basic perception tasks, achieving near-perfect performance in equipment identification and standardized data extraction, they exhibit fundamental limitations in spatial reasoning, cross-modal information synthesis, and multi-step logical inference. Our insights have important implications beyond chemistry and materials science, suggesting that developing reliable multimodal AI scientific assistants may require advances in curating suitable training data and approaches to training those models.

University of Toronto; University of Alberta; Tufts University; Aalto University; National Institute of Standards and Technology; University of Johannesburg; Czech Institute of Informatics, Robotics and Cybernetics, Czech Technical University; University of Antwerp; Friedrich-Schiller-University Jena; Wageningen University & Research; Institute of Organic Chemistry and Biochemistry of the Czech Academy of Sciences; Alberta Machine Intelligence Institute; University of Applied Sciences, Düsseldorf; Eawag: Swiss Federal Institute of Aquatic Science and Technology; Bright Giant GmbH

The discovery and identification of molecules in biological and environmental samples is crucial for advancing biomedical and chemical sciences. Tandem mass spectrometry (MS/MS) is the leading technique for high-throughput elucidation of molecular structures. However, decoding a molecular structure from its mass spectrum is exceptionally challenging, even when performed by human experts. As a result, the vast majority of acquired MS/MS spectra remain uninterpreted, thereby limiting our understanding of the underlying (bio)chemical processes. Despite decades of progress in machine learning applications for predicting molecular structures from MS/MS spectra, the development of new methods is severely hindered by the lack of standard datasets and evaluation protocols. To address this problem, we propose MassSpecGym, the first comprehensive benchmark for the discovery and identification of molecules from MS/MS data. Our benchmark comprises the largest publicly available collection of high-quality labeled MS/MS spectra and defines three MS/MS annotation challenges: de novo molecular structure generation, molecule retrieval, and spectrum simulation. It includes new evaluation metrics and a generalization-demanding data split, thereby standardizing the MS/MS annotation tasks and rendering the problem accessible to the broad machine learning community. MassSpecGym is publicly available at this https URL

Optical microscopy is one of the most widely used techniques in research studies for life sciences and biomedicine. These applications require reliable experimental pipelines to extract valuable knowledge from the measured samples and must be supported by image quality assessment (IQA) to ensure correct processing and analysis of the image data. IQA methods vary in complexity. Although most quality metrics are straightforward to implement, they can be time-consuming and computationally expensive when evaluating a large dataset. In addition, quality metrics are often designed for well-defined image features and may be unstable for images outside the ideal domain.

To overcome these limitations, recent works have proposed deep learning-based IQA methods, which can provide superior performance, increased generalizability and fast prediction. Our method, named μDeepIQA, is inspired by previous studies and applies a deep convolutional neural network designed for IQA on natural images to optical microscopy measurements. We retrained the same architecture to predict individual quality metrics and global quality scores for optical microscopy data. The resulting models provide fast and stable predictions of image quality by generalizing quality estimation even outside the ideal range of standard methods. In addition, μDeepIQA provides patch-wise prediction of image quality and can be used to visualize spatially varying quality in a single image. Our study demonstrates that optical microscopy-based studies can benefit from the generalizability of deep learning models due to their stable performance in the presence of outliers, the ability to assess small image patches, and rapid predictions.
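The patch-wise idea described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `patch_scores` is hypothetical, and the per-patch score used here (population variance of pixel values) is a simple stand-in for a quality predictor, not the μDeepIQA network or any metric named in the abstract.

```python
from statistics import pvariance


def patch_scores(image, patch, score=pvariance):
    """Score non-overlapping square patches of a 2D image (list of rows).

    `score` is a placeholder quality function applied to the flattened
    pixel list of each patch; a trained model could be substituted here.
    """
    rows, cols = len(image), len(image[0])
    scores = []
    for r in range(0, rows - patch + 1, patch):
        score_row = []
        for c in range(0, cols - patch + 1, patch):
            pixels = [image[i][j]
                      for i in range(r, r + patch)
                      for j in range(c, c + patch)]
            score_row.append(score(pixels))
        scores.append(score_row)
    return scores


# Toy 4x4 image: flat on the left, textured on the right.
toy_image = [
    [0, 0, 0, 1],
    [0, 0, 1, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 0],
]
quality_map = patch_scores(toy_image, patch=2)
print(quality_map)
```

The resulting grid has one entry per patch, so it can be displayed as a coarse map of spatially varying quality within a single image.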

15 Sep 2025

We study constrained bi-matrix games, with a particular focus on low-rank games. Our main contribution is a framework that reduces low-rank games to smaller, equivalent constrained games, along with a necessary and sufficient condition for when such reductions exist. Building on this framework, we present three approaches for computing the set of extremal Nash equilibria, based on vertex enumeration, polyhedral calculus, and vector linear programming. Numerical case studies demonstrate the effectiveness of the proposed reduction and solution methods.
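As background for the terms used above, the following minimal sketch checks whether a pair of mixed strategies is a Nash equilibrium of a bi-matrix game and computes a game's rank. It assumes the common convention that the rank of a game with payoff matrices A and B is the rank of A + B; the abstract does not state its definition, and the helper names (`is_nash`, `game_rank`) are hypothetical. It does not implement the paper's reduction or its equilibrium enumeration methods.

```python
from fractions import Fraction


def expected_payoff(M, x, y):
    """Expected payoff x^T M y for mixed strategies x (rows) and y (columns)."""
    return sum(x[i] * M[i][j] * y[j]
               for i in range(len(x)) for j in range(len(y)))


def is_nash(A, B, x, y, tol=1e-9):
    """True if neither player gains by deviating to any pure strategy."""
    best_row = max(sum(A[i][j] * y[j] for j in range(len(y)))
                   for i in range(len(x)))
    best_col = max(sum(x[i] * B[i][j] for i in range(len(x)))
                   for j in range(len(y)))
    return (best_row <= expected_payoff(A, x, y) + tol
            and best_col <= expected_payoff(B, x, y) + tol)


def matrix_rank(M):
    """Exact rank via Gaussian elimination over the rationals."""
    rows = [[Fraction(v) for v in row] for row in M]
    rank = 0
    for c in range(len(rows[0])):
        pivot = next((r for r in range(rank, len(rows)) if rows[r][c] != 0), None)
        if pivot is None:
            continue
        rows[rank], rows[pivot] = rows[pivot], rows[rank]
        for r in range(len(rows)):
            if r != rank and rows[r][c] != 0:
                factor = rows[r][c] / rows[rank][c]
                rows[r] = [a - factor * b for a, b in zip(rows[r], rows[rank])]
        rank += 1
    return rank


def game_rank(A, B):
    """Rank of A + B (assumed definition of the rank of a bi-matrix game)."""
    total = [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]
    return matrix_rank(total)


# Matching pennies: zero-sum, so A + B is the zero matrix.
A = [[1, -1], [-1, 1]]
B = [[-1, 1], [1, -1]]
print(is_nash(A, B, [0.5, 0.5], [0.5, 0.5]), game_rank(A, B))
```

Under this convention zero-sum games are exactly the rank-0 games, which is why low rank is a natural measure of how far a game is from the zero-sum case.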

07 Oct 2025

We present a universal deep-learning method that reconstructs super-resolved images of quantum emitters from a single camera frame measurement. Trained on physics-based synthetic data spanning diverse point-spread functions, aberrations, and noise, the network generalizes across experimental conditions without system-specific retraining. We validate the approach on low- and high-density In(Ga)As quantum dots and strain-induced dots in 2D monolayer WSe2, resolving overlapping emitters even under low signal-to-noise and inhomogeneous backgrounds. By eliminating calibration and iterative acquisitions, this single-shot strategy enables rapid, robust super-resolution for nanoscale characterization and quantum photonic device fabrication.

02 Dec 2024

This research enhances the NeOn-GPT pipeline to improve the generation of ontologies by Large Language Models (LLMs) within complex life science domains, specifically using the AquaDiva ontology as a case study. The work demonstrates that strategic prompt engineering and targeted ontology reuse significantly increase the structural depth and semantic accuracy of LLM-generated ontologies, with matched concepts showing over 0.85 similarity to gold standard knowledge bases.

We propose the Distance-informed Neural Process (DNP), a novel variant of Neural Processes that improves uncertainty estimation by combining global and distance-aware local latent structures. Standard Neural Processes (NPs) often rely on a global latent variable and struggle with uncertainty calibration and capturing local data dependencies. DNP addresses these limitations by introducing a global latent variable to model task-level variations and a local latent variable to capture input similarity within a distance-preserving latent space. This is achieved through bi-Lipschitz regularization, which bounds distortions in input relationships and encourages the preservation of relative distances in the latent space. This modeling approach allows DNP to produce better-calibrated uncertainty estimates and more effectively distinguish in- from out-of-distribution data. Empirical results demonstrate that DNP achieves strong predictive performance and improved uncertainty calibration across regression and classification tasks.
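For reference, the standard bi-Lipschitz condition that such a regularizer encourages can be written as follows. The symbols are assumptions introduced here for illustration: $f$ denotes the map from inputs to the latent space, and $L_1$, $L_2$ are the lower and upper distortion constants; the abstract does not give the exact form of the regularization term.

$$
L_1 \,\lVert x - x' \rVert \;\le\; \lVert f(x) - f(x') \rVert \;\le\; L_2 \,\lVert x - x' \rVert, \qquad 0 < L_1 \le L_2 .
$$

The upper bound prevents nearby inputs from being pushed far apart, and the lower bound prevents distinct inputs from collapsing onto the same latent point, so relative distances are preserved up to the factor $L_2 / L_1$.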

02 Sep 2025

Quantum teleportation and entanglement swapping are fundamental building blocks for realizing global quantum networks. Both protocols have been extensively studied and experimentally demonstrated using two-dimensional qubit systems. Their implementation in high-dimensional (HD) systems offers significant advantages, such as enhanced noise resilience, greater robustness against eavesdropping, and increased information capacity. Generally, HD photonic entanglement swapping requires ancilla photons or strong nonlinear interactions. However, specific experimental implementations that are feasible with current technology remain elusive. Here, we present a linear optics-based setup for HD entanglement swapping for up to six dimensions using ancillary photons. This scheme is applicable to any photonic degrees of freedom. Furthermore, we present an experimental design for a four-dimensional implementation using hyper-entanglement, which includes the necessary preparation of the ancilla state and analysis of the resulting swapped state.

Physics-Informed Neural Networks (PINNs) have shown increasing promise in approximating solutions of partial differential equations (PDEs), although they remain constrained by the curse of dimensionality. In this paper, we propose a generalized PINN version of the classical separation-of-variables method. To do this, we first show that, using the universal approximation theorem, a multivariate function can be approximated by the outer product of neural networks whose inputs are separated variables. We leverage tensor decomposition forms to separate the variables in a PINN setting. By employing Canonical Polyadic (CP), Tensor-Train (TT), and Tucker decomposition forms within the PINN framework, we create robust architectures for learning multivariate functions from separate neural networks connected by outer products. Our methodology significantly enhances the performance of PINNs, as evidenced by improved results on complex high-dimensional PDEs, including the 3D Helmholtz and 5D Poisson equations, among others. This research underscores the potential of tensor decomposition-based variable-separated PINNs to surpass the state of the art, offering a compelling solution to the dimensionality challenge in PDE approximation.
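The CP form of variable separation can be illustrated with a small sketch. Here the per-variable "networks" are replaced by fixed hand-written functions (an assumption made purely for illustration), and the function name `cp_eval` is hypothetical. The example uses the identity sin(x + y) = sin x cos y + cos x sin y, which is an exact rank-2 CP representation of a two-variable function.

```python
import math


def cp_eval(factors, point):
    """Evaluate a CP-form separated function at one point.

    factors[d] maps the d-th coordinate to a list of R component values;
    the result is sum over r of the product over d of factors[d](x_d)[r].
    """
    columns = [f(x) for f, x in zip(factors, point)]
    rank = len(columns[0])
    return sum(math.prod(col[r] for col in columns) for r in range(rank))


# Stand-ins for the per-axis networks (rank R = 2).
def factor_x(x):
    return [math.sin(x), math.cos(x)]


def factor_y(y):
    return [math.cos(y), math.sin(y)]


value = cp_eval([factor_x, factor_y], (0.3, 1.1))
print(value, math.sin(0.3 + 1.1))
```

Because each factor depends on a single coordinate, evaluating on a grid with N points per axis in d dimensions needs only d·N factor evaluations, which are then combined by outer products, rather than N^d evaluations of a monolithic network.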

10 Oct 2025

Heinrich Heine Universität Düsseldorf; Friedrich-Schiller-University Jena; GSI Helmholtzzentrum für Schwerionenforschung GmbH; Goethe Universität Frankfurt am Main; Fraunhofer Institute for Applied Optics and Precision Engineering IOF; University of Applied Sciences; Helmholtz Institute Jena; Institute of Applied Physics, RAS; Abbe Center of Photonics

Long-living, hot and dense plasmas generated by ultra-intense laser beams are of critical importance for laser-driven nuclear physics, bright hard X-ray sources, and laboratory astrophysics. We report the experimental observation of plasmas with nanosecond-scale lifetimes, near-solid density, and keV-level temperatures, produced by irradiating periodic arrays of composite nanowires with ultra-high contrast, relativistically intense femtosecond laser pulses. Jet-like plasma structures extending up to 1 mm from the nanowire surface were observed, emitting K-shell radiation from He-like Ti$^{20+}$ ions. High-resolution X-ray spectra were analyzed using 3D Particle-in-Cell (PIC) simulations of the laser-plasma interaction combined with collisional-radiative modeling (FLYCHK). The results indicate that the jets consist of plasma with densities of $10^{20}$ to $10^{22}$ cm$^{-3}$ and keV-scale temperatures, persisting for several nanoseconds. We attribute the formation of these jets to the generation of kiloTesla-scale global magnetic fields during the laser interaction, as predicted by PIC simulations. These fields may drive long-timescale current instabilities that sustain magnetic fields of several hundred tesla, sufficient to confine hot, dense plasma over nanosecond durations.

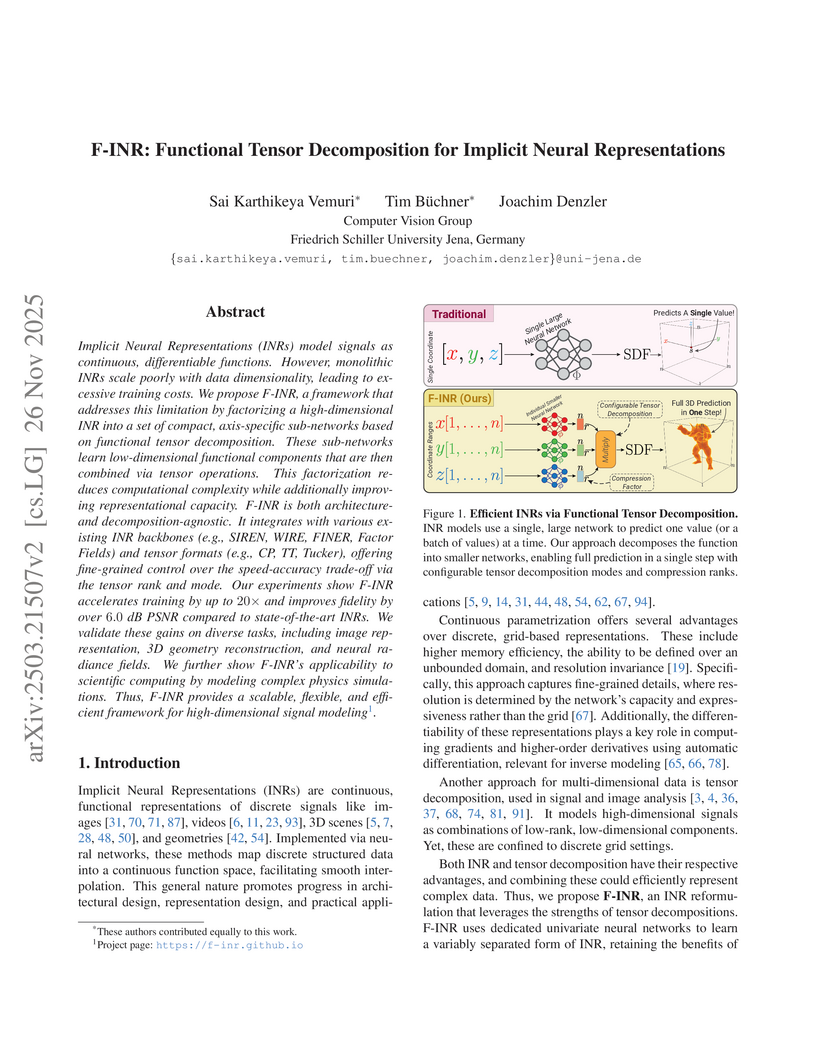

Implicit Neural Representations (INRs) model signals as continuous, differentiable functions. However, monolithic INRs scale poorly with data dimensionality, leading to excessive training costs. We propose F-INR, a framework that addresses this limitation by factorizing a high-dimensional INR into a set of compact, axis-specific sub-networks based on functional tensor decomposition. These sub-networks learn low-dimensional functional components that are then combined via tensor operations. This factorization reduces computational complexity while also improving representational capacity. F-INR is both architecture- and decomposition-agnostic. It integrates with various existing INR backbones (e.g., SIREN, WIRE, FINER, Factor Fields) and tensor formats (e.g., CP, TT, Tucker), offering fine-grained control over the speed-accuracy trade-off via the tensor rank and mode. Our experiments show F-INR accelerates training by up to 20× and improves fidelity by over 6.0 dB PSNR compared to state-of-the-art INRs. We validate these gains on diverse tasks, including image representation, 3D geometry reconstruction, and neural radiance fields. We further show F-INR's applicability to scientific computing by modeling complex physics simulations. Thus, F-INR provides a scalable, flexible, and efficient framework for high-dimensional signal modeling. Project page: this https URL
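To put the reported PSNR gain in perspective, the standard definition of PSNR can be used to convert a difference in decibels into a ratio of mean squared errors. The subscripts "base" and "new" are labels introduced here for illustration, and the peak value MAX is assumed to be the same for both methods.

$$
\mathrm{PSNR} = 10 \log_{10} \frac{\mathrm{MAX}^2}{\mathrm{MSE}},
\qquad
\Delta\mathrm{PSNR} = 10 \log_{10} \frac{\mathrm{MSE}_{\text{base}}}{\mathrm{MSE}_{\text{new}}} = 6.0\ \mathrm{dB}
\;\Longrightarrow\;
\frac{\mathrm{MSE}_{\text{base}}}{\mathrm{MSE}_{\text{new}}} = 10^{0.6} \approx 3.98 .
$$

A 6.0 dB improvement therefore corresponds to roughly a fourfold reduction in mean squared error.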

12 Sep 2025

There is a long-standing discussion in the astrophysical/astrochemical community as to the structure and morphology of dust grains in various astrophysical environments (e.g., interstellar clouds, protostellar envelopes, protoplanetary and debris disks, and the atmospheres of exoplanets). Typical grain models assume a compact dust core which becomes covered in a thick ice mantle in cold dense environments. In contrast, less compact cores are likely to exhibit porosity, leading to a pronounced increase in surface area with concomitant much thinner ice films and higher accessibility to the bare grain surface. Several laboratory experimental and theoretical studies have shown that this type of dust structure can have a marked effect on several physico-chemical processes, including adsorption, desorption, mobility, and reactivity of chemical species. Porous grains are thus thought to likely play a particularly important and wide-ranging astrochemical role. Herein, we clarify what is meant by porosity in relation to grains and grain agglomerates, assess the likely astrochemical effects of porosity and ask whether a fractal/porous structural/morphological description of dust grains is appropriate from an astronomical perspective. We provide evidence for high porosity from laboratory experiments and computational simulations of grains and their growth in various astrophysical environments. Finally, we assess the observational constraints and perspectives on cosmic dust porosity. Overall, our paper discusses the effects of including porosity in dust models and the need to use such models for future astrophysical, astrochemical and astrobiological studies involving surface or solid-state processes.

Nanophotonics has recently gained new momentum with the emergence of a novel class of nanophotonic systems consisting of resonant dielectric nanostructures integrated with single or few layers of transition metal dichalcogenides (2D-TMDs). Thinned to the single-layer phase, 2D-TMDs are unique solid-state systems with excitonic states able to persist at room temperature and demonstrate notable tunability of their energies in the optical range. Based on these properties, they offer important opportunities for hybrid nanophotonic systems where a nanophotonic structure serves to enhance the light-matter interaction in the 2D-TMDs, while the 2D-TMDs can provide various active functionalities, thereby dramatically enhancing the scope of nanophotonic structures. In this work, we combine 2D-TMD materials with resonant photonic nanostructures, namely, metasurfaces composed of high-index dielectric nanoparticles. The dependence of the excitonic states on charge carrier density in 2D-TMDs leads to an amplitude modulation of the corresponding optical transitions upon changes of the Fermi level, and thereby to changes of the coupling strength between the 2D-TMDs and resonant modes of the photonic nanostructure. We experimentally implement such a hybrid nanophotonic system and demonstrate voltage tuning of its reflectance as well as its different polarization-dependent behavior. Our results show that hybridization with 2D-TMDs can serve to render resonant photonic nanostructures tunable and time-variant, which are important properties for practical applications in optical analog computers and neuromorphic circuits.

Selective control over the emission pattern of valley-polarized excitons in monolayer transition metal dichalcogenides is crucial for developing novel valleytronic, quantum information, and optoelectronic devices. While significant progress has been made in directionally routing photoluminescence from these materials, key challenges remain: notably, how to link routing effects to the degree of valley polarization, and how to distinguish genuine valley-dependent routing from spin-momentum coupling, an optical phenomenon related to electromagnetic scattering but not the light source itself. In this study, we address these challenges by experimentally and numerically establishing a direct relationship between the intrinsic valley polarization of the emitters and the far-field emission pattern, enabling an accurate assessment of valley-selective emission routing. We report valley-selective manipulation of the angular emission pattern of monolayer tungsten diselenide mediated by gold nanobar dimer antennas at cryogenic temperature. Experimentally, we study changes in the system's emission pattern for different circular polarization states of the excitation, demonstrating a valley-selective circular dichroism in photoluminescence of 6%. These experimental findings are supported by a novel numerical approach based on the principle of reciprocity, which allows modeling valley-selective emission in periodic systems. We further show numerically that these valley-selective directional effects are a symmetry-protected property of the nanoantenna array owing to its extrinsic chirality for oblique emission angles, and can be significantly enhanced by tailoring the distribution of emitters. This renders our nanoantenna-based system a robust platform for valleytronic processing.

The vast majority of materials science knowledge exists in unstructured natural language, yet structured data is crucial for innovative and systematic materials design. Traditionally, the field has relied on manual curation and partial automation for data extraction for specific use cases. The advent of large language models (LLMs) represents a significant shift, potentially enabling efficient extraction of structured, actionable data from unstructured text by non-experts. While applying LLMs to materials science data extraction presents unique challenges, domain knowledge offers opportunities to guide and validate LLM outputs. This review provides a comprehensive overview of LLM-based structured data extraction in materials science, synthesizing current knowledge and outlining future directions. We address the lack of standardized guidelines and present frameworks for leveraging the synergy between LLMs and materials science expertise. This work serves as a foundational resource for researchers aiming to harness LLMs for data-driven materials research. The insights presented here could significantly enhance how researchers across disciplines access and utilize scientific information, potentially accelerating the development of novel materials for critical societal needs.

Monolayers of transition metal dichalcogenides show strong second-order nonlinearity and symmetry-driven selection rules arising from their three-fold lattice symmetry. This behavior resembles the valley-contrasting selection rules for photoluminescence in these materials. However, the underlying physical mechanisms fundamentally differ since second harmonic generation is a coherent process, whereas photoluminescence is incoherent, leading to distinct interactions with photonic nanoresonators. In this study, we investigate the far-field circular polarization properties of second harmonic generation from MoS2 monolayers resonantly interacting with spherical gold nanoparticles. Our results indicate that the coherence of the second harmonic allows its polarization to be mostly preserved, unlike in an incoherent process, where the polarization is scrambled. These findings provide important insights for future applications in valleytronics and quantum nanooptics, where both coherent and incoherent processes can be probed in such hybrid systems without altering sample geometry or operational wavelength.
